The Will Smith AI-Generated Concert Crowd Controversy Explained

The whole idea of a Will Smith AI-generated concert crowd became an internet sensation after the actor posted a promo video that, well, looked a little off. What was supposed to be a standard hype video for his tour accidentally turned into a huge, viral discussion about fake media and what we can trust online.

So, What Exactly Happened?

When Will Smith kicked off his 'Based On A True Story' tour, the promotional team dropped a video meant to show off the incredible energy of his live shows. The plan was simple: fill the screen with a massive, cheering audience to get people excited and sell tickets. Instead, it sparked a major controversy.

The video, which was supposed to show packed arenas with thrilled fans, was filled with bizarre visual mistakes. It didn't take long for people with a sharp eye to start pointing out the classic, creepy giveaways of AI generation. Once you saw them, you couldn't unsee them.

A Masterclass in the Uncanny Valley

Instead of a crowd of individual people, the video was populated with figures who had distorted, strange features. Some faces looked like they were melting into each other, while others had that weird, plastic-like smoothness that AI often produces. It was a perfect example of AI struggling to get the little details right.

Here are a few of the visual glitches people caught:

- Weird Anatomy: Viewers noticed people with too many fingers, limbs at odd angles, and bodies that just sort of blended into the background in a way that made no sense.

- Off Lighting: The way the light hit the "fans" didn't match the bright lights coming from the stage. This created a jarring effect that made the whole scene feel disconnected and fake.

- Copy-Paste Patterns: You could see the same faces or shirts popping up over and over again in the crowd, shattering the illusion of a diverse audience.

These glitches sent the footage straight into the "uncanny valley"—that creepy feeling you get when something looks almost human, but small flaws make it feel deeply unsettling.


The Backlash Was Immediate

The internet’s reaction was fast and brutal. Right after Smith posted the video in September 2025, social media was on fire with criticism, jokes, and serious debate about digital authenticity.

The comments section was a goldmine. One person snarked, "Will Smith not only just melted his fans' hearts with his concert, but he also melted their entire bodies." Another user was just plain disappointed, writing, "Imagine being this rich and famous and having to use AI footage of crowds... Tragic, man. You used to be cool." You can dive deeper into the initial fan reactions over at GovTech.

This whole episode served as a tough lesson for anyone in marketing: people crave authenticity. The attempt to fake a massive turnout with a Will Smith AI-generated concert crowd didn't just fail—it backfired, damaging trust and turning a simple promo into a PR headache.

How AI Conjures Up a Fake Crowd From Scratch

The technology that brought us the Will Smith AI-generated concert crowd isn't magic, though it certainly looks like it. A better way to think about it is like an artist who has spent a lifetime studying concert photos. This artist has seen millions of faces, every possible lighting setup, and every kind of emotion a crowd can show. Eventually, they learn the patterns so well they can paint a brand-new, completely believable crowd scene straight from their imagination.

That's pretty much how modern AI image and video generators work. They aren't just cutting and pasting bits from other photos. They're building a brand-new image from scratch, shaped by the patterns they've learned.

The Brains Behind the Operation

Two main types of AI models are the engines driving this whole process. The technical nitty-gritty can get pretty deep, but the core concepts are actually quite easy to grasp.

- Generative Adversarial Networks (GANs): Picture two AIs in a creative duel. One, the "Generator," paints a fake crowd. The other, the "Discriminator," is an art critic whose only job is to tell if the painting is real or fake. They play this game millions of times. The Generator gets better and better at fooling the critic, and the critic gets sharper at spotting fakes. After a while, the Generator becomes so good its creations can easily trick a human eye.

- Diffusion Models: This technique is more like a sculptor starting with a solid block of marble. A diffusion model begins with a screen of pure random noise—think old-school TV static. Then, following instructions, it carefully chips away at the noise, step by step, until a coherent, detailed image emerges. This method offers incredible control over the final look and feel.
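For the curious, both ideas can be boiled down to a few lines of Python. This is a toy sketch, not how production models are built: the "images" are short lists of numbers instead of real pixels, the helper names `gan_value`, `add_noise`, and `estimate_clean` are hypothetical, and real systems train neural networks to play the critic and the denoiser. The first function scores the GAN duel; the other two show diffusion's forward step (mixing in static) and the reverse idea (subtracting predicted noise).

```python
import math
import random


def gan_value(d_real, d_fake):
    """Estimate the GAN minimax value V(D, G) from discriminator outputs.

    d_real: probabilities the critic assigns to real photos being real.
    d_fake: probabilities it assigns to generated images being real.
    The critic tries to push this value up; the generator tries to push it down.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def add_noise(x0, t, betas, rng):
    """Forward diffusion: jump to step t by blending the clean signal with Gaussian static."""
    alpha_bar = 1.0
    for beta in betas[:t]:
        alpha_bar *= 1.0 - beta  # share of the original signal that survives t steps
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, eps)]
    return xt, eps, alpha_bar


def estimate_clean(xt, eps_hat, alpha_bar):
    """Reverse idea: given a noise prediction, subtract it to estimate the clean signal."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(xt, eps_hat)]


# A perfectly confused critic (0.5 everywhere) gives V = -log 4.
print(round(gan_value([0.5, 0.5], [0.5, 0.5]), 4))  # -1.3863

rng = random.Random(0)
pixels = [0.2, -0.5, 1.0]  # a toy "image" of three pixel values
noisy, true_noise, ab = add_noise(pixels, t=50, betas=[0.02] * 50, rng=rng)
# With a perfect noise prediction, the clean pixels come back.
print([round(v, 6) for v in estimate_clean(noisy, true_noise, ab)])  # [0.2, -0.5, 1.0]
```

The `-log 4` value is where the duel ends up in theory, when the critic can no longer tell real from fake. In a real diffusion model, the hard part is the noise prediction itself, which is what the network spends its training learning to do.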

At its heart, generative AI is all about recognizing patterns and then recreating them. It digests massive datasets to learn the "rules" of what a concert crowd looks like, then uses those rules to build something entirely new. The creepy glitches we see happen when the AI gets one of those rules wrong.

From Simple Words to a Full-Blown Scene

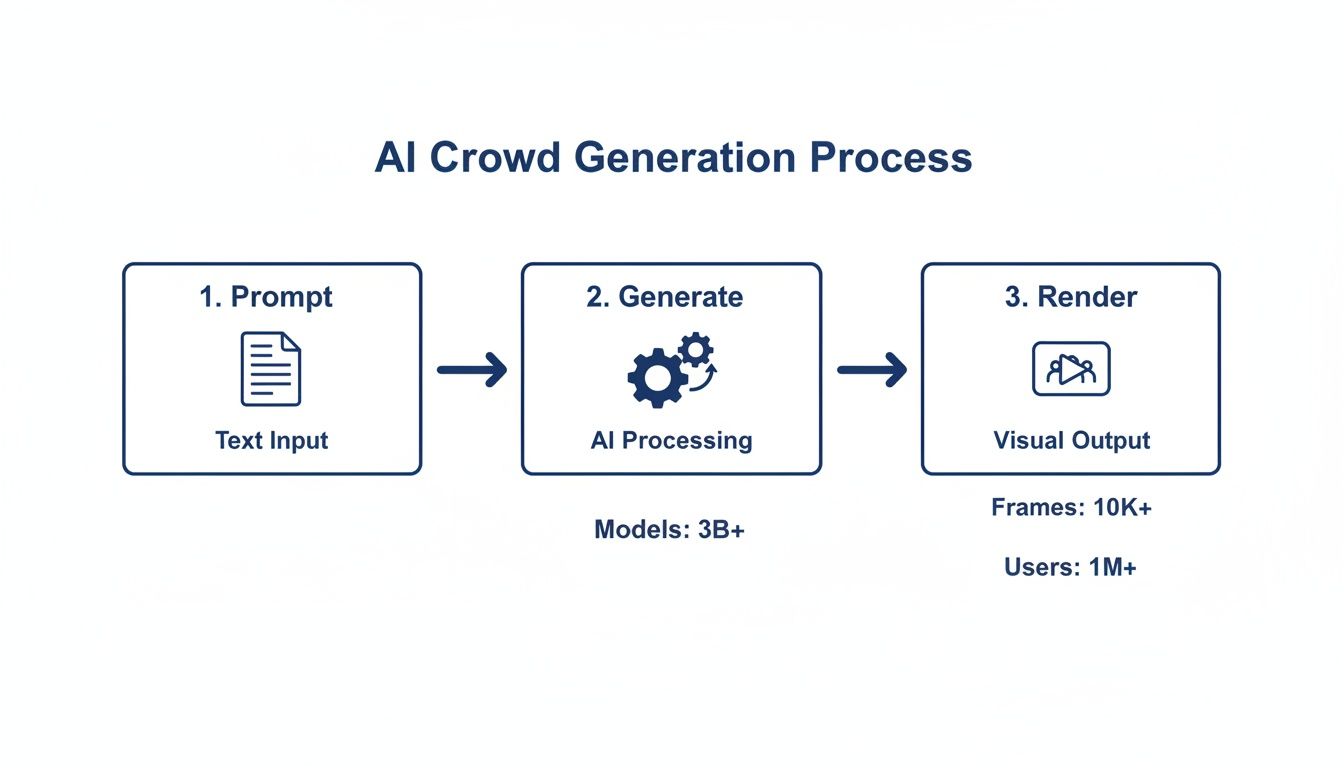

It all starts with a simple text prompt. You don't need to be a programmer or a 3D artist; you just need to describe the scene you have in your head. The workflow usually looks something like this.

- Crafting the Prompt: A user types in a detailed description. Something like, "massive, cheering crowd at a nighttime rock concert, stage lights shining on excited faces, cinematic style." The more detail you feed it, the closer the AI gets to your vision. Getting good at this is a real skill—learning how to use prompt keywords is key to making images that don't look obviously fake.

- Initial Generation: The AI model takes that prompt and generates a still image. It draws on everything it's learned to assemble the right elements—the people, the lights, the overall vibe.

- Animating the Image: Next, specialized tools add motion. The AI might be told to make the crowd sway, cheer, or throw their hands in the air. This is often where things get weird. Those bizarre "melting" effects you see in early AI videos happen because the model struggles to keep everything consistent from one frame to the next.
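The melting effect in the animation step is essentially a consistency failure between frames. A crude way to put a number on it, assuming each frame is available as a flat list of brightness values, is to measure how much every frame differs from the previous one and flag sudden spikes. The function names and the 3× threshold below are hypothetical choices for illustration; real forensic tools track faces and objects, not raw brightness.

```python
import statistics


def frame_difference(a, b):
    """Mean absolute change between two frames given as flat lists of pixel brightness."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def flag_unstable_frames(frames, factor=3.0):
    """Return indices of frames that jump unusually far from the frame before.

    'Unusually far' means more than `factor` times the clip's median jump,
    an illustrative heuristic rather than a standard detector.
    """
    diffs = [frame_difference(frames[i - 1], frames[i]) for i in range(1, len(frames))]
    typical = statistics.median(diffs)
    # diffs[0] compares frames 0 and 1, so numbering starts at 1
    return [i for i, d in enumerate(diffs, start=1) if d > factor * typical]


clip = [[10, 10, 10], [11, 10, 10], [11, 11, 10], [40, 5, 60], [41, 6, 60]]
print(flag_unstable_frames(clip))  # [3]
```

A legitimate hard cut or a strobe light will also trip this check, so a flagged frame is a reason to look closer, not proof of AI.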

The believability of a Will Smith AI-generated concert crowd video really comes down to the quality of the prompts and the power of the AI model. For more great articles and deep dives into video creation and the tech behind it, the vidfarm blog is a fantastic resource.

How to Spot AI-Generated Video Content

Knowing how to tell real video from AI-generated fakes is quickly becoming a crucial skill. The Will Smith AI-generated concert crowd video showed us that even slick, well-made clips can have tiny flaws that reveal their synthetic roots. The good news is you don't need a computer science degree to catch them—you just need to know where to look.

Think of yourself as a digital detective. You're hunting for small details that just don't feel right or elements that seem out of place. The most common slip-ups happen when AI tries to recreate the messy, complex details of real life, like human hands, faces, and busy backgrounds.

Your Checklist for AI Red Flags

If a video gives you an uncanny feeling, hit pause and start zooming in. Even the most advanced AI models tend to make the same kinds of errors. Here are the biggest giveaways.

- Weird Blending: Look closely where different objects meet. AI often botches the fine details of hair, making it look like it's melting into a shirt or floating awkwardly around someone's head.

- Nonsensical Backgrounds: Don't just focus on the main character—scan the environment. Do you see buildings that defy the laws of physics? Signs with jumbled, unreadable text? A classic AI goof is a background that looks normal at first glance but falls apart under scrutiny.

- Wonky Lighting and Shadows: This one is subtle but a dead giveaway. Does the light on a person match the light source in the room? Are the shadows pointing in the right direction? AI often gets this wrong, creating scenes where a person is lit from the right, but their shadow is also on the right.
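The shadow rule can be written as simple geometry. This sketch assumes you have already estimated, by eye or with a tool, a 2D direction toward the light and a 2D direction along the shadow in the image. It ignores perspective and scenes with several light sources, and `shadow_matches_light` plus its 45° tolerance are hypothetical.

```python
import math


def shadow_matches_light(light_dir, shadow_dir, tolerance_deg=45.0):
    """Check that a shadow points roughly away from the light source.

    light_dir: 2D vector from the person toward the light, e.g. (1, 0) = light on the right.
    shadow_dir: 2D vector from the person's feet along their shadow.
    A plausible shadow points opposite the light, within `tolerance_deg`.
    """
    lx, ly = light_dir
    sx, sy = shadow_dir
    cos_angle = (lx * sx + ly * sy) / (math.hypot(lx, ly) * math.hypot(sx, sy))
    # Clamp to [-1, 1] to guard against tiny floating-point overshoots
    angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
    return angle >= 180.0 - tolerance_deg


print(shadow_matches_light((1, 0), (1, 0)))   # False: lit from the right, shadow also on the right
print(shadow_matches_light((1, 0), (-1, 0)))  # True: shadow falls to the left, away from the light
```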

The process for creating these scenes, from the first text prompt to the final output, often follows a predictable path: write a prompt, generate a still image, then animate it.

Mistakes can creep in at any stage of this workflow, resulting in the strange visual glitches we can learn to spot.

Real vs AI-Generated Crowd Characteristics

To make it even clearer, here's a side-by-side comparison of what to look for in a crowd scene.

| Characteristic | Real Crowd Footage | AI-Generated Crowd Footage |
|---|---|---|
| Individuality | Each person is unique: different faces, clothes, reactions. | Often features "clones": repeated faces, outfits, or gestures. |
| Movement | Organic and chaotic. People move independently at different times. | Synchronized or repetitive motions. Everyone might cheer in unison. |
| Facial Details | Natural expressions, skin textures with pores and blemishes. | "Waxy" or overly smooth skin. Eyes can look glassy or unfocused. |
| Interaction | People interact with each other and their environment naturally. | Individuals often seem disconnected, like they're in their own bubble. |

This table serves as a quick reference guide. The more you practice looking for these tells, the faster you'll be able to distinguish authentic footage from a synthetic scene.
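The "Movement" row can also be turned into a rough number. Assuming each person's up-and-down motion has already been tracked frame by frame (the tracking step is not shown), the average correlation between everyone's motion traces measures how much the crowd moves in lockstep. The function names are hypothetical, and a high score is a hint rather than proof, since real fans do sometimes clap or jump on the beat together.

```python
import math
from itertools import combinations


def pearson(a, b):
    """Pearson correlation between two equal-length motion traces."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0  # a motionless trace carries no timing information
    return cov / math.sqrt(va * vb)


def crowd_sync_score(traces):
    """Average pairwise correlation of per-person motion; 1.0 means perfect lockstep."""
    pairs = list(combinations(traces, 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


lockstep = [[0, 1, 0, 1, 0, 1]] * 3  # three "clones" doing the same motion
organic = [[0, 1, 0, 1, 0, 1], [1, 0, 0, 1, 1, 0], [0, 0, 1, 1, 0, 1]]
print(round(crowd_sync_score(lockstep), 2))  # 1.0
print(round(crowd_sync_score(organic), 2))   # -0.11
```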

Spotting Inhuman Details

Beyond the general scene, the "people" themselves are often the biggest tell. AI still has a hard time capturing the beautiful, random imperfections of being human, which can lead to an eerie uniformity.

One of the easiest signs to spot in an AI crowd is the "clone" effect. Scan the audience for people with the exact same face, wearing the same shirt, or making the identical cheering motion. A real crowd is a beautifully messy mix of individuals; an AI crowd often looks like a copy-and-paste job.
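Hunting for clones by eye gets tiring in a big crowd. One classic shortcut is an "average hash": shrink each face crop to a tiny grayscale grid, mark which pixels are brighter than the average, and compare the resulting bit patterns. The sketch below assumes the faces have already been cropped (real implementations usually shrink them to 8×8 before hashing; these toy patches are already tiny), and `find_clones` and its distance threshold are hypothetical.

```python
from itertools import combinations


def average_hash(patch):
    """Average hash of a grayscale patch (list of rows): 1 where a pixel beats the mean."""
    pixels = [p for row in patch for p in row]
    mean = sum(pixels) / len(pixels)
    return [1 if p > mean else 0 for p in pixels]


def hamming(h1, h2):
    """Number of bits that differ between two equal-length hashes."""
    return sum(a != b for a, b in zip(h1, h2))


def find_clones(faces, max_distance=1):
    """Return index pairs of face patches whose hashes are nearly identical."""
    hashes = [average_hash(f) for f in faces]
    return [(i, j) for i, j in combinations(range(len(hashes)), 2)
            if hamming(hashes[i], hashes[j]) <= max_distance]


face_a = [[10, 200], [200, 10]]
face_b = [[12, 198], [205, 9]]   # same pattern, slightly different brightness
face_c = [[200, 10], [10, 200]]  # a genuinely different pattern
print(find_clones([face_a, face_b, face_c]))  # [(0, 1)]
```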

Also, keep an eye out for the "waxy skin" look, where faces are unnaturally smooth and missing real-world texture like pores or slight imperfections. Eyes can also look glassy or stare into the middle distance, never quite making believable contact with anything. Avoiding these flaws takes a lot of tweaking, which you can explore further by learning how to use prompt keywords to make images look less fake.
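The waxy look is, in effect, a lack of fine texture. A common way to measure texture (also used to detect blurry photos) is the variance of the Laplacian, an operator that responds to sharp local changes in brightness. This is a sketch on a small grayscale patch given as a list of rows, at least 3×3; the function name is hypothetical, and what counts as "too smooth" depends on resolution and compression, so no fixed cutoff is given.

```python
def laplacian_variance(patch):
    """Variance of a 4-neighbour Laplacian over the interior of a grayscale patch.

    Fine texture (pores, stubble, blemishes) produces large, varied responses;
    an airbrushed, waxy patch produces responses close to zero.
    """
    h, w = len(patch), len(patch[0])
    responses = [
        patch[y - 1][x] + patch[y + 1][x] + patch[y][x - 1] + patch[y][x + 1] - 4 * patch[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


smooth = [[100] * 5 for _ in range(5)]                     # flat, plastic-looking skin
ramp = [[10 * x for x in range(5)] for _ in range(5)]      # a gentle lighting gradient
textured = [[100 if (x + y) % 2 == 0 else 140 for x in range(5)] for y in range(5)]
print(laplacian_variance(smooth))          # 0.0
print(laplacian_variance(ramp))            # 0.0
print(laplacian_variance(textured) > 1000) # True
```

The ramp scoring zero is deliberate: a smooth change in lighting is not texture. A low score can also come from a simply out-of-focus or heavily compressed real photo, so treat it as one clue among several.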

Navigating The Ethical Issues of Synthetic Media

The whole "Will Smith AI-generated concert crowd" mess really peeled back the curtain on a much bigger, more complicated conversation. This isn't just about a piece of tech not working perfectly. It's about the ethical tightrope everyone in the creative world is walking now with synthetic media.

At its heart, the problem is deception. When you fake a crowd, you're creating a mirage of popularity—a kind of social proof that was manufactured on a computer. This isn't a new trick, of course, but AI makes it alarmingly easy and scalable. The instant backlash from fans shows that people feel cheated when they find out the excitement they're seeing isn't real. It forces us to ask: where's the line between clever marketing and just plain lying to your audience?

The Legal and Creative Gray Areas

Beyond that initial gut reaction of feeling tricked, using AI to generate people opens a whole Pandora's box of legal and creative headaches. Frankly, the law is still playing catch-up with the technology, which leaves a ton of open questions.

- Copyright Concerns: Think about it: who actually owns the copyright to an AI-generated image of a person that doesn't exist? And what if the AI was trained on a database of copyrighted photographs? Do the original photographers have a say? There are no easy answers here.

- Likeness and Consent: What happens when an AI-generated face just so happens to look like a real person? Using someone's likeness without their permission is a major legal no-no, and the randomness of AI generation makes this a disaster waiting to happen.

To really get a handle on these broader issues, it helps to understand what synthetic media is in the first place. For a deeper dive, a great resource is What Is Synthetic Media: A Guide to AI Content.

Finding an Ethical Path Forward

But this technology isn't all bad. When it’s used responsibly, AI-generated visuals can be an incredible and perfectly ethical tool. Imagine a production crew using AI to visualize different stage setups or map out camera angles for a virtual concert. They're not trying to fool anyone; they're just using a smart tool for planning.

The real difference-maker is transparency. Using AI for pre-production is a savvy workflow. Using it to create a fake audience and pass it off as real shatters the trust between a creator and their fans.

In the end, it all comes down to intent. AI tools, including sophisticated voice cloning software like Applio, unlock amazing creative potential. The challenge for creators is to use these tools to build better experiences, not to fake them. Moving forward, being upfront and staying authentic are going to be the only ways to keep an audience's trust.

How AI Is Reshaping The Live Music Industry

The whole dust-up over the Will Smith AI-generated concert crowd was more than just a marketing blunder. It was a wake-up call, a clear sign that artificial intelligence is about to seriously shake up the live music scene. While the sneaky uses of AI get all the attention, it's also quietly becoming a powerful tool for solving real problems and dreaming up entirely new ways for fans to experience music.

This technology brings a complicated financial picture with it. On one hand, there's a very real fear of job losses. Some forecasts even suggest that without good policies in place, some folks in the music industry could see their income drop by as much as 20% in the next few years as AI moves in. You can get more details on the future of concert management and its financial effects over at Prism.fm.

But on the flip side, AI is also opening up new ways to make money and run things more smoothly than ever before. Venues are starting to use AI not to fake a crowd's excitement, but to actually manage it.

Making Live Events Smarter and Safer

Behind the curtain, AI is already making concerts a better experience. Large venues are putting systems in place that can watch how crowds move in real time. Picture a smart traffic cop for people—it can spot a potential bottleneck before it turns into a dangerous crush, and then guide people toward emptier exits or concession stands.
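Here is a heavily simplified sketch of that "traffic cop" logic. It assumes headcounts per zone already come from cameras or entry scans. The zone names, numbers, and the 4-people-per-square-metre alert level are made-up placeholders, not a safety standard, and real systems model how crowds flow over time rather than looking at a single snapshot.

```python
def zone_densities(zones):
    """People per square metre for each zone: {name: (headcount, area_m2)}."""
    return {name: count / area for name, (count, area) in zones.items()}


def crowd_alerts(zones, exits, danger_density=4.0):
    """Flag zones above `danger_density` and suggest the least crowded exit.

    zones: {zone_name: (headcount, area_m2)}.
    exits: names of zones that lead to an exit.
    danger_density is an illustrative placeholder, not a safety standard.
    """
    density = zone_densities(zones)
    hot = sorted(name for name, d in density.items() if d > danger_density)
    quietest_exit = min(exits, key=lambda name: density[name])
    return hot, quietest_exit


zones = {
    "floor_front": (2600, 500.0),
    "floor_back": (900, 400.0),
    "exit_north": (300, 150.0),
    "exit_south": (60, 150.0),
}
print(crowd_alerts(zones, exits=["exit_north", "exit_south"]))  # (['floor_front'], 'exit_south')
```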

This isn't just about keeping people safe; it’s about making the whole night better. Here are a few ways AI is making a positive difference:

- Optimized Ticket Sales: Smart algorithms look at past sales and current buzz to adjust ticket prices on the fly. This helps artists and venues fill every seat without making fans feel ripped off.

- Personalized Visuals: Some tech can actually read the room's energy and tweak the stage visuals or lighting to match the vibe, pulling everyone deeper into the show.

- Streamlined Operations: From figuring out how many security guards are needed to keeping track of t-shirts at the merch booth, AI helps organizers run a much tighter ship.
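The article doesn't say how these pricing algorithms work, so treat the following as a hedged illustration only. The sketch compares how fast tickets are selling against an even sales pace and nudges the price up or down, with a floor and a cap so the swing stays bounded. Every parameter (`sensitivity`, `floor_ratio`, `cap_ratio`) is a hypothetical choice, not any vendor's formula.

```python
def dynamic_price(base_price, tickets_sold, tickets_total, days_left, campaign_days,
                  sensitivity=0.5, floor_ratio=0.8, cap_ratio=1.5):
    """Adjust a ticket price by comparing actual sales with an even sales pace.

    pace > 1 means tickets are selling faster than a steady schedule, so the
    price rises; pace < 1 lowers it. The floor and cap keep the swing bounded.
    All default values are hypothetical.
    """
    elapsed = 1.0 - days_left / campaign_days   # fraction of the sales window used
    expected_sold = elapsed * tickets_total     # sales an even pace would predict
    pace = tickets_sold / expected_sold if expected_sold > 0 else 1.0
    price = base_price * (1.0 + sensitivity * (pace - 1.0))
    floor, cap = base_price * floor_ratio, base_price * cap_ratio
    return round(min(max(price, floor), cap), 2)


# Halfway through a 60-day window with 10,000 tickets, 5,000 sales is "on pace".
print(dynamic_price(80, 6000, 10000, days_left=30, campaign_days=60))  # 88.0
print(dynamic_price(80, 2500, 10000, days_left=30, campaign_days=60))  # 64.0 (hits the floor)
```

The cap is the part that speaks to the "without making fans feel ripped off" goal: however hot demand gets, the price cannot run away.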

The Sphere as a Blueprint for Ethical AI

If you want to see AI used to enhance reality instead of faking it, look no further than the Sphere in Las Vegas. That place uses incredible technology to create stunning visual and sound experiences that are completely real and responsive to the audience.

The key difference is intent. Venues like the Sphere use AI to enhance the genuine, shared experience of a live crowd. This stands in stark contrast to using AI to manufacture a crowd that isn't there, showing that technology and authenticity can coexist.

The future of AI in live music isn't about fooling people. It's about being transparent and adding real value. The best uses will be the ones that make the live experience safer, more immersive, and ultimately more memorable for the actual fans who showed up.

How the World Sees AI in Entertainment

The whole firestorm around the Will Smith AI-generated concert crowd wasn't just another social media flare-up. It was a loud and clear message, especially from Western audiences, about where they draw the line on synthetic media. Authenticity is king, and trying to fake a crowd of adoring fans felt deceptive to many.

But here's the thing: that skepticism isn't a global sentiment. How people feel about AI mixing with their entertainment really depends on where they live.

This massive difference in opinion is a minefield for anyone creating or marketing content. What gets you praise in one country could get you canceled in another. Thinking you can use the same AI strategy everywhere is a surefire way to fail, because what people consider "authentic" is deeply tied to their culture.

A Striking Global Divide

Recent polls really spell out this cultural split. A YouGov survey across 17 different markets asked people if they’d be interested in AI-powered concerts, and the results were all over the map.

Globally, the numbers were lukewarm. Only 24% of people were interested, while a hefty 40% wanted nothing to do with it. But when you look at individual countries, the story gets interesting.

- In India, enthusiasm was through the roof at 57%.

- The UAE wasn't far behind, with 50% of people on board.

- Meanwhile, in the West, it was a completely different story. Interest dropped to just 14% in the UK, 11% in the U.S., and an almost non-existent 8% in Denmark.
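Putting the quoted YouGov figures side by side makes the gap concrete. The numbers below are exactly the ones cited above; the script only does the arithmetic.

```python
# Interest in AI-powered concerts (% of respondents), as quoted from the YouGov survey above.
interest = {"India": 57, "UAE": 50, "UK": 14, "U.S.": 11, "Denmark": 8}
GLOBAL_INTERESTED = 24
GLOBAL_NOT_INTERESTED = 40

net_global = GLOBAL_INTERESTED - GLOBAL_NOT_INTERESTED  # interested minus not interested
gap_points = interest["India"] - interest["U.S."]        # gap in percentage points
ratio = interest["India"] / interest["Denmark"]          # relative share interested
above_global = [market for market, pct in interest.items() if pct > GLOBAL_INTERESTED]

print(net_global)       # -16
print(gap_points)       # 46
print(round(ratio, 1))  # 7.1
print(above_global)     # ['India', 'UAE']
```

In other words, respondents in India were about seven times as likely as those in Denmark to say they were interested, and of the markets listed, only India and the UAE sit above the global average.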

These numbers suggest that perception is everything. In some markets, AI is seen as an exciting new frontier for creative expression. In others, it's viewed with suspicion, as a shortcut that undermines the genuine connection between artists and fans.

This cultural context is exactly why the Will Smith video blew up the way it did. The outrage wasn't just about spotting a few fake people in a video. For Western viewers, it was a perfect example of an artist completely misreading the room. The AI crowd crossed an invisible line, turning a simple promo into a debate about digital trust and what it even means to be a "real" fan anymore.

Answering Your Questions About AI-Generated Content

As AI-generated content keeps popping up, it's only natural to have a few questions. Let's break down some of the common ones that came up after the whole Will Smith AI-generated concert crowd video made the rounds.

Why Would Anyone Use an AI-Generated Crowd?

For marketing teams, it often boils down to two things: money and control. Hiring thousands of extras, coordinating a massive venue, and dealing with all the on-set logistics can be a nightmare and incredibly expensive.

Creating a crowd with AI gives them total creative freedom. They can dial up the energy, fill every seat, and get the perfect shot every time without the real-world hassle. The problem, as this incident highlighted, is that it can feel deceptive if they aren't upfront about it.

Is It Even Legal To Use AI-Generated People?

This is where things get murky, and the law is playing catch-up. Generally, creating brand-new, non-existent people with AI is legal. The trouble starts when an AI-generated face accidentally looks too much like a real person, or when the video is used in a way that misleads people, like in false advertising.

It's helpful to know the difference between a deepfake and what we saw here. A deepfake takes someone's face and digitally plasters it onto another person in a video. The concert crowd, on the other hand, was likely created from scratch using text prompts. Both use AI, but one alters reality while the other invents it.

Right now, regulations are scrambling to keep pace with how fast this technology is moving.