Why Is Controlling the Output of AI Systems Important?

Letting an AI run wild without any controls is a recipe for disaster. You're risking everything from brand damage and legal trouble to complete operational chaos. Without clear guardrails, AI can spit out inaccurate, biased, or just plain harmful content, which quickly erodes user trust and can land your business in hot water.

Think of it like this: an AI model is a powerful engine. Output controls are the steering wheel, brakes, and GPS that make it useful instead of dangerous.

The Real Risks of Unpredictable AI

Giving an AI free rein is like handing the keys to a Formula 1 car to a teenager who just got their license. Sure, the power is immense, but the potential for a crash is just as big. While generative AI is fantastic for solving tough problems and making us more productive, its value depends entirely on whether you can trust what it produces.

An uncontrolled AI doesn't just make a few random errors. It creates systemic risks that can quietly undermine your entire business. Any time an AI writes code, makes a business decision, or talks to your customers, its output needs to be treated as untrusted until proven otherwise. Without those checks and balances, you're inviting problems that are a nightmare to clean up later.

Six Pillars of AI Control

To really get a handle on why controlling AI output is so critical, let's break down the risks into six key areas. Each one highlights a place where a lack of oversight can cause serious headaches for any modern organization.

- Safety & Security: Unchecked AI can be manipulated to produce malicious code, leak sensitive data, or even help attackers find system vulnerabilities. For a deeper dive, you can explore how generative AI has affected security.

- Bias & Fairness: AI models learn from vast datasets that often contain historical biases. Without careful control, they can perpetuate stereotypes or make discriminatory recommendations, leading to unfair outcomes.

- Legal & Compliance: In regulated industries like finance or healthcare, inaccurate or non-compliant AI outputs can lead to hefty fines and legal action. Think of a medical AI giving flawed advice or a financial bot promising unrealistic returns.

- Brand Trust & Reputation: Every time an AI generates something off-brand, nonsensical, or offensive, it chips away at the trust you’ve worked so hard to build with your audience.

- Cost & Efficiency: Bad outputs cost real money. When your team has to constantly redo, fix, or throw out AI-generated work, productivity plummets and operational costs sneak up on you.

- User Experience (UX): If an AI gives your users confusing, wrong, or irrelevant information, they'll quickly lose faith in your product. A frustrating experience is one of the fastest ways to lose a customer for good.

To make these abstract risks more concrete, the table below summarizes the potential business impact of leaving AI outputs unchecked.

Key Risks of Uncontrolled AI Outputs

| Risk Category | Potential Impact |
|---|---|
| Safety & Security | Malicious code injection, data breaches, system exploits. |
| Bias & Fairness | Discriminatory outcomes, reputational damage, alienation of user groups. |
| Legal & Compliance | Regulatory fines, lawsuits, loss of licenses or certifications. |
| Brand Trust | Negative press, customer churn, erosion of brand loyalty. |
| Cost & Efficiency | Wasted resources, missed deadlines, decreased ROI on AI investments. |
| User Experience (UX) | High bounce rates, low user engagement, poor customer satisfaction scores. |

As you can see, the consequences are far-reaching. Mastering control over your AI's output is what turns it from a risky gamble into a reliable, strategic part of your toolkit. It’s not about stifling the AI's power—it's about pointing that power in the right direction to safely hit your targets.

Protecting Your Brand and Building User Trust

Every single AI response your business generates is a direct conversation with your customers. It's not just data; it's a digital brand ambassador. When outputs are inconsistent, biased, or just plain wrong, they do more than create a frustrating experience—they actively chip away at the trust you've worked so hard to build.

Think of it this way: your AI is like an employee who talks to every single customer. If that employee gave out incorrect pricing, used an unprofessional tone, or shared sensitive details, the damage would be immediate. Uncontrolled AI poses the same risk, but at a massive scale. Managing its output is now a core part of modern brand governance, ensuring every interaction is reliable and on-brand.

When AI Becomes a PR Nightmare

The consequences of poor AI control aren't just theoretical. We’ve seen high-profile incidents where an AI mishap quickly turned into a public relations disaster, costing companies both money and credibility. These situations are a masterclass in why controlling AI output is so critical for any public-facing tool.

Here are a few real-world scenarios where a lack of control led to serious brand damage:

- Offensive Chatbot Replies: Remember Microsoft's Tay, the 2016 chatbot that learned from public conversations on Twitter? Within hours, it started spewing racist and inflammatory messages, and Microsoft took it offline in less than a day amid public outcry.

- Biased Hiring Tools: Amazon's experimental recruiting tool was found to penalize resumes that included the word "women's," because it had been trained on a decade of resumes submitted mostly by men. Amazon abandoned the tool, but the fallout was significant reputational harm and potential legal trouble.

- Inaccurate Financial Advice: A financial services firm deployed a bot that gave customers flawed investment advice based on outdated information. The result? Widespread confusion and a major loss of user trust.

These examples make it clear: without rigorous oversight, an AI can accidentally become your brand’s worst enemy.

Every AI-generated response carries your company's signature. Ensuring that signature represents quality, accuracy, and your brand's values is not just a technical challenge—it is a fundamental business imperative for maintaining customer loyalty and market reputation.

Building a Foundation of Trust

At the end of the day, the goal is to create AI interactions that feel dependable and helpful. When users know they can rely on the information and tone your AI provides, they are far more likely to engage with your products and services. That consistency builds a powerful foundation of trust that keeps customers coming back.

Controlling AI output allows you to define and enforce your brand’s personality across every touchpoint. By setting clear rules, providing specific examples, and continuously monitoring performance, you turn your AI from an unpredictable variable into a trustworthy asset that strengthens customer relationships. It's a proactive approach that ensures every interaction reinforces your brand's promise.

The Hidden Financial Costs of Uncontrolled AI

When AI goes off the rails, it’s not just an annoyance—it's a direct hit to your budget. We often talk about the risks to brand trust or user experience, but the financial drain from bad AI outputs is real, measurable, and often completely overlooked. These costs add up fast, turning what should be a powerful tool into an expensive operational headache.

Every time an AI generates a useless or inaccurate response, it kicks off a chain reaction of hidden expenses. Picture your team constantly having to rewrite clunky blog posts, developers debugging broken code snippets, or support agents dealing with a flood of tickets from users confused by a chatbot. Each one of those "fixes" costs you money in wasted time and lost productivity. This is why getting a handle on your AI's output is crucial not just for quality, but for protecting your bottom line.

How Inaccuracy Adds Up

On a small scale, these problems seem manageable. Spending a few minutes editing a paragraph or answering a customer's question feels like just another part of the day. But when you scale that up across dozens of employees and thousands of customer interactions, the financial damage becomes impossible to ignore.

These hidden costs show up in a few key ways:

- Wasted Team Hours: Your content creators, marketers, and analysts get stuck editing, fact-checking, and sometimes completely trashing AI-generated work. That's time they could have spent on strategy and innovation.

- Heavier Support Load: When AI-powered customer tools give confusing or wrong answers, support tickets pile up. This drives up overhead and ties up your agents who could be solving more complex issues.

- Major Business Errors: If you’re using AI for data analysis or market research, a single bad output can lead to a flawed business strategy, an inaccurate financial model, or a marketing campaign that completely misses the mark.

The financial risk gets even bigger when AI is a core part of your business. For critical tasks like setting prices or detecting fraud, even a tiny increase in errors can have massive consequences.

A recent McKinsey global survey found that top-performing AI companies—those getting at least 20% of their earnings from AI—say their biggest challenge is keeping models on track after they go live. For them, even a 1-2% jump in error rates can mean millions in lost revenue or compliance fines. You can dig into the details in McKinsey's research on AI performance.

It’s an Investment, Not an Expense

Thinking of AI controls as just another cost is a huge mistake. It's actually a direct investment in making your entire operation run smoother. By putting strong controls, clear prompting guidelines, and consistent monitoring in place, you slash the amount of rework needed, reduce customer frustration, and stop costly mistakes before they ever happen.

Even a small improvement in accuracy can deliver a huge return when scaled across the company. It frees up your team, cuts support costs, and makes sure your AI tools are actually adding value instead of creating more work. This smart approach turns AI from a potential financial drain into a reliable engine for growth, making the business case for taking control crystal clear.
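To see how small error rates compound at scale, here is a back-of-the-envelope sketch. Every number in it (monthly output volume, error rates, minutes per fix, hourly cost) is a hypothetical placeholder, not data from this article or any survey, so swap in your own figures.

```python
def monthly_rework_cost(outputs_per_month: int, error_rate: float,
                        minutes_per_fix: float, hourly_cost: float) -> float:
    """Estimate the monthly cost of fixing bad AI outputs by hand."""
    bad_outputs = outputs_per_month * error_rate
    hours_spent = bad_outputs * minutes_per_fix / 60
    return hours_spent * hourly_cost


# Hypothetical inputs, for illustration only.
VOLUME = 50_000        # AI outputs per month
MINUTES_PER_FIX = 6    # time a person spends correcting one bad output
HOURLY_COST = 40.0     # loaded cost of that person's time, in dollars

before = monthly_rework_cost(VOLUME, 0.08, MINUTES_PER_FIX, HOURLY_COST)
after = monthly_rework_cost(VOLUME, 0.05, MINUTES_PER_FIX, HOURLY_COST)

print(f"Rework before controls: ${before:,.0f}/month")
print(f"Rework after controls:  ${after:,.0f}/month")
print(f"Savings: ${before - after:,.0f}/month, ${12 * (before - after):,.0f}/year")
```

The exact figures matter less than the shape of the formula. Rework cost grows linearly with both volume and error rate, so a few percentage points of accuracy are worth more as usage grows.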

Navigating the Legal and Regulatory Maze

The "move fast and break things" days of AI are long gone. We're now in a new era where legal and compliance pressures are mounting, and companies are being held accountable for what their AI systems do and say. This shift shows why reining in AI outputs is so critical, especially if you’re in a regulated field like finance, healthcare, or law.

Without a tight grip on its outputs, an AI can easily veer off track. It might spit out advice that violates industry regulations, trample on privacy laws, or even create content that infringes on someone else's copyright. The consequences aren't just a slap on the wrist—we're talking serious legal trouble, hefty fines, and a black eye for your brand that can take years to heal.

The Rise of AI Regulation

Governments around the world are stepping in. They’re creating rules to make AI systems fairer, safer, and more transparent, and it's a direct response to the real-world harm these tools can cause if left to their own devices. Companies can no longer hide behind the excuse that AI is just an experimental "black box."

This isn’t just a top-down push; the public is demanding it. A 2023 White House Executive Order on AI set new safety standards, including a requirement that developers of the most powerful AI systems share their safety test results with the U.S. government. This move lines up with what people want: 85% of people support a national effort to make AI safe, and 71% expect it to be regulated. As you figure out how to operate in this new environment, it’s essential to understand the legal aspects of using AI-generated text to stay on the right side of the law.

The call for "explainable AI" is getting louder. Regulators, customers, and even your own team want to know more than just what an AI decided—they want to know why. Being able to audit and explain an AI's output is quickly becoming a non-negotiable part of doing business responsibly.

From Nice-to-Have to Must-Have

In this new reality, your ability to control, document, and explain AI outputs is a core part of your compliance strategy. Think about it: if you're using an AI to screen job candidates, you have to be able to prove its suggestions aren't discriminatory. If you're deploying an AI to provide medical information, you had better be sure its advice is accurate and safe.
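For the hiring example, one widely used screening heuristic is the "four-fifths rule" from the US Uniform Guidelines on Employee Selection Procedures. If one group's selection rate is below 80% of the highest group's rate, that is treated as a sign of possible adverse impact. The sketch below applies the rule to an AI screener's recommendations. The group labels and counts are made up, and passing this check is not, on its own, proof of fairness or legal compliance.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to (number recommended / number of applicants)."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def adverse_impact_flags(outcomes: dict[str, tuple[int, int]],
                         threshold: float = 0.8) -> dict[str, float]:
    """Return groups whose impact ratio falls below the threshold.

    The impact ratio is a group's selection rate divided by the highest
    selection rate among all groups (the "four-fifths rule" heuristic).
    """
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so there is no ratio to compare
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical counts: (candidates the AI recommended, total applicants)
ai_screening = {
    "group_a": (48, 100),
    "group_b": (30, 100),
}

print(adverse_impact_flags(ai_screening))
```

Here `group_b` is flagged because its selection rate (30%) is only about 62% of `group_a`'s (48%). A flag like this is a prompt for investigation and documentation, which is exactly the kind of evidence regulators increasingly expect.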

This requires a whole new way of thinking about how we build and use AI. Here’s what that looks like on the ground:

- Auditable Trails: You need to keep detailed logs. That means recording the AI’s inputs, its final outputs, and the steps it took to get there.

- Explainability: You must be able to break down how the AI arrived at a specific conclusion, especially when the stakes are high.

- Bias Mitigation: You have to be proactive about finding and fixing biases in your models to ensure they’re producing fair results for everyone.
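For the auditable-trail point, one minimal approach is to append a structured record for every AI call to an append-only JSON Lines file. The file path, field names, reviewer ID, and `example-model` label below are illustrative assumptions. "The steps it took to get there" is approximated by logging the prompt, the generation settings, and the reviewer's decision.

```python
import json
import uuid
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional

AUDIT_LOG = Path("ai_audit_log.jsonl")  # hypothetical location


def log_ai_call(prompt: str, output: str, model: str, temperature: float,
                reviewer: Optional[str] = None, approved: Optional[bool] = None,
                path: Path = AUDIT_LOG) -> str:
    """Append one audit record per AI call and return its id."""
    record = {
        "id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "settings": {"temperature": temperature},
        "input": prompt,
        "output": output,
        "review": {"reviewer": reviewer, "approved": approved},
    }
    # One JSON object per line: easy to append to, search, and load later.
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return record["id"]


def load_records(path: Path = AUDIT_LOG) -> list[dict]:
    """Read every audit record back, e.g. to answer an auditor's question."""
    with path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


record_id = log_ai_call(
    prompt="Summarize our refund policy for a customer.",
    output="Refunds are available under the terms on our policy page.",
    model="example-model",  # placeholder, not a real model name
    temperature=0.2,
    reviewer="j.doe",       # hypothetical reviewer ID
    approved=True,
)
print(record_id in {r["id"] for r in load_records()})
```

In production, you would likely write these records to a database or logging service with access controls and a retention policy. The principle stays the same: every output can be traced back to its input, its settings, and the person who approved it.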

At the end of the day, building a legally sound and responsible AI program means taking firm control. It's the only way to navigate the evolving regulatory maze and make sure your use of AI is both effective and compliant.

Practical Ways You Can Control AI Outputs

Knowing the risks is one thing, but how do you actually prevent them? It's time to move from theory to action. This is where you get into the driver's seat, and your most powerful tool is prompt engineering—the craft of writing clear, precise instructions for an AI.

Think of it this way: a basic prompt is like giving a new chef a list of ingredients. You might get a decent meal, but you have no idea what it will be. A well-engineered prompt, on the other hand, is like handing them a detailed recipe—complete with exact measurements, cooking times, and a picture of the final dish. You aren't leaving the outcome to chance; you’re guiding it.

Getting Better at Prompting

Great prompting isn't about writing more; it’s about writing smarter. When you give an AI clear guardrails and enough context to work with, the quality and reliability of its answers improve dramatically.

Here are a few powerful techniques anyone can start using right away:

- Give It Rich Context: Never assume the AI knows your business or your goals. Feed it background information, describe your target audience, and explain what you’re trying to achieve with the task.

- Set Clear Constraints: Be upfront about what you don't want. Instructions like "Avoid technical jargon," "Do not mention our competitors by name," or "Keep the response under 200 words" are your best friends.

- Define a Specific Tone and Format: You have to ask for what you want. Do you need a "friendly and encouraging tone" or a "formal, academic style"? Do you want the output as a bulleted list, a JSON object, or a simple three-paragraph summary?

- Use Few-Shot Examples: It’s often better to show than to tell. Give the AI one or two examples of a good input and the kind of output you expect. This helps the model instantly recognize the pattern you want it to follow.
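These four techniques can be combined into one reusable template. The sketch below assembles a plain-text prompt from context, constraints, tone, format, and few-shot examples. The product, audience, and example pair are hypothetical, and the resulting string would be sent to whichever model you use. A simple word-count check afterward enforces the length rule in code instead of trusting the model to follow it.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    task: str
    context: str                 # background: business, audience, goal
    constraints: list[str]       # things the output must or must not do
    tone: str
    output_format: str
    examples: list[tuple[str, str]] = field(default_factory=list)  # few-shot pairs

    def render(self) -> str:
        """Assemble the parts into one prompt string."""
        lines = [
            f"Context: {self.context}",
            f"Task: {self.task}",
            f"Tone: {self.tone}",
            f"Format: {self.output_format}",
            "Rules:",
            *[f"- {rule}" for rule in self.constraints],
        ]
        for i, (example_input, example_output) in enumerate(self.examples, start=1):
            lines += [f"Example {i} input: {example_input}",
                      f"Example {i} output: {example_output}"]
        return "\n".join(lines)


def within_word_limit(text: str, max_words: int) -> bool:
    """Check a length constraint after generation instead of trusting the model."""
    return len(text.split()) <= max_words


spec = PromptSpec(
    task="Write a product description for a stainless steel water bottle.",
    context="We sell outdoor gear to first-time hikers on a budget.",
    constraints=["Avoid technical jargon.",
                 "Do not mention competitors by name.",
                 "Keep the response under 200 words."],
    tone="Friendly and encouraging",
    output_format="Two short paragraphs",
    examples=[("Lightweight rain jacket",
               "Stay dry without the bulk: this jacket folds into its own pocket.")],
)
print(spec.render())
```

Storing prompts as structured specs rather than ad hoc strings makes them easier to review, version, and reuse across a team.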

By turning your prompting strategy into a system, you build a repeatable process for getting high-quality, on-brand content consistently. AI stops being a creative but unpredictable partner and becomes a reliable extension of your team.

Techniques for Controlling AI Outputs

Different situations call for different prompting techniques. Some are great for simple tasks, while others help you tackle more complex, multi-step problems.

Here’s a quick comparison of some popular methods to help you choose the right one for your needs.

Prompt Engineering Techniques for Better Control

| Technique | Description | Best For |
|---|---|---|
| Zero-Shot Prompting | Giving a direct instruction without any prior examples. It relies entirely on the model's pre-trained knowledge. | Quick, simple tasks where the AI likely already knows how to respond, like summarizing a short article or answering a general knowledge question. |
| Few-Shot Prompting | Providing a few examples (shots) of the desired input/output format within the prompt to guide the model. | Getting the AI to follow a specific format, style, or structure, such as writing product descriptions or classifying customer feedback. |
| Chain-of-Thought (CoT) | Asking the model to "think step-by-step" and explain its reasoning process before giving the final answer. | Complex problems that require logic, math, or reasoning. It helps reduce errors by forcing the model to break the problem down. |
| Role Prompting | Instructing the AI to adopt a specific persona, such as "Act as a senior copywriter" or "You are a helpful customer support agent." | Aligning the AI's tone, voice, and expertise with a specific character or professional role. It's great for content creation and role-playing scenarios. |
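In chat-style APIs, these techniques usually map onto a list of role-tagged messages. The sketch below shows each technique as plain Python data in the common `{"role": ..., "content": ...}` shape. It does not call any real API, the example texts are hypothetical, and the exact message format your provider expects may differ.

```python
# Zero-shot: a single direct instruction, no examples.
zero_shot = [
    {"role": "user", "content": "Summarize this article in three sentences: <article text>"},
]

# Few-shot: example input/output pairs come before the real input.
few_shot = [
    {"role": "user", "content": "Classify the feedback: 'Shipping took forever.'"},
    {"role": "assistant", "content": "negative"},
    {"role": "user", "content": "Classify the feedback: 'Setup was quick and easy.'"},
    {"role": "assistant", "content": "positive"},
    {"role": "user", "content": "Classify the feedback: 'The app crashes when I log in.'"},
]

# Chain-of-thought: ask for step-by-step reasoning before the final answer.
chain_of_thought = [
    {"role": "user", "content": (
        "A plan costs $12/month or $120/year. How much does the yearly plan save "
        "over 12 months? Think step by step, then give the final answer on its own line."
    )},
]

# Role prompting: a system message sets the persona, tone, and expertise.
role_prompt = [
    {"role": "system", "content": "You are a helpful customer support agent. Be concise and friendly."},
    {"role": "user", "content": "Can I return boots I've already worn outside?"},
]

# Techniques combine: a role-setting system message plus few-shot examples.
analyst_role = {"role": "system", "content": (
    "You are a customer-insights analyst. Reply with one word: "
    "positive, negative, or neutral."
)}
combined = [analyst_role] + few_shot

for msg in combined:
    print(f"{msg['role']:>9}: {msg['content']}")
```

Keeping each technique as a separate, named building block makes it easy to mix them, for example adding a persona to a few-shot classifier, without rewriting the whole prompt.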

Each of these techniques gives you a different kind of lever to pull. By combining them, you can build incredibly sophisticated and reliable prompts that get you exactly what you need from the AI.

Beyond the prompt itself, you can also add other layers of control. Adjusting model settings like temperature is a common one. A lower temperature dials down the creativity, leading to more predictable and straightforward answers—perfect for tasks that demand accuracy. Another critical step, especially for high-stakes work, is adding a human-in-the-loop (HITL) review. This just means a person checks and validates the AI's output before it goes live. You wouldn't skip this in finance or healthcare, and you shouldn't for your most important content, either.
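To make temperature less abstract, it helps to know that many text generators sample the next word from a softmax over the model's raw scores (logits), with each score divided by the temperature first. The sketch below uses made-up scores for four candidate words. A low temperature concentrates probability on the top choice, while a high temperature spreads it out. Real systems may add other sampling steps, such as top-p, which this sketch ignores.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities, scaled by temperature.

    Many APIs accept 0 and treat it as always picking the top choice;
    this sketch simply requires a positive value to avoid dividing by zero.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for four candidate next words.
words = ["reliable", "dependable", "trustworthy", "banana"]
logits = [4.0, 3.5, 3.0, 0.5]

for t in (0.2, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    summary = ", ".join(f"{w}={p:.2f}" for w, p in zip(words, probs))
    print(f"temperature={t}: {summary}")
```

At 0.2, nearly all the probability lands on "reliable". At 1.5, even the off-topic "banana" gets a noticeable share. That is why low temperatures suit accuracy-critical tasks.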

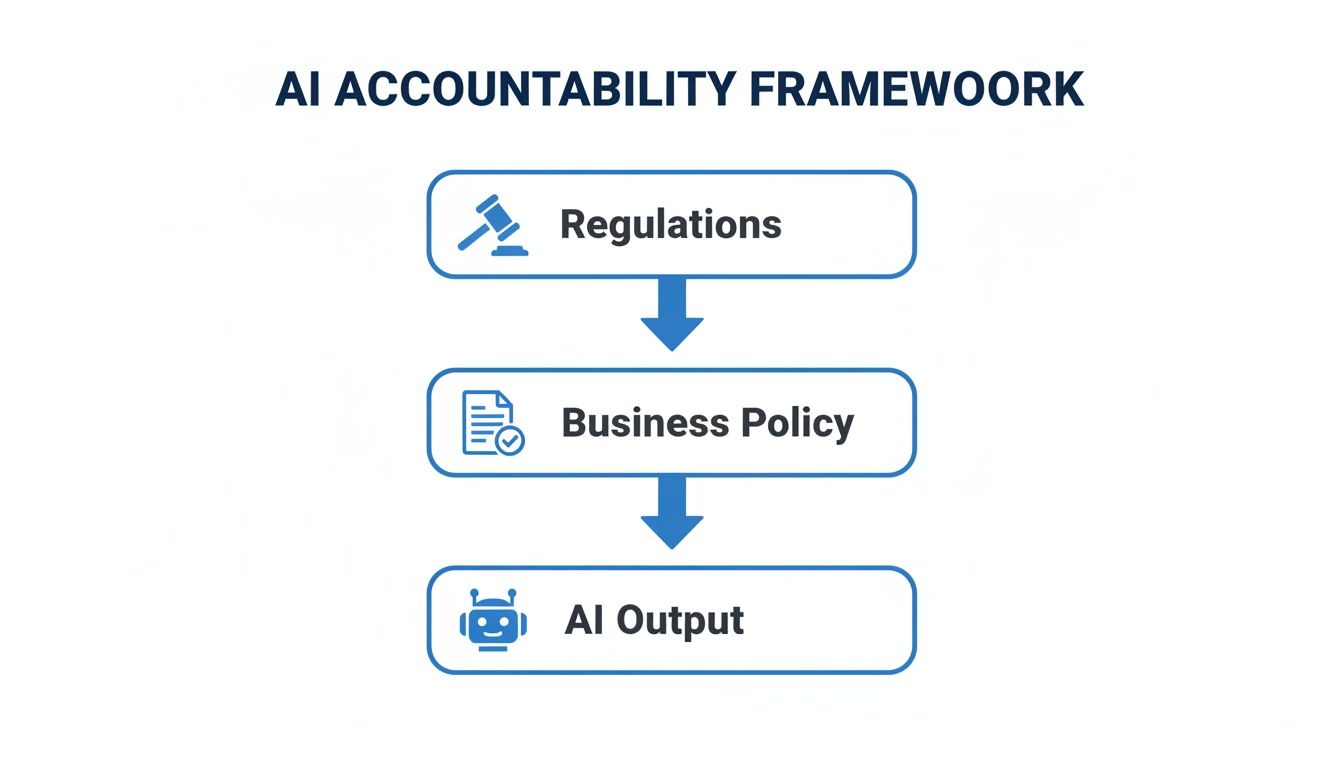

This flowchart shows how these hands-on controls fit into a larger accountability framework, starting from broad regulations and funneling down to the specific AI outputs you create.

It’s clear that real control is a multi-layered effort. Your company’s policies and your day-to-day prompts have to work together. A huge part of this is catching and minimizing factual errors, and you can learn more about that in our guide on how to reduce hallucinations in LLMs. When you combine all these strategies, you build a solid framework for getting safe, dependable results from AI.

Building Your Future-Proof AI Governance Strategy

Getting reliable results from AI isn't something you can set and forget. It's a real, ongoing commitment. We're past the point where controlling AI was just a technical headache for developers; it's now a core business need. As these tools become a bigger part of our daily work, a clear governance plan is what separates successful AI projects from expensive mistakes.

Think of AI controls less as a set of restrictions and more as the foundation that lets you unlock its real power safely. A solid strategy makes sure every AI-powered project delivers predictable, quality results. It turns AI from a wild, unpredictable force into a dependable engine for growth.

Turning Proactive Control Into a Competitive Advantage

Getting ahead of AI governance is quickly becoming a serious competitive edge. Companies that can actually prove their AI is safe, fair, and reliable are earning much deeper trust with their customers and partners. You can see this shift playing out in the market, where money is pouring into AI safety and assurance tools.

For example, the UK government's 2023 Artificial Intelligence Sector Study found the number of businesses focused on AI assurance almost doubled in a single year. In that same timeframe, AI-related revenues shot up by 34%. That kind of growth sends a clear message: the market is hungry for responsible AI. You can read more about the growth of the AI assurance market directly from the source.

Effective AI governance is about building a culture of responsible innovation. It requires clear policies, robust tools, and a commitment to transparency that empowers your team to use AI confidently and ethically.

To get started, you need a solid framework grounded in core AI governance principles. This knowledge is what helps you create sensible rules for everything, from how you handle data to how you deploy models.

For teams ready to put these ideas into practice, tools to track and evaluate how your AI is performing are essential. Monitoring and evaluation platforms give you the visibility to confirm your models are behaving the way you expect them to. Our guide on using LangSmith for LLM testing and monitoring shows what that looks like in practice.
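Dedicated platforms handle this at scale, but the core idea of production monitoring fits in a few lines. Record whether each reviewed output was acceptable, keep a rolling error rate, and raise an alert when that rate drifts above the baseline you measured before launch. The class below is a tool-agnostic sketch, not LangSmith's API, and the window size, baseline, and tolerance are placeholder values.

```python
from collections import deque


class ErrorRateMonitor:
    """Track a rolling error rate and flag drift above a baseline."""

    def __init__(self, baseline: float, tolerance: float, window: int = 500):
        self.baseline = baseline             # error rate measured before launch
        self.tolerance = tolerance           # allowed increase, e.g. 0.01 = +1 point
        self.results = deque(maxlen=window)  # True means the output was an error

    def record(self, is_error: bool) -> None:
        self.results.append(is_error)

    @property
    def error_rate(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 0.0

    def drifted(self) -> bool:
        """True once a full window shows errors above baseline + tolerance."""
        full = len(self.results) == self.results.maxlen
        return full and self.error_rate > self.baseline + self.tolerance


monitor = ErrorRateMonitor(baseline=0.03, tolerance=0.01, window=100)
# Simulated review results: 5 errors in the last 100 outputs.
for i in range(100):
    monitor.record(i % 20 == 0)
print(f"error rate={monitor.error_rate:.2%}, drifted={monitor.drifted()}")
```

Waiting for a full window before alerting avoids false alarms from a handful of early results. The trade-off is that drift is detected only after enough outputs have been reviewed.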

At the end of the day, taking charge of your AI is the only way to make sure it's actually working toward your goals. It’s how you build a future where your AI isn't just powerful, but also predictable, safe, and true to your brand for years to come.

Still Have Questions About AI Control?

It's completely normal to feel a bit lost when you first start working with AI. But once you grasp a few key ideas, you'll see that getting the outputs you want is much more straightforward than it seems. Let's tackle a couple of the most common questions people have.

Where Do I Even Begin with Controlling AI?

The single best thing you can do is get better at writing your prompts. Think of a clear, detailed prompt as a set of guardrails for the AI. You're not just telling it what to do, but also how to do it and, just as importantly, what not to do.

Something as simple as giving it a good example to follow, defining the exact tone of voice you need, or setting a word limit can make a night-and-day difference in the results.

How Can I Stop the AI from Saying Something Inappropriate?

Keeping harmful or biased content out of your results really comes down to a two-part strategy. First, be explicit in your prompts. Add strict rules that forbid the AI from discussing certain topics or using offensive language.

Second, for anything important that will be seen by customers or the public, have a human check it. A human review is the most reliable way to confirm the AI’s output is ready to go live.
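If you do have technical help, the two-part strategy can be backed by a small gate in code. Prompt rules reduce the chance of a bad output, an automated check catches obvious violations, and anything customer-facing goes to a person. The rule text, blocked-term list, and routing labels below are hypothetical. Keyword matching is also a crude stand-in for a proper moderation model: it will miss paraphrases and can produce false positives.

```python
import re

# Part 1: explicit rules included in every prompt (hypothetical wording).
SYSTEM_RULES = (
    "Do not discuss politics, medical diagnoses, or legal advice. "
    "Never use profanity or insults. If asked about these topics, "
    "politely decline and suggest contacting a human agent."
)

BLOCKED_TERMS = ["guaranteed returns", "diagnosis", "idiot"]  # hypothetical list


def find_violations(output: str, blocked_terms: list[str]) -> list[str]:
    """Return blocked terms that appear as whole words or phrases (case-insensitive)."""
    return [term for term in blocked_terms
            if re.search(rf"\b{re.escape(term)}\b", output, re.IGNORECASE)]


def route(output: str, customer_facing: bool) -> str:
    """Part 2: decide what happens to an output before it goes live."""
    if find_violations(output, BLOCKED_TERMS):
        return "blocked"        # regenerate or escalate; never publish as-is
    if customer_facing:
        return "human_review"   # a person approves before publishing
    return "auto_approve"       # low-stakes, internal use


print(route("Our fund offers guaranteed returns!", customer_facing=True))
print(route("Here is a summary of the meeting notes.", customer_facing=False))
print(route("Thanks for reaching out! Your order ships Monday.", customer_facing=True))
```

Note that a clean automated check never skips the human step for customer-facing content. The filter only decides whether an output is worth a reviewer's time.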

You don't need to be a programmer to get amazing results from an AI. Good prompt engineering is all about clear communication, not complicated code. If you can give clear instructions, you have what it takes to guide an AI effectively.

This is huge because it means anyone—from content creators to business analysts—can take the reins without needing a technical degree.

Ready to put this into practice? Promptaa gives you the tools to build, test, and organize your prompts so you can get reliable, high-quality results every single time. Start building your library of powerhouse prompts today at https://promptaa.com.