What Are Embeddings? Your Guide to AI Language

Think of embeddings as a universal translator for AI. They take complex, human-centric data—like words, images, and sounds—and convert it into a numerical language that machines can actually process. This isn't just a simple conversion; it creates a sophisticated "map" where related concepts are grouped together, allowing AI to finally grasp context and relationships.

What Are Embeddings? A Deeper Look

At its core, an embedding is a way of representing real-world objects and their relationships as a list of numbers, technically known as a vector. But don't get bogged down by the jargon. It’s more helpful to think of it as giving everything coordinates on a giant, multi-dimensional map of meaning.

With this map, an AI doesn't just know what a word is; it understands what it means by checking out its neighbors. For instance, the vectors for "king" and "queen" would be plotted very close to each other. The vector for "cabbage," on the other hand, would be miles away because it shares almost no contextual meaning. This concept of proximity is everything—it's how AI learns nuance.
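To make "proximity" concrete, here is a minimal sketch using cosine similarity, one common way to measure how close two vectors are. The three-number vectors below are hand-picked for illustration (an assumption, not output from a trained model); real embeddings have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; values near 1.0 mean 'pointing the same way'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vectors, chosen by hand so that royalty words sit together.
toy_vectors = {
    "king":    [0.90, 0.80, 0.10],
    "queen":   [0.85, 0.90, 0.15],
    "cabbage": [0.05, 0.10, 0.95],
}

king_queen = cosine_similarity(toy_vectors["king"], toy_vectors["queen"])
king_cabbage = cosine_similarity(toy_vectors["king"], toy_vectors["cabbage"])

print(f"king vs queen:   {king_queen:.3f}")
print(f"king vs cabbage: {king_cabbage:.3f}")
```

With these made-up numbers, "king" and "queen" score far higher than "king" and "cabbage," which is the whole idea of neighbors on the map.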

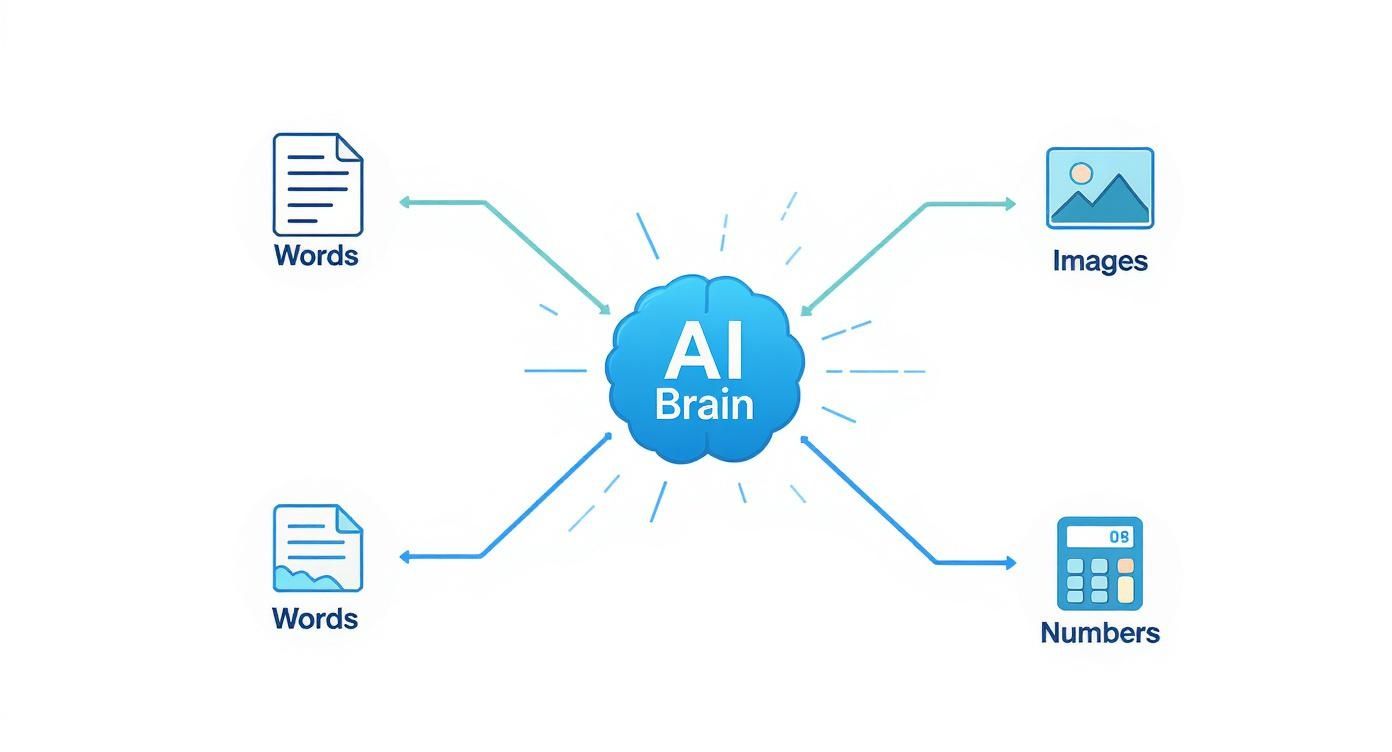

This infographic gives you a great visual breakdown of how an AI's "brain" takes different types of data and puts them onto this shared map.

As you can see, embeddings create a common language. They translate a jumble of inputs like text and images into a clean, numerical format that the AI can actually work with.

The Old Way vs. The New Way

To really appreciate embeddings, it helps to see what they replaced. Before embeddings, computers represented words using a clunky method called one-hot encoding. It was incredibly inefficient and couldn't grasp any relationships between words.

Let's compare the two approaches side-by-side.

Traditional Data vs Embeddings At a Glance

| Aspect | Traditional Method (One-Hot Encoding) | Embeddings (Vector Representation) |
|---|---|---|
| Representation | Each word is a unique, isolated token. | Words are represented as dense vectors in a continuous space. |
| Relationships | Cannot capture semantic similarity. "Cat" and "Kitten" are totally unrelated. | Proximity indicates similarity. "Cat" and "Kitten" are very close. |
| Dimensionality | Extremely high-dimensional (e.g., 50,000 words = a 50,000-dimension vector). | Low-dimensional (typically 100-300 dimensions), capturing rich meaning. |
| Efficiency | Very sparse and computationally expensive. | Dense and much more efficient to process and store. |
| Context | Completely context-blind. | Excellent at capturing contextual nuances and word relationships. |

The move from the old, sparse method to these new, dense vectors was a game-changer. It's the difference between a simple checklist and a rich, detailed map.
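A quick sketch of the contrast, assuming a tiny three-word vocabulary and hand-written dense vectors (both are illustrative assumptions). The dot product is used here as a simple similarity score.

```python
vocabulary = ["cat", "kitten", "car"]

def one_hot(word, vocab):
    """Vector of zeros with a single 1 at the word's position in the vocabulary."""
    return [1 if entry == word else 0 for entry in vocab]

def dot(a, b):
    """Dot product, used here as a simple similarity score."""
    return sum(x * y for x, y in zip(a, b))

cat_1h = one_hot("cat", vocabulary)
kitten_1h = one_hot("kitten", vocabulary)
one_hot_score = dot(cat_1h, kitten_1h)  # two different one-hot vectors never overlap

# Hypothetical dense vectors: made-up numbers, not from a trained model.
dense = {
    "cat":    [0.8, 0.6],
    "kitten": [0.7, 0.7],
    "car":    [-0.6, 0.1],
}
cat_kitten_score = dot(dense["cat"], dense["kitten"])
cat_car_score = dot(dense["cat"], dense["car"])

print("one-hot cat vs kitten:", one_hot_score)
print("dense cat vs kitten:  ", round(cat_kitten_score, 2))
print("dense cat vs car:     ", round(cat_car_score, 2))
```

Note that the one-hot vectors grow by one dimension for every new word, while the dense vectors keep a fixed size no matter how large the vocabulary gets.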

Why This Matters for AI

This ability to map relationships numerically is the engine behind countless AI features you probably use every single day. Without embeddings, AI systems would be stuck doing basic keyword matching. They'd never be able to figure out the subtle intent behind your search queries or give you genuinely useful recommendations.

By converting the world into vectors, embeddings give AI a form of intuition. It's the mechanism that allows a machine to understand that a "puppy" is a young "dog," or that a picture of a golden retriever is related to the text "a loyal canine."

This numerical representation is what unlocks advanced AI tasks, from powering sophisticated search engines to building chatbots that can actually hold a conversation. If you want to see how this all connects to the broader field of AI language, you can learn more from our guide on the basics of Natural Language Processing.

How AI Learns to Create Embeddings

Think of an AI learning to create embeddings not as a student memorizing facts, but as a detective learning to spot relationships. It doesn't read a dictionary. Instead, it ingests truly massive amounts of text—we're talking billions of sentences—and learns everything it knows from context alone. This is how it turns a chaotic mess of raw data into a structured, meaningful map.

For instance, an AI might process countless sentences that include the word "puppy." It will notice that "puppy" consistently shows up near words like "playful," "small," "fetch," and "cute." Over time, the model figures out that these words all belong in the same conceptual neighborhood. So, when it builds its map, it plots the vector for "puppy" right next to the vectors for those related terms.

This same intuitive logic extends to more abstract ideas. The model sees "king" appearing alongside "man" and "ruler," while "queen" tends to be near "woman" and "ruler." By processing these patterns across a gigantic dataset, it learns to represent these relationships mathematically.

The Role of Models Like Word2Vec

This learning process isn't just a happy accident; it's guided by specific algorithms designed to act like cartographers for language. They systematically map out the complex terrain of meaning. A couple of key approaches really shaped the field:

- Contextual Proximity: These models look at a "window" of words surrounding a target word. If words frequently pop up together inside that window, they're considered related and are pulled closer together in the vector space.

- Predictive Analysis: Other models learn by playing a sort of fill-in-the-blank game. Given a sentence like, "The dog chased its ___," the model's job is to predict the missing word ("tail"). The better it gets at this game, the deeper its understanding of which words fit which contexts becomes.
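The "window" idea can be sketched in a few lines. This example only counts which words appear near each other in a made-up three-sentence corpus; models like Word2Vec slide the same kind of window over text but train a small predictive network rather than storing raw counts. The corpus and the window size of 2 are assumptions for illustration.

```python
from collections import Counter, defaultdict

# Tiny hypothetical corpus; real training data runs to billions of sentences.
corpus = [
    "the playful puppy loves to fetch",
    "a small puppy is cute",
    "the puppy played fetch all day",
]
WINDOW = 2  # how many words to look at on each side of the target

cooccurrence = defaultdict(Counter)
for sentence in corpus:
    tokens = sentence.split()
    for i, target in enumerate(tokens):
        start = max(0, i - WINDOW)
        end = min(len(tokens), i + WINDOW + 1)
        for j in range(start, end):
            if j != i:  # skip the target word itself
                cooccurrence[target][tokens[j]] += 1

# Words that shared a window with "puppy", most frequent first.
print(cooccurrence["puppy"].most_common())
```

Even at this scale, "puppy" ends up associated with "playful," "small," "cute," and "fetch," while a word like "day" falls outside the window and never gets counted.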

The arrival of Word2Vec in 2013 was a genuine turning point. It proved that embeddings could be trained efficiently on huge datasets to capture incredibly nuanced relationships. Models trained on giant text collections, like the Google News dataset with its 100 billion words, hit over 70% accuracy on word analogy tasks. This breakthrough opened the floodgates for other powerful models and made pre-trained embeddings a staple for developers. You can dig deeper into the history and evolution of embedding models to see just how far things have come.

Unlocking Meaning with Vector Math

Here’s where it gets really interesting. The final vector space isn't just a random jumble of points; it's organized with a kind of semantic logic. This structure allows us to perform "vector arithmetic" to explore and uncover new relationships.

The classic example is the equation: vector('king') - vector('man') + vector('woman'). When you run this calculation, the resulting vector lands astonishingly close to the vector for 'queen'.

This isn't a parlor trick. It's the mathematical expression of the analogy "man is to woman as king is to queen." The AI didn't learn this from a grammar rulebook. It inferred the concept purely from observing patterns in human language. This ability to quantify and manipulate meaning is precisely what makes embeddings such a foundational building block for modern AI.
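Here is a toy version of that calculation. The vectors are hand-built so that one dimension loosely stands for "royalty," one for "gender," and one for "food" (an assumption made purely so the arithmetic is easy to follow; trained models do not have such tidy axes). As is usual for analogy lookups, the three input words are excluded from the candidates.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical vectors: (royalty, gender, food). Not from a trained model.
toy = {
    "king":    [0.9,  0.9, 0.0],
    "man":     [0.1,  0.9, 0.0],
    "woman":   [0.1, -0.9, 0.0],
    "queen":   [0.9, -0.9, 0.0],
    "cabbage": [0.0,  0.0, 1.0],
}

# king - man + woman, computed one dimension at a time.
target = [k - m + w for k, m, w in zip(toy["king"], toy["man"], toy["woman"])]

# Look for the nearest word, leaving out the words used in the calculation.
candidates = {word: vec for word, vec in toy.items() if word not in {"king", "man", "woman"}}
best = max(candidates, key=lambda word: cosine(target, candidates[word]))

print("nearest word:", best)
```

In a real model the result rarely lands exactly on "queen"; it lands in the neighborhood, and "queen" is simply the closest word there.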

Exploring Different Types of Embeddings

While word embeddings are a great starting point, the technology truly shines in its versatility. The core idea—turning complex data into meaningful numerical vectors—goes far beyond individual words. This is what allows AI to make sense of a much wider range of information and makes embeddings a cornerstone of modern AI.

After all, we don't just communicate in single words; we use full sentences. That's where sentence embeddings come into play. Instead of just mapping one word at a time, these models are trained to grasp the entire meaning of a sentence or even a whole paragraph. This leads to a much more nuanced understanding, powering things like semantic search, where you find documents based on the intent of your query, not just the keywords.

From Words to Products and Beyond

This same principle has been a game-changer for businesses. E-commerce platforms and streaming services use product and user embeddings to understand our preferences with uncanny accuracy.

- Spotify creates a vector for every song based on its audio profile and another vector representing your listening habits. When it suggests a new playlist, it's really just finding songs whose vectors are huddled close to your personal taste vector.

- Amazon does the same thing with products. It learns that people who buy a specific coffee maker also tend to look at certain coffee grinders, and it uses this knowledge to place those items near each other in its massive "product space."

This screenshot from a classic model called Word2Vec gives you a visual for how this works, showing the relationships it learned between countries and their capitals.

The model figured out these geographical and political connections just by analyzing text, grouping related concepts together. Now, just imagine applying that same kind of relational mapping to products, user profiles, or even images. The potential is huge.

Unifying Different Data Types

The most exciting development in this space right now is probably multimodal embeddings. These are models that can map completely different kinds of data into the same shared vector space.

A multimodal model can learn that an image of a golden retriever and the text description "a happy dog playing fetch" should be neighbors in its vector space. This is the magic behind searching your phone's photo library using natural language.

Bringing different data types together like this is a massive step toward building more intuitive and powerful AI systems. It’s what enables a single system to seamlessly connect information from text, images, and audio. To see how this all fits into the bigger picture, take a look at our guide to the different types of LLM that rely on these advanced embedding techniques.

Why Embeddings Are So Important for AI

Embeddings aren't just a clever bit of data science; they're the foundational shift that makes modern AI feel so intuitive and powerful. They crack one of the toughest nuts in artificial intelligence: how to make machines truly understand the messy, abstract, and nuanced world we live in. At their core, embeddings are all about turning complex data into a language computers can work with, and their importance really shines in three key areas: context, efficiency, and scalability.

Think about how search engines used to work. You'd search for "car," and the engine would just look for that exact word. It had no clue that "automobile" or "sedan" were related concepts. This is where embeddings changed the game. By capturing context and semantic meaning, they allow AI to understand the relationships between ideas. Instead of just matching keywords, the system grasps what you actually mean.

This is what separates a basic search tool from an AI that truly understands your intent.

Efficiency and Scalability

The second huge win is efficiency. Trying to teach a computer every word and its relationship to every other word using old-school methods would be a nightmare. The datasets would be massive and impossibly slow to process. Embeddings solve this by compressing all that complexity into compact numerical vectors.

This compression is the secret sauce. It allows computers to run sophisticated calculations on text, images, or audio with incredible speed. It’s why you can get instant language translations or spot-on product recommendations in real-time.

Finally, embeddings provide incredible scalability through transfer learning. An AI model doesn't need to learn an entire language from scratch for every new project. Developers can start with a pre-trained model that has already analyzed billions of web pages to learn the intricate relationships between words.

From there, they can fine-tune this existing model for a specific task, which saves a phenomenal amount of time and computing power. This is how a small team can build a sophisticated chatbot without needing the server farms of a tech giant. To see this in action, it's worth understanding how Large Language Models are transforming data teams.

The real-world impact is staggering. In machine translation, for instance, embeddings have boosted accuracy by up to 15% over older techniques. For sentiment analysis, models built on embeddings can reach over 90% accuracy. With the global market for these AI technologies projected to hit $43 billion by 2025, it's clear they are a cornerstone of modern innovation.

Real-World Examples of Embeddings in Action

While the idea of embeddings might seem abstract, you actually interact with them every single day. They are the silent workhorses behind many of the smartest features you rely on, turning the messy, complex world of human data into something a machine can genuinely understand. From the songs on your playlist to the answers you get from a search engine, embeddings are constantly working behind the scenes.

Think about how much search engines have improved over the years. A decade ago, if you searched for "how to fix a leaky faucet," you'd get pages that literally contained those exact words. Now, it's a completely different game.

Thanks to embeddings, a search engine grasps the semantic meaning of your query. It understands you're looking for "plumbing help" or "dripping tap repair" even if you didn't type those specific terms. This ability to understand context, not just keywords, is why search feels so much more intuitive today.
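A rough sketch of how a query can match a document without sharing a single keyword. It averages word vectors to get one vector per phrase, which is a crude baseline; production sentence-embedding models are trained end to end instead. The two-dimensional word vectors and the `embed` helper are assumptions invented for this example.

```python
import math

# Hypothetical word vectors: (plumbing-ness, cooking-ness). Made up by hand.
word_vectors = {
    "fix": [0.7, 0.2], "leaky": [0.9, 0.0], "faucet": [1.0, 0.1],
    "dripping": [0.9, 0.1], "tap": [1.0, 0.0], "repair": [0.8, 0.1],
    "chocolate": [0.0, 1.0], "cake": [0.1, 0.9], "recipe": [0.1, 0.8],
}

def embed(text):
    """Naive phrase embedding: the average of the phrase's word vectors."""
    vectors = [word_vectors[word] for word in text.lower().split()]
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

query = "fix leaky faucet"
documents = ["dripping tap repair", "chocolate cake recipe"]

# Keyword matching finds nothing in common with the plumbing document.
shared_words = set(query.split()) & set(documents[0].split())

# Ranking by vector similarity still puts it first.
query_vec = embed(query)
ranked = sorted(documents, key=lambda doc: cosine(query_vec, embed(doc)), reverse=True)

print("shared keywords:", shared_words)
print("top result:", ranked[0])
```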

Crafting Your Perfect Playlist

Spotify’s Discover Weekly playlist is a perfect case study. The magic behind that uncannily accurate playlist isn't just about tracking artists you like; it’s about understanding your musical DNA through embeddings.

Here’s a simplified look at how it works:

- Song Vectors: Every song in Spotify's massive catalog is analyzed and converted into its own embedding. This vector isn't random; it captures the song's core essence—its genre, tempo, mood, and even instrumentation.

- Your Taste Profile: As you listen, save songs, and skip others, Spotify builds a user embedding just for you. Think of this as the mathematical representation of your musical taste, an average of all the songs you enjoy.

- Finding New Music: To build your playlist, the algorithm searches for songs with embeddings that are mathematically close to your personal taste vector. It's like a matchmaker for music, and it’s incredibly effective.
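The three steps above can be sketched as follows. This is a simplified illustration of the idea, not Spotify's actual system: the song names, the two-number vectors, and the plain averaging of liked songs are all assumptions.

```python
import math

# Hypothetical song vectors: (energy, acousticness).
song_vectors = {
    "upbeat_pop_a": [0.90, 0.10],
    "upbeat_pop_b": [0.80, 0.20],
    "dance_track":  [0.95, 0.05],
    "quiet_folk":   [0.10, 0.90],
}
liked = ["upbeat_pop_a", "upbeat_pop_b"]

def mean_vector(vectors):
    """Average a list of equal-length vectors, one dimension at a time."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Taste profile: the average of the songs the listener liked.
taste = mean_vector([song_vectors[name] for name in liked])

# Recommend the closest song the listener has not heard yet.
unheard = [name for name in song_vectors if name not in liked]
recommendation = max(unheard, key=lambda name: cosine(taste, song_vectors[name]))

print("recommended:", recommendation)
```

A real system would also weigh skips, recency, and many other signals, but the core move is the same: find vectors near the listener's vector.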

This same principle is what powers recommendations on Netflix and product suggestions on Amazon, constantly mapping your preferences to find the next thing you’ll love.

Powering Smarter Conversations

Remember the early chatbots? They were notoriously rigid and often useless if you didn't ask your question in the exact way they expected. Embeddings have completely changed the game for conversational AI.

When you ask a chatbot, "My package hasn't arrived," it uses embeddings to understand that your query is semantically similar to "Where is my order?" or "delivery status." This allows it to provide a helpful answer instead of a generic "I don't understand."

This leap forward is what makes modern chatbots feel helpful instead of frustrating. A great real-world example is a vector embedding chatbot for WordPress, which relies on these techniques to provide far more accurate and context-aware support.

By understanding what a user truly means, these systems can resolve issues efficiently and dramatically improve the user experience. This contextual grasp is also key to making large language models more reliable. To learn more, check out our guide on how to reduce hallucinations in LLM.

Embeddings are not just a niche technology; their applications span countless fields. The table below highlights just a few of the ways different industries are putting them to work.

Applications of Embeddings Across Industries

| Industry | Application | Benefit |
|---|---|---|
| E-commerce | Product Recommendations | Creates personalized shopping experiences by suggesting items similar to a user's browsing history, increasing sales and engagement. |
| Healthcare | Medical Record Analysis | Identifies patterns in patient data and medical literature to help predict diseases and suggest potential treatments. |
| Finance | Fraud Detection | Analyzes transaction patterns to flag unusual or suspicious activity in real-time, preventing financial loss. |
| Entertainment | Content Discovery | Powers recommendation engines (e.g., Netflix, YouTube) by matching users with movies, shows, or videos based on their viewing habits. |
| Recruiting | Candidate Matching | Scans resumes and job descriptions to find the best-fit candidates based on skills and experience, not just keyword matches. |

From making our shopping carts smarter to helping doctors find life-saving information, the ability of embeddings to capture and compare meaning is what makes them such a fundamental building block of modern AI.

The Future of Embeddings in AI

The story of embeddings is still being written, and the next chapters are pushing the boundaries of what machines can truly understand. We're moving beyond the foundational models into an era where AI can grasp deeper context and integrate different kinds of data, making our interactions with it feel far more natural.

One of the biggest leaps forward has been the shift from static to contextual embeddings. Early models assigned a single, unchangeable vector to a word. The word "bank," for instance, got the same vector whether you were talking about a riverbank or a financial institution. This was a major blind spot.

Contextual models, like the kind powering BERT and modern GPT models, create embeddings on the fly. They look at the entire sentence to figure out what a word means in that specific situation. This dynamic approach is what allows AI to finally get a handle on nuance, sarcasm, and ambiguity.
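To get a feel for the difference, here is a deliberately crude toy. It nudges a word's static vector toward the average of its neighbors' vectors, so "bank" ends up in a different place depending on the sentence. Models like BERT and GPT achieve this with learned attention layers, not a fixed blend; the `contextual_vector` helper, the `mix` weight, and the vectors are all assumptions made for illustration.

```python
# Hypothetical static vectors: (finance, nature). One fixed vector per word.
static = {
    "bank":    [0.5, 0.5],
    "river":   [0.0, 1.0],
    "muddy":   [0.1, 0.9],
    "money":   [1.0, 0.0],
    "deposit": [0.9, 0.1],
}

def contextual_vector(word, sentence_words, mix=0.5):
    """Blend a word's static vector with the mean vector of its neighbours."""
    base = static[word]
    neighbours = [static[w] for w in sentence_words if w != word and w in static]
    if not neighbours:
        return base
    context = [sum(column) / len(neighbours) for column in zip(*neighbours)]
    return [(1 - mix) * b + mix * c for b, c in zip(base, context)]

river_bank = contextual_vector("bank", ["muddy", "river", "bank"])
money_bank = contextual_vector("bank", ["deposit", "money", "bank"])

print("static 'bank':       ", static["bank"])
print("'bank' near 'river': ", [round(x, 3) for x in river_bank])
print("'bank' near 'money': ", [round(x, 3) for x in money_bank])
```

The static lookup returns the same numbers every time, while the blended version leans toward "nature" in one sentence and "finance" in the other.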

It’s the difference between a clunky, literal translation and one that understands the spirit and slang of a language.

The Rise of Multimodal Understanding

The next frontier is what’s known as multimodal embeddings. The goal here is to create a single, unified map of meaning that connects completely different types of data. Imagine an AI that doesn't need one model for text and another for images but instead understands the inherent link between them.

This is the tech that lets a system recognize that a picture of a dog, the sound of a bark, and the word "canine" all refer to the same fundamental concept.

This unified understanding opens up some incredible possibilities:

- Cross-Modal Search: You could find a specific scene in a video library just by typing a detailed description of it, or even by using a sound clip as your search query.

- Richer Content Generation: An AI could compose a piece of music that perfectly captures the mood of a short story you fed it.

- Deeper Data Analysis: A system could analyze a company's financial reports while simultaneously processing the audio from its earnings call to develop a more holistic view of its performance.

As these more sophisticated methods become mainstream, embeddings are cementing their role as the bedrock of next-generation AI. They are the key to building machines that don't just compute data, but genuinely comprehend its meaning.

Frequently Asked Questions About Embeddings

Even after getting the basics down, a few practical questions always seem to pop up when you start working with embeddings. Let's tackle some of the most common ones to help fill in any gaps.

What’s the Difference Between Embeddings and One-Hot Encoding?

Think of one-hot encoding as the most basic, literal way to represent words. It creates a giant vector for your entire vocabulary and just flips a switch—a '1' for the word that’s present and '0' for everything else. It’s clunky and treats words like "dog" and "puppy" as if they have absolutely nothing in common.

Embeddings are far more sophisticated. Instead of a simple on/off switch, they place words on a complex map where proximity equals meaning. They create dense, multi-dimensional vectors that capture the rich, subtle relationships between concepts. It’s a much smarter and more efficient way for an AI to actually understand language.

Can Embeddings Be Used for Data Other Than Text?

Absolutely. Text is the classic example, but the real power of embeddings is their versatility. You can turn almost any complex data into a meaningful numerical vector. We see them used all the time for:

- Images: This is what powers visual search engines and image classification tools.

- Audio: It’s the magic behind music recommendations and speech recognition.

- Products: E-commerce sites use them to map out which items are similar or frequently bought together.

- Users: They can create a "profile vector" based on user behavior for incredibly accurate personalization.

This ability to represent different types of data is what makes embeddings such a cornerstone of modern AI.

Are Larger Embedding Dimensions Always Better?

Not necessarily. It’s tempting to think that a higher dimension (say, 768 versus 128) will always give you better results because it can capture more nuance, but there’s a catch. Bigger vectors mean more computational overhead—they demand more processing power, more memory, and more storage. For apps running on a phone, that’s a real problem.

The trick is to find the sweet spot. For huge, complex tasks with massive datasets, those higher dimensions might be worth it. But for simpler jobs or in environments where resources are tight, a smaller, more nimble embedding can often get the job done just as well.
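The storage side of that trade-off is simple arithmetic. This sketch assumes each number in a vector is stored as a 32-bit float (4 bytes) and ignores any index overhead; the one-million-vector collection is a hypothetical example.

```python
def index_size_mb(num_vectors, dimensions, bytes_per_value=4):
    """Raw storage for a set of embeddings, in megabytes (1 MB = 1,000,000 bytes)."""
    return num_vectors * dimensions * bytes_per_value / 1_000_000

small = index_size_mb(1_000_000, 128)
large = index_size_mb(1_000_000, 768)

print(f"128 dimensions: {small:,.0f} MB")
print(f"768 dimensions: {large:,.0f} MB")
print(f"ratio: {large / small:.0f}x")
```

For a million items, moving from 128 to 768 dimensions takes the raw vectors from roughly half a gigabyte to about three gigabytes, before counting the extra compute for every similarity comparison.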

How Are Embeddings Related to Large Language Models?

Embeddings are the front door for any Large Language Model (LLM) like the ones in the GPT family. Before an LLM can even begin to think about your prompt, it has to split your text into tokens and convert each token into an embedding. These numerical vectors are the only language the model's neural network truly understands.

Think of it this way: without high-quality embeddings, an LLM would be lost. It would have no way to grasp the meaning, context, or relationships in the text you give it. They are the essential bridge connecting our human language to the mathematical world of AI.
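In practice, that "front door" is a lookup table: text is split into tokens, each token maps to an ID, and each ID selects one row of numbers. The sketch below assumes a four-entry vocabulary, a whitespace tokenizer, and made-up table values; real LLMs use subword tokenizers and learn the table during training.

```python
# Hypothetical vocabulary: token -> ID. "<unk>" stands in for unknown words.
vocab = {"<unk>": 0, "what": 1, "are": 2, "embeddings": 3}

# Hypothetical embedding table: row i holds the vector for token ID i.
embedding_table = [
    [0.0, 0.0, 0.0],
    [0.2, -0.1, 0.4],
    [0.1, 0.3, -0.2],
    [0.7, 0.5, 0.1],
]

def encode(text):
    """Toy tokenizer: lowercase, split on whitespace, map each word to its ID."""
    return [vocab.get(token, vocab["<unk>"]) for token in text.lower().split()]

token_ids = encode("What are embeddings")
input_vectors = [embedding_table[token_id] for token_id in token_ids]

print("token IDs:", token_ids)
for token_id, vector in zip(token_ids, input_vectors):
    print(token_id, "->", vector)
```

Everything the model does afterward operates on these vectors, never on the original characters.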
