A Practical Guide to Wan2.2 GGUF Video Generation

So, what exactly is Wan2.2 GGUF? Put simply, it’s a powerful AI video generator that's been cleverly packaged to run on a regular home computer.

Think of it this way: Wan2.2 is the talented artist, the brain that knows how to turn your text or images into video. GGUF is the special, compact toolkit that makes this artist's studio small enough to fit on your desk.

What Are Wan2.2 and GGUF Anyway?

To really get a handle on Wan2.2 GGUF, you need to see it as two separate pieces that work together beautifully. Once you understand both parts, you’ll see why it’s catching on with so many creators and tech enthusiasts.

First up, there’s Wan2.2. This is the actual AI model, the "brain" of the operation. It's been trained to understand language and visuals, acting like a digital animator. You can feed it a text prompt—something like "a golden retriever chasing a red ball in a sunny park"—and it will spit out a short video that brings your description to life. It can also take a still photo and add subtle, realistic motion, a technique called image-to-video.

Then you have GGUF, a file format from the GGML/llama.cpp ecosystem (the name is often expanded as GPT-Generated Unified Format). This is the "box" that the Wan2.2 model comes in. AI models are typically massive, often requiring powerful, expensive servers to run. A GGUF file usually holds a quantized (compressed) copy of the model's weights, which shrinks the file dramatically while keeping most of the output quality. This compression is what makes it possible for you to run a sophisticated video AI right on your own PC or Mac.
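To make the "box" idea concrete, here is a minimal Python sketch that reads the fixed header at the start of a GGUF file. It assumes the layout from the GGUF specification for versions 2 and later: the magic bytes `GGUF`, a little-endian uint32 version, then uint64 counts of tensors and metadata entries. The function names are illustrative, and the filename in the usage comment is hypothetical.

```python
import struct

GGUF_MAGIC = b"GGUF"
HEADER_SIZE = 24  # 4-byte magic + uint32 version + two uint64 counts

def parse_gguf_header(raw):
    """Decode the fixed GGUF header from the first 24 bytes of a file."""
    if len(raw) < HEADER_SIZE or raw[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file (missing 'GGUF' magic bytes)")
    # '<' = little-endian, I = uint32, Q = uint64 (v2+ layout)
    version, tensor_count, kv_count = struct.unpack_from("<IQQ", raw, 4)
    return {
        "version": version,
        "tensor_count": tensor_count,
        "metadata_kv_count": kv_count,
    }

def read_gguf_header(path):
    """Read just the header; the tensor data after it is never loaded."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(HEADER_SIZE))

# Usage (hypothetical filename):
# print(read_gguf_header("wan2.2_model_Q4_K_M.gguf"))
```

Version 1 files used 32-bit counts, so this sketch only targets the newer layout.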

Let's break that down into a quick summary.

Wan2.2 GGUF At a Glance

Here’s a simple table that puts all the key pieces together.

| Component | Description | What It Means For You |
|---|---|---|
| Wan2.2 | The core AI model that generates video from text or images. | This is the creative engine that understands your ideas and turns them into motion. |
| GGUF | An efficient file format that stores a compressed (quantized) copy of the model. | This format makes the model small enough to run on your personal computer, not a server farm. |
| MoE Architecture | A design that hands different stages of video generation to specialized "expert" sub-models. | You get better quality and more complex video generation without a matching jump in compute. |
| Local Inference | The ability to run the model directly on your own hardware. | You get privacy, no cloud fees, and complete control over the creative process. |

Essentially, Wan2.2 GGUF is all about bringing high-powered AI video creation out of the data center and into your home studio.

The Secret Sauce: Its Architecture

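What makes Wan2.2 so good at its job is a design known as a Mixture-of-Experts (MoE) architecture. Instead of one monolithic model handling every step, the larger Wan2.2 models (the A14B series) split video generation between two specialized experts. A "high-noise" expert handles the early denoising steps, where the overall layout and motion of the scene are decided, and a "low-noise" expert takes over for the later steps to refine fine detail. Each expert has about 14 billion parameters.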

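Because only one expert runs at each denoising step, the model holds about 27 billion parameters in total but uses only about 14 billion per step, keeping the computation per step close to that of a single 14B model. Alongside the MoE models, the compact TI2V-5B variant generates high-definition 720p video at a smooth 24 frames per second (fps). It's a smarter, more efficient way to build a powerful AI, and a great example of the leading AI tools for content creators that are making professional-level effects more accessible.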
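Here is a toy Python sketch of Wan2.2's two-expert hand-off (a high-noise expert for early denoising steps, a low-noise expert for later ones). The fixed `switch_fraction` is a hypothetical stand-in: Wan2.2 actually switches experts at a point tied to the signal-to-noise ratio during denoising, and the real experts are full neural networks rather than labels.

```python
def pick_expert(step, total_steps, switch_fraction=0.5):
    """Return which expert handles a given denoising step.

    Early (noisy) steps go to the layout expert, later steps to the
    detail expert. switch_fraction is an illustrative assumption.
    """
    return "high_noise" if step < switch_fraction * total_steps else "low_noise"

def expert_schedule(total_steps, switch_fraction=0.5):
    """List the expert used at every step; exactly one runs per step."""
    return [pick_expert(s, total_steps, switch_fraction) for s in range(total_steps)]

if __name__ == "__main__":
    print(expert_schedule(8))
```

Because each step calls exactly one expert, the per-step cost stays at one expert's size even though the checkpoint holds both.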

What This Means For You

The combination of the Wan2.2 model and the GGUF format opens up some exciting doors for anyone interested in creating video content.

- No Supercomputer Needed: You can finally jump into AI video generation without needing a massive budget or specialized hardware.

- Total Creative Freedom: When you run a model locally, you call the shots. You can tweak prompts, experiment endlessly, and keep everything private on your own machine.

- It’s Way Cheaper: Forget about paying for every second of video you generate on a cloud service. Once you have the model, you can create as much as you want.

This technology is a big step forward from what was available just a short time ago. If you’re curious about its lineage, check out our previous write-up on the Wan 2.1 VACE model to see just how quickly things are evolving.

What Makes a Powerful Model Like This Run on My PC?


What lets a beefy model like Wan2.2 GGUF run on a regular home computer is the GGUF format itself. GGUF is a clever way to package and shrink a huge, power-hungry AI model into something much more manageable, a bit like how a well-compressed video file keeps most of its picture quality at a fraction of the size of the raw footage.

This is what opens the door for hobbyists, creators, and developers to experiment with high-end AI without needing a datacenter in their basement. At 16-bit precision, the weights of a single 14-billion-parameter expert take about 28 GB (14 billion × 2 bytes), more VRAM than most consumer GPUs have. Compressed formats like GGUF are the crucial link between bleeding-edge AI research and what we can actually use every day.

So, how does it pull this off? It really comes down to two smart techniques working together.

The Magic of Quantization

The main trick up GGUF’s sleeve is something called quantization. Think of it like this: imagine you have a digital photo with millions of colors. Quantization is like telling the photo to use a smaller, more efficient palette. The picture still looks fantastic, but the file size drops dramatically.

In AI, models store their "knowledge" as millions or billions of numbers (weights), usually as 16-bit floating-point values. Quantization reduces the precision of those numbers, storing them in 8, 5, 4, or even fewer bits. This one change massively shrinks the model's file size and, just as importantly, reduces the amount of VRAM (video memory) it needs to operate.
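The sketch below shows the basic idea in plain Python: a block of weights is stored as small integers plus one shared scale factor. It is close in spirit to GGUF's simplest 8-bit type (Q8_0), but it is a simplified illustration; real GGUF types use fixed block sizes, packed bit layouts and, for the K-quants, extra per-block scales.

```python
def quantize_block(values, bits=8):
    """Symmetric absmax quantization of one block of weights."""
    qmax = 2 ** (bits - 1) - 1          # 127 for 8-bit, 7 for 4-bit
    absmax = max(abs(v) for v in values)
    scale = absmax / qmax if absmax > 0 else 1.0
    # Each weight becomes a small integer; only `scale` stays a float.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, q

def dequantize_block(scale, q):
    """Recover approximate weights from the integers and the shared scale."""
    return [scale * x for x in q]

if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.01]
    scale, q = quantize_block(weights, bits=4)
    print(q, dequantize_block(scale, q))
```

With 4 bits, every weight lands within half a scale step of its original value; that lost precision is the price of the smaller file.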

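You’ll see different quantization levels, like Q3_K_S or Q4_K_M. The number is roughly the bits stored per weight, "K" marks llama.cpp's "k-quant" family, and the S/M/L suffix indicates a small, medium, or large mix (how many sensitive tensors get extra precision). These let you pick the right balance between video quality and resource use for your hardware. A lower number means a smaller model that needs less VRAM, while a higher one keeps more detail but uses more resources.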

This flexibility is a game-changer. It means someone with a brand new RTX 4090 can run a high-quality version of Wan2.2 GGUF, while someone with an older graphics card can still get in on the action with a more compressed version.

Smartly Sharing the Load with CPU Offloading

The other key feature is GGUF's ability to offload layers to the CPU. Your computer has two main brains: the GPU, which is a beast at parallel processing tasks like AI, and the CPU, which handles everything else.

In a perfect world, the whole AI model would live on the GPU's super-fast VRAM. The problem is, consumer GPUs have a limited amount, usually somewhere between 8GB and 24GB. When a model is too big to fit, GGUF-aware tools can "offload" part of it to your computer's regular RAM, either running those layers on the CPU or moving them onto the GPU only when they are needed.

It’s definitely slower than running everything on the GPU, but it’s a brilliant workaround that stops the program from crashing with an "out of memory" error. Think of it as a safety valve that lets you run models that are technically too big for your GPU by splitting the work between both processors.

Setting Up Your Local Video Generation Studio

Before you can bring your first video to life with Wan2.2 GGUF, you’ll need to get your computer ready. Think of it like setting up your own digital art studio—you need a solid easel (your hardware) and the right set of brushes (the software) before you can start painting.

Getting this foundation right from the start will save you a world of headaches later. The whole point is to build a reliable setup so you can pour your energy into the creative side of things, not troubleshooting.

Checking Your Hardware Essentials

First things first, let's talk about your computer's hardware. Even though the GGUF format makes these models much more accessible, a powerful machine still makes a night-and-day difference.

For a smooth experience, a modern NVIDIA RTX GPU with at least 8GB of VRAM is what you should aim for. A card like the RTX 3060 (which comes with 12GB) or anything in the RTX 40 series is a fantastic place to start. The more VRAM you have, the longer and more detailed your videos can be without your system grinding to a halt. You can technically run this on a CPU, but trust me, it will feel like watching paint dry.
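If you're not sure how much VRAM your NVIDIA card has, the `nvidia-smi` tool that ships with the driver can tell you. This Python sketch calls it with its CSV query options and parses the result; the 8 GB threshold is the guideline from this section, and the script simply reports nothing on machines without `nvidia-smi`.

```python
import shutil
import subprocess

def parse_gpu_query(output):
    """Parse nvidia-smi CSV lines like 'NVIDIA GeForce RTX 3060, 12288' (MiB)."""
    gpus = []
    for line in output.strip().splitlines():
        name, mem_mib = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mem_mib) / 1024))  # MiB -> GiB
    return gpus

def query_gpus():
    """Return [(name, vram_gib), ...], or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_query(result.stdout)

if __name__ == "__main__":
    gpus = query_gpus()
    if not gpus:
        print("No NVIDIA GPU detected via nvidia-smi.")
    for name, gib in gpus:
        verdict = "meets" if gib >= 8 else "is below"
        print(f"{name}: {gib:.1f} GiB VRAM ({verdict} the 8 GB guideline)")
```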

Key Takeaway: Your GPU is the star of the show here. The amount of VRAM it has directly limits the complexity of the videos you can generate. More VRAM gives you more creative freedom.

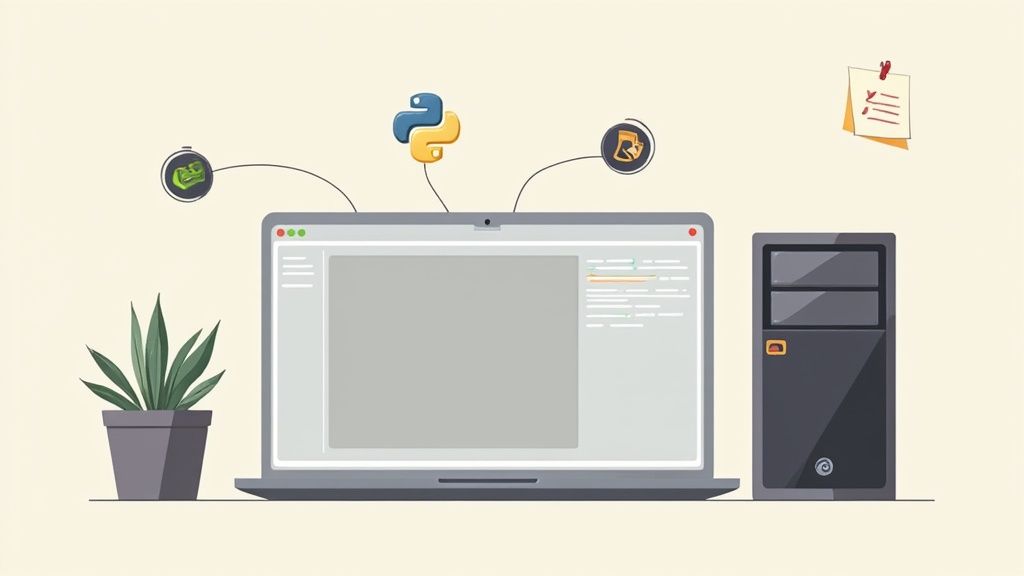

Installing the Core Software Tools

Once your hardware is sorted, it's time to install the software that makes everything tick. These are the behind-the-scenes tools that will handle the AI model.

You'll need two essential programs:

- Python: This is the language that fuels most AI development. Just make sure you have a relatively recent version installed, like Python 3.10 or newer.

- Git: This tool is the standard for downloading code directly from online repositories like GitHub. It’s how you’ll grab the latest version of projects like ComfyUI and its extensions.
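A quick way to confirm both tools are ready is a tiny Python check like the one below. The 3.10 minimum comes from the guideline above; the script only inspects the interpreter it runs under and whether a `git` executable is on your PATH.

```python
import shutil
import sys

MIN_PYTHON = (3, 10)  # minimum version suggested in this guide

def python_ok(version_info=sys.version_info, minimum=MIN_PYTHON):
    """True if the (major, minor) version meets the minimum."""
    return tuple(version_info[:2]) >= minimum

def git_available():
    """True if a `git` executable can be found on PATH."""
    return shutil.which("git") is not None

if __name__ == "__main__":
    version = ".".join(str(part) for part in sys.version_info[:3])
    print(f"Python {version}: {'OK' if python_ok() else 'upgrade to 3.10 or newer'}")
    print(f"Git: {'found' if git_available() else 'not found on PATH'}")
```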

Getting these installed is pretty simple on any operating system. Windows users can grab installers from the official websites, while macOS and Linux folks can use package managers like Homebrew or APT. If you've ever set up other AI tools, this process will feel familiar; our guide on the Applio voice cloning tool walks through a very similar initial setup.

Setting Up Your Inference Engine

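Alright, now for the main event: installing the program that will actually run the Wan2.2 GGUF file. One of the most popular choices for video is ComfyUI, a node-based, open-source app for diffusion models, paired with the ComfyUI-GGUF extension (by city96), which adds loaders for GGUF-quantized models.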

Here’s a quick rundown of how to get it going:

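- Clone the Repositories: Pop open your terminal or command line and use Git to download the ComfyUI source code, then clone the ComfyUI-GGUF extension into ComfyUI's custom_nodes folder.

- Install the Dependencies: Install a PyTorch build that matches your GPU (a CUDA build for NVIDIA cards), then run pip install -r requirements.txt inside both the ComfyUI and ComfyUI-GGUF folders. The extension's requirements include the gguf Python package.

- Add the Model and Launch: Put your Wan2.2 GGUF file in ComfyUI's models/unet folder, start ComfyUI with python main.py, and load the file with the "Unet Loader (GGUF)" node. A Wan2.2 workflow also needs the matching text encoder and VAE files.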

With the dependencies installed and the model in place, your local studio is officially open for business! While Wan2.2 GGUF is a powerful tool, it's always smart to keep an eye on other AI video generation platforms to have a well-rounded creative arsenal.
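If you use ComfyUI with the ComfyUI-GGUF extension, it can help to confirm before launching that your model file is where the loader will look. This sketch lists the `.gguf` files in the model folders; it assumes the `models/unet` folder named in the ComfyUI-GGUF instructions, plus `models/diffusion_models`, which newer ComfyUI versions also use. Adjust the paths if your install differs.

```python
from pathlib import Path

# Assumed model folders, relative to the ComfyUI install directory.
MODEL_SUBFOLDERS = ("models/unet", "models/diffusion_models")

def find_gguf_models(comfy_root):
    """Return the names of .gguf files found in the assumed model folders."""
    root = Path(comfy_root)
    found = []
    for sub in MODEL_SUBFOLDERS:
        folder = root / sub
        if folder.is_dir():
            found.extend(sorted(p.name for p in folder.glob("*.gguf")))
    return found

if __name__ == "__main__":
    models = find_gguf_models("ComfyUI")  # path to your ComfyUI folder
    print(models or "No .gguf files found; check where you saved the model.")
```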

Generating Your First AI Video with Wan2.2

Alright, you've got your local setup ready to go. Now for the fun part: making your first video. We'll walk through the process of generating video with Wan2.2 GGUF, starting with a hands-on command-line approach before moving to some easier-to-use graphical tools. By the end, you'll be ready to start experimenting on your own.

The whole process might look a little technical at first glance, but it really boils down to three simple steps: load the model, write a clear instruction (your prompt), and choose your output settings. It doesn't matter if you're a command-line pro or prefer a point-and-click interface—the core idea is exactly the same.

Starting with the Command Line

If you like being in direct control, driving the Wan2.2 GGUF model from a terminal or a script is the most direct way to work with it. You'll be typing commands and settings by hand, which gives you a transparent, under-the-hood view of what the AI is doing.

A typical command just needs to tell the program a few key things:

- Which Model to Use: You’ll simply point it to the GGUF file you downloaded earlier.

- Your Prompt: This is your creative direction, like "a majestic eagle soaring over a mountain range at sunset."

- Essential Settings: You'll also need to specify parameters like how many frames to generate and the video resolution.
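Frame count and clip length are easy to sanity-check in code. This sketch assumes the convention Wan models are commonly run with: frame counts of the form 4n + 1 (such as 81 or 121), because the video VAE compresses time by a factor of four. The helper names are hypothetical, so check your tool's defaults.

```python
def nearest_valid_frames(requested):
    """Snap a requested frame count to the nearest value of the form 4n + 1."""
    n = max(0, round((requested - 1) / 4))
    return 4 * n + 1

def clip_seconds(frames, fps):
    """Length of the finished clip in seconds."""
    return frames / fps

if __name__ == "__main__":
    frames = nearest_valid_frames(120)  # -> 121
    print(frames, f"{clip_seconds(frames, 24):.2f} s at 24 fps")
```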

Sure, it doesn't have a fancy visual interface, but this method is incredibly powerful and efficient. It's perfect for scripting or automating video creation once you're comfortable with it. Think of it as the purest way to communicate with the model.
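As one concrete example of scripting, ComfyUI (a popular node-based app that runs GGUF video models through the ComfyUI-GGUF extension) accepts jobs over a local HTTP API. This sketch posts a workflow, exported from ComfyUI in its API format, to the `/prompt` endpoint on ComfyUI's default local address. The node ID and filename in the usage comments are hypothetical; they depend on your exported workflow.

```python
import json
import urllib.request

COMFY_URL = "http://127.0.0.1:8188"  # ComfyUI's default local address

def build_prompt_request(workflow, server=COMFY_URL):
    """Wrap an API-format workflow in the JSON body the /prompt endpoint expects."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def queue_prompt(workflow, server=COMFY_URL):
    """Send the job to a running ComfyUI and return its JSON reply."""
    with urllib.request.urlopen(build_prompt_request(workflow, server)) as resp:
        return json.loads(resp.read())

# Usage (requires a running ComfyUI; node "6" is a hypothetical prompt node):
# with open("wan22_workflow_api.json") as f:
#     workflow = json.load(f)
# workflow["6"]["inputs"]["text"] = "a majestic eagle soaring over a mountain range at sunset"
# print(queue_prompt(workflow))
```

Looping over a list of prompts and calling `queue_prompt` for each is all it takes to batch up an evening's worth of renders.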

Using a Friendly Interface like ComfyUI

Feeling a bit overwhelmed by the command line? No problem. Popular desktop apps for GGUF models, such as LM Studio and Jan, are built for text models and can't generate video, but ComfyUI gives you a visual, node-based interface in your web browser instead. Ready-made workflow templates wire up the model loaders, prompt boxes, and video output for you.

With ComfyUI, the process is as simple as picking your Wan2.2 GGUF file in a loader node, typing your prompt into a text box, and tweaking settings like resolution, frame count, and sampling steps. It transforms a technical task into a creative one, letting you focus on your vision instead of the code.

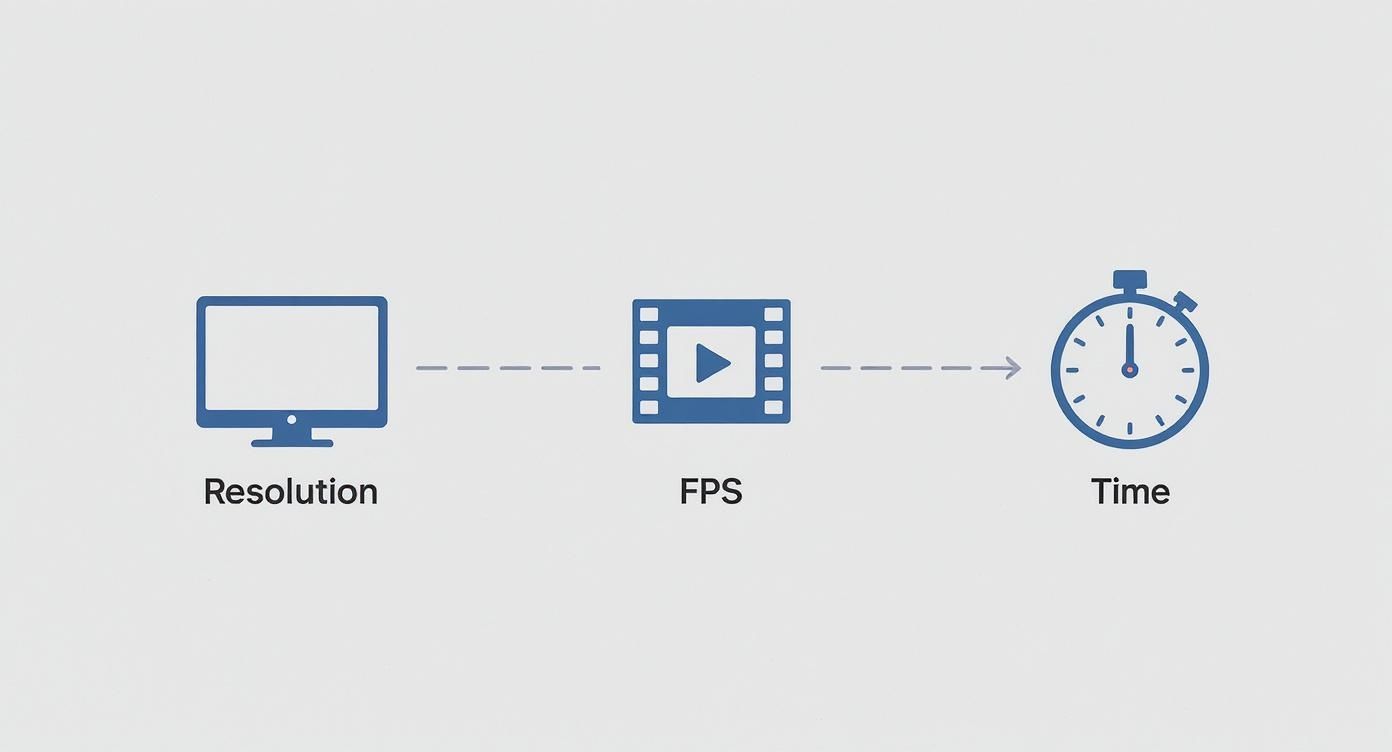

Wan2.2’s ability to run on home computers marks a huge step forward for AI video. The compact TI2V-5B model creates 720p videos at 24fps on a single consumer GPU like the NVIDIA RTX 4090 (24GB), and GGUF quantizations help bring the Wan2.2 models within reach of cards with 8 to 12 GB of VRAM. In real-world terms, you can generate a five-second, high-quality video from an image or text prompt in under 10 minutes on a card like the 4090. This opens the door for hobbyists and pros alike to make cinematic videos without needing expensive cloud servers. You can learn more about the Wan2.2 GGUF model's capabilities and its performance metrics.

Crafting Your First Prompts

The magic is in the prompt. The quality of your video is a direct reflection of how well you describe what you want. A fuzzy prompt leads to a fuzzy video. The key is to be specific and descriptive to really guide the AI toward the scene you have in your head.

Here are a few examples to get your creativity flowing:

- Simple: "A cat sleeping on a sunny windowsill."

- More Detailed: "A fluffy orange tabby cat, sleeping peacefully on a white windowsill, dust motes dancing in the warm afternoon sunlight."

- Adding Motion: "A majestic eagle soaring gracefully, its wings catching the wind as it circles high above a snow-capped mountain range."
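If you write many prompts, a small helper can keep them consistently structured. This is a hypothetical convention, not something Wan2.2 requires: it simply joins subject, action, setting, lighting, and camera notes into one comma-separated description.

```python
def build_prompt(subject, action="", setting="", lighting="", camera=""):
    """Join the non-empty parts of a scene description into one prompt."""
    parts = [subject, action, setting, lighting, camera]
    return ", ".join(p.strip() for p in parts if p and p.strip())

if __name__ == "__main__":
    print(build_prompt(
        "A fluffy orange tabby cat",
        "sleeping peacefully",
        "on a white windowsill",
        "dust motes dancing in the warm afternoon sunlight",
    ))
```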

Learning to write great prompts is a skill, and it's one of the most powerful you can develop in AI. If you want to go deeper, check out our guide on mastering prompt optimization to unlock AI’s full potential. It’s a great resource for taking your creative control to the next level.

How to Optimize Performance for Your PC

Getting the wan2.2 gguf model to run smoothly on your machine is all about finding that sweet spot between quality and speed. It's a bit like tuning an instrument—a few adjustments can make all the difference, letting you generate incredible content without your computer grinding to a halt.

We'll focus on two main techniques that unlock this performance: choosing the right quantization level and using GPU layer offloading. Let's break down what these mean in simple terms.

Understanding Quantization Levels

So, what's with all those different file versions like Q3_K_S or Q4_K_M? This is all about quantization, which is the secret sauce that makes massive AI models fit onto regular computers.

As a quick recap: just as shrinking a photo's color palette keeps the picture recognizable at a fraction of the file size, quantization reduces the numerical precision inside the AI model, making it smaller and lighter on VRAM without sacrificing too much quality.

Here’s a simple rule to remember:

- Lower Q numbers (Q2, Q3): These are the smallest versions. They use the least VRAM, making them great for older hardware, but expect some loss in output quality, which becomes noticeable at Q2.

- Higher Q numbers (Q5, Q8): These offer the best quality because they keep more of the original model's detail. The trade-off? They're much larger and demand a powerful GPU with plenty of VRAM.

For most people, a middle-of-the-road option like Q4_K_M is the perfect starting point. It strikes an excellent balance, giving you great results without needing a supercomputer.

Choosing the right quantization level is the first and most important step in tailoring the model to your specific hardware. Below is a table that breaks down what you can expect from the most common options.

Wan2.2 GGUF Quantization Levels Compared

| Quantization Level | Typical File Size | VRAM Usage | Quality vs. Performance Trade-off |
|---|---|---|---|
| Q2_K | Smallest | Lowest | Lightest option, but with noticeable quality loss. Best for low-VRAM systems or testing. |
| Q3_K_M | Small | Low | A good compromise for older hardware. Lighter than Q4, with some minor quality reduction. |
| Q4_K_M | Medium | Moderate | The recommended "sweet spot" for most users. Great balance of quality, speed, and VRAM needs. |
| Q5_K_M | Large | High | Excellent quality with minimal loss. Ideal for powerful GPUs with 12GB+ of VRAM. |
| Q8_0 | Largest | Very High | Near-lossless quality, but it needs the most memory and slows down sharply if it has to be offloaded. Mostly used for evaluation or on high-end hardware. |

As you can see, the choice directly impacts your experience. Start with Q4_K_M and see how your system handles it, then adjust up or down from there.
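To put rough numbers on the "Typical File Size" column, multiply the parameter count by the bits stored per weight. The values below are the per-weight costs of llama.cpp's base block types; the _S and _M variants mix in some higher-precision tensors, and converters may keep a few layers at 16 or 32 bits, so real files run somewhat larger. Treat the output as a ballpark, not a spec.

```python
# Approximate storage cost per weight for llama.cpp's base GGUF block types,
# including each block's scale factors (e.g. Q8_0: 34 bytes per 32 weights).
BITS_PER_WEIGHT = {
    "F16": 16.0,
    "Q8_0": 8.5,
    "Q6_K": 6.5625,
    "Q5_K": 5.5,
    "Q4_K": 4.5,
    "Q3_K": 3.4375,
    "Q2_K": 2.625,
}

def estimate_size_gb(num_params, quant):
    """Rough file size in GB (10**9 bytes), ignoring metadata and mixed layers."""
    return num_params * BITS_PER_WEIGHT[quant] / 8 / 1e9

if __name__ == "__main__":
    for quant in BITS_PER_WEIGHT:
        print(f"{quant:>5}: ~{estimate_size_gb(14e9, quant):.1f} GB for 14B parameters")
```

For a single 14-billion-parameter expert this gives about 28 GB at F16 and roughly 7.9 GB at Q4_K. The Wan2.2 A14B models come as two experts (high-noise and low-noise), so budget disk space for both files.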

Balancing the Load with GPU Offloading

Even with the right file, you might still hit that dreaded "out of memory" error. That's where GPU layer offloading comes to the rescue. It's a clever feature that lets your system share the workload between your super-fast GPU and your computer's regular RAM and CPU.

Imagine your GPU is a small, high-tech workshop, and your CPU and RAM are a bigger, slower warehouse. If a task is too large for the workshop, you can send some of the less critical parts to the warehouse. It takes a bit longer, but it means the job actually gets done.

In practice, many tools let you control how much of the model stays in your GPU's VRAM; some take an explicit number of "layers" to place on the GPU, while others (ComfyUI among them) manage this automatically. For instance, with an NVIDIA RTX 3060 and its 12GB of VRAM, a Q4 model may fit entirely on the GPU for maximum speed. On a card with only 8GB, more of the model ends up in system RAM, which is slower but avoids an out-of-memory crash.
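For tools that take an explicit layer count, a back-of-the-envelope calculation gives you a starting value. Every number here is an assumption for illustration: equal-sized layers, a hypothetical 40-layer model at 0.25 GB per layer, and 2 GB kept free for activations and other overhead, which grows with video resolution and length.

```python
def layers_that_fit(n_layers, layer_gb, vram_gb, reserve_gb=2.0):
    """Estimate how many equally sized layers fit on the GPU.

    reserve_gb is VRAM kept free for activations and other overhead;
    the default is an illustrative assumption, not a measured value.
    """
    budget = max(0.0, vram_gb - reserve_gb)
    return min(n_layers, int(budget // layer_gb))

if __name__ == "__main__":
    # Hypothetical model: 40 layers of 0.25 GB each (10 GB total).
    for vram in (8, 12, 24):
        on_gpu = layers_that_fit(40, 0.25, vram)
        print(f"{vram} GB VRAM: {on_gpu} layers on GPU, {40 - on_gpu} in system RAM")
```

In this example a 12 GB card holds all 40 layers, while an 8 GB card holds 24 and leaves the remaining 16 in system RAM. If you still hit out-of-memory errors, lower the count a few layers at a time.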

The infographic below shows how all these settings tie together, affecting the final generation time based on video resolution and frame rate.

Ultimately, finding the right mix of quantization and GPU offloading is a bit of an experiment. By tweaking these settings, you can dial in the perfect configuration to get the best possible videos your PC is capable of producing.

Got Questions About Wan2.2 GGUF?

As you start experimenting with local AI video, you're bound to have some questions. Let's tackle a few of the most common ones that come up when working with the Wan2.2 GGUF model to clear up any confusion and get you on the right track.

Can I Run Wan2.2 GGUF Without a Powerful GPU?

You can, but it's not going to be fun. The beauty of the GGUF format is that it allows the model to run on a CPU, which is a huge plus for accessibility. However, generating video without a GPU will be painfully slow.

A clip that might take a few minutes on a good graphics card could easily stretch into hours on a CPU alone. For any kind of practical workflow, you'll really want a dedicated GPU with at least 8GB of VRAM. Even if you have a mid-range card, features like GPU layer offloading can make a big difference, but the GPU is still the star of the show here.

What’s the Difference Between Text-to-Video and Image-to-Video?

Both make videos, but they start from different places and are used for different things. Nailing this difference is key to getting the results you want.

- Text-to-Video (T2V): This is where you create a video from nothing but words. You write a prompt describing a scene, and the AI builds it from scratch. It’s perfect for when you have a totally new idea you want to bring to life.

- Image-to-Video (I2V): Here, you give the AI a starting image and it animates it based on your prompt. This is fantastic for adding motion to static art or making sure a character's look stays consistent.

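The Wan2.2 family handles both: the compact TI2V-5B model does text-to-video and image-to-video in one file, while the 14B line has separate T2V and I2V models. That makes it a really flexible tool for all sorts of creative projects.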

A Quick Reminder on Licensing: Always double-check the license for the specific model file you download. While many open-source models have permissive licenses like Apache 2.0 that are fine for commercial work, you have to confirm this on the model's Hugging Face page before using your creations in a business setting.

Where Can I Safely Download Wan2.2 GGUF Files?

The one-stop shop for GGUF models is Hugging Face. Think of it as the main hub for the entire open-source AI community, making it the most reliable place to find what you need.

When you're there, stick to downloads from well-known creators or organizations. Always read the model card—the README file on the model's page—for instructions, license info, and any quirks. It's best to avoid grabbing models from random forums or unverified links to keep your system safe.
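Hugging Face lists a SHA256 checksum for large files stored with Git LFS, so you can confirm a multi-gigabyte download arrived intact. This sketch hashes the file in 1 MiB chunks so it never loads the whole model into memory; the expected hash is whatever the file's Hugging Face page shows.

```python
import hashlib

def sha256_of(path, chunk_size=1 << 20):
    """Hash a (possibly multi-gigabyte) file in 1 MiB chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def verify_download(path, expected_sha256):
    """True if the file's hash matches the checksum copied from the model page."""
    return sha256_of(path) == expected_sha256.strip().lower()
```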

At Promptaa, we know that a great prompt is the secret to unlocking an AI’s true power. Our platform is designed to help you build, organize, and perfect your prompts for incredible results, every single time. Take charge of your creative vision by visiting Promptaa's official website and discover how better prompts lead to better content.