A Guide to the WAN2.1 VACE AI Video Model

Ever come across a tool that just changes how you approach a creative task? That's what WAN2.1 VACE is for video. It's an open-source AI model from Alibaba that bundles video generation, animation, and editing into one surprisingly easy-to-use package.

The real magic here is that it was designed to run on the kind of hardware most people actually own, not just on supercomputers in a lab.

What Is the WAN2.1 VACE AI Model

Think of WAN2.1 VACE as your personal video creation assistant. It's not a one-trick pony; it's a complete toolkit that can handle almost anything you throw at it, from creating a video from scratch to tweaking existing footage.

Most earlier models forced you to use different tools for different jobs. You'd have one for generating clips, another for animating photos, and yet another for editing. This model brings all that under one roof. To get a feel for what’s happening under the hood, it helps to understand the basics of AI video generation software. The unified approach is a big deal because it makes the whole process feel less clunky and more intuitive.

A Unified System for Video Creation

At its core, WAN2.1 VACE is built for both power and accessibility. Its standout feature is its ability to run smoothly on consumer-grade GPUs. This is a huge step forward, as advanced video AI has traditionally demanded expensive, specialized hardware, putting it out of reach for independent creators and small teams.

By lowering the barrier to entry, it opens up a world of possibilities for everyone from social media creators to indie filmmakers. It brings together several key functions:

- Text-to-Video: Write a description, and the model turns your words into a video clip.

- Image-to-Video: Take a still photo and bring it to life with motion.

- Video Editing: Remove an object, swap out a background, or alter parts of an existing video with simple commands.

The big idea with WAN2.1 VACE is to make powerful video tools available to everyone. By making it open-source and hardware-friendly, it gives professional-level creative capabilities to anyone with a vision.

Released by Alibaba in 2025, the model's architecture is what allows it to produce high-quality results. Its video VAE can encode and decode footage at up to 1080P, while generation itself is typically run at 480P or 720P. It's also flexible, accepting text, images, and masks for fine-tuned control. For instance, the smallest model in the Wan2.1 family uses about 8.19 GB of VRAM to generate a 5-second, 480P video in around four minutes on an RTX 4090, which is pretty efficient for such a complex system. If you want to dive into the code and see it for yourself, check out the official Wan2.1 repository.

How the WAN2.1 VACE Architecture Works

To really get what makes the WAN2.1 VACE model tick, you have to look under the hood at its design. It's built to see video not just as a pile of separate images, but as a single, flowing story. Think of it like a flipbook, where each page only makes sense in relation to the pages around it.

This method ensures every new frame connects logically to the one before it, which is the key to creating smooth, consistent motion. Older models often had trouble with this, leading to jittery videos or objects that seemed to morph and shift from one moment to the next. WAN2.1 VACE was specifically engineered to fix that problem.

Understanding the Spatio-Temporal Design

The secret sauce is its spatio-temporal architecture. Picture an artist painting a series of canvases. Instead of starting fresh each time, this artist remembers every detail from the previous paintings. This "memory" helps them make sure the next canvas logically continues the story, keeping the characters, background, and movement consistent.

That’s basically how WAN2.1 VACE works. It considers both space (the "spatio" part, or what's inside a single frame) and time (the "temporal" part, or how frames link together). By looking at video this way, it's much better at creating longer, more coherent clips that just feel right. This is a game-changer for producing believable animations and edits.
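To make the "temporal" half of that idea concrete, here is a minimal sketch of a flicker score: the mean absolute per-pixel change between consecutive frames. This is not how WAN2.1 VACE works internally, and the `flicker_score` helper and the tiny sample clips are made up for illustration. It is just one rough way to put a number on how smoothly a clip moves from frame to frame.

```python
from typing import List

Frame = List[List[float]]  # one grayscale frame: rows of pixel values in [0, 1]


def flicker_score(frames: List[Frame]) -> float:
    """Mean absolute per-pixel change between consecutive frames.

    Lower values mean smoother motion; 0.0 means every frame is identical.
    """
    total = 0.0
    count = 0
    for prev, curr in zip(frames, frames[1:]):
        for row_prev, row_curr in zip(prev, curr):
            for a, b in zip(row_prev, row_curr):
                total += abs(a - b)
                count += 1
    return total / count if count else 0.0


# A tiny 2x2 "video": a smooth fade versus a clip that flips between extremes.
smooth = [[[0.0, 0.0], [0.0, 0.0]], [[0.1, 0.1], [0.1, 0.1]], [[0.2, 0.2], [0.2, 0.2]]]
jittery = [[[0.0, 0.0], [0.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]], [[0.0, 0.0], [0.0, 0.0]]]

print(flicker_score(smooth) < flicker_score(jittery))  # prints True
```

A real evaluation would also need to tell intentional motion apart from unwanted jitter, which this simple score does not attempt.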

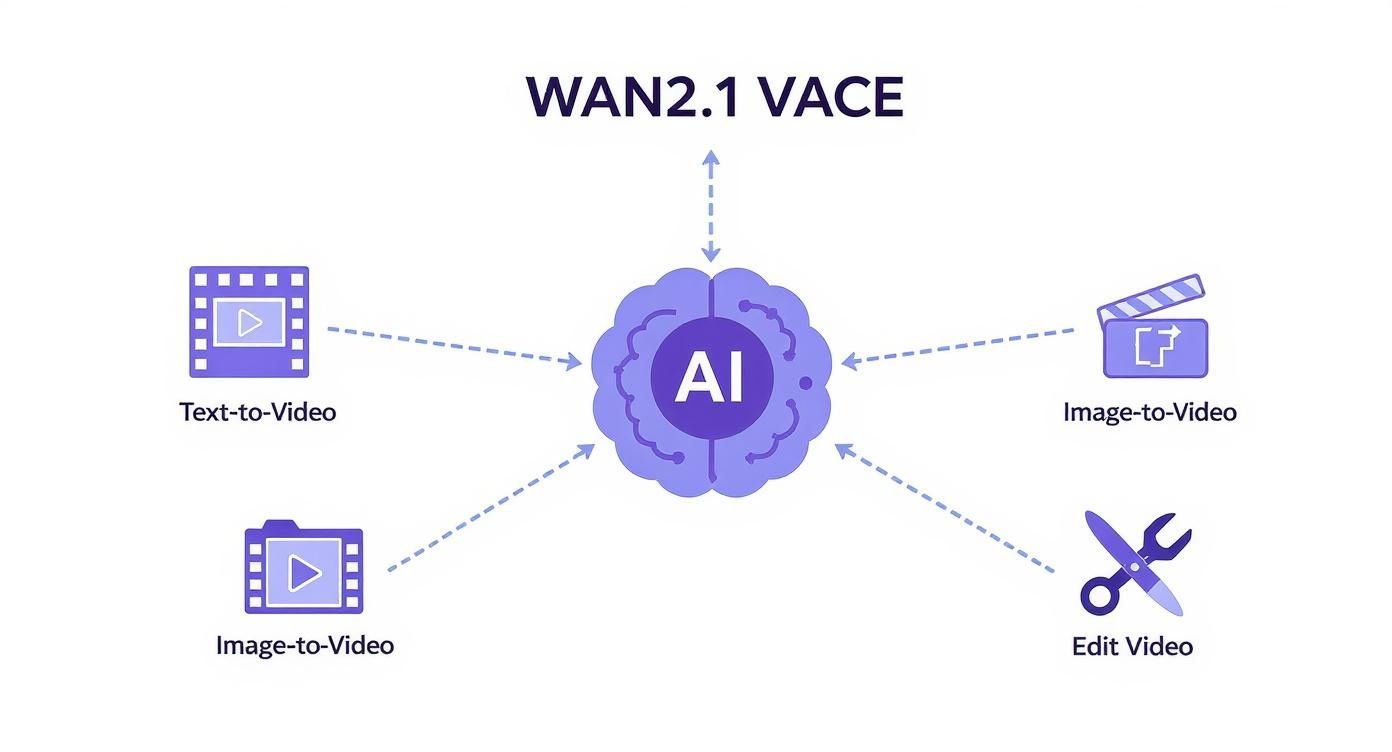

This infographic gives a great breakdown of how the architecture handles different kinds of input.

As you can see, the model's unified design lets it juggle text, image, and video editing tasks all within one powerful system.

Core Components and Efficiency

At the heart of this structure is something called a variational autoencoder (VAE). This component's job is to take the massive amount of data in a video and squeeze it into a much more manageable format: a compact numerical representation of all that visual information. To get a better handle on how this works, it helps to understand what embeddings are in AI. This compression step is a big part of what makes the model so efficient.

The model's real strength is its ability to learn the fundamental rules of motion and appearance. It doesn’t just guess what the next frame should be; it makes an informed prediction based on everything it’s already seen. That’s what creates such stable, high-quality video.

The creation of WAN2.1 VACE was a big step forward, built on huge training datasets and some clever engineering. It uses a 3D causal VAE ("causal" meaning each frame is encoded using only the frames that came before it) that combines this spatio-temporal compression with memory-saving techniques, which helps explain why it often outperforms other open-source models.
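To get a feel for how much that compression saves, here is a back-of-the-envelope sketch. The factors are assumptions that this article does not state: 4x compression along time, 8x along height and width, and 16 latent channels. The 81-frame, 832x480 clip is a hypothetical example too, so treat the numbers as illustrative and check the official repository for the real values.

```python
from typing import Tuple

# Assumed compression factors (not stated in this article): 4x along time,
# 8x along height and width, with 16 latent channels.
TEMPORAL_FACTOR = 4
SPATIAL_FACTOR = 8
LATENT_CHANNELS = 16


def latent_shape(frames: int, height: int, width: int) -> Tuple[int, int, int, int]:
    """Return (channels, latent_frames, latent_height, latent_width).

    A causal video VAE typically keeps the first frame on its own and then
    compresses the remaining frames in groups, hence the "1 +" term.
    """
    latent_frames = 1 + (frames - 1) // TEMPORAL_FACTOR
    return (
        LATENT_CHANNELS,
        latent_frames,
        height // SPATIAL_FACTOR,
        width // SPATIAL_FACTOR,
    )


def compression_ratio(frames: int, height: int, width: int) -> float:
    """Ratio of raw RGB values to latent values for one clip."""
    c, t, h, w = latent_shape(frames, height, width)
    raw_values = frames * height * width * 3
    return raw_values / (c * t * h * w)


# Hypothetical clip: 81 frames (about 5 seconds at 16 fps) at 832x480.
print(latent_shape(81, 480, 832))  # (16, 21, 60, 104)
print(round(compression_ratio(81, 480, 832), 1))
```

Under these assumptions the model works on dozens of times fewer values than the raw pixels, which is a large part of why consumer GPUs can keep up.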

Thanks to its release on platforms like Hugging Face, this powerful video editing tech is now available to everyone. If you want to dig into the technical details and see how it performs, you can find everything in the official VACE repository on GitHub.

Exploring Key Features and Creative Capabilities

The real magic of WAN2.1 VACE isn't just one single trick—it's the way it bundles a whole creative toolkit into one cohesive system. This isn't just a tool; it's a new way to think about and produce video. It lets creators jump from one idea to the next without constantly switching between different apps.

This all-in-one approach is what really makes it stand out. Forget juggling separate programs for text generation, image animation, and video editing. With WAN2.1 VACE, you can manage the entire creative process in a single, streamlined workflow. Let's dig into the core features that make this a reality.

From Text Prompts to Video Scenes

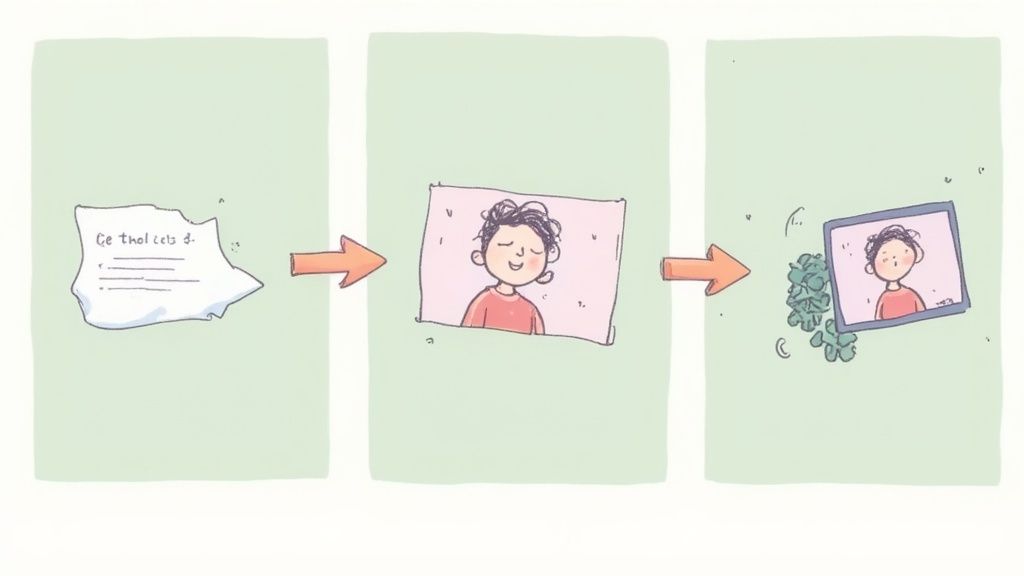

At its heart, WAN2.1 VACE is fantastic at turning simple words into moving pictures. You just give it a text description, and the model spins up a video clip that matches your vision. This text-to-video function is a game-changer for brainstorming or knocking out short, compelling content in minutes.

Imagine a social media manager for a pet food company. Instead of searching for the perfect stock video, they could just type in a prompt like, "a golden retriever catching a frisbee in a sunny park, slow motion." Instantly, they have a unique, custom-made clip for an Instagram Reel.

This isn't just a fun novelty. It’s a seriously practical tool for anyone who needs to create visual content quickly. Marketers, artists, and storytellers can now test out ideas visually without the cost or time commitment of a traditional video shoot.

Animating Still Images with Lifelike Motion

Another standout feature is its ability to make still images move. You can take any photograph and inject it with subtle, natural-looking motion. Think of a landscape photo where the clouds are actually drifting across the sky, or a portrait where the subject’s hair gently blows in a phantom breeze.

This capability breathes new life into your existing visuals. An online store could animate a static product photo to create a more eye-catching ad. An artist could add a touch of motion to their digital paintings, turning them into mesmerizing pieces of art.

The goal here is simple: add a layer of immersion. It turns a flat, static moment into a living, breathing scene that captures and holds your audience's attention.

This is especially powerful for grabbing eyeballs on platforms where motion is king, like social media feeds and website homepages.

Advanced Video Editing and Manipulation

WAN2.1 VACE goes way beyond just creating new clips. It also provides a suite of advanced editing tools that give you incredible control over your video footage. These aren't just your basic filters; they allow you to fundamentally change what’s happening in a scene.

To give you a better idea of what's possible, the table below breaks down the key features and how you might use them in a real-world project.

WAN2.1 VACE Feature Overview and Use Cases

| Feature | Description | Practical Use Case |
|---|---|---|
| Inpainting | Seamlessly removes unwanted objects or people from a video scene. | A travel vlogger removes a distracting tourist from the background of a perfect shot. |
| Outpainting | Intelligently expands the video frame, filling in the new space realistically. | A filmmaker needs to convert a vertical video into a widescreen format for YouTube. |
| Object Replacement | Swaps an object in the video for a different one while preserving motion and lighting. | An advertiser changes the soda can in a commercial to match a different regional brand. |
| Text-to-Video | Generates a video clip from a simple written description. | A marketing team quickly creates a short animated ad from a campaign slogan. |
| Image-to-Video | Adds subtle, realistic motion to a static photograph. | An e-commerce brand animates a product photo to create a more dynamic social media post. |

As you can see, these capabilities are a huge help in post-production. What once required hours of meticulous work in specialized software like Adobe After Effects can now be done much more intuitively. WAN2.1 VACE simplifies these complex editing tasks, making professional-level results accessible to more creators than ever before.
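Inpainting and object replacement both depend on telling the model which pixels it is allowed to change, and that is usually done with a mask. Here is a minimal sketch of what a mask is, using plain Python lists. The `rectangle_mask` helper, the frame size, and the "1 means repaint" convention are all assumptions for illustration; real workflows use image files, and some tools invert the convention.

```python
from typing import List

Mask = List[List[int]]  # rows of 0/1 values, one per pixel


def rectangle_mask(height: int, width: int, top: int, left: int, bottom: int, right: int) -> Mask:
    """Build a binary mask with 1s inside the rectangle [top, bottom) x [left, right).

    Assumed convention: 1 marks pixels the model may repaint, 0 marks pixels
    to keep. Check the tool you use, since some invert this.
    """
    return [
        [1 if top <= y < bottom and left <= x < right else 0 for x in range(width)]
        for y in range(height)
    ]


def masked_fraction(mask: Mask) -> float:
    """Share of the frame that is marked for editing."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total if total else 0.0


# Hypothetical 6x8 frame where a 2x3 region (the "distracting tourist") is marked.
mask = rectangle_mask(height=6, width=8, top=2, left=4, bottom=4, right=7)
for row in mask:
    print("".join(str(v) for v in row))
print(masked_fraction(mask))  # 0.125
```

Keeping the masked area as small as the edit allows tends to leave more of the original footage untouched.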

Real-World Applications of WAN2.1 VACE

Theory and technical specs are one thing, but the real test for any technology is how it performs in the wild. WAN2.1 VACE is more than just an interesting concept; it's a practical tool that’s already making waves in how visual content gets made across several industries.

What's really exciting is that its ability to run on standard hardware opens the door for everyone, from big marketing agencies to indie creators. You don't need a Hollywood-sized budget to tap into high-quality video production anymore.

Driving Social Media and Marketing

Digital marketing is probably where you see the most immediate impact. Social media platforms are hungry for a constant flow of fresh video, but creating it is often slow and costly. With WAN2.1 VACE, marketers can whip up slick video ads from a simple text prompt or bring a static product photo to life for a dynamic post.

That kind of speed is a game-changer. For instance, using models like this one is a core part of modern AI social media content creation strategies because it lets brands A/B test different ad concepts without the cost of a full video shoot for each one. It's all about staying relevant and reacting quickly.

This technology taps right into the massive global markets for online advertising and social media. Because it’s open-source, it's helping to lower the barrier to entry for AI-powered video, which is poised to completely change content workflows as more teams adopt generative AI. You can read more about Alibaba's open-source model and its impact.

A New Tool for Filmmakers and Educators

Independent filmmakers are also finding a lot to love here. Think about post-production tasks that used to eat up days of work, like painting out an unwanted object from a shot or digitally extending a background. Much of that can now be done in a fraction of the time. This frees up precious time and money, letting creators focus on the story instead of tedious technical fixes.

WAN2.1 VACE is like having a powerful assistant in the editing suite, ready to handle complex visual effects and clean-up tasks. It gives smaller studios the ability to achieve a level of polish that used to be reserved for major productions.

Education is another area where this model shines. It provides a simple way to create much more engaging learning materials. Imagine a history teacher being able to:

- Animate old photographs to make a history lesson feel more immediate.

- Generate simple videos that explain tough scientific concepts visually.

- Create custom video scenarios for language classes or job training.

By making video production so much easier, WAN2.1 VACE helps educators create the kind of rich, visual content that grabs a student's attention and helps the lessons stick. It’s a versatile tool that bridges technical power and everyday practicality.

How to Get Started with WAN2.1 VACE

https://www.youtube.com/embed/KGq96ag7FiY

Jumping into WAN2.1 VACE is a lot less intimidating than it sounds. It was built to be accessible, and the community has made it incredibly easy to download and run. You can go from zero to your first video creation in no time.

The first step is grabbing the open-source code and the pre-trained models. You'll find everything you need on popular AI community hubs, so getting the files is a breeze.

Your best bet for the WAN2.1 VACE model is a platform like Hugging Face or a user-friendly interface like ComfyUI. These spots don't just host the models; they often have example workflows to get you up and running right away.

Once you have the files, it's time to prep your local environment. This usually just means setting up a dedicated workspace so all the software dependencies play nicely together without messing with your main system.

Your Hardware and Setup Checklist

Before you start downloading, let’s talk about your gear. WAN2.1 VACE is built to run on consumer hardware, but your graphics card (GPU) is the star of the show here. A better GPU means faster video generation and the ability to handle higher resolutions.

For a smooth ride, you'll want to have:

- A solid consumer-grade GPU: An NVIDIA RTX 4090 is a fantastic choice, but even something like an RTX 4060 Ti with 16GB of VRAM will get the job done well.

- Sufficient VRAM: You'll need at least 8GB of VRAM for basic tasks, like making a 480P video. Of course, more VRAM is always better if you're aiming for higher-quality output.

- Ample system memory: We recommend at least 64GB of RAM to keep things from getting sluggish, especially when the model is working on longer video clips.
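If you're not sure how much VRAM your card has, a small script can check it for you. This is a rough sketch that assumes an NVIDIA GPU with the `nvidia-smi` command-line tool installed. The helper names and the quarter-gigabyte tolerance are my own choices, added because drivers usually report slightly less memory than the size printed on the box.

```python
import subprocess
from typing import List, Tuple

MIN_VRAM_GB = 8.0    # the minimum this checklist gives for basic 480P work
TOLERANCE_GB = 0.25  # assumed slack: an "8GB" card often reports a bit under 8 GiB


def parse_gpu_report(report: str) -> List[Tuple[str, float]]:
    """Parse 'name, memory_in_MiB' lines into (name, memory_in_GiB) pairs."""
    gpus = []
    for line in report.strip().splitlines():
        name, _, memory_mib = line.rpartition(",")
        if name and memory_mib.strip().isdigit():
            gpus.append((name.strip(), int(memory_mib) / 1024))
    return gpus


def query_gpus() -> List[Tuple[str, float]]:
    """Ask nvidia-smi for GPU names and total memory; return [] if unavailable."""
    command = [
        "nvidia-smi",
        "--query-gpu=name,memory.total",
        "--format=csv,noheader,nounits",
    ]
    try:
        result = subprocess.run(command, capture_output=True, text=True, check=True)
    except (OSError, subprocess.CalledProcessError):
        return []
    return parse_gpu_report(result.stdout)


def meets_minimum(gpus: List[Tuple[str, float]], minimum_gb: float = MIN_VRAM_GB) -> bool:
    """True if at least one GPU has roughly the minimum amount of VRAM."""
    return any(memory_gb + TOLERANCE_GB >= minimum_gb for _, memory_gb in gpus)


if __name__ == "__main__":
    found = query_gpus()
    for name, memory_gb in found:
        print(f"{name}: {memory_gb:.1f} GB VRAM")
    print("Meets the 8 GB minimum:", meets_minimum(found))
```

On a machine without an NVIDIA driver the script simply reports that the minimum is not met, so it is safe to run anywhere.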

After you've confirmed your hardware is up to the task, the setup is pretty straightforward. Tools like ComfyUI, for example, have ready-made templates for WAN2.1 VACE. These guide you through downloading the necessary models and getting your first workflow going. In many cases, you can just drag and drop a workflow file right into the interface. It’s that simple.

Running Your First Generation

With everything installed, you’re ready to run your first text-to-video generation. Start with something simple to get a feel for it. Try a prompt like, "a cat napping in a sunbeam," and see what kind of quality you get. From there, you can start getting creative with more detailed descriptions and settings.
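If you'd rather work from the command line than a visual interface, the official Wan2.1 repository includes a `generate.py` script. The sketch below only builds the command and prints it, without running anything. The flag names and the `vace-1.3B` task value follow my reading of the repository's README, and the checkpoint folder is a hypothetical local path, so verify all of them against the current docs before relying on this.

```python
import shlex
from typing import List


def build_generate_command(
    prompt: str,
    task: str = "vace-1.3B",                # assumed task name for the small VACE model
    size: str = "832*480",                  # assumed size string for 480P output
    ckpt_dir: str = "./Wan2.1-VACE-1.3B",   # hypothetical local checkpoint folder
) -> List[str]:
    """Return the argument list for a generate.py run without executing it."""
    return [
        "python", "generate.py",
        "--task", task,
        "--size", size,
        "--ckpt_dir", ckpt_dir,
        "--prompt", prompt,
    ]


command = build_generate_command("a cat napping in a sunbeam")
# Print a copy-pasteable version; shlex.join quotes the prompt and the '*'.
print(shlex.join(command))
```

Editing runs usually add source inputs such as a reference image, a source video, or a mask. Those options are left out here on purpose.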

As you get more comfortable, you might want to tweak the model for your own specific needs. For creators who love to customize, learning about techniques like parameter-efficient fine-tuning can unlock a whole new level of control, letting you train the model on your unique visual style.

Don't be shy! Play around with the different features like image-to-video or video-to-video editing. Each one offers a different way to make cool stuff. The best way to learn is to just dive in and see what this powerful tool can do.

Best Practices for High-Quality Video Generation

So, you've got the basics of WAN2.1 VACE down. That's great! But going from "good enough" to creating truly jaw-dropping videos takes a bit more know-how. The real magic happens when you start refining your technique, and it all begins with how you write your prompts.

Think of your prompt as a creative brief for the AI. A vague request like "a car driving" will get you a generic result. But what if you wrote, "a vintage red convertible driving along a coastal highway at sunset, cinematic lighting"? Suddenly, the AI has a much clearer vision to work with. Specificity is your best friend here. If you're ready to really dive deep, our guide on enhanced video prompt engineering is the perfect next step.
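One way to keep prompts specific is to assemble them from named parts, so you notice when a piece is missing. Here is a small sketch of that habit. The `build_prompt` helper and its four fields are hypothetical conveniences rather than anything the model requires.

```python
from typing import Optional


def build_prompt(
    subject: str,
    action: str,
    setting: Optional[str] = None,
    style: Optional[str] = None,
) -> str:
    """Assemble a prompt as 'subject action setting, style', skipping empty parts."""
    scene = " ".join(
        part.strip() for part in (subject, action, setting or "") if part.strip()
    )
    return f"{scene}, {style.strip()}" if style and style.strip() else scene


vague = build_prompt("a car", "driving")
specific = build_prompt(
    subject="a vintage red convertible",
    action="driving along a coastal highway",
    setting="at sunset",
    style="cinematic lighting",
)
print(vague)     # a car driving
print(specific)  # a vintage red convertible driving along a coastal highway at sunset, cinematic lighting
```

Filling in the setting and style fields is what turns the generic first prompt into the detailed second one.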

Fine-Tuning Your Technical Settings

Crafting the perfect prompt is only half the battle. A few small tweaks to the technical settings can make a world of difference in your final video. These settings are how you balance visual quality, how fast the video generates, and its overall consistency.

I like to think of them as the knobs and dials on a mixing board. Turning one up might affect another, and learning how they work together gives you precise control. This is especially true when you're trying to fix common headaches like blurry motion or weird, unnatural movements in a busy scene.

The best results always come from a mix of creative prompting and smart technical adjustments. Finding that sweet spot between descriptive language and optimized settings is what truly unlocks what WAN2.1 VACE can do.

Here are a few key settings I always pay attention to:

- Input Resolution: Don’t jump straight to 4K. Start with something practical like 480P or 720P. Higher resolutions eat up VRAM and take forever to generate, so find a balance that your hardware can handle without grinding to a halt.

- Masking for Precision: When you need to edit a specific part of your video, masks are your best tool. They let you tell the AI exactly where to make a change—like swapping out a background or removing an unwanted object. This keeps your edits clean and focused.

- Video Length: While the model is pretty good with longer videos, I’ve found that generating in shorter chunks of 5-10 seconds often works better. You can then stitch them together. This helps maintain a consistent style and prevents the visuals from "drifting" over time.
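The chunking advice in the last point is easy to plan ahead of time. This sketch splits a target duration into consecutive windows that you would generate one by one and stitch together afterwards. The `plan_chunks` helper and the 23-second example are hypothetical, and the script only does the arithmetic, not the generation.

```python
from typing import List, Tuple


def plan_chunks(total_seconds: float, chunk_seconds: float = 5.0) -> List[Tuple[float, float]]:
    """Split a target duration into consecutive (start, end) windows."""
    if total_seconds <= 0 or chunk_seconds <= 0:
        raise ValueError("durations must be positive")
    chunks = []
    start = 0.0
    while start < total_seconds:
        end = min(start + chunk_seconds, total_seconds)
        chunks.append((start, end))
        start = end
    return chunks


# A hypothetical 23-second scene generated in 5-second pieces.
for index, (start, end) in enumerate(plan_chunks(23, 5), start=1):
    print(f"clip {index}: {start:g}s to {end:g}s")
```

The last window is simply shorter than the rest, so no footage is padded or dropped.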

By keeping an eye on both your creative prompts and these technical details, you can guide the WAN2.1 VACE model to produce polished, professional-looking videos that actually match what you pictured in your head.

Got Questions About WAN2.1 VACE?

Whenever a new tool like WAN2.1 VACE comes along, it’s natural to have questions about how it works in the real world. Let's tackle some of the most common ones so you can get started on the right foot.

What Kind of Hardware Do I Need to Run It?

You don't need a server farm, but your hardware definitely matters. The model is built to run on consumer GPUs, but performance scales with power.

Think of an NVIDIA RTX 4090 as a comfortable reference point. The card has 24GB of VRAM, and a 5-second video at 480P needs only about 8GB of that and takes about four minutes to generate. More VRAM is always better, since it lets you push for higher resolutions, and a faster GPU will cut down your wait times. It's always a good idea to check the official project docs for the most up-to-date hardware recommendations.

Can I Make Videos Longer Than a Few Seconds?

Absolutely. This is actually where WAN2.1 VACE really shines.

A single prompt gives you a short clip, but you can stitch several of these together. The model was designed to keep things looking consistent over time, so you get far less of the visual "drift" you see with other tools. By feeding one generated clip back into the model (a video-to-video approach), you can build out longer, coherent scenes. That temporal stability is a huge advantage.

Is WAN2.1 VACE Free for Commercial Use?

It's an open-source project, so you can find the code and models on sites like Hugging Face for your own experiments and research. But for commercial work, you absolutely need to read the fine print. Check the specific open-source license tied to the project to make sure you're following all the rules.
