A Guide to the Main Types of Prompting in AI

The way we give instructions to AI has changed dramatically over the years. We’ve gone from clunky, rigid commands for early systems to the creative, conversational prompts we use with today's powerful models. This journey really tells the story of how we learned to talk to machines in a more natural, human way.

How We Learned to Talk to AI

Think back to the early days. Giving instructions to an AI was like trying to use a robot that only knew a handful of very specific commands. If you stepped even slightly outside its programming, you'd get an error message—or just a digital blank stare. It was a world of strict, rule-based interactions where you had to be perfectly precise, leaving no room for creativity.

Fast forward to today, and we're having fluid conversations with models like ChatGPT, asking them to write a sonnet, find a bug in our code, or map out a travel itinerary. This incredible leap didn't happen overnight. It’s the result of decades of work that completely changed how we interact with artificial intelligence, moving us from simple commands to sophisticated dialogue. Getting a handle on this evolution is the first step to truly mastering the different types of prompts.

The Era of Rule-Based Systems

The very first methods for communicating with AI were all about rule-based prompting. A good analogy is an old-school vending machine: you press button C4 (the prompt), and you get a specific bag of chips (the response). There's no negotiation or interpretation. If you ask a slightly different question, the whole system just breaks.

These systems were completely deterministic, following scripts that were hardcoded by developers. An early customer service chatbot, for example, might have a rule like: "If a user types 'store hours,' respond with 'Our hours are 9 AM to 5 PM.'" It worked, but it was fragile. A user asking, "What time do you close?" would likely leave the AI completely stumped.
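To make that fragility concrete, here is a minimal sketch of such a chatbot. The rule table, the fallback message, and the function name are all hypothetical; the only thing taken from the example above is the store-hours rule itself.

```python
# Hypothetical rule table: exact user text -> canned reply.
RULES = {
    "store hours": "Our hours are 9 AM to 5 PM.",
}

# Hypothetical fallback for anything the rules do not cover.
FALLBACK = "Sorry, I don't understand."


def rule_based_reply(user_text: str) -> str:
    """Return the canned reply for an exact (case-insensitive) match."""
    key = user_text.strip().lower()
    return RULES.get(key, FALLBACK)


print(rule_based_reply("store hours"))
print(rule_based_reply("What time do you close?"))
```

The second call falls through to the fallback because nothing in the lookup connects "close" to "hours". Every new phrasing needs its own hand-written rule.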

The Shift to Predictive and Generative Models

Thankfully, we moved past that rigidity. The history of AI is a clear path from these fixed systems to more dynamic ones. You can really group the different types of prompting by how they developed: from rule-based to predictive, and finally to generative.

From the 1950s through the 1980s, AI was all about those inflexible, rule-based systems. But with the growth of machine learning in the 1990s and 2000s, predictive prompting started to take hold. This allowed an AI to look at historical data to figure out what a user actually meant. Finally, a chatbot could understand that "When do you close?" and "What are your hours?" were asking the same thing. You can explore the complete history of AI on Coursera.org to see this progression in fascinating detail.

The real breakthrough, however, was generative prompting, which came with the arrival of massive neural networks.

The release of models like GPT-3, with its whopping 175 billion parameters, was a watershed moment. These new models could generate incredibly nuanced, human-like text without needing a specific rule for every single scenario. They could handle complex techniques like zero-shot or few-shot prompting right out of the box.

This jump from rigid scripts to genuine contextual understanding is what makes modern AI so incredibly capable. It also created a brand new field dedicated to figuring out the best way to ask these models for what we want.

Why Prompt Engineering Matters Now

Today, the instructions we give AI are far more than simple commands. They are nuanced requests that can be packed with context, examples, and even step-by-step instructions. The art and science of crafting these instructions is what we call prompt engineering.

Getting good at this is the key to unlocking what AI can really do. It's what separates a generic, useless answer from a brilliant, insightful one. As we'll get into, each type of prompt is a different tool in your toolbox, designed for a specific job. Learning how to use them is how you tap into the true power of AI. If you want to dive deeper into the practical side of this, check out our comprehensive guide to AI prompt engineering.

Zero-Shot Prompting: The Art of the Direct Ask

Out of all the ways you can talk to an AI, zero-shot prompting is the most straightforward. It's the "just ask" method.

Imagine you're talking to a seasoned world traveler and ask, "What are three must-see spots in Rome for a history buff?" You don't give them examples of what you like or a format for their answer. You just trust their vast knowledge to deliver a great response. That's zero-shot prompting in a nutshell.

You give a large language model (LLM) a direct command or question without providing any examples of the output you want. The model draws on the patterns it learned from its training data, an enormous body of text and code, to figure out what you mean and give you an answer. It's fast, simple, and the default way most people interact with AI.

When to Use a Direct Ask

Zero-shot prompting works best when your task is clear and doesn't require a highly specific or unusual format. Tasks like these are so well represented in the model's training data that it doesn't need its hand held.

This approach is perfect for tasks like:

- Summarization: You can drop in a long article and say, "Summarize this text in three key takeaways."

- Translation: A simple prompt like, "Translate 'Where is the nearest cafe?' into Japanese," gets the job done instantly.

- Sentiment Analysis: Ask, "What is the sentiment of this review: 'The product arrived late, but it works perfectly'?" and the AI will understand the mixed feelings.

- Simple Q&A: Basic questions like, "Who wrote The Great Gatsby?" are what these models were built for.

The biggest win here is speed. You don't have to waste time crafting examples; you just ask for what you need. That simplicity makes it the perfect starting point for almost any task.
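If you find yourself sending the same kinds of direct asks over and over, a few string templates are often all you need. The sketch below is one way to do it; the template names and the `zero_shot_prompt` helper are made up for illustration, and the wording simply reuses the prompts from the list above.

```python
# Hypothetical templates, reusing the prompt wording from the list above.
ZERO_SHOT_TEMPLATES = {
    "summarize": "Summarize this text in three key takeaways.\n\n{text}",
    "translate": "Translate '{text}' into {language}.",
    "sentiment": "What is the sentiment of this review: '{text}'?",
}


def zero_shot_prompt(task: str, **fields: str) -> str:
    """Fill in a template. No examples are added, which is what makes it zero-shot."""
    return ZERO_SHOT_TEMPLATES[task].format(**fields)


print(zero_shot_prompt("translate", text="Where is the nearest cafe?", language="Japanese"))
```

Notice that the prompt contains only the instruction and the input. How the answer is shaped is left entirely to the model.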

Understanding the Limitations

Of course, the "no examples" approach isn't foolproof. The main weakness of zero-shot prompting is that you're leaving the style and structure of the response entirely up to the AI.

Since you haven't provided a template, the output can sometimes feel generic or miss the exact format you were hoping for. The world traveler might give you a brilliant list of spots in Rome, but maybe you wanted it in a table with opening hours, and they gave you a paragraph instead.

A zero-shot prompt gives the AI total creative freedom. This is a double-edged sword—it can lead to some surprisingly creative answers, but it can also produce results that are off-target or inconsistent.

For example, if you ask an AI to "Pull all the company names from this article," it might return a comma-separated list, a bulleted list, or a full sentence. If you needed that data in a specific JSON format to feed into another program, a zero-shot prompt probably wouldn't deliver.
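Here is a rough sketch of what that unpredictability costs you downstream. The `parse_company_names` function is a hypothetical helper that tries to cope with three of the shapes a zero-shot answer might take; it does not attempt the full-sentence case, which would need real text extraction.

```python
import json
import re


def parse_company_names(response: str) -> list[str]:
    """Best-effort parse of a free-form list of names (illustrative only)."""
    text = response.strip()
    # Case 1: the model happened to return a JSON array.
    try:
        data = json.loads(text)
        if isinstance(data, list):
            return [str(item).strip() for item in data]
    except json.JSONDecodeError:
        pass
    # Case 2: a bulleted list, one name per line.
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if len(lines) > 1:
        return [re.sub(r"^[-*•]\s*", "", line) for line in lines]
    # Case 3: a comma-separated list on a single line.
    return [part.strip() for part in text.split(",") if part.strip()]


print(parse_company_names("- Acme\n- Globex"))
print(parse_company_names("Acme, Globex"))
```

All of that guessing is the price of not specifying a format up front.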

Practical Examples of Zero-Shot Prompts

Let's look at what this looks like in practice.

Example 1: Content Idea Generation

- Prompt: "Give me five blog post ideas about the benefits of container gardening for apartment dwellers."

The task is crystal clear. The AI can easily tap into its knowledge about gardening and urban living to come up with relevant, creative titles. No examples needed.

Example 2: Simple Classification

- Prompt: "Is this email urgent or not urgent? 'Quick reminder: Our weekly sync is tomorrow at 9 AM.'"

The AI understands the concepts of urgency and routine meetings, so it can correctly classify the email without you showing it what an "urgent" message looks like first.

Ultimately, zero-shot prompting is your go-to workhorse. It's the quickest path from question to answer for everyday tasks. But when you need more control over the output's precision, style, or structure, it's time to give the AI a few more clues. That brings us to our next technique.

Few-Shot Prompting: Guiding AI With Examples

While just asking an AI a direct question is great for simple jobs, what happens when you need a very specific, nuanced answer? This is exactly where few-shot prompting shines. Think of it like this: asking a chef to "make a spicy soup" is a zero-shot prompt. But handing them a few of your favorite spicy soup recipes to guide their cooking? That's few-shot prompting.

Instead of just telling the AI what to do, you show it.

By including a handful of high-quality examples—what we call "shots"—right in your prompt, you give the model a clear pattern to follow. This simple act of adding context can make a massive difference in the accuracy and consistency of what you get back.

You're essentially giving the model a quick, on-the-spot lesson. This ability to learn from examples within the prompt itself, known as in-context learning, is one of the most powerful features of modern AI models. It lets them adapt to brand new tasks without needing to be retrained.

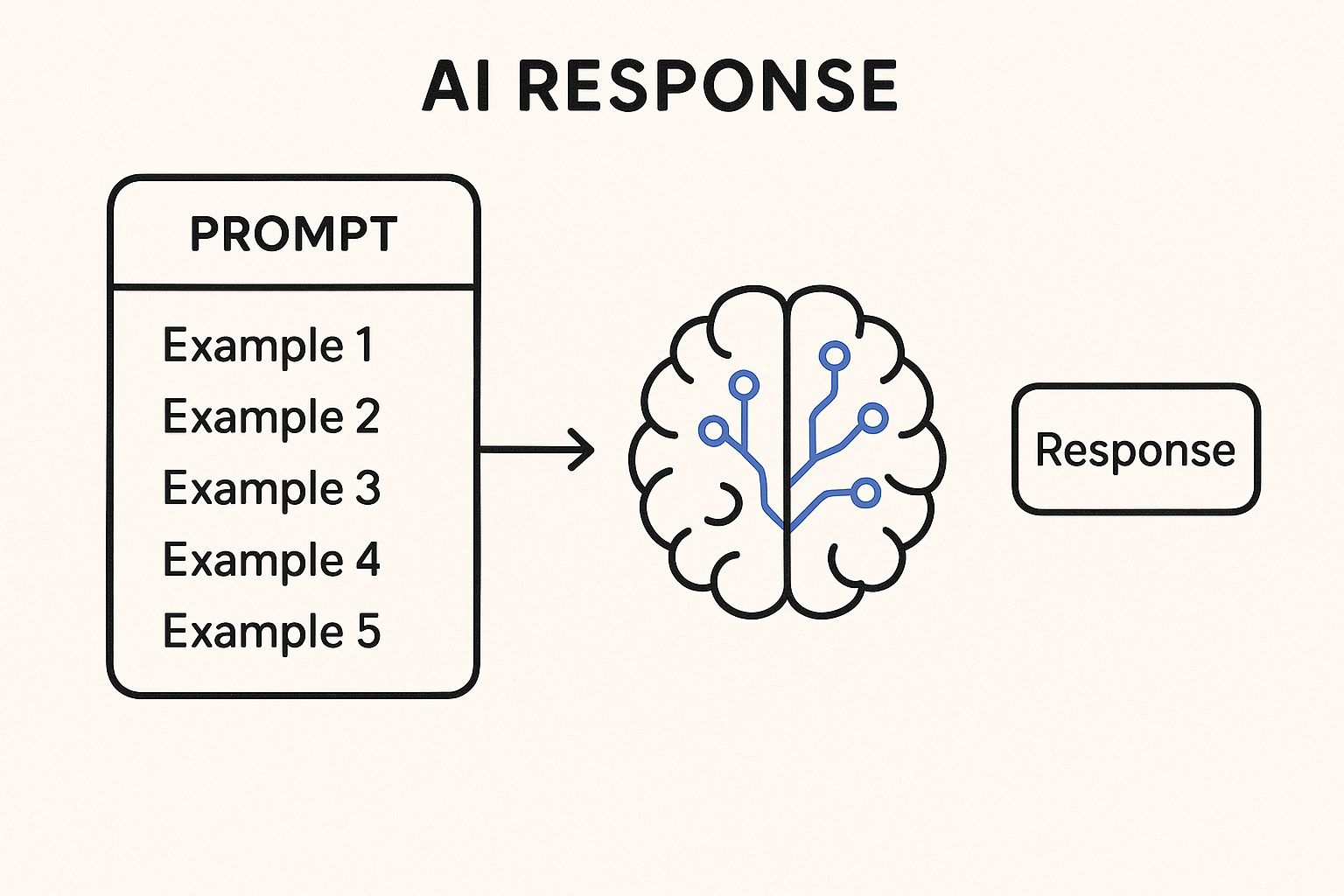

This visual shows how a few examples can nudge an AI toward a much more specific and useful response.

When you lay out a clear input-output pattern, you're actively steering the model's logic. This helps guarantee the final result matches the exact structure and style you had in mind.

Why Showing Is Better Than Telling

The biggest advantage of few-shot prompting is control. When you need a specific format, a particular tone, or a certain kind of analysis, providing examples takes all the guesswork out of it for the AI. This is a game-changer for tasks that have a lot of nuance or require a rigid structure that a simpler prompt would likely fumble.

Let's say you need to pull product names and their prices from a bunch of customer reviews. A zero-shot prompt might just give you a messy, unstructured paragraph. But with a few-shot prompt, you can dictate the exact format you need.

Zero-Shot Prompt (Less Effective):

Extract the product and price from this review: "I bought the SolarFlare X1 for $299 and it's fantastic!"

Few-Shot Prompt (Much More Effective):

Extract the product and price from the text in JSON format.

Text: "The new AquaStream filter costs 50 dollars and works wonders."

Output: {"product": "AquaStream filter", "price": "$50"}

Text: "I'm returning the Go-Go Gadget. It was $19.99 but broke."

Output: {"product": "Go-Go Gadget", "price": "$19.99"}

Text: "I bought the SolarFlare X1 for $299 and it's fantastic!"

Output:

See the difference? By providing just two clear examples, we’ve taught the AI the precise JSON structure we want. The result is a far more reliable and immediately useful response.
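If you build prompts like this in code, it helps to keep the examples as data and assemble the prompt from them. The sketch below does that for the review example; `build_few_shot_prompt` and the constant names are hypothetical, and the function only builds the string (sending it to a model is left out on purpose).

```python
import json

INSTRUCTION = "Extract the product and price from the text in JSON format."

# The two "shots" from the prompt above, stored as (text, expected output) pairs.
EXAMPLES = [
    ("The new AquaStream filter costs 50 dollars and works wonders.",
     {"product": "AquaStream filter", "price": "$50"}),
    ("I'm returning the Go-Go Gadget. It was $19.99 but broke.",
     {"product": "Go-Go Gadget", "price": "$19.99"}),
]


def build_few_shot_prompt(instruction, examples, query):
    """Assemble instruction + worked examples + the new input, ending on 'Output:'."""
    parts = [instruction, ""]
    for text, output in examples:
        parts.append(f'Text: "{text}"')
        parts.append(f"Output: {json.dumps(output)}")
        parts.append("")
    parts.append(f'Text: "{query}"')
    parts.append("Output:")
    return "\n".join(parts)


print(build_few_shot_prompt(
    INSTRUCTION, EXAMPLES,
    "I bought the SolarFlare X1 for $299 and it's fantastic!",
))
```

Ending the prompt on an open `Output:` label is the cue for the model to continue the pattern with the same JSON shape.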

Few-shot prompting is the perfect tool for tasks that demand stylistic imitation, structured data extraction, or complex classification. In each case, a prompt loaded with good examples tends to get more consistent, higher-quality responses.

This technique bridges the gap between a vague instruction and a perfectly executed task, making it a true cornerstone of effective prompt engineering.

How to Structure a Few-Shot Prompt

Putting together a solid few-shot prompt is all about creating a clear and consistent pattern for the model to follow. Remember, the quality of your examples is way more important than the quantity. For most tasks, just two to five well-crafted examples are plenty to get the AI on the right track.

Here are a few tips to keep in mind:

- Be Consistent: Make sure the format of your examples is identical. If you use labels like "Input:" and "Output:", use them for every single example. Consistency is key.

- Keep It Relevant: Your examples should be a close match for the actual task you want the AI to perform. Don't use examples of Shakespearean analysis if you're trying to prompt it for financial data extraction.

- Vary Your Examples: It’s smart to provide a little variety to help the model understand the range of the task. For instance, if you're classifying sentiment, make sure to include positive, negative, and neutral examples.
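A couple of these tips are easy to check automatically before a prompt ever goes out. The sketch below is one possible sanity check for a sentiment-classification prompt; the function name and the `(text, label)` pair format are assumptions made for illustration, while the two-to-five range comes from the guidance above.

```python
from collections import Counter


def check_examples(examples, required_labels, min_count=2, max_count=5):
    """Return a list of human-readable problems; an empty list means the set looks fine."""
    problems = []
    # Tip: two to five well-crafted examples are usually plenty.
    if not (min_count <= len(examples) <= max_count):
        problems.append(
            f"expected {min_count}-{max_count} examples, got {len(examples)}"
        )
    # Tip: vary your examples so every label appears at least once.
    seen = Counter(label for _, label in examples)
    for label in required_labels:
        if seen[label] == 0:
            problems.append(f"no example for label '{label}'")
    return problems


shots = [("Love it", "positive"), ("Broke in a day", "negative")]
print(check_examples(shots, ["positive", "negative", "neutral"]))
```

A check like this cannot judge whether an example is good, only whether the set is the right size and covers the range of the task.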

Comparing Zero-Shot vs Few-Shot Prompting

Knowing when to use each prompting style is crucial for getting the best results from any AI model. To make it simple, this table breaks down the core differences, use cases, and trade-offs between the two.

| Attribute | Zero-Shot Prompting | Few-Shot Prompting |
|---|---|---|
| Method | Relies on the model's pre-existing knowledge. | Provides 2-5 examples to guide the model. |
| Complexity | Very simple and fast to write. | Requires more effort to craft good examples. |
| Use Case | General tasks like summarization, translation, simple Q&A. | Specific tasks like data extraction, style imitation, classification. |
| Control | Low control over output format and style. | High control over output format and style. |
| Accuracy | Can be less accurate for nuanced or complex tasks. | Generally higher accuracy for specific tasks. |

Ultimately, zero-shot prompting is your go-to for quick, general requests. But when the details really matter, few-shot prompting is the precision instrument you need to get the job done right.

So what happens when a task requires more than just examples? What if it needs a logical, step-by-step process? For that, we need to teach the AI not just what to do, but how to think.

Chain-of-Thought Prompting: Teaching an AI to "Show Its Work"

While giving an AI examples can teach it to spot a pattern, some problems are too complex for simple mimicry. They demand actual reasoning. This is where one of the most powerful prompting techniques comes into play: Chain-of-Thought (CoT) prompting.

Think about how you'd tackle a tricky math word problem. You wouldn't just spit out the first number that pops into your head. You'd break it down, step by step, showing your work along the way. CoT prompting essentially asks the AI to do the same thing.

Instead of just demanding the final answer, you guide the model to explain its thinking process. This simple adjustment turns the AI from a mysterious black box into a transparent reasoning partner.

Unlocking Deeper AI Reasoning

The real genius of CoT is how it forces the AI to slow down. When an AI rushes to answer a complex question, it’s far more likely to make a logical error or miss a key detail. By making it lay out its logic, you dramatically increase the odds of it reaching the correct conclusion.

This is absolutely crucial for any task that involves multiple calculations, logical steps, or complex planning. Research has shown that CoT can boost performance on these kinds of tasks, sometimes improving accuracy by over 50%, although the size of the gain depends on the model and the task.

By asking the model to "think aloud," you get more than just an answer; you get a roadmap of its logic. This is priceless for troubleshooting. If the final output is wrong, you can look at its steps and pinpoint exactly where its reasoning went off the rails.

This approach effectively breaks down a big, intimidating problem into a sequence of smaller, manageable chunks. Each step builds on the last, creating a logical chain that's much easier for the AI to follow.

How to Structure a CoT Prompt

A Chain-of-Thought prompt is all about explicitly asking the model to show its process. You can trigger this mode with a simple phrase like, "Let's think step by step," or by providing an example that already includes a step-by-step breakdown.

Let’s use a classic brain teaser to see it in action.

Standard Prompt (Less Effective):

Q: A farmer has 15 sheep, and all but 8 die. How many are left?

A: 7

The answer is wrong. The AI just latched onto the numbers and performed a basic subtraction (15 - 8) without actually reading the question carefully.

Now, let's try again with a CoT approach.

Chain-of-Thought Prompt (More Effective):

Q: A farmer has 15 sheep, and all but 8 die. How many are left? Let's think step by step.

A:

- The farmer starts with 15 sheep.

- The phrase "all but 8 die" is a tricky way of saying that 8 sheep are the ones that survived.

- Therefore, the number of sheep left is 8.

Once it is encouraged to spell out its logic, the AI correctly deciphers the wordplay and gets the right answer. The step-by-step process prevented it from making a hasty, incorrect assumption.
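In code, the simplest version of this technique is just a wrapper that appends the trigger phrase. The sketch below adds one more hypothetical helper, `last_number`, which pulls the final number out of a step-by-step answer; that is a rough heuristic for illustration, not a reliable answer extractor.

```python
import re

COT_TRIGGER = "Let's think step by step."


def with_chain_of_thought(question: str) -> str:
    """Append the trigger phrase so the model is nudged to show its reasoning."""
    return f"Q: {question.strip()} {COT_TRIGGER}\nA:"


def last_number(response: str):
    """Heuristic: treat the last number in a step-by-step answer as the final answer."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", response)
    return matches[-1] if matches else None


print(with_chain_of_thought(
    "A farmer has 15 sheep, and all but 8 die. How many are left?"
))

# The reasoning from the example above, used here as a stand-in for a model response.
sample_response = (
    "- The farmer starts with 15 sheep.\n"
    '- The phrase "all but 8 die" is a tricky way of saying that 8 sheep '
    "are the ones that survived.\n"
    "- Therefore, the number of sheep left is 8."
)
print(last_number(sample_response))
```

Because the reasoning comes back as text, you can also log it and read it when an answer looks wrong, which a bare "7" would never let you do.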

When to Use Chain-of-Thought

This technique is your best friend for any task that needs more than a quick, surface-level answer. If you need accuracy and a sound logical foundation, CoT is the way to go.

Here are a few scenarios where it really shines:

- Mathematical Word Problems: You can guide the AI through each stage, from identifying the key numbers to performing the final calculation.

- Logic Puzzles and Riddles: It forces the model to evaluate each clue one by one instead of just guessing.

- Strategic Planning: Breaking down a huge request (like "Create a marketing plan") into logical phases like market research, audience analysis, and campaign execution.

- Code Debugging: You can ask the AI to trace its steps through a block of code to find where the error is happening.

Chain-of-Thought is a more advanced technique because it pushes the AI beyond just following instructions and into the realm of guided reasoning. It takes a little more effort to set up, but the payoff in accuracy and reliability is huge for complex tasks. It teaches the AI not just what the answer is, but how to find it.

How Technology Paved the Way for Modern Prompting

The prompting techniques we rely on today, from a simple question to a complex chain-of-thought, didn't just appear out of thin air. They're the direct result of a long, intertwined evolution of computing power and our methods for communicating with machines. To really get why these different prompt types work, it helps to look back at the technological leaps that made them possible.

This journey begins in an era of rigid, unforgiving command-line interfaces. Early computers were like hyper-literal assistants; they would only do exactly what you told them, using the precise, predefined command. There was no room for error, no interpretation, and certainly no creativity. This was the world that gave birth to the most basic, rule-based instructions.

From Command Lines to Neural Networks

The first seismic shift was the explosion in processing power, famously described by Moore's Law. As computers got exponentially faster, they could handle much more than simple, fixed commands. This opened the door for early machine learning and, eventually, the neural networks that are the engine of modern AI.

A neural network, loosely inspired by the human brain, doesn't just follow explicit instructions—it learns patterns from vast amounts of data. This was a monumental change. It meant we could stop telling a computer exactly how to do something and instead show it examples, letting it figure out the rules on its own. This technological breakthrough was the non-negotiable prerequisite for techniques like few-shot prompting.

This shift from rigid rules to adaptive learning wasn't an overnight thing. The history of prompting actually stretches back nine decades, with each new strategy emerging directly from breakthroughs in AI and computing. You can see the full timeline of these generative AI innovations over on TechTarget.com.

The Role of Big Data and Foundation Models

Sheer processing power was only half the equation. The other critical ingredient was data—and lots of it. The internet created an unprecedented flood of text, images, and raw information, giving developers the material they needed to teach an AI about language, context, and the subtle relationships between concepts.

This potent combination of powerful hardware and planet-scale datasets led to the creation of foundation models like the GPT (Generative Pre-trained Transformer) family. These models are pre-trained on a massive slice of the internet, which gives them an incredibly broad and deep understanding of human language right out of the box. This is precisely what makes zero-shot prompting a reality.

Think of it as three key pillars coming together:

- Massive Datasets: This provided the raw knowledge base for the AI to learn from.

- Advanced Processors (GPUs): These supplied the brute-force computational strength to churn through all that data.

- Transformer Architecture: This specific neural network design proved to be exceptionally good at understanding context and nuance in language.

As noted earlier, the release of GPT-3 and its 175 billion parameters was a watershed moment. It showed the world that a model trained at this immense scale could perform a huge variety of tasks with little to no specific instruction. The ability to just ask a question and get a coherent, useful answer was no longer science fiction. It was the direct product of these converging technologies. Grasping this connection is central to getting better results; for more on this, check out our guide on mastering prompt optimization to unlock AI's full potential.

At the end of the day, the different types of prompts we use are simply a reflection of the AI's underlying abilities. We've moved from simple keywords to complex, example-filled prompts because the technology finally caught up, enabling a much richer, more human-like conversation with our machines.

Common Questions About Prompting Techniques


As you start experimenting with different types of prompting, you're bound to run into a few questions. Getting a handle on the subtle differences between these methods is what separates a basic user from someone who can truly steer an AI's output.

This section is all about tackling those common questions head-on. My goal is to clear up the confusion with practical, no-fluff answers you can start using right away.

Which Prompting Type Is Best for Creative Writing?

For creative work, like drafting a story or brainstorming ad copy, I've found that a mix of zero-shot and few-shot prompting works wonders. It’s a great way to get the best of both worlds: a blast of initial creativity followed by fine-tuned control.

Kick things off with a wide-open, zero-shot prompt. Something like, "Write an opening paragraph for a mystery novel set in a futuristic, neon-lit city." This gives the AI a ton of room to play and surprise you.

Once you have a starting point, you might realize the tone isn't quite right. That's when you pivot to a few-shot prompt. You can feed it a short snippet of writing that has the exact voice you're after and ask the AI to continue in that style. This hybrid approach lets you capture that initial spark and then carefully shape it into something polished.

Can I Combine Different Prompting Techniques?

Yes, and you absolutely should! Stacking different prompting methods in a single request is one of the biggest signs you're moving into advanced prompt engineering. This layered strategy lets you tap into the unique strengths of each technique to tackle really specific or complex jobs.

For example, you could start a prompt with a Chain-of-Thought structure to walk the AI through a tough problem step-by-step. Then, within that same prompt, you could add a few-shot example to show it the exact output format you need, like a clean JSON object or a markdown table. It’s the ultimate combination of controlling how the AI thinks and what it produces.
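One way to picture that combination is a worked example that contains both the reasoning steps and the final formatted answer. The sketch below assembles such a prompt; the function name, the `Reasoning:` and `Answer:` labels, and the toy pen-counting example are all invented for illustration.

```python
import json

# A hypothetical worked example: reasoning steps (CoT) plus a JSON answer (few-shot format).
WORKED_EXAMPLES = [
    {
        "question": "A box holds 3 red pens and 2 blue pens. How many pens are there?",
        "steps": ["There are 3 red pens.", "There are 2 blue pens.", "3 + 2 = 5."],
        "answer": {"total": 5},
    },
]


def build_combined_prompt(task, worked_examples, question):
    """Show the reasoning and the output format by example, then pose the new question."""
    parts = [task, ""]
    for ex in worked_examples:
        parts.append(f"Q: {ex['question']}")
        parts.append("Reasoning:")
        parts.extend(f"- {step}" for step in ex["steps"])
        parts.append(f"Answer: {json.dumps(ex['answer'])}")
        parts.append("")
    parts.append(f"Q: {question}")
    parts.append("Reasoning:")
    return "\n".join(parts)


print(build_combined_prompt(
    "Solve each problem step by step, then give the answer as JSON.",
    WORKED_EXAMPLES,
    "A shelf holds 4 novels and 6 cookbooks. How many books are there?",
))
```

The example's steps model how to think, and its `Answer:` line models what to produce, so a single shot carries both techniques.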

If you want to go deeper, our guide on creating effective prompts for AI models gets into more of these advanced strategies.

How Many Examples Should I Use for Few-Shot Prompting?

There’s no perfect number, but the sweet spot is usually between two and five solid examples. The main goal here is to give the AI a clear, consistent pattern to mimic without cluttering up your prompt.

The secret is to focus on the quality and relevance of your examples, not the quantity. A few really good examples will always beat a dozen sloppy ones. Too few, and the AI might miss the pattern; too many, and you risk confusing it or watering down your main instruction.

Will Prompt Engineering Remain a Valuable Skill?

Without a doubt. As AI models get smarter and more powerful, the ability to communicate with them clearly and effectively becomes even more critical. Prompt engineering is quickly moving beyond just writing simple commands—it's about designing entire conversations and workflows between humans and AI.

Think of it as a fundamental skill for the modern workplace. As AI becomes a standard tool in nearly every field, the people who know how to get the most out of it will have a serious advantage. It's the key to unlocking what these systems are truly capable of.
