A Practical Guide to System Prompts and Models of AI Tools

Ever wonder why some AI chats feel generic while others are incredibly helpful and on-task? The secret often isn't just what you ask—it's the system prompt working behind the scenes. Think of it as the AI's core job description or a permanent set of instructions that shapes its personality, rules, and purpose for an entire conversation.

The Hidden Engine Behind Every Great AI Conversation

While your questions guide the AI turn-by-turn, the system prompt is the foundation that keeps every response consistent and aligned with a specific goal. It's what turns a general-purpose AI into a specialized expert—whether that's a professional Python coder, a cheerful customer support agent, or a meticulous data analyst.

This powerful instruction set separates a fleeting user query from a persistent AI persona. Before the model even sees your first question, the system prompt has already told it how to behave, what tone to adopt, and which constraints to follow. Getting the hang of this relationship between system prompts and the AI models that use them is the key to getting reliable, high-quality results.
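As a rough sketch of how this separation looks in practice, many chat-style APIs accept a list of role-tagged messages with the system prompt sent ahead of any user turn. The function name `build_messages` and the prompt text below are illustrative assumptions; the exact request shape varies by provider, and some take the system prompt as a separate field instead.

```python
def build_messages(system_prompt, user_turns):
    """Return a role-tagged message list with the system prompt first.

    Illustrative only: the exact request shape varies by provider.
    """
    messages = [{"role": "system", "content": system_prompt}]
    for turn in user_turns:
        messages.append({"role": "user", "content": turn})
    return messages


messages = build_messages(
    "You are a meticulous data analyst. Answer concisely.",
    ["What does a median tell me that a mean does not?"],
)
for message in messages:
    print(f'{message["role"]}: {message["content"]}')
```

The system message is written once and rides along with every request, while the user messages change turn by turn.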

Why System Prompts Matter More Than Ever

In the early days of AI, interactions were much simpler. But today’s models are so powerful that they need clear direction to be truly useful. Without a solid system prompt, an AI can easily drift off-topic, forget its purpose, or give you inconsistent answers. A well-crafted prompt is like a rudder, steering the model's vast knowledge toward a specific, productive outcome.

This guide dives into the crucial connection between system prompts and the models behind AI tools. Understanding this link helps you:

- Create Consistency: Ensure the AI maintains the same persona, tone, and quality across long conversations.

- Improve Reliability: Set clear boundaries to reduce the chances of the AI generating incorrect or off-base information.

- Boost Efficiency: Get the output format and style you want on the first try, saving tons of time on edits and follow-up questions.

- Unlock Specialization: Transform a generalist AI into a focused tool for specific tasks, from writing marketing copy to creating technical documentation.

A system prompt is the strategic blueprint for an AI's behavior. It’s not just about telling the AI what to do; it’s about defining what it is for the duration of your interaction.

Here’s another way to think about it: a user prompt is like telling a chef what dish you want for dinner. A system prompt is like handing them an entire cookbook and explaining that they are a five-star French chef who specializes in rustic cuisine and never uses processed ingredients. That context fundamentally changes every single dish—or in this case, every AI response—they create.

When you learn to write that "cookbook" effectively, you gain precise control over your AI's performance.

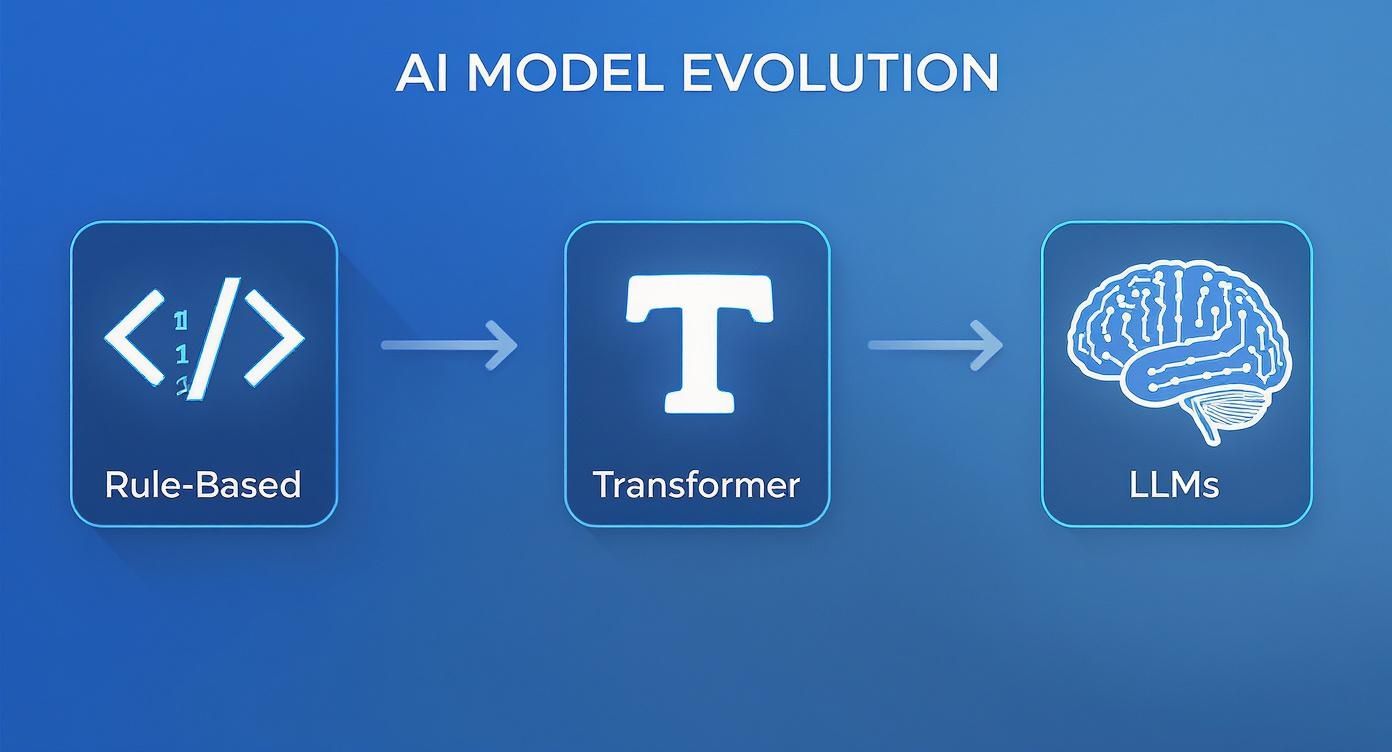

How We Got Here: A Brief History of AI Models

To really get a handle on what system prompts do, you have to understand the “brains” they’re steering. The AI models we use today didn't just appear overnight. Their journey was a slow burn of breakthroughs, with each step making them smarter and better at following the kind of complex instructions we pack into a system prompt.

Think back to the early days of AI. Everything was rule-based. You had simple chatbots that could only follow a handful of commands. If you typed "check balance," the bot worked. But if you asked, "How much money is in my account?", it was completely stumped. These systems were rigid and had zero ability to grasp nuance or context.

That inflexibility meant they were useless for the creative, open-ended work we now expect from AI. They were more like a calculator: fantastic at one specific job but incapable of learning or adapting beyond their programming. For AI to really move forward, it had to learn to see language as more than just a list of commands.

The Transformer Revolution

The big "aha!" moment came in 2017 with the invention of the transformer architecture. This wasn't just another step; it was a giant leap that completely reshaped the AI world and laid the groundwork for every modern language model. The secret sauce was a concept called "attention," which let the model weigh the importance of different words in a sentence, giving it a much deeper sense of context. You can explore the fascinating history of this AI evolution to learn more about how these models developed.

This was a fundamental shift. Instead of just reading words one after another, a transformer model could understand how words relate to each other across an entire document. It could finally figure out that in the sentence "The cat sat on the mat, and it was happy," the word "it" refers to the cat, not the mat.
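To make the idea of "weighing" words a little more concrete, here is a minimal sketch of scaled dot-product attention weights for a single query. The two-dimensional vectors are hand-made for illustration and are an assumption of this example; real models learn much larger vectors during training.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


# Hand-made 2-d vectors, chosen only so that "it" points toward "cat".
# Real models learn these vectors; nothing here comes from a trained model.
tokens = ["cat", "mat", "happy"]
keys = [[1.0, 0.2], [0.1, 1.0], [0.6, 0.5]]
query_for_it = [1.0, 0.0]

weights = attention_weights(query_for_it, keys)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.2f}")
```

With these toy vectors, the largest weight lands on "cat", which is the kind of signal a model can use to resolve what "it" refers to.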

That ability to understand context was the key that unlocked everything. It paved the way for models that could grasp intent, adopt a persona, and follow complex rules—the very things a system prompt is designed to control. Shortly after, in 2018, both OpenAI's GPT-1 and Google's BERT hit the scene, each using this new architecture to tackle different language challenges.

The transformer architecture gave AI the ability to see the forest, not just the trees. It allowed models to understand how words connect to create meaning, making them sophisticated enough to be guided by high-level instructions.

The Scaling Race and the Rise of LLMs

Once the transformer provided the engine, the next phase was all about fuel. Researchers quickly realized that making the models bigger, by feeding them more data and adding more internal parameters, made them dramatically more capable. This led directly to the era of Large Language Models (LLMs), the category of AI we're all familiar with today.

The jump from GPT-2 to GPT-3 is the perfect case study. GPT-2 was clever, but GPT-3’s sheer scale gave it a jaw-dropping range of new skills, from writing poetry to spitting out functional code. This race to scale is what created the powerful, do-it-all AI tools we now have at our fingertips.

These massive models are packed with potential, but all that knowledge makes them easy to distract. Without clear guardrails, they can "drift" all over the place. They're so capable that they need a firm hand to keep them on track, which is precisely why system prompts became so essential.

This history lesson shows us that system prompts aren't just a neat feature; they're the direct answer to a problem created by success. The more powerful the models became, the more we needed a sophisticated way to control them. The evolution of AI itself set the stage for the system prompt to become the single most important tool for directing and refining its incredible power.

Choosing the Right AI Model for Your System Prompt

You can’t just throw a system prompt at any AI model and expect the same result. The truth is, not all models "listen" to your instructions in the same way. The choice between OpenAI's GPT series, Anthropic's Claude, or Google's Gemini isn't about brand loyalty; it's a strategic decision that has a huge impact on how well your system prompts actually work.

Each model family has its own personality—its own quirks and strengths. You wouldn't use a wildly creative AI for a job that requires rigid, by-the-book safety compliance. Getting to know these differences is the key to matching the right AI to your specific goal.

The journey from older, rule-based AI to the flexible Large Language Models we have today shows just how far we've come.

This evolution is exactly why understanding the nuances of different models is so critical for making the most of system prompts across AI tools.

OpenAI's GPT Series: The Creative Powerhouse

When you need an AI that can brainstorm, generate fresh ideas, or chew on a complex problem, OpenAI’s GPT models, particularly GPT-4, are often the go-to. They’re known for their impressive creativity and solid general reasoning skills. These models are incredibly adaptable and can often figure out what you mean even if your prompt isn't perfectly polished.

But this creative streak can be a double-edged sword. GPT models have a tendency to "improvise," sometimes taking creative liberties that stray from your core instructions. To rein this in, your system prompts need to be crystal clear. Set firm boundaries and use techniques like few-shot examples to show it exactly what you want. You can find more practical tips in various articles discussing ChatGPT and how to manage its creative tendencies.

Anthropic's Claude Series: The Constitutional Expert

Anthropic built its Claude models with a core focus on safety, reliability, and following rules. They call their approach "Constitutional AI": the model is trained to follow a written set of foundational principles that guide its behavior. The result is an AI that's exceptionally good at following instructions to the letter.

This makes Claude the perfect choice for any task where precision and consistency are top priorities.

- Persona Adoption: If you define a specific persona in the system prompt, Claude will stick to it with impressive consistency, which is great for customer service bots or brand-aligned content.

- Rule Adherence: Give Claude a list of "do's and don'ts," and it's far less likely to go off-script. This is essential for compliance and safety-critical work.

- Structured Outputs: Need perfectly formatted JSON or XML? Claude is highly reliable for generating structured data exactly as you specify.

The trade-off? Claude can be a bit more cautious and less creatively spontaneous than a GPT model. Its behavior is predictable by design. Our guide to the different types of LLM dives deeper into these architectural differences.

Google's Gemini Series: The Multimodal Integrator

Google's Gemini models were designed from the ground up to be "natively multimodal." That’s a fancy way of saying they are built to seamlessly understand and work with text, images, audio, and video all at once. While other models can process different media types, Gemini's architecture was specifically built for it.

This makes Gemini a beast when your system prompts require interpreting or generating content that mixes different media. For instance, you could ask it to create social media copy from a video transcript or write a product description based on a photo. Its performance strikes a strong balance between speed and capability, making it a versatile and powerful option.

To make it even clearer, here’s a quick rundown of how these models stack up when it comes to system prompts.

AI Model Behavior with System Prompts

| Model Family | Primary Strength | Best Use Case for System Prompts | Common Behavior |
|---|---|---|---|
| OpenAI GPT | Creativity & Reasoning | Complex problem-solving, brainstorming, and generating diverse content formats. | Highly adaptable and can infer intent, but may "improvise" if boundaries aren't firm. |
| Anthropic Claude | Adherence & Safety | Maintaining a consistent persona, enforcing strict rules, and generating structured outputs. | Exceptionally good at following instructions but can be less creatively spontaneous. |
| Google Gemini | Multimodality | Tasks involving mixed media, like generating text from images or summarizing video content. | Balances speed and capability well, built to integrate different data types seamlessly. |

Ultimately, picking the right model depends entirely on what you're trying to accomplish. Each has a distinct personality, and understanding that is the first step to getting the results you want.

The growth in model complexity is staggering. When GPT-2 was released in 2019, it had 1.5 billion parameters. Then GPT-3 arrived in 2020 with 175 billion parameters, a jump of more than 116 times that translated directly into better performance.
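The size of that jump is easy to check from the two parameter counts quoted above. The variable names are just for illustration.

```python
gpt2_parameters = 1_500_000_000      # 1.5 billion
gpt3_parameters = 175_000_000_000    # 175 billion

ratio = gpt3_parameters / gpt2_parameters
print(f"GPT-3 has about {ratio:.1f}x as many parameters as GPT-2")
```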

Choosing the right model is like picking the right tool for a job. A hammer is great for nails, but not for screws. Similarly, matching your task requirements to a model's inherent strengths will dramatically improve your results.

How to Write System Prompts That Actually Work

Crafting a system prompt that gets you the right results isn't about writing a single perfect command. It's much more like building a detailed job description for your AI. A truly effective prompt layers instructions together, starting with a broad persona and then getting into specific rules and how you want the final output to look. This structured approach is what turns a general-purpose AI into a specialist tool you can rely on.

Think of it like building with LEGOs. You start with a solid base, then add bricks one by one until your creation matches what you had in your head. Every piece of the prompt has a job, and when they all work together, they guide the AI toward a predictable, high-quality result. Let's walk through the essential layers for building prompts that consistently deliver.

Start with a Clear Persona and Role

First things first: give your AI a job title. A vague instruction like "help me with my code" just won't cut it. Defining a clear persona right at the start sets the entire tone and context for how the AI will behave.

Instead of being generic, get specific:

- Weak Prompt: "Be a helpful assistant."

- Strong Prompt: "You are a Senior Python Developer with 10 years of experience specializing in data analysis with the Pandas library. You write clean, efficient, and well-documented code."

See the difference? This simple change immediately anchors the AI’s knowledge and style. It knows who it's supposed to be, what its expertise is, and how it should talk to you. Giving it a role is the foundation for getting the behavior you want, whichever model or AI tool sits behind your system prompt.

Layer on Explicit Rules and Constraints

Okay, the persona is set. Now it’s time to lay down the ground rules. This is where you tell the AI not just what to do, but just as importantly, what not to do. Setting explicit constraints is critical for stopping the model from going off on its own tangent or generating stuff you don't want.

A great system prompt is as much about what you forbid as what you ask for. Setting clear boundaries is the key to preventing "persona drift" and ensuring the AI stays on track throughout a conversation.

Here are a few examples of rules that work well:

- Do not use overly technical jargon unless specifically asked.

- Always provide code examples in fenced code blocks.

- Never apologize for not being a human.

- Prioritize clarity and conciseness in all explanations.

Think of these as the rules of the game. They keep the AI playing within the boundaries you’ve defined.
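Some rules can be spot-checked mechanically once you have a response in hand. The sketch below is one simple way to do that, assuming a rule can be reduced to a list of phrases that should never appear; the function name and the phrases are hypothetical, and a plain substring match will miss paraphrases.

```python
def find_violations(response, forbidden_phrases):
    """Return the forbidden phrases that appear in a response (case-insensitive)."""
    lowered = response.lower()
    return [phrase for phrase in forbidden_phrases if phrase.lower() in lowered]


# Hypothetical phrases standing in for a "never apologize for not being a human" rule.
forbidden = ["as an ai", "i'm sorry, but i am not human"]

reply = "As an AI, I can't taste food, but here is the recipe."
print(find_violations(reply, forbidden))
```

A check like this works best as a quick signal while you iterate on the prompt, not as proof that a rule is being followed.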

Provide Context and Define the Output Format

With the persona and rules in place, the next layer is to provide any necessary context and spell out the exact format for the output. This is one of the most powerful things you can do to get consistent, machine-readable results. Don't just ask for information; tell the AI precisely how to structure it. For a deeper look into structuring requests, our guide on the different types of prompting techniques offers more detail.

For instance, if you need structured data, be crystal clear:

- Specify JSON: "Provide your output as a valid JSON object with the keys 'summary', 'key_points', and 'action_items'."

- Request Markdown: "Format your response using Markdown. Use H2 headings for main sections and bullet points for lists."
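If you ask for JSON, it helps to verify that you actually got it before passing the result downstream. Here is a minimal sketch using Python's standard `json` module and the three keys from the example instruction above; the function name and the sample reply are made up for illustration.

```python
import json

REQUIRED_KEYS = {"summary", "key_points", "action_items"}


def parse_structured_reply(raw_reply):
    """Parse a model reply as JSON and confirm the required keys are present.

    Raises ValueError if the reply is not a JSON object or lacks a key.
    """
    try:
        data = json.loads(raw_reply)
    except json.JSONDecodeError as error:
        raise ValueError(f"Reply is not valid JSON: {error}") from error
    if not isinstance(data, dict):
        raise ValueError("Reply must be a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"Reply is missing keys: {sorted(missing)}")
    return data


# A made-up reply, standing in for real model output.
sample_reply = '{"summary": "Q3 review", "key_points": ["Sales up"], "action_items": []}'
print(parse_structured_reply(sample_reply)["summary"])
```

When validation fails, the error message tells you whether to tighten the format instruction or add an example of the expected shape.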

Here's a pro-tip: try using XML tags to cleanly separate the parts of your prompt, like <rules>, <persona>, and <output_format>. This little trick helps the model see the distinct instructions and process them more accurately. To find practical examples and inspiration for crafting effective system prompts, it can be beneficial to explore a comprehensive ChatGPT prompts database. When you combine these methods—persona, rules, and formatting—you can build incredibly robust system prompts that make AI tools work exactly the way you want them to.
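One way to keep the three layers tidy is to assemble the prompt from separate pieces. This is a minimal sketch, assuming the tag names mentioned above; `build_system_prompt` is a hypothetical helper, not part of any library.

```python
def build_system_prompt(persona, rules, output_format):
    """Assemble a system prompt from three layers, each wrapped in an XML-style tag."""
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    return (
        f"<persona>\n{persona}\n</persona>\n\n"
        f"<rules>\n{rule_lines}\n</rules>\n\n"
        f"<output_format>\n{output_format}\n</output_format>"
    )


prompt = build_system_prompt(
    persona="You are a Senior Python Developer specializing in data analysis with Pandas.",
    rules=[
        "Always provide code examples in fenced code blocks.",
        "Prioritize clarity and conciseness in all explanations.",
    ],
    output_format="Format your response using Markdown with H2 headings for main sections.",
)
print(prompt)
```

Keeping each layer as its own argument makes it easy to change one part, such as the rules, without touching the others.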

Troubleshooting Common System Prompt Problems

Even the most carefully written system prompt will have its off days. You'll inevitably run into moments where the AI forgets its persona, ignores a key instruction, or spits out content in a completely wrong format. These are just part of the game when you're working with AI, but the good news is, almost every issue is fixable.

The first step is figuring out why it's happening. The model isn't being disobedient; it's usually just confused. Your instructions might be clashing with each other, be too vague, or have simply been pushed out of its memory during a long chat. Once you learn to spot these patterns, you can get to the root of the problem and fine-tune your prompts for much more reliable results.

This kind of hands-on skill is becoming essential. When ChatGPT exploded onto the scene in late 2022 and reached an estimated 100 million users in about two months, it changed everything. This massive adoption of AI has sped up innovation like crazy, making prompt engineering a crucial skill for anyone trying to get consistent value from these tools. You can learn more about the AI development timeline and its impact to see how quickly things are moving.

Diagnosing Persona Drift and Instruction Ignoring

One of the most common headaches is persona drift. You tell the AI to be a "Senior Data Analyst," and it starts out great, but after a few exchanges, it slips back into being a generic, friendly chatbot. Another classic problem is when the model flat-out ignores a negative constraint, like when you explicitly say, "Do not mention pricing."

So, what gives? It usually boils down to one of these culprits:

- Instruction Overload: Your system prompt is a wall of text with too many commands, and the model is losing the plot.

- Conflicting User Prompts: The questions you ask during the conversation might accidentally contradict or undermine your original instructions.

- Context Window Limits: In a really long conversation, the initial system prompt can get crowded out of the model's context window, or simply carry less weight as newer text piles up. Either way, the model loses track of what it was supposed to be doing.

The fix is almost always to simplify and reinforce. Go back to your prompt and look for ways to make your instructions punchier and more direct.

Troubleshooting Tip: If a model is ignoring an instruction, your first instinct might be to add more words to clarify. Don't. The better move is usually to make the existing instruction shorter and clearer.

For instance, instead of writing a whole paragraph to describe a persona, try using a simple bulleted list of its key traits. This gives the model a quick-reference guide it can easily check throughout the conversation.
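For the context-window culprit in particular, a common mitigation when you control the application is to trim old turns while always keeping the system prompt. The sketch below assumes a role-tagged message list and uses word count as a crude stand-in for a real tokenizer; both function names are hypothetical, and whether a given tool already pins the system prompt depends on that tool.

```python
def estimate_tokens(text):
    """Very rough token estimate: one token per whitespace-separated word."""
    return len(text.split())


def trim_history(messages, max_tokens):
    """Keep the system message plus the newest turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]

    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    for message in reversed(turns):  # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are a Senior Data Analyst."},
    {"role": "user", "content": "first question about sales"},
    {"role": "assistant", "content": "first answer about sales"},
    {"role": "user", "content": "latest question about churn"},
]
trimmed = trim_history(history, max_tokens=14)
print([m["content"] for m in trimmed])
```

The oldest turn is dropped first, so the persona and rules survive even when the conversation outgrows the budget.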

Strategies for Reinforcing Your Instructions

When your AI starts to wander, you need a few go-to moves to pull it back on track. It's all about iterating—make a small change, see how the model reacts, and build from there.

Here are three practical ways to reinforce your prompts:

- Simplify and Prioritize: Figure out the single most important instruction. Is it the persona? The output format? Put that instruction right at the top and rephrase it to be crystal clear. Trimming the fluff often solves the problem instantly.

- Use Explicit Formatting: Don't be afraid to structure your prompt like a document. Use clear headings or even XML tags like <persona>, <rules>, and <output_format>. This helps the model organize the information and understand the different parts of its job.

- Incorporate "Few-Shot" Examples: This one is a game-changer. Instead of just telling the model what to do, show it. Include a quick example of a user's question and the perfect response right inside your system prompt. It's one of the most effective ways to nail the exact style and format you're after.

By using these troubleshooting techniques, you can start to methodically debug your system prompts on whichever model you use. Every "mistake" the AI makes is really just an opportunity to learn, making you a more skilled and confident prompt engineer who can get great results from any model.

How to Organize and Scale Your Prompt Library

So, you’ve gotten good at writing system prompts for different AI models. That's great! But you’ve probably already run into the next big challenge: keeping them all straight.

At first, a simple notes app or a Google Doc seems like enough. But as you build more prompts for more projects, that "simple" system quickly turns into a digital junk drawer. It's a mess.

This isn't just about being untidy. Scattered prompts cause real problems. You forget which version of a prompt gave you the best results. Your team members accidentally use old, inconsistent instructions. You end up reinventing the wheel every time a new task comes up. Trying to scale your AI work without a system is a recipe for frustration.

When your prompts are all over the place, your AI's output will be, too. The reliability you worked so hard to achieve disappears. A messy library also makes it impossible to properly compare how different system prompts and AI models perform, which means you're leaving a ton of valuable insights on the table.

Building a Centralized Prompt Hub

The answer is to start treating your prompts like the valuable assets they are. You need a centralized library, and a dedicated platform like Promptaa is built for exactly this. It helps you move your prompts out of scattered notes and into an organized, collaborative system that can actually grow with you.

A proper prompt library gives you some serious advantages:

- Version Control: You can easily track every change, see what worked, and roll back to a previous version of a prompt. No more guessing which one was the "good one."

- Team Collaboration: Everyone on your team can access the same high-quality, approved prompts. This creates incredible consistency across all your work.

- Performance Testing: Run the same prompt through different models—like GPT-4 vs. Claude 3—and compare the outputs side-by-side to see what works best.

- Organized Structure: Group your prompts by project, department, model, or task. Finding what you need takes seconds, not minutes of searching.

Taking a systematic approach here is the difference between just playing around with AI and truly deploying it as a strategic tool. A well-organized library becomes a force multiplier, speeding up development and locking in quality.
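Side-by-side testing can be sketched without committing to any one vendor. In the example below, each model is just a callable that takes a system prompt and a user prompt; the two stub functions are placeholders invented for this sketch, and you would swap in real API calls.

```python
def compare_models(system_prompt, user_prompt, models):
    """Run one prompt pair through each model callable and collect the outputs."""
    return {
        name: call(system_prompt, user_prompt)
        for name, call in models.items()
    }


# Stub callables standing in for real API clients; swap in actual calls as needed.
def fake_model_a(system_prompt, user_prompt):
    return f"[model-a] {user_prompt.upper()}"


def fake_model_b(system_prompt, user_prompt):
    return f"[model-b] {user_prompt.lower()}"


results = compare_models(
    "You are a concise technical writer.",
    "Summarize the release notes.",
    {"model-a": fake_model_a, "model-b": fake_model_b},
)
for name, output in results.items():
    print(f"{name}: {output}")
```

Because every model sees the identical prompt pair, any difference in the outputs comes from the model and not from the wording.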

How to Structure Your Prompt Library

Getting started is pretty straightforward. Think about the way your team already works. A logical structure is key to keeping your library from becoming another messy folder down the road.

A simple folder-based system with clear naming conventions is a great place to start. For instance, you could create top-level folders for "Marketing," "Engineering," and "Customer Support." Inside those, you can create subfolders for specific projects or common tasks.

This simple act of organizing keeps related prompts together and makes them dead simple to find. A well-structured library is the foundation for scaling your AI workflows. It lets your entire team build on what works instead of constantly starting from scratch.
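If you start with plain folders, a small helper can enforce the naming convention for you. This is a minimal sketch using Python's standard `pathlib`; the department/project/name_vN layout and the `save_prompt` function are assumptions for illustration, not a required structure.

```python
import tempfile
from pathlib import Path


def save_prompt(root, department, project, name, version, text):
    """Write a prompt to <root>/<department>/<project>/<name>_v<version>.txt."""
    folder = Path(root) / department / project
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{name}_v{version}.txt"
    path.write_text(text, encoding="utf-8")
    return path


# A temporary directory keeps the example from touching real files.
with tempfile.TemporaryDirectory() as root:
    saved = save_prompt(
        root, "Marketing", "spring-launch", "ad-copy-persona", 2,
        "You are a brand copywriter. Keep every headline under ten words.",
    )
    relative = saved.relative_to(root)
    print(relative.as_posix())
```

Putting the version in the file name means older prompts stay on disk, so you can always see which one was the "good one."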

Got Questions About System Prompts? Let's Clear Things Up.

As you start working more with system prompts and different AI models, you're bound to have some questions. It’s totally normal. Getting a handle on these ideas is one thing, but sometimes you just need a straight answer to get unstuck.

Let's walk through some of the most common questions we hear. We'll cut through the confusion and give you practical answers to help you build and manage your prompts like a pro.

What's the Real Difference Between a System Prompt and a User Prompt?

Here’s a simple way to think about it: the system prompt is the AI's permanent job description for the entire chat. It lays down the ground rules, establishes the persona, and defines its main purpose before you even type your first message. It’s the behind-the-scenes director.

A user prompt, on the other hand, is your specific question or command in the moment. Each time you send a message, that’s a user prompt. The system prompt is always there in the background, shaping how the AI responds to every single one of your user prompts.

Why Does My AI Seem to Forget Its Instructions Midway Through a Chat?

Ah, the classic case of "instruction drift." It's a common and frustrating problem, and it usually happens for a couple of reasons. If a conversation gets really long, the original system prompt can get pushed out of the AI's limited short-term memory, which we call its context window.

Sometimes, a particularly complex user request can also confuse the AI and cause it to temporarily ignore its original instructions. To get things back on track, you can try re-stating the core rules in your next user prompt or just start a new chat to give it a fresh slate.

A lot of people think AI models have a memory like ours, but they don't. Their "memory" is just the text currently fitting inside the context window. Once information scrolls out of that window, it's gone for good.
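Re-stating the core rules in your next message can be done with a one-line helper. The sketch below simply prefixes a user message with a short reminder; the function name and the reminder wording are hypothetical.

```python
def with_reminder(user_message, core_rules):
    """Prefix a user message with a short restatement of the core rules."""
    reminder = "Reminder of the ground rules:\n" + "\n".join(
        f"- {rule}" for rule in core_rules
    )
    return f"{reminder}\n\n{user_message}"


core_rules = ["Stay in the Senior Data Analyst persona.", "Do not mention pricing."]
print(with_reminder("Can you summarize last quarter's churn?", core_rules))
```

Keep the reminder to the two or three rules that matter most, since a long reminder adds to the very clutter that caused the drift.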

Should I Bother Making Different System Prompts for Different AI Models?

Yes, you absolutely should. While you can often get decent results with a single prompt across models like GPT, Claude, and Gemini, you'll leave a lot of performance on the table. Each model has its own quirks and strengths.

Tailoring your prompt to the model you're using is key to getting the best possible output. For instance:

- Claude: Loves clear, explicit rules. It’s great at following strict instructions, so spell things out.

- GPT: It's incredibly creative. Lean into that, but give it firm guardrails to keep it focused.

- Gemini: Excels at tasks that mix different types of media. Craft prompts that ask it to analyze text and images together.
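One lightweight way to tailor prompts is to keep a shared base and append a short model-specific note. The sketch below assumes three model families and uses made-up addenda based on the tendencies described above; none of the wording is vendor guidance.

```python
BASE_PROMPT = "You are a support agent for a project-management app."

# Hypothetical per-family additions, based on the tendencies described above.
MODEL_ADDENDA = {
    "claude": "Follow every rule below exactly as written.",
    "gpt": "Be creative with wording, but never go beyond the rules below.",
    "gemini": "When an image is attached, describe it before answering.",
}


def prompt_for(model_family):
    """Return the base prompt plus the addendum for a model family, if any."""
    addendum = MODEL_ADDENDA.get(model_family.lower())
    return BASE_PROMPT if addendum is None else f"{BASE_PROMPT}\n{addendum}"


print(prompt_for("Claude"))
```

An unknown model family falls back to the base prompt, so adding a new model never breaks existing callers.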

Can I Put Examples Directly into a System Prompt?

Not only can you, but you definitely should! This is a game-changing technique known as few-shot prompting.

Giving the AI a few clear examples of what you want—showing it the kind of input it will get and the exact output you expect—is one of the most effective ways to improve accuracy. It works wonders for getting structured data like JSON or nailing a very specific tone of voice. Your results will be far more consistent.
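Here is a minimal sketch of what few-shot examples inside a system prompt can look like. The helper name, the "Example N / User / Assistant" layout, and the sample messages are all assumptions for illustration; any consistent layout should work.

```python
def add_few_shot_examples(system_prompt, examples):
    """Append worked input/output pairs to a system prompt."""
    blocks = [
        f"Example {index}\nUser: {question}\nAssistant: {answer}"
        for index, (question, answer) in enumerate(examples, start=1)
    ]
    return system_prompt + "\n\nExamples:\n\n" + "\n\n".join(blocks)


# Made-up examples showing the exact JSON shape the assistant should return.
examples = [
    ("The app crashes on login.", '{"category": "bug", "urgency": "high"}'),
    ("Could you add dark mode?", '{"category": "feature", "urgency": "low"}'),
]
prompt = add_few_shot_examples(
    "Classify each support message and reply with JSON only.", examples
)
print(prompt)
```

Two or three examples are usually enough to pin down the format, and each one you add also uses up space in the context window.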

Tired of managing prompts in spreadsheets and text files? Promptaa gives you a central command center to organize, test, and share your prompts. Get your whole team on the same page and produce consistent, high-quality results from any AI model. See how it works at https://promptaa.com.