Your Guide to Qwen2.5-Omni-7B Multimodal AI

What if you could have an AI that not only reads text but also sees images and hears audio, all packed into a model compact enough to run on a single capable GPU instead of a data center? That’s exactly what Qwen2.5-Omni-7B brings to the table. It’s a significant step toward practical multimodal AI, squeezing text, vision, and sound processing into a nimble 7-billion-parameter model.

A New Era for Multimodal AI

In a world where AI models are often gigantic and power-hungry, Qwen2.5-Omni-7B is a breath of fresh air. It's built to put sophisticated AI tools into the hands of more developers, creators, and tinkerers—people who don’t have access to a supercomputer.

This isn’t just another chatbot. It's a true multimodal AI: think of it as giving an AI a set of eyes and ears to go along with its brain. Instead of just reading words on a screen, it can look at a photo, listen to a conversation, and understand how they relate. You could show it a picture of your dog, ask "What breed is he?" out loud, and it would use both the image and your spoken words to answer.

What Makes This Model Different

The creation of Qwen2.5-Omni-7B is part of a bigger movement toward building AI that is both powerful and efficient. Its relatively small size, with 7 billion parameters at its core, is its defining feature. That makes it far more realistic to run on local hardware, such as a single machine with a capable GPU, than the giant models that need a data center.

This practicality is a big deal for building real-world applications. As models like this become more common, AI software development is weaving these tools directly into the products we use every day.

Here’s why this approach matters:

- Efficiency: It doesn't demand massive computational resources, which helps keep costs and energy use down.

- Accessibility: Anyone with a reasonably capable GPU can start experimenting and building with it.

- Versatility: The ability to juggle different types of data opens the door to all sorts of creative and helpful applications.

The real magic is its unified, end-to-end design. A single model takes in text, images, audio, and video and can respond with text or natural-sounding speech, streaming its answer in real time. That combination raises the bar for what a deployable multimodal AI can do.

Paving the Way for New Applications

In this guide, we're going to pull back the curtain on the Qwen2.5-Omni-7B model. We'll explore its architecture, see how it stacks up against the competition, and get our hands dirty with some real-world examples. The possibilities are huge, from smarter voice assistants to innovative accessibility tools. By the time you're done reading, you’ll have a solid grasp of what this model can do and why it’s making advanced AI more approachable than ever.

How Qwen2.5-Omni-7B’s Architecture Actually Works

So, how does a relatively small 7-billion-parameter model manage to understand text, images, and sound all at once? The secret isn't brute force; it's a really clever design. Qwen2.5-Omni-7B was built from the ground up to be a true multimodal thinker, not just a text model with extra senses bolted on.

At its core, it runs on a transformer architecture, the same fundamental technology behind most of today's top-tier AI systems and the engine for its reasoning and language generation. What makes Qwen special are the components built around that foundation: dedicated encoders for vision and audio, and a speech-generation module that lets it talk back.

The whole design philosophy revolves around efficiency. Those 7 billion parameters are the learned weights that encode what the model knows, loosely comparable to the connections between neurons in a brain. There are enough of them to tackle complex tasks, but not so many that the model inherits the heavy resource demands of much larger models.

The Thinker and The Talker

To pull off its impressive conversational skills, Qwen2.5-Omni-7B uses a smart structure called the Thinker-Talker architecture. It’s a simple but brilliant way to divide the work of understanding and responding.

- The Thinker: This is the brains of the operation. It takes in all the information (text, images, audio, and video), makes sense of it, figures out how the different streams connect, and forms a high-level understanding of what you're asking. Then it generates the text of the answer.

- The Talker: This part is like the mouth. It takes the Thinker's high-level representations and the text it produces and turns them into natural-sounding speech. Splitting the jobs this way keeps speech generation from interfering with the Thinker's text output, so neither the thinking nor the speaking has to be compromised.

This dual-system approach is what makes real-time interactions feel so fluid. The Talker can start producing speech as soon as the Thinker has generated the first part of its answer, rather than waiting for the full response, which dramatically cuts down on lag.
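To make the latency point concrete, here is a toy sketch in plain Python. It is not Qwen's implementation: `thinker` and `talker` are hypothetical stand-ins, words play the role of tokens, and strings like `<audio:...>` stand in for synthesized speech. What it does show is the pipelining idea: the Talker consumes tokens as the Thinker emits them, so the first piece of "speech" is ready after two tokens rather than after the whole reply.

```python
from typing import Iterator, List

produced: List[str] = []  # records each token at the moment the Thinker emits it

def thinker(reply: str) -> Iterator[str]:
    """Toy Thinker (hypothetical): emits the reply one word ("token") at a time."""
    for token in reply.split():
        produced.append(token)
        yield token

def talker(tokens: Iterator[str], chunk_size: int = 2) -> Iterator[str]:
    """Toy Talker (hypothetical): turns every `chunk_size` tokens into an "audio"
    chunk as soon as they arrive, instead of waiting for the full reply."""
    buffer: List[str] = []
    for token in tokens:
        buffer.append(token)
        if len(buffer) == chunk_size:
            yield f"<audio:{' '.join(buffer)}>"
            buffer = []
    if buffer:  # flush the final partial chunk
        yield f"<audio:{' '.join(buffer)}>"

# The Talker pulls tokens lazily, so speech can start before the reply is finished
stream = talker(thinker("That looks like a golden retriever puppy"))
first_chunk = next(stream)
tokens_before_first_audio = len(produced)  # only 2 of the 7 tokens exist so far
remaining = list(stream)
print(first_chunk, tokens_before_first_audio, remaining)
```

In the real model the Talker works from the Thinker's hidden representations rather than from plain words, but the latency benefit comes from the same streaming structure.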

Making Sense of Different Data Streams

One of the biggest hurdles in multimodal AI is getting a model to connect different types of data. How does it learn that the sound of a bark relates to the image of a dog? Qwen2.5-Omni-7B solves this by translating everything into a common mathematical language.

Dedicated encoders turn an image's pixels and an audio clip's waveform (first converted into a spectrogram) into numerical representations known as embeddings, the same kind of vectors the model already uses for text. For a deeper look at how models represent complex data this way, see our guide, What Are Embeddings in AI.

Once all the inputs are in this unified format, the model can spot patterns and relationships that would otherwise be hidden. This is how it can watch a video and answer a spoken question about it, seamlessly weaving sight and sound together.

The model's architecture isn't just about processing different inputs; it's about creating a unified understanding. It learns the deep connections between words, images, and sounds, allowing it to reason across them as a single, cohesive intelligence.
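Here is a minimal sketch of the "common language" idea, with made-up sizes and random placeholder weights. Each modality's encoder output has its own width. A per-modality linear projection (hypothetical here, learned in a real model) maps it to the shared width used by the text embeddings, and the results are concatenated into one sequence the transformer can attend over. The actual model also uses a time-aligned position encoding (TMRoPE) so that audio and video frames from the same moment line up; this toy leaves that out.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]

HIDDEN = 4  # toy shared width; the real model's hidden size is far larger

def random_matrix(rows: int, cols: int, seed: int) -> Matrix:
    """Placeholder projection weights (a real model learns these)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def project(features: List[Vector], weights: Matrix) -> List[Vector]:
    """Linearly map each feature vector (len(weights) wide) to HIDDEN dims."""
    return [
        [sum(f[i] * weights[i][j] for i in range(len(f))) for j in range(HIDDEN)]
        for f in features
    ]

# Toy encoder outputs with a different native width per modality
image_patches = [[0.1, 0.2, 0.3, 0.4, 0.5, 0.6]] * 3  # 3 patches, 6 dims each
audio_frames = [[0.9, 0.8, 0.7]] * 2                   # 2 frames, 3 dims each
text_tokens = [[0.0, 1.0, 0.0, 1.0]] * 4               # 4 tokens, already HIDDEN wide

image_emb = project(image_patches, random_matrix(6, HIDDEN, seed=0))
audio_emb = project(audio_frames, random_matrix(3, HIDDEN, seed=1))

# One unified sequence: every position now has the same width
sequence = image_emb + audio_emb + text_tokens
print(len(sequence), {len(v) for v in sequence})
```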

Training on a Truly Massive Scale

The architecture is only one piece of the puzzle. What the model learned during training is just as important. Qwen2.5-Omni-7B is released under the permissive Apache 2.0 license, and it builds on an enormous amount of training data.

Its language backbone comes from Qwen2.5, which was pretrained on roughly 18 trillion tokens of text. Multimodal training then added about 800 billion tokens of image and video data and 300 billion tokens of audio data. This massive training diet is what allowed it to achieve top-tier results on specialized benchmarks and become such a powerful tool for developers.

This diverse dataset is the fuel for its architectural engine, giving it the deep, nuanced knowledge it needs to understand the real world.

Exploring Core Multimodal Capabilities

The real magic of Qwen2.5-Omni-7B isn’t just that it handles different types of data, but how it weaves them together into a single, cohesive understanding. It doesn't just see, hear, or read in a silo. It connects these senses all at once, perceiving the world much like we do.

This ability to reason across different modalities is what truly sets it apart and opens up a whole new world of practical uses.

Let's break down its three primary talents—text, vision, and audio—with some real-world examples to show you what this model can actually do. We'll look at each sense on its own before exploring how it combines them for even more impressive results.

Text Understanding and Generation

At its core, Qwen2.5-Omni-7B is an expert with language. It's built on a powerful language model trained on a mind-boggling amount of text, so it's a natural at traditional text-based tasks. But we're talking about much more than just answering simple questions.

For instance, you could feed it a dense technical paper and ask for a summary for someone who isn't an expert. The model can cut through the jargon, pull out the key arguments, and rephrase them in simple, clear language. It's also great at creative writing, generating code, and following complex, multi-step instructions given only in text. This solid linguistic foundation is the bedrock for all its other senses.

Advanced Vision Capabilities

This is where things get really interesting. Qwen2.5-Omni-7B can analyze and interpret images in considerable detail. Think of it as a descriptive partner that looks at the same picture you do and explains what it sees.

Imagine you upload a photo of a bustling city street. You could ask it:

- "Describe the overall atmosphere of this scene." It might point out the bright sunlight, hurried pedestrians, and mix of architecture to tell you the mood feels vibrant and energetic.

- "Count the number of bicycles visible in the picture." The model can scan the image to identify and tally specific objects, showing off its precise object recognition skills.

- "What potential traffic hazards do you see?" It could highlight a person stepping off a curb or a car too close to a cyclist, demonstrating its ability to reason about how objects in the scene relate to one another.

This goes way beyond simple image tagging. The model understands context, spatial relationships, and even what’s implied in a picture, turning a static image into a rich source of information.

Sharp Audio Interpretation

The model’s "ears" are just as impressive as its eyes. It can process raw audio to transcribe, translate, and analyze sounds with high accuracy, even in messy, real-world conditions.

Take a recording from a noisy café. Qwen2.5-Omni-7B can transcribe the main conversation despite the background clatter of dishes and other chatter, and it can identify specific non-speech sounds, such as a "dog barking" or a "siren in the distance." That makes it useful for things like automated meeting summaries or audio content moderation.

The most significant breakthrough is not in any single capability, but in the model’s ability to fuse them together. It processes text, image, and audio inputs simultaneously to build a holistic understanding of a query, allowing for more natural and intelligent interactions.

This integrated approach is where the real power lies. You could show the model a chart from a business report (image), ask a question about it out loud (audio), and get a detailed explanation back in text. The model uses the visual data from the chart and the context from your spoken words to put together a complete answer.

It's this seamless fusion of senses that makes Qwen2.5-Omni-7B such a versatile and powerful tool.
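In code, the chart-plus-spoken-question request described earlier becomes a single chat turn whose `content` list mixes modalities. The sketch below builds that structure in the `{"type": ..., ...}` style used in the Qwen2.5-Omni model card examples. `build_turn` and the file names are hypothetical. In practice the turn would then go through the model's processor and chat template, and the model card pairs this format with a helper package, `qwen-omni-utils`, that loads the referenced files.

```python
from typing import Any, Dict, List, Optional

def build_turn(
    text: Optional[str] = None,
    image: Optional[str] = None,
    audio: Optional[str] = None,
) -> Dict[str, Any]:
    """Build one user turn mixing whichever modalities are supplied.

    Paths are placeholders; the item layout mirrors the chat format shown
    in the Qwen2.5-Omni model card examples.
    """
    content: List[Dict[str, str]] = []
    if image is not None:
        content.append({"type": "image", "image": image})
    if audio is not None:
        content.append({"type": "audio", "audio": audio})
    if text is not None:
        content.append({"type": "text", "text": text})
    if not content:
        raise ValueError("a turn needs at least one input")
    return {"role": "user", "content": content}

# Chart image plus a spoken question; the answer would come back as text
conversation = [build_turn(image="quarterly_chart.png", audio="question.wav")]
print(conversation)
```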

How Qwen2.5-Omni-7B Stacks Up Against the Competition

Specs on a page are one thing, but how a model performs in a real showdown is what truly matters. This is where Qwen2.5-Omni-7B really starts to impress. It’s a perfect example of a smaller, more agile model that can seriously punch above its weight class. By looking at standardized benchmarks, we get a clear, data-backed picture of how it stacks up against its peers.

Think of AI benchmarks as standardized tests for models. They throw a series of challenges at them, like picking out objects in a cluttered photo or understanding spoken commands, to measure their skills objectively. A high score is a useful signal, though not a guarantee, of better and more reliable performance in the real world. A strong showing on a visual test, for example, suggests the model will be more dependable for things like analyzing product images or moderating user-uploaded content.

A Standout in Multimodal Reasoning

One of the areas where Qwen2.5-Omni-7B really shines is on comprehensive multimodal tests. Benchmarks like OmniBench are built to see how well a model can recognize, interpret, and reason across visual, audio, and text inputs all at once. It's the ultimate test for a true "omni" model.

On these integrated tasks, Qwen2.5-Omni-7B achieved state-of-the-art results when it was released, often outperforming models that are much larger and more resource-hungry. This suggests its architecture isn't just a collection of separate skills bolted together, but a genuinely unified system that can connect the dots between what it sees, hears, and reads.

This strong performance in combined reasoning is a huge deal. It shows the model is more than the sum of its parts, excelling at tasks that require a deep, contextual grasp of mixed-media inputs—a key advantage in a crowded field.

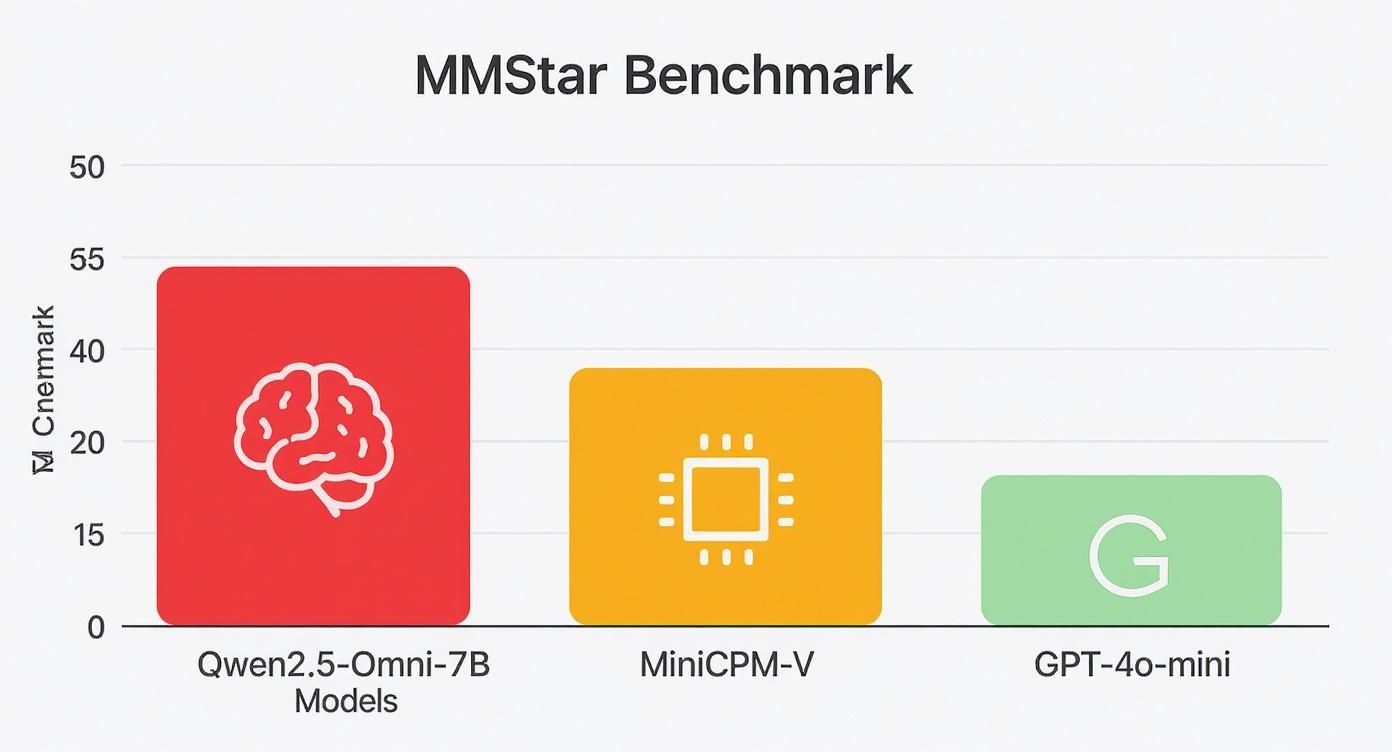

A Head-to-Head Comparison

To put things into perspective, here's how Qwen2.5-Omni-7B compares with some of the big names and its direct rivals on key benchmarks. Giant models like Gemini-1.5-Pro may have an edge in some areas thanks to sheer scale, but this compact model holds its own surprisingly well.

Qwen2.5-Omni-7B Performance vs Other AI Models

| Benchmark | Qwen2.5-Omni-7B | Gemini-1.5-Pro | GPT-4o-mini | MiniCPM-V |
|---|---|---|---|---|
| OmniBench (Overall) | State-of-the-art | High Performer | N/A | Competitive |
| MMStar (Image Reasoning) | 64% Accuracy | High Performer | 54.8% Accuracy | 64% Accuracy |
| MMSU (Spoken Language) | Top Open-Source | High Performer | N/A | N/A |
| MVBench (Video Understanding) | Excels | High Performer | Competitive | Competitive |
| Seed-TTS (Speech Generation) | Excellent | High Performer | Competitive | N/A |

Qwen2.5-Omni-7B is particularly impressive in image and speech tasks. On MMStar, an image-reasoning benchmark, it hit 64% accuracy, tying its direct competitor MiniCPM-V and finishing well ahead of OpenAI's GPT-4o-mini at 54.8%. It also took the top spot among open-source models on the MMSU benchmark for spoken language understanding, showing that a compact model can match, or even beat, much bigger systems on specific tasks.
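When reading the MMStar figures quoted above (64% vs. 54.8%), it helps to keep one detail straight. A gap can be stated in absolute percentage points or as a relative improvement, and the two sound quite different. A quick check of the arithmetic, using only those two published numbers:

```python
from typing import Tuple

def score_gap(a: float, b: float) -> Tuple[float, float]:
    """Return (absolute gap in percentage points, relative gap in percent)."""
    points = a - b
    return points, points / b * 100

points, relative = score_gap(64.0, 54.8)  # MMStar: Qwen2.5-Omni-7B vs GPT-4o-mini
print(f"{points:.1f} percentage points, or {relative:.1f}% relative")
# prints: 9.2 percentage points, or 16.8% relative
```

Both figures are correct. Just say which one you mean when you quote a comparison.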


What This Performance Means For You

These scores translate into real, tangible benefits for developers and creators. The model’s high marks in visual and audio benchmarks mean you can count on it to power applications that need to be accurate and dependable.

- For intelligent voice assistants: Its strong spoken language understanding means it can follow commands and answer questions with fewer mix-ups, even with some background noise.

- For content analysis: Its sharp visual reasoning makes it a fantastic choice for systems that need to analyze images for context, identify objects, or moderate visual content.

- For accessibility tools: The killer combo of excellent vision and speech generation makes it perfect for building apps that describe the world for visually impaired users.

At the end of the day, the benchmark performance tells a clear story. Qwen2.5-Omni-7B is a highly capable and efficient model that offers a balanced, powerful alternative to larger, closed-off systems. It gives you an accessible path to advanced multimodal AI without forcing a major trade-off in performance.

Practical Use Cases and Real-World Applications


While benchmark scores and technical specs are great on paper, the real measure of an AI model is what it can do in the wild. This is where Qwen2.5-Omni-7B stops being a concept and starts becoming a genuinely useful tool for creating and solving problems. Its ability to understand the world through multiple senses opens the door to a whole host of practical applications across countless industries.

A huge part of its appeal is its size. Because it's a compact and efficient model, developers can build powerful applications without needing a warehouse full of expensive hardware. That makes it a solid foundation for nimble, cost-effective AI agents that can run on local machines rather than relying entirely on the cloud, putting advanced AI more directly into users' hands.

Smarter Customer Interaction and Support

Customer service is one of the most obvious places it can make an immediate impact. Imagine a support system where a customer can simply show a broken product on video, explain the problem out loud, and get an instant, helpful response. Qwen2.5-Omni-7B can process the visual data from the video and the spoken context from the audio to give troubleshooting steps that are miles ahead of a text-only chatbot.

This creates a much more natural and less frustrating experience for everyone. The AI can pick up on the nuance in a customer's tone and see exactly what they're seeing, which means faster solutions and happier customers.

A Big Leap for Accessibility and Learning

The model’s talent for both vision and speech is a game-changer for accessibility tools. It can power apps that help visually impaired users navigate their environment by giving real-time audio descriptions of what a device's camera sees. Think of it describing a room, reading a restaurant menu, or identifying a person walking toward you.

In education, it could be used to build truly interactive learning experiences. For instance, a student could show the model a complex diagram from a textbook and ask questions about it, getting a clear, verbal explanation in return. This multimodal approach works for different learning styles and makes tough subjects feel a lot more approachable.

The chart below gives you an idea of how its visual reasoning skills stack up against other leading models, as measured by the MMStar benchmark.

This strong performance in visual tasks is exactly why it’s so well-suited for applications that need to interpret the world through a camera.

Advanced Content Creation and Moderation

For content creators, Qwen2.5-Omni-7B can be a powerful creative partner. It can analyze raw video footage to suggest edits, generate descriptive captions based on both sound and visuals, or even help brainstorm new ideas by interpreting a mood board of images.

This knack for understanding context also applies to content moderation. Instead of just flagging keywords, the model can analyze an image or video clip along with its caption to make a much more informed decision. This kind of nuanced understanding helps create safer online communities by catching harmful content that text-only systems would likely miss.
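Here is a hypothetical sketch of how that pairing might look in practice. The helper below puts an image and its caption in the same turn and asks for a one-line verdict, and a small parser pulls the label out of the reply. The prompt wording, the `VERDICT:` convention, and both function names are illustrative assumptions, not part of Qwen's API. The reply strings in the example are made up.

```python
import re
from typing import Any, Dict, Optional

def build_moderation_turn(image_path: str, caption: str) -> Dict[str, Any]:
    """Pair an image with its caption in one turn so they are judged together."""
    instructions = (
        "Consider the image and its caption together. "
        "Reply with one line: VERDICT: allow, review, or block, then a short reason.\n"
        f"Caption: {caption}"
    )
    return {
        "role": "user",
        "content": [
            {"type": "image", "image": image_path},
            {"type": "text", "text": instructions},
        ],
    }

def parse_verdict(reply: str) -> Optional[str]:
    """Extract the verdict label; return None if the reply ignored the format."""
    match = re.search(r"VERDICT:\s*(allow|review|block)\b", reply, re.IGNORECASE)
    return match.group(1).lower() if match else None

turn = build_moderation_turn("upload_123.jpg", "Totally harmless fireworks!")
print(parse_verdict("VERDICT: Review - the caption contradicts the image"))  # prints: review
```

Returning `None` when the model ignores the format lets you route those cases to a human reviewer instead of guessing.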

By blending sight, sound, and language, Qwen2.5-Omni-7B enables us to build applications that are more intuitive, helpful, and aware of their surroundings than ever before. We're moving beyond simple automation into the realm of genuine assistance.

Of course, using a model like this effectively goes beyond just the tech. Businesses need to think about broader strategies for AI adoption and revenue growth to really get the most out of their investment. Bringing a tool like Qwen2.5-Omni-7B into your workflow isn't just a technical upgrade; it's a strategic move that can unlock entirely new opportunities. The potential is massive, whether it's for building next-gen personal assistants or intelligent in-car systems that understand both verbal commands and changing road conditions.

How to Get Started with Qwen2.5-Omni-7B

Alright, let's move from theory to practice. Getting Qwen2.5-Omni-7B up and running is probably easier than you think. One of the best things about this model is how accessible it is—it was clearly designed to lower the high entry barrier you often see with powerful AI.

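You don't need a massive server cluster to start tinkering. The model is built for efficiency, so it needs far less hardware than much larger models, though you'll still want a GPU with plenty of memory. Memory use grows with input length (long videos are the most demanding), so check the requirements listed on the model card before you pick hardware. This focus on efficiency means more of us can build and test projects right from our own desks.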
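To sanity-check any hardware claim, a back-of-the-envelope estimate helps. The sketch below computes the memory needed just to hold a model's weights at common precisions, using the 7-billion figure from this guide. Treat the results as lower bounds. The released checkpoint also bundles the vision and audio encoders and the Talker, activations for long audio or video inputs add more, and the int8/int4 rows only apply if you use a quantized build.

```python
# Bytes needed to store one parameter at common precisions
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(num_params: float, precision: str) -> float:
    """GB (1 GB = 1e9 bytes) needed to hold the weights alone, with no activations."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(7e9, precision):.1f} GB")
```

These numbers describe the weights only, so compare them against the measured figures on the official model card before you commit to a GPU.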

Accessing the Model

The quickest way to get started is by grabbing the model from popular AI platforms where it's openly shared. Places like Hugging Face have become the go-to hubs for developers, offering everything from the model files to helpful documentation.

- Hugging Face: You'll find the model on the Hugging Face Hub under Qwen/Qwen2.5-Omni-7B. For most people, this is the best place to start, since it makes downloading the model weights and processor files simple.

- ModelScope: ModelScope, Alibaba Cloud's own open-source community, also hosts the model, giving you another direct download option.

- GitHub: If you want to get under the hood and look at the source code, the official GitHub repository has everything you need.

Using these platforms means you can typically get the model into your project with just a few lines of code.

Your First Run: A Simple Example

To show you just how straightforward this is, let's walk through a basic implementation. We'll give the model an image and a text prompt and get a text answer back. It's a classic multimodal task that really shows off what this model can do.

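First, make sure you have the necessary libraries installed: a recent release of transformers that includes Qwen2.5-Omni support, plus torch, accelerate (used by device_map="auto"), and Pillow for loading images. With your environment ready, you can load the model and its processor straight from Hugging Face.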

Heads Up: The first time you run the code, the model files will be downloaded to your machine. It’s a pretty big download, so make sure you have a solid internet connection and enough disk space.

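Here’s a minimal Python sketch to get you going, adapted from the usage pattern on the official model card. It sends an image plus a text query and asks for a text-only answer. Class names have changed between transformers versions, so if an import fails, check the model card for the names your version expects:

```python
# Sketch based on the Qwen2.5-Omni model card; requires a transformers
# release with Qwen2.5-Omni support (older builds used different class names).
import torch
from PIL import Image
from transformers import Qwen2_5OmniForConditionalGeneration, Qwen2_5OmniProcessor

MODEL_ID = "Qwen/Qwen2.5-Omni-7B"
image_path = "your_image.jpg"
prompt = "Describe what is happening in this picture."

# Load the processor (tokenizer plus image/audio preprocessing) and the model
processor = Qwen2_5OmniProcessor.from_pretrained(MODEL_ID)
model = Qwen2_5OmniForConditionalGeneration.from_pretrained(
    MODEL_ID, torch_dtype="auto", device_map="auto"
)

# Format the query as a chat turn: an image placeholder followed by the text
conversation = [{"role": "user", "content": [
    {"type": "image", "image": image_path},
    {"type": "text", "text": prompt},
]}]
text = processor.apply_chat_template(conversation, add_generation_prompt=True, tokenize=False)

# Prepare the inputs: the formatted chat text plus the actual image pixels
image = Image.open(image_path).convert("RGB")
inputs = processor(text=text, images=[image], return_tensors="pt", padding=True)
inputs = inputs.to(model.device).to(model.dtype)

# Generate a text-only response; return_audio=False skips speech synthesis
with torch.no_grad():
    text_ids = model.generate(**inputs, return_audio=False)

# Keep only the newly generated tokens, then decode them
new_tokens = text_ids[:, inputs["input_ids"].shape[1]:]
print(processor.batch_decode(new_tokens, skip_special_tokens=True)[0])
```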

Think of this code snippet as your starting point. Just swap out the image path and change the prompt, and you can immediately start exploring the visual reasoning power of Qwen2.5-Omni-7B.

And if you want to take it a step further, you can look into techniques like parameter-efficient fine-tuning to adapt the model for your specific needs without the headache of a full retrain.
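To see why parameter-efficient fine-tuning is so much lighter, consider LoRA, one widely used method. It freezes a weight matrix W of size d × k and trains only two small matrices, B (d × r) and A (r × k), whose product BA is added to W. The sketch counts trainable parameters for a single projection. The 3584 width is an illustrative assumption (the hidden size of the Qwen2.5-7B language model), and rank 16 is just a common choice.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when fine-tuning a d x k weight matrix directly."""
    return d * k

def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update B @ A, with B: d x r and A: r x k."""
    return d * r + r * k

d = k = 3584  # illustrative width (assumption; see the lead-in)
r = 16        # a common LoRA rank, chosen for illustration
full, lora = full_params(d, k), lora_params(d, k, r)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
# prints: full: 12,845,056  lora: 114,688  ratio: 0.89%
```

Across every adapted layer, that difference is what keeps LoRA-style fine-tuning within reach of a single GPU.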

Frequently Asked Questions

When exploring a new model like Qwen2.5-Omni-7B, a few questions always pop up. Let's get right to the answers to give you a clear picture of what this model is all about.

What's The Big Deal With Qwen2.5-Omni-7B?

Its main advantage is a powerful mix of multimodal skills and efficiency. Most models that handle text, images, and audio are massive and require expensive hardware. Qwen2.5-Omni-7B packs all that into a much smaller 7-billion-parameter model.

This means you get advanced AI capabilities without needing a server farm to run it. It’s designed to run on far more modest hardware, such as a single well-equipped GPU, which makes high-end AI much more accessible.

What Kind of Projects Is This Model Really Good For?

This model shines in any project where you need to make sense of different types of information at once. It’s a perfect fit for building things like:

- Smarter Voice Assistants: Imagine an assistant that can not only listen to you but also "see" and describe what's in a picture you show it.

- Next-Gen Accessibility Tools: It can create audio descriptions of the visual world for those with visual impairments.

- Interactive Learning Apps: Think of software that can look at a diagram and answer a student's spoken questions about it.

- Better Content Moderation: It can analyze the full context of an image or audio clip, not just text keywords, to make more accurate decisions.

What About AI Hallucinations?

Like any LLM, Qwen2.5-Omni-7B can sometimes invent facts or get things wrong—what we call "hallucinations." However, its ability to process images and sound gives it more context to ground its answers, which can help reduce these errors compared to a text-only model.

The key to keeping it on track is solid prompt engineering. If you want to dive deeper into getting reliable outputs, our guide on how to reduce hallucinations in LLMs has some great tips that work well here too.

What Skills Do I Need To Get Started With It?

You don't need to be an AI researcher. If you have a decent grasp of Python and have worked with common machine learning libraries like transformers or torch, you have what it takes.

Because it’s available on platforms like Hugging Face, you can get it up and running with just a few lines of code. It's a great entry point for developers looking to explore the world of multimodal AI.

Ready to organize and supercharge your AI interactions? At Promptaa, we provide a library to help you create, manage, and enhance your prompts for models like Qwen2.5-Omni-7B, ensuring you get the best possible results every time. Start building smarter prompts today at https://promptaa.com.