12 Best Prompt Management Tools for 2025

Getting consistent, high-quality results from AI models like ChatGPT or Claude isn't just about what you ask; it's about how you ask. As AI becomes more integrated into our workflows, from content creation to complex coding, a systematic way to create, store, and refine prompts becomes critical. Relying on memory or scattered notes leads to inefficiency, inconsistent outputs, and a steep learning curve for new team members.

This is where prompt management tools come in. They act as a central nervous system for your AI interactions, transforming chaotic experimentation into a repeatable, scalable process. For developers, this systematic approach is just as crucial as using the best AI tools for writing code to streamline their development cycles. A well-managed prompt library helps every API call deliver more predictable, higher-quality results.

In this comprehensive guide, we'll dive into the 12 best prompt management tools designed to help you organize, test, and deploy your prompts. We'll explore options for every user, from individual creators to enterprise-level teams, showing you how a structured approach can fundamentally change your relationship with AI. Each review includes key features, ideal use cases, pricing, and direct links with screenshots to help you quickly find the perfect platform for your specific needs. This list cuts through the noise to give you a clear, actionable comparison of the top solutions available today, so you can stop guessing and start building with confidence.

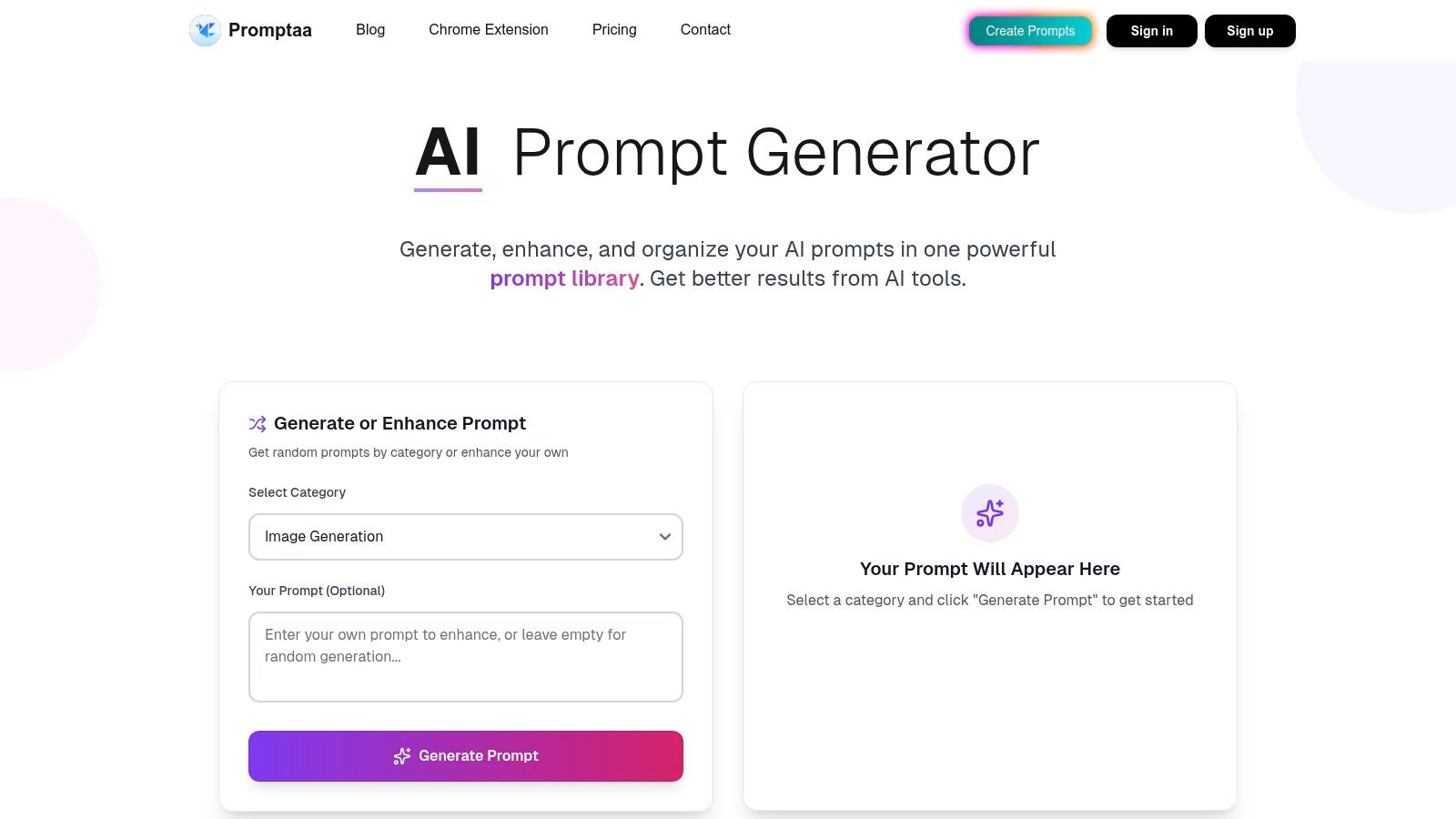

1. Promptaa

Promptaa positions itself as a premier, AI-first solution for anyone looking to master generative AI. It serves as a comprehensive hub for creating, refining, organizing, and sharing prompts. Instead of treating prompts as disposable one-liners, Promptaa helps you build a durable, reusable library of high-quality instructions for any AI model. Its core strength lies in its "magic-wand" enhancement feature, which intelligently transforms a basic idea into a detailed, structured prompt complete with necessary context, constraints, and examples.

This platform excels at bridging the gap between beginner and expert. It provides the scaffolding needed for consistently high-quality outputs, making it one of the most well-rounded prompt management tools available. For those new to the space, Promptaa’s blog is a great resource to understand the fundamentals. To get started, you can learn more about crafting effective prompts on Promptaa.com.

Key Features and Practical Benefits

Promptaa is designed for practical application, focusing on features that deliver tangible improvements in efficiency and output quality.

- AI-Powered Prompt Enhancement: The standout feature is its ability to take a simple concept and instantly flesh it out. For example, you can input "blog post about productivity hacks" and its AI will generate a detailed prompt specifying tone, target audience, key sections, and negative constraints, saving significant manual effort.

- Unlimited, Organized Library: Users can save every prompt in a personal, searchable library. With custom categories, filters, and version history, you can meticulously track iterations and quickly find the exact prompt needed for a task, ensuring consistency across projects. This is invaluable for teams aiming to standardize their AI-generated content or code.

- Broad Use-Case Support: Promptaa isn't limited to just text generation. It supports prompts for a wide array of models, including DALL-E, Midjourney, and Stable Diffusion for images, as well as code, business analysis, and educational content.

- Community and Collaboration: The platform includes community features allowing you to publish your best prompts and discover what works for others. This collaborative ecosystem accelerates learning and provides proven templates you can adapt for your own use cases.
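To make the enhancement idea concrete, here is a minimal sketch of what a structured prompt might look like once tone, audience, sections, and negative constraints are spelled out. The `StructuredPrompt` class and its field names are hypothetical illustrations, not Promptaa's actual format or API.

```python
from dataclasses import dataclass, field


@dataclass
class StructuredPrompt:
    """Hypothetical container for the parts of an 'enhanced' prompt."""

    task: str
    tone: str
    audience: str
    sections: list[str] = field(default_factory=list)
    avoid: list[str] = field(default_factory=list)  # negative constraints

    def render(self) -> str:
        """Flatten the fields into plain text that can be pasted into any model."""
        lines = [
            f"Task: {self.task}",
            f"Tone: {self.tone}",
            f"Audience: {self.audience}",
        ]
        if self.sections:
            lines.append("Sections:")
            lines.extend(f"- {s}" for s in self.sections)
        if self.avoid:
            lines.append("Do not:")
            lines.extend(f"- {a}" for a in self.avoid)
        return "\n".join(lines)


prompt = StructuredPrompt(
    task="Write a blog post about productivity hacks",
    tone="practical and friendly",
    audience="busy remote workers",
    sections=["Introduction", "Five hacks", "Conclusion"],
    avoid=["cliches", "unverified statistics"],
)
print(prompt.render())
```

Keeping the parts as separate fields makes it easier to reuse the same tone or constraints across many tasks.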

Who Should Use It?

Promptaa is ideal for professionals and enthusiasts who interact with AI tools daily. Content creators, developers, marketers, and educators will find its library and enhancement features useful for maintaining quality and saving time. Pricing is not detailed here, so check the site for current plans; even so, its feature set makes it a compelling choice for anyone serious about improving their prompt engineering workflow.

Website: https://promptaa.com

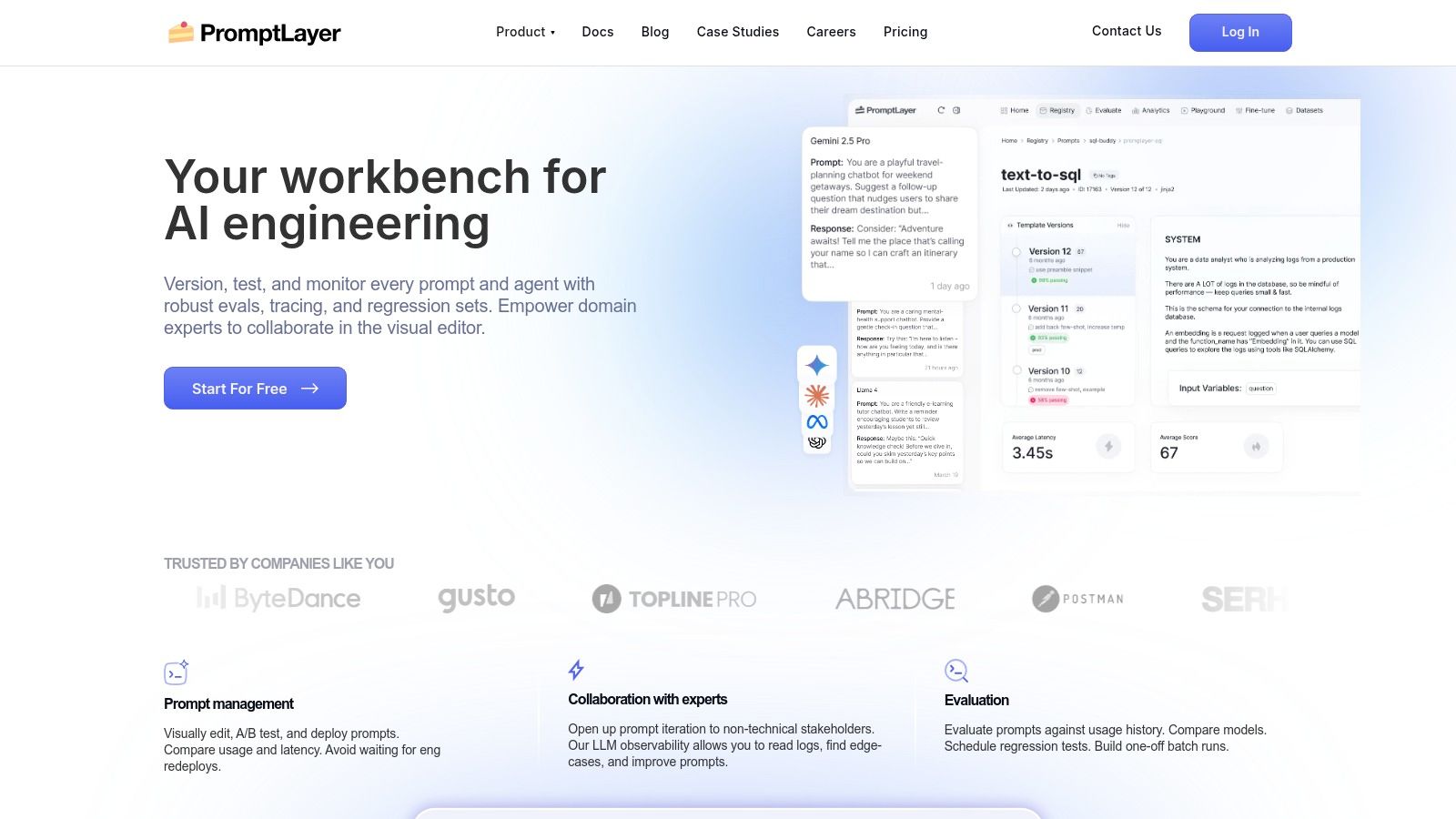

2. PromptLayer

PromptLayer positions itself as a robust platform for engineering teams, functioning less like a personal library and more like a dedicated content management system (CMS) for prompts. It's designed to bring the rigor of software development lifecycles to prompt engineering. This tool excels at getting prompts out of your application's code and into a centralized, governed registry where they can be versioned, tested, and safely deployed.

The platform is built for collaboration, enabling non-technical stakeholders like product managers or copywriters to iterate on prompt templates without touching the codebase. Its visual editor, complete with version control and rollback capabilities, makes this possible. For developers, the real power lies in the built-in observability and evaluation tools.

Key Features and Use Cases

- Version Control & Registry: Think of it like Git for your prompts. You can track every change, compare versions, and roll back to a previous state if a new prompt underperforms.

- A/B Testing & Evaluations: PromptLayer allows you to run head-to-head tests between prompt versions and set up scheduled regression tests to ensure performance doesn't degrade over time.

- Observability: The platform provides detailed logs, cost tracking, and latency metrics for every prompt execution, helping you monitor and optimize AI-powered features in production.

- Team Collaboration: With access controls and a shared registry, it’s ideal for teams where multiple people contribute to prompt creation and maintenance.
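The "Git for your prompts" idea can be illustrated with a small in-memory registry. This is a sketch under stated assumptions: `PromptRegistry` and its methods are hypothetical names invented for illustration and do not reflect PromptLayer's SDK.

```python
class PromptRegistry:
    """In-memory sketch; a real registry would persist versions and record authors."""

    def __init__(self) -> None:
        self._versions: dict[str, list[str]] = {}  # name -> templates, oldest first
        self._active: dict[str, int] = {}          # name -> active 1-based version

    def publish(self, name: str, template: str) -> int:
        """Store a new version, make it active, and return its version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        self._active[name] = len(versions)
        return len(versions)

    def rollback(self, name: str, version: int) -> None:
        """Point the active marker back at an earlier version."""
        if not 1 <= version <= len(self._versions.get(name, [])):
            raise ValueError(f"unknown version {version} for {name!r}")
        self._active[name] = version

    def get(self, name: str) -> str:
        """Return the template of the currently active version."""
        return self._versions[name][self._active[name] - 1]


registry = PromptRegistry()
registry.publish("summarize", "Summarize this text: {text}")
registry.publish("summarize", "Summarize this text in one sentence: {text}")

# Suppose version 2 underperforms: roll back without touching application code.
registry.rollback("summarize", 1)
print(registry.get("summarize"))
```

Because the application only ever calls `get`, changing or reverting a prompt does not require a code deployment.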

Who Is It For?

PromptLayer is one of the best prompt management tools for software development teams building and maintaining AI applications at scale. Its value shines in production environments where prompt performance, stability, and governance are critical. While individuals could use it, the feature set is truly optimized for a team workflow.

Pros:

- Bridges the gap between technical and non-technical teams.

- SOC 2 Type II / HIPAA options for regulated industries.

- Offers both cloud and self-hosted enterprise plans.

Cons:

- Usage-based pricing can become costly for high-volume applications.

- May be overly complex for solo developers or hobbyists.

Website: https://www.promptlayer.com/

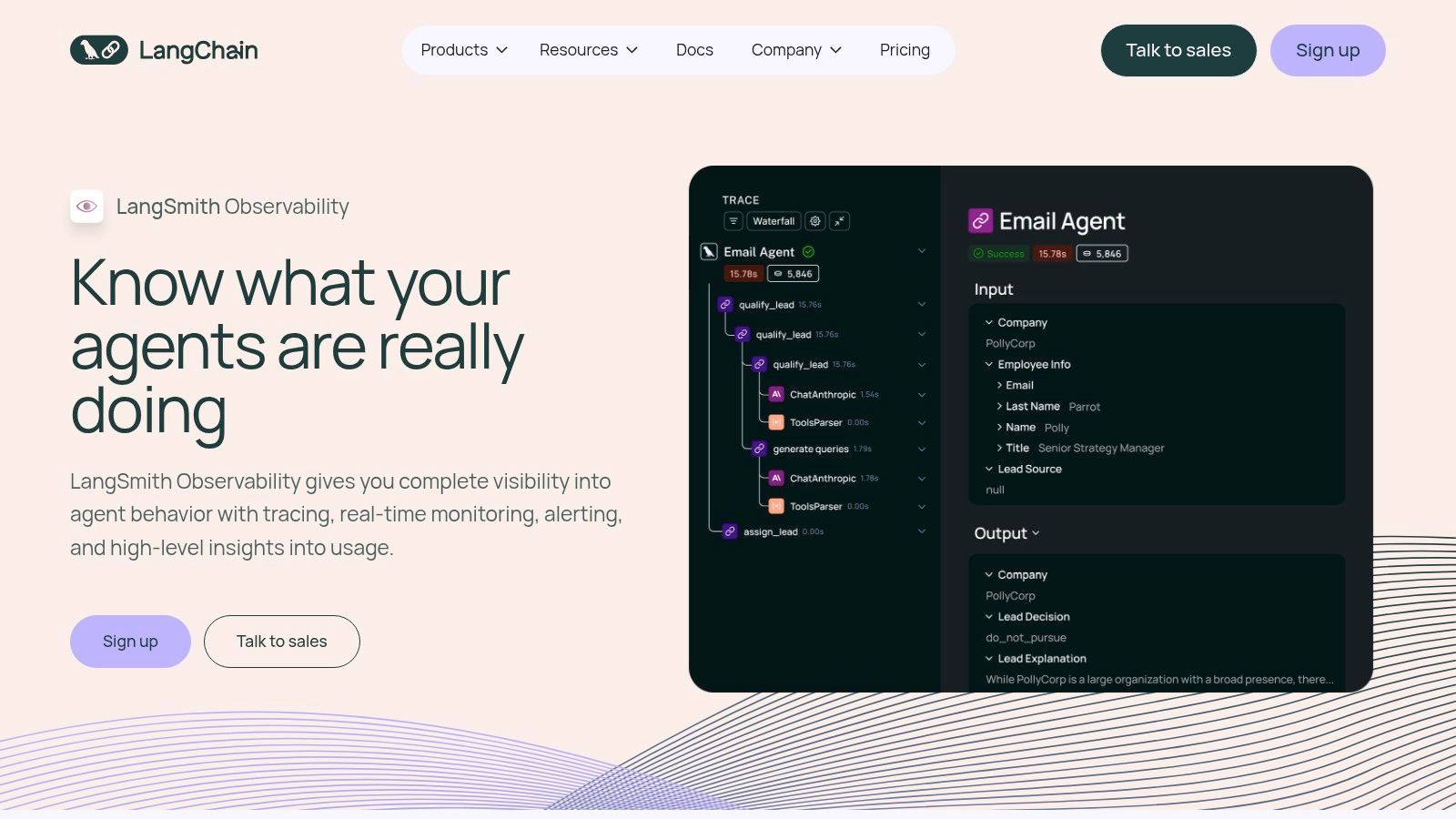

3. LangSmith (by LangChain)

LangSmith is the production-focused platform from the creators of the popular LangChain framework. It’s designed to be a comprehensive suite for debugging, testing, evaluating, and monitoring large language model applications. While it integrates seamlessly with the LangChain ecosystem, it also functions as a standalone tool, offering robust observability for any LLM-powered system. The core idea is to bring development best practices to the often chaotic world of LLM app creation.

The platform excels at providing deep insights into every step of your application’s execution chain. From prompt inputs to model outputs and tool usage, LangSmith traces everything, making it far easier to pinpoint errors, identify performance bottlenecks, and understand why your application behaved a certain way. This granular level of detail is crucial for moving from a prototype to a reliable production-grade service.

Key Features and Use Cases

- Tracing & Observability: Get a complete, step-by-step view of your application's runs. This is invaluable for debugging complex chains and agents to see exactly where things went wrong.

- Dataset-Driven Evaluations: Create datasets of inputs and expected outputs to systematically test your prompts. You can compare different prompt versions or even different models against the same dataset to make data-driven decisions.

- Prompt Hub & Playground: A central place to manage, version, and collaborate on prompts. It allows for quick iteration and testing before deploying changes to your application.

- Monitoring & Analytics: Track key metrics like latency, cost, and token usage over time. Set up alerts to get notified of regressions or unexpected spikes in activity.
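Dataset-driven evaluation boils down to running each prompt version over the same inputs and scoring the outputs. The sketch below shows that loop with a stand-in model; `fake_model`, `DATASET`, and the prompt templates are made up for illustration and are not part of LangSmith.

```python
from typing import Callable

# Hypothetical dataset of (input, expected output) pairs.
DATASET = [("2 + 2", "4"), ("3 * 3", "9"), ("10 - 7", "3")]
ANSWERS = dict(DATASET)


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM: answers verbosely unless told to reply with the number only."""
    question = prompt.rsplit("\n", 1)[-1]
    answer = ANSWERS[question]
    if "number only" in prompt:
        return answer
    return f"The answer is {answer}."


def exact_match_rate(
    template: str,
    model: Callable[[str], str],
    dataset: list[tuple[str, str]],
) -> float:
    """Fraction of dataset rows where the model output equals the expected output."""
    hits = sum(
        model(template.format(question=question)) == expected
        for question, expected in dataset
    )
    return hits / len(dataset)


PROMPT_V1 = "Solve the problem.\n{question}"
PROMPT_V2 = "Solve the problem. Reply with the number only.\n{question}"

scores = {
    "v1": exact_match_rate(PROMPT_V1, fake_model, DATASET),
    "v2": exact_match_rate(PROMPT_V2, fake_model, DATASET),
}
print(scores)
```

Swapping `fake_model` for a real client and exact match for a softer scorer keeps the same structure.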

Who Is It For?

LangSmith is one of the best prompt management tools for developers and engineering teams building complex LLM applications, especially those already using or considering the LangChain framework. Its strength lies in providing the deep-level debugging and evaluation tools needed to build, ship, and maintain reliable AI products. Applying prompt best practices is easier when you can see the direct impact of your changes.

Pros:

- Works with or without the LangChain framework.

- Strong ecosystem and excellent documentation.

- Offers enterprise-grade options like EU/US data locality and self-hosting.

Cons:

- Its detailed, usage-based pricing can be complex to predict and monitor.

- Some advanced enterprise features may require direct sales engagement.

Website: https://www.langchain.com/langsmith

4. Humanloop

Humanloop is an enterprise-grade platform focused on connecting prompt engineering with rigorous evaluation and human feedback. It’s designed for organizations that need to maintain high standards of quality and governance over their AI applications. The platform excels at creating a structured workflow where prompts are not just stored but are continuously tested, evaluated, and improved with direct input from both automated systems and human reviewers.

This tool centralizes the entire lifecycle of a prompt, from initial creation and versioning to deployment and real-time monitoring. It empowers cross-functional teams, including product managers and subject matter experts, to collaborate on prompt development within a controlled environment. Its emphasis on human-in-the-loop processes and detailed tracing makes it a powerful choice for applications where accuracy and compliance are non-negotiable.

Key Features and Use Cases

- Prompt Versioning & Deployments: Manage prompt templates with version control and use tagged deployments to control which version is live, complete with role-based access.

- Robust Evaluation Framework: Implement both offline (using historical data) and online (live traffic) evaluators, alongside dedicated workflows for human review and feedback.

- Tracing & Observability: Capture detailed logs of model inputs and outputs, set up alerts for performance degradation, and integrate end-user feedback directly into the platform.

- CI/CD Integration: Seamlessly integrate prompt updates into your existing software development lifecycle, treating prompt management with the same rigor as code.

Who Is It For?

Humanloop is one of the best prompt management tools for large, cross-functional teams in regulated or quality-sensitive industries. Its structured evaluation and feedback loops are ideal for companies that need to prove model effectiveness, ensure compliance, and involve non-technical stakeholders in the AI development process.

Pros:

- Built for collaboration between engineers, PMs, and subject matter experts.

- Strong compliance posture with SOC 2 Type II and HIPAA options (BAA).

- Offers a free trial to explore core evaluation and management features.

Cons:

- Enterprise pricing requires contacting sales, which can slow down adoption.

- Maximum value is realized when evaluation workflows are deeply integrated into the development process.

Website: https://humanloop.com/

5. Langfuse

Langfuse offers an open-source approach to LLM engineering, blending prompt management with deep observability. It's built for technical teams who want full control over their stack, providing a self-hostable core alongside a managed cloud version. The platform centers around the concept of "traces," which give you a granular view of your application's execution flow, from user input to the final LLM output.

This focus on observability makes Langfuse particularly powerful for debugging and optimizing complex AI workflows. Instead of just storing prompts, it links them directly to performance data like latency, token usage, and cost. This allows teams to understand not just what a prompt says, but how it actually performs in a live environment, making it a comprehensive tool for both development and production monitoring.

Key Features and Use Cases

- Open-Source & Self-Hostable: The core platform is MIT licensed, giving teams the ultimate flexibility to deploy it within their own infrastructure for maximum data privacy and control.

- Tracing and Observability: Track and visualize every step of your LLM-powered features. This is invaluable for debugging complex chains or agent-based systems.

- Prompt Management: Create, version, and manage prompts as first-class objects. You can fetch the active production version of a prompt directly from your application code.

- Evaluations & Datasets: Build datasets from production traces and run evaluations to score prompt performance, helping you rigorously test changes before deployment.
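Linking a prompt version to its runtime metrics is the core of the trace concept. The following is a simplified sketch of that idea, assuming a hypothetical `Trace` record and `traced_call` helper; it does not use the Langfuse SDK, and the token counts are a crude whitespace split rather than a real tokenizer.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Trace:
    """Hypothetical trace record tying one call to the prompt version that produced it."""

    prompt_name: str
    prompt_version: int
    latency_s: float
    input_tokens: int
    output_tokens: int


TRACES: list[Trace] = []


def traced_call(
    prompt_name: str,
    prompt_version: int,
    prompt_text: str,
    model: Callable[[str], str],
) -> str:
    """Call the model and append a trace with timing and rough token counts."""
    start = time.perf_counter()
    output = model(prompt_text)
    latency = time.perf_counter() - start
    TRACES.append(
        Trace(
            prompt_name=prompt_name,
            prompt_version=prompt_version,
            latency_s=latency,
            input_tokens=len(prompt_text.split()),  # whitespace count, not real tokens
            output_tokens=len(output.split()),
        )
    )
    return output


def echo_model(prompt: str) -> str:
    """Stand-in for an LLM call."""
    return prompt.upper()


traced_call("greeting", 2, "Say hello to Ada", echo_model)
print(TRACES[0])
```

With records like these, comparing latency or token usage across prompt versions becomes a simple group-by.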

Who Is It For?

Langfuse is one of the best prompt management tools for engineering teams that prioritize open-source flexibility and deep operational insight. It's a great fit for organizations that want to avoid vendor lock-in and require the ability to self-host their tooling. The detailed tracing is especially useful for developers working on sophisticated, multi-step AI applications where understanding the entire execution path is critical.

Pros:

- True open-source core allows for free self-hosting.

- Excellent observability features for debugging and performance monitoring.

- Clear, graduated cloud pricing with discounts for startups.

Cons:

- Self-hosting requires setup and ongoing operational effort.

- Advanced features like SSO and RBAC are gated to paid tiers.

Website: https://langfuse.com/

6. Helicone

Helicone operates as an open-source observability platform and LLM gateway, providing a unified layer for managing API calls, monitoring performance, and organizing prompts. It's designed to give developers deep visibility into their AI application's usage, costs, and latency, while also serving as a central hub for prompt templates. The platform acts as a proxy, simplifying integration to a one-line code change and enabling powerful features like intelligent caching and request routing.

This approach allows teams to access over 100 different models through a single API endpoint, track every request, and manage prompts without cluttering their application code. For businesses, Helicone's dashboard provides critical insights into spending and user behavior, making it a comprehensive tool that combines observability with foundational prompt management.

Key Features and Use Cases

- LLM Gateway & Observability: Unify access to 100+ models with a single API. Monitor costs, latency, and user activity through detailed dashboards and set up alerts for anomalies.

- Intelligent Caching & Routing: Reduce costs and latency with smart caching. Set up automatic fallbacks to different models if a primary provider fails, enhancing application reliability.

- Prompt Template Management: Create, version, and manage your prompt templates directly within the Helicone dashboard, keeping them separate from your core application logic.

- User-Centric Analytics: Track API usage on a per-user basis, which is invaluable for setting rate limits, understanding user behavior, and implementing fair billing models.
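Caching and fallback routing, as described above, can be sketched in a few lines. The `Gateway` class and the two provider functions are hypothetical and exist only to show the control flow; they are not Helicone's API.

```python
from typing import Callable, Optional

Provider = Callable[[str], str]


class Gateway:
    """Tries providers in order and caches successful responses by exact prompt text."""

    def __init__(self, providers: list[Provider]) -> None:
        self.providers = providers
        self.cache: dict[str, str] = {}

    def complete(self, prompt: str) -> str:
        if prompt in self.cache:
            return self.cache[prompt]  # cache hit: no provider is called
        last_error: Optional[Exception] = None
        for provider in self.providers:
            try:
                result = provider(prompt)
            except Exception as exc:  # fall through to the next provider
                last_error = exc
                continue
            self.cache[prompt] = result
            return result
        raise RuntimeError("all providers failed") from last_error


calls = {"primary": 0, "backup": 0}


def primary(prompt: str) -> str:
    """Simulated provider that is always unavailable."""
    calls["primary"] += 1
    raise TimeoutError("primary provider unavailable")


def backup(prompt: str) -> str:
    """Simulated fallback provider."""
    calls["backup"] += 1
    return f"backup: {prompt}"


gateway = Gateway([primary, backup])
first = gateway.complete("ping")   # primary fails, backup answers
second = gateway.complete("ping")  # served from the cache
print(first, second, calls)
```

An exact-match cache like this only helps with repeated identical prompts; production gateways typically add expiry and per-user scoping.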

Who Is It For?

Helicone is one of the best prompt management tools for developers and engineering teams who need a robust observability layer for their LLM-powered applications. It's particularly useful for startups and businesses that require detailed cost tracking, performance monitoring, and the flexibility to switch between different AI models without extensive refactoring.

Pros:

- Quick, one-line integration simplifies setup.

- Offers a 0% markup on provider pricing via its managed credits option.

- Provides options for U.S. or EU data residency.

Cons:

- Full prompt management and compliance features are locked behind higher-tier plans.

- Adds an additional proxy layer, which may be a concern for some security policies.

Website: https://www.helicone.ai/

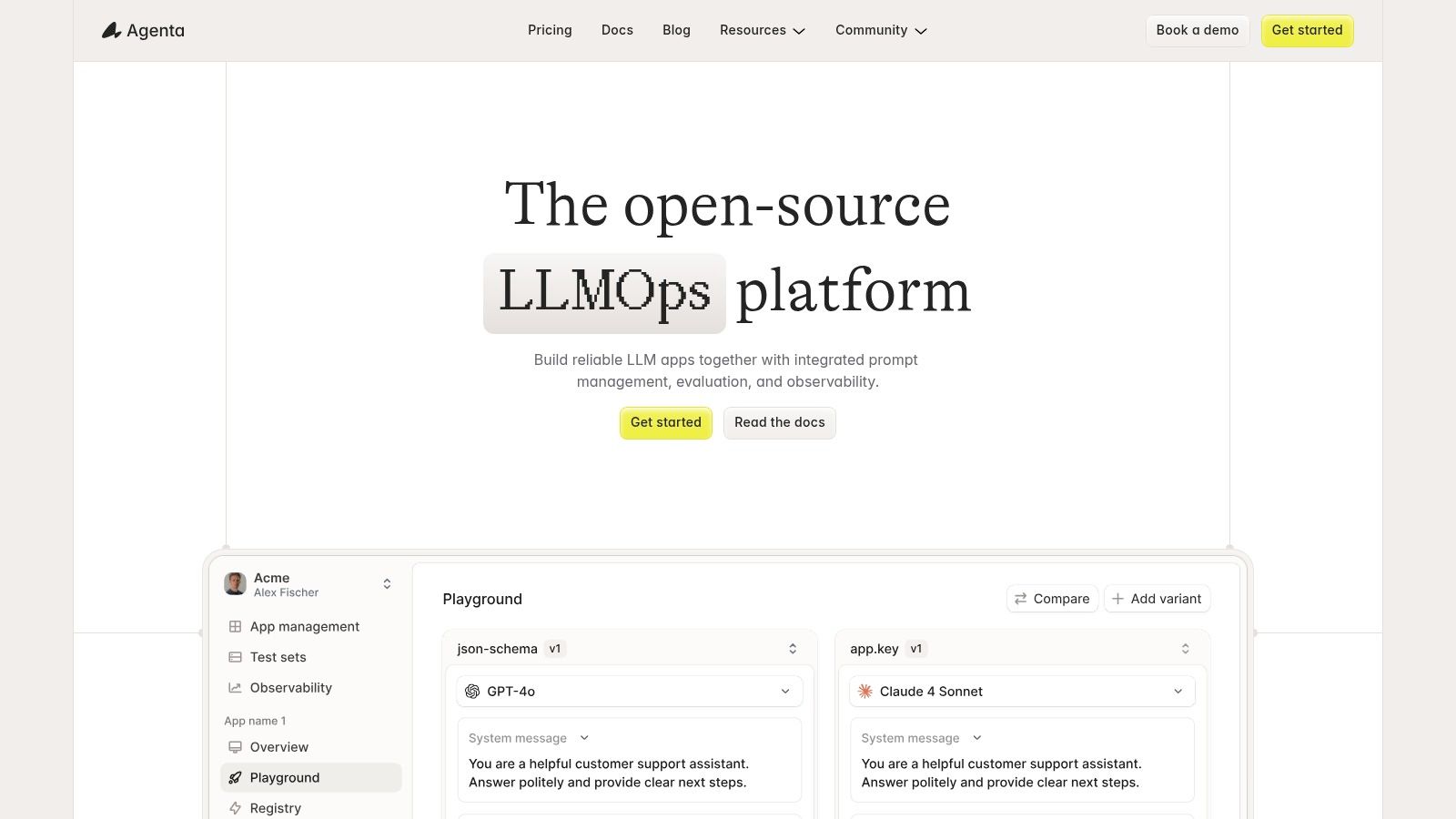

7. Agenta

Agenta is a comprehensive, open-source LLMOps platform designed to streamline the entire lifecycle of developing LLM-powered applications. It moves beyond simple prompt storage, offering an integrated environment for prompt engineering, evaluation, and operational monitoring. The platform is built for teams that require a systematic approach, combining a powerful prompt playground with robust testing and observability features.

Its open-source nature and self-hosting capabilities make it a flexible choice for organizations with specific data privacy or infrastructure requirements. Agenta aims to provide a complete toolkit, from initial experimentation in the playground to deploying and monitoring prompts in a continuous integration (CI) pipeline, positioning it as a serious contender among developer-focused prompt management tools.

Key Features and Use Cases

- Integrated Playground: Experiment with different models, compare prompt versions side-by-side, and manage your prompt library with version history and rollback capabilities.

- Automated & Human Evaluations: Create test sets to systematically evaluate prompt performance against defined criteria, incorporating both automated metrics and human feedback loops.

- Tracing & CI Integrations: Gain deep visibility into application behavior with detailed tracing and integrate your evaluation workflows directly into your CI/CD pipeline for continuous quality assurance.

- Flexible Deployment: Choose between a fully managed cloud offering or a self-hosted deployment for maximum control over your data and infrastructure.

Who Is It For?

Agenta is ideal for engineering teams and AI developers who need an end-to-end, open-source platform to build, evaluate, and deploy LLM applications reliably. Its emphasis on automated testing and CI integration makes it particularly suitable for teams practicing DevOps principles. The generous free tier and self-hosting options also appeal to startups and individual developers looking for powerful tools without a significant initial investment.

Pros:

- Free self-hosted option and a generous Business tier on the cloud.

- Founder-active community with responsive support via Slack.

- Clear, per-trace overage pricing for predictable cost scaling.

Cons:

- Cloud Pro seats and high-volume trace usage can become costly.

- Some enterprise-grade features like SSO/MFA are still on the roadmap.

Website: https://agenta.ai/

8. PromptBase

PromptBase takes a different approach, positioning itself not as a technical management tool but as a vibrant marketplace for prompts. It's a platform where users can buy and sell high-quality, pre-engineered prompts for a wide range of AI models, including ChatGPT, DALL-E, Midjourney, and Stable Diffusion. This is less about managing your own internal library and more about acquiring ready-made assets to jumpstart a project or solve a specific creative or business challenge.

For individuals or teams who lack the time or expertise to craft complex prompts from scratch, PromptBase offers a massive, searchable catalog of proven solutions. You can find everything from prompts for generating detailed business plans to creating specific artistic styles. The marketplace model also allows expert prompt engineers to monetize their skills, creating a dynamic ecosystem of supply and demand.

Key Features and Use Cases

- Prompt Marketplace: Browse, search, and purchase individual prompts with detailed descriptions, examples, and seller ratings.

- Wide Model Support: Find prompts specifically engineered for popular models like GPT-4, Midjourney V6, and others, ensuring compatibility and optimal results.

- Custom Prompt Commissions: If you can't find what you need, you can hire top prompt engineers directly through the platform to create a custom prompt for your specific use case.

- Creator Storefronts: Sellers can build a reputation and a following by creating their own storefront, showcasing their portfolio of high-quality prompts.

Who Is It For?

PromptBase is ideal for freelancers, marketers, artists, and small teams looking for quick, effective prompt solutions without the overhead of building them internally. It's a valuable resource for anyone who wants to leverage expert-level prompting for specific tasks. It is not, however, one of the prompt management tools designed for internal governance, versioning, or team collaboration on a proprietary prompt library.

Pros:

- Pay-per-prompt model means no subscription commitment.

- Large, active catalog with thousands of options for creative and business needs.

- Enables rapid access to high-quality prompts without extensive engineering effort.

Cons:

- Prompt quality can vary significantly between sellers, requiring careful vetting.

- Lacks features for internal team management, version control, or performance tracking.

Website: https://promptbase.com/

9. Google Cloud Vertex AI – Prompt Management

For organizations deeply integrated into the Google Cloud Platform (GCP) ecosystem, Vertex AI’s built-in prompt management capabilities offer a native, highly secure solution. Rather than a standalone tool, it's a feature set within the broader Vertex AI Studio and SDK, designed to bring enterprise-level governance to prompt engineering. This approach ensures that prompts are treated like any other critical software asset within your cloud environment.

The primary benefit is its seamless integration with existing GCP security and operational controls. Teams can create, save, version, and retrieve prompts programmatically, all while adhering to established governance protocols like CMEK (Customer-Managed Encryption Keys) and VPC Service Controls. This makes it an ideal choice for businesses where data security and compliance are non-negotiable, and the AI workflow needs to align with existing cloud infrastructure.

Key Features and Use Cases

- Native SDK Integration: Provides SDK prompt classes and versioned templates, allowing developers to manage prompts directly within their Python or JavaScript applications.

- Enterprise Security: Leverages the full suite of GCP governance and security tools, ensuring prompts and their data are protected by your organization's existing policies.

- Gemini Model Optimization: Designed to work with Google's Gemini family of models and other services available through Vertex AI.

- Centralized Management: Acts as a single source of truth for prompts used across various applications running on Google Cloud, simplifying maintenance and updates.

Who Is It For?

Google Cloud Vertex AI's prompt management is built for enterprise development and data science teams that have standardized on GCP. It’s particularly valuable for organizations in regulated industries that need to maintain strict security, compliance, and deployment hygiene for their AI applications. It is less a tool for casual users and more a strategic component for large-scale, cloud-native AI development.

Pros:

- First-party integration with the entire GCP stack.

- Enforces consistent enterprise security and deployment controls.

- Excellent documentation and robust SDK support.

Cons:

- Creates significant vendor lock-in with Google Cloud.

- Pricing is separate for models, grounding, and tokens, which can complicate cost management.

Website: https://cloud.google.com/vertex-ai/

10. Claude (Anthropic) – Projects / Workbench

For teams already invested in the Anthropic ecosystem, Claude's native web and desktop suite offers built-in tools for prompt organization. The "Projects" feature functions as a lightweight workspace, allowing users to group related prompts, files, and chat sessions together. This creates a convenient, self-contained environment for iterating on prompts specifically with Claude's long-context models.

While it's not a standalone, dedicated prompt management system, this integrated approach provides a seamless on-ramp for individuals and teams. The "Workbench" style interface is ideal for experimentation and exploration, letting users test different prompt variations and see immediate results. For organizations, the Team and Enterprise plans add essential admin controls like SSO and user management, making it a viable option for collaborative prompt refinement within a secure, controlled setting.

Key Features and Use Cases

- Project-Based Organization: Users can create distinct "Projects" to keep different workflows, prompts, and associated documents neatly separated and easily accessible.

- Direct Model Access: The environment provides immediate access to the latest Claude models, including their extensive context windows, which is ideal for tasks involving large documents.

- Team Collaboration: With Team and Enterprise plans, you can invite collaborators into projects, making it easy to share and refine prompts collectively.

- Add-on Capabilities: Integrated tools like web search and code execution can be enabled to expand the types of prompts you can effectively build and test.

Who Is It For?

Claude's built-in tools are best for individuals and teams who primarily use Anthropic's models and need a simple, integrated way to organize their work. It’s an excellent starting point for those who don't yet require the complex versioning and observability features of more specialized prompt management tools. Keeping prompts organized this way also helps keep them accurate, an important factor in AI performance. Read more about how to reduce hallucinations in your LLM.

Pros:

- Extremely simple on-ramp for existing Claude users.

- Scales from a free individual tier up to enterprise-level plans.

- Directly integrated with Claude's unique model capabilities.

Cons:

- Lacks the advanced registry, versioning, and A/B testing features of dedicated tools.

- Primarily focused on the Anthropic ecosystem, offering no multi-provider support.

Website: https://claude.com/

11. Weights & Biases (W&B) – Weave / Prompt assets

Weights & Biases (W&B) extends its comprehensive MLOps platform to address prompt engineering challenges through its Weave and Prompts features. Rather than a standalone tool, W&B integrates prompt management directly into the machine learning lifecycle. It treats prompts as versioned assets, similar to datasets or models, allowing teams to track, compare, and manage them with the same rigor.

This approach is powerful for organizations already using W&B for model training and monitoring. Prompts can be linked directly to experiments, evaluations, and production traces, creating a complete lineage from template to performance outcome. It’s a holistic solution that embeds prompt management deep within the existing MLOps toolchain, making it one of the most integrated prompt management tools for established ML teams.

Key Features and Use Cases

- Prompt Assets & Versioning: Store prompts as versioned objects within W&B. You can view diffs between versions and manage them as part of your CI/CD pipeline.

- Tracing & Evaluation: The Weave toolkit provides detailed tracing for LLM calls, capturing inputs, outputs, costs, and latency. You can build custom evaluation pipelines (scorers) to test prompt performance systematically.

- Team Collaboration: Prompts and their associated evaluations are centralized in a shared workspace, providing a single source of truth for the entire team.

- Lineage & Auditing: Every prompt and its performance are tracked, creating an auditable trail that links a specific prompt version to its results in production.
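Viewing diffs between prompt versions works the same way as diffing source files. As a rough illustration that uses only Python's standard `difflib` module (not the W&B SDK), with two made-up prompt versions:

```python
import difflib

# Two hypothetical versions of the same prompt.
v1 = "You are a helpful assistant.\nAnswer briefly.\n"
v2 = (
    "You are a helpful assistant.\n"
    "Answer in at most two sentences.\n"
    "Cite your sources.\n"
)

diff = list(
    difflib.unified_diff(
        v1.splitlines(keepends=True),
        v2.splitlines(keepends=True),
        fromfile="support_prompt@v1",
        tofile="support_prompt@v2",
    )
)
print("".join(diff))
```

Lines prefixed with `-` were removed and lines prefixed with `+` were added, which makes it easy to review a prompt change before linking it to evaluation results.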

Who Is It For?

Weights & Biases is ideal for established data science and machine learning teams who are already leveraging the W&B ecosystem for their model development operations. It’s perfect for organizations that need to maintain strict governance, lineage, and audit trails for their AI applications.

Pros:

- Mature, enterprise-ready platform with robust support.

- Seamlessly integrates prompt management into the broader MLOps workflow.

- Offers private and enterprise hosting options for enhanced security.

Cons:

- Per-user pricing can become expensive for larger teams.

- The platform’s breadth can be overwhelming for teams needing only a simple prompt library.

- LLM inference costs are managed and billed separately through the respective providers.

Website: https://wandb.ai/

12. Promptfoo

Promptfoo takes a developer-centric, code-first approach to prompt quality assurance. It is an open-source framework designed for automated prompt testing and evaluation, positioning itself less as a prompt library and more as a critical CI/CD gating tool. Its core function is to systematically test prompt variations against predefined test cases before they ever reach production.

The tool operates using simple YAML configuration files where you define your prompts, models, and evaluation criteria. This allows developers to run regression tests, compare outputs from different providers like OpenAI and Anthropic side-by-side, and score results based on custom logic. It’s built to integrate directly into existing development pipelines, ensuring that prompt changes are as rigorously tested as any other code change.

Key Features and Use Cases

- Automated Evaluation: Define test suites in YAML to run A/B tests and regression checks on prompts, scoring outputs for quality, correctness, or adherence to format.

- Multi-Provider Comparison: Simultaneously test the same prompt across different models and providers to find the best-performing and most cost-effective option for your use case.

- CI/CD Integration: Designed to be a step in your deployment pipeline, it can automatically block a release if a prompt change leads to a degradation in quality.

- Open-Source & Hosted Options: Use the powerful open-source core for free or opt for hosted plans that add dashboards, team collaboration features, and enterprise support.
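The CI gating idea can be sketched independently of any tool: run the test cases, compute a pass rate, and return a non-zero exit code when it falls below a threshold. Promptfoo itself is configured through YAML; the Python below is only a hypothetical illustration of the gating logic, with a stand-in model and invented test cases.

```python
from typing import Callable

# Hypothetical test cases: template variables plus a substring the output must contain.
TEST_CASES = [
    {"vars": {"city": "Paris"}, "must_contain": "Paris"},
    {"vars": {"city": "Tokyo"}, "must_contain": "Tokyo"},
]


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM: echoes the prompt after a fixed preamble."""
    return f"Here is a short guide. {prompt}"


def pass_rate(template: str, model: Callable[[str], str], cases: list[dict]) -> float:
    """Fraction of cases whose output contains the required substring."""
    passed = sum(
        case["must_contain"] in model(template.format(**case["vars"]))
        for case in cases
    )
    return passed / len(cases)


def gate(template: str, threshold: float = 1.0) -> int:
    """Return 0 if the prompt meets the threshold, 1 otherwise (a process exit code)."""
    rate = pass_rate(template, fake_model, TEST_CASES)
    print(f"pass rate: {rate:.0%} (threshold {threshold:.0%})")
    return 0 if rate >= threshold else 1


# In a CI script this value would be passed to sys.exit() to block the release.
exit_code = gate("Write a travel tip for {city}.")
```

A pipeline step that exits non-zero is enough for most CI systems to stop a deployment, so the gate needs no special integration.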

Who Is It For?

Promptfoo is one of the best prompt management tools for engineering teams that want to enforce quality and prevent regressions in their AI applications. It's ideal for those who prefer a "prompts-as-code" workflow and need a reliable way to validate changes systematically. While it’s not a full prompt CMS, it serves as an essential companion to one.

Pros:

- Free and powerful open-source core with an active community.

- Excellent for gating prompt changes within a CI/CD pipeline to enforce quality.

- Security-focused test libraries and enterprise support options are available.

Cons:

- Not a full prompt management system; focuses purely on testing and evaluation.

- The hosted version's pricing varies by volume, requiring contact for enterprise details.

Website: https://www.promptfoo.dev/

Top 12 Prompt Management Tools Comparison

| Tool | Core features ✨ | Quality/UX ★ | Value/Price 💰 | Target audience 👥 |
|---|---|---|---|---|
| 🏆 Promptaa | ✨ Instant prompt generator, AI "enhance", unlimited searchable library, Chrome ext. | ★★★★☆ (beginner → pro friendly) | 💰 Pricing on site / community-driven | 👥 Creators, devs, teams, educators |
| PromptLayer | ✨ Visual editor, versioning, A/B tests, registry & metrics | ★★★★☆ (team-focused workflow) | 💰 Usage-based; can scale with overages | 👥 Teams needing governed prompt CMS |
| LangSmith (LangChain) | ✨ Tracing, dataset evals, prompt/run comparisons, managed deploys | ★★★★☆ (developer-centric) | 💰 Node/trace/uplift billing (granular) | 👥 LLM app builders & enterprises |
| Humanloop | ✨ Versioning, feedback workflows, tracing, CI/CD | ★★★★☆ (evaluation & governance strong) | 💰 Enterprise via sales; free trial available | 👥 Cross‑functional teams & regulated orgs |
| Langfuse | ✨ Open-source core, prompt objects, traces, cost/token tracking | ★★★★☆ (OSS flexibility) | 💰 Free self-host / paid cloud tiers | 👥 Teams wanting OSS observability |
| Helicone | ✨ Unified API (100+ models), caching, routing, prompt history | ★★★★☆ (easy integration) | 💰 0% markup option / Team plans for full features | 👥 Teams needing unified model gateway |
| Agenta | ✨ Prompt playground, evaluations, tracing, CI integrations | ★★★★☆ (active community, founder-led) | 💰 Free self-host / Business & cloud tiers | 👥 Teams wanting LLMOps + self-host |
| PromptBase | ✨ Marketplace with 230k+ prompts, creator storefronts | ★★★☆☆ (catalog varies by seller) | 💰 Pay-per-prompt (no sub) | 👥 Individuals & teams buying templates |
| Google Cloud Vertex AI – Prompt Mgmt | ✨ SDK templates, GCP security (CMEK, VPC), Gemini support | ★★★★☆ (enterprise-grade) | 💰 Billed via GCP (model & infra costs separate) | 👥 GCP-centric enterprises |
| Claude (Anthropic) – Projects / Workbench | ✨ Project spaces, long-context models, add‑ons (search, code) | ★★★★☆ (smooth experimentation) | 💰 Included in Claude plans (API pricing applies) | 👥 Teams using Anthropic ecosystem |
| Weights & Biases (W&B) | ✨ Prompt assets, diffs, tracing, lineage & CI/CD | ★★★★☆ (mature MLOps UX) | 💰 Per-user Pro pricing; infra billed separately | 👥 ML teams needing auditability |
| Promptfoo | ✨ YAML-based automated testing, regression & A/B in CI | ★★★★☆ (CI/CD gating specialist) | 💰 Free OSS core; hosted/team pricing by volume | 👥 Devs enforcing prompt quality in pipelines |

Integrating a Prompt Tool Into Your Workflow: The Path Forward

Navigating the landscape of prompt management tools can feel like a significant undertaking, but the journey from scattered text files to a structured, powerful system is a transformative one. We've explored a dozen distinct platforms, from the intuitive, creator-focused library of Promptaa to the developer-centric, observability-driven powerhouses like LangSmith and Langfuse. The core lesson is clear: the era of ad-hoc, disposable prompting is over.

To truly harness the potential of generative AI, a systematic approach is no longer a luxury but a necessity. The right tool acts as a force multiplier, turning your best ideas into reusable, version-controlled assets. This structured practice prevents redundant work, ensures consistency across teams, and provides a solid foundation for evaluating and improving AI outputs over time.

From Selection to Integration: Your Action Plan

Choosing the right tool is the first crucial step, but its true value is only unlocked through deliberate integration into your daily habits and workflows. The key is to start small and build momentum. Don't try to migrate hundreds of prompts overnight.

Here are a few actionable steps to get started:

- For Individuals and Small Teams: Select a tool like Promptaa or PromptBase and begin by migrating your top 10-20 most frequently used or highest-performing prompts. Focus on building the muscle memory of reaching for your new library instead of a messy notes file. Categorize them with a clear, logical structure from day one.

- For Development Teams: If you're building LLM-powered applications, your needs are more complex. Platforms like Helicone, Langfuse, or PromptLayer should be integrated directly into your development lifecycle. Set up A/B tests for prompt variations and use an evaluation framework like Promptfoo to automate regression testing within your CI/CD pipeline. This prevents a brilliant new prompt from inadvertently breaking an existing feature.

- For Enterprise-Level Operations: When deploying AI at scale, governance, security, and observability are paramount. Tools like Google's Vertex AI or Humanloop offer the robust features needed for managing prompts across multiple teams and applications. Establish a clear governance model that defines who can create prompts, who reviews them, and how they are approved for production use.
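
For the A/B testing step mentioned for development teams, one common approach is to assign each user to a prompt variant deterministically, so the same user always sees the same version for the life of an experiment. The sketch below is a generic illustration, not the API of any tool in this list. The function `assign_variant`, the experiment name, and the two prompt templates are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to one prompt variant.

    Hashing the experiment name together with the user ID keeps a user on
    the same variant for the whole experiment, while a different experiment
    name produces an independent assignment.
    """
    if not variants:
        raise ValueError("at least one variant is required")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# Hypothetical prompt variants for a summarization feature.
PROMPTS = {
    "control": "Summarize the following text in three sentences:\n{text}",
    "treatment": (
        "You are a careful editor. Summarize the text below "
        "in three short sentences:\n{text}"
    ),
}

# Sort the variant names so the assignment does not depend on dict order.
variant = assign_variant("user-42", "summary-prompt-v2", sorted(PROMPTS))
prompt = PROMPTS[variant].format(text="Prompt libraries keep teams consistent.")
```

Logging the chosen `variant` alongside each model response lets you compare quality or cost per variant later, in whichever observability tool you adopt.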

Key Factors to Guide Your Decision

As you weigh the options we've discussed, circle back to your primary use case. Are you a content creator focused on creative output, or a software engineer building a complex RAG system? Your answer will significantly narrow the field.

Consider these final guiding questions:

- What is my core goal? Is it organization and collaboration (Promptaa), or is it deep technical observability and debugging (LangSmith, Langfuse)?

- Who is the end-user? Will non-technical team members need to create and manage prompts, or is this a tool exclusively for developers?

- How will I measure success? Is success defined by faster content creation, reduced LLM costs, or higher-quality, more reliable application outputs?

Once a prompt management tool is part of your workflow, keep measuring the performance and impact of your LLM applications so you can demonstrate value and guide further optimization. Organizations that need to track the broader business impact of these initiatives can work with specialized LLM visibility tracking agencies, which provide the analytics and reporting needed to connect prompt engineering efforts to tangible outcomes.

Ultimately, adopting any of these prompt management tools is a commitment to a more professional and effective way of working with AI. The goal is a library that grows with you, capturing your best work and making it instantly accessible. This shift turns your prompts from simple text strings into reusable, valuable assets, paving the way for more consistent, higher-quality AI-powered results.

Ready to move beyond messy text files and chaotic spreadsheets? Promptaa is designed for creators, marketers, and teams who need a simple, powerful, and collaborative way to organize, refine, and share their best prompts. Start building your centralized prompt library today and see the difference a structured workflow makes.