A Guide to Parameter-Efficient Fine-Tuning

Think about a world-class chef. This chef has spent years mastering thousands of recipes and techniques, building a deep, intuitive understanding of cooking. Now, you want to teach them a new, highly specialized dish.

Would you make them go back to culinary school and relearn everything from how to boil water? Of course not. Yet, this is essentially what traditional training methods demand when we want to adapt a massive AI model for a new task.

The Problem With Traditional AI Training

Today's most powerful AI models, especially the large language models behind generative AI, are incredible feats of engineering. They've been pre-trained on staggering amounts of data, setting the values of billions of parameters: think of these as the internal knobs the model adjusts to make its predictions. This initial training gives them a vast, general knowledge of language, patterns, and logic.

But to be truly useful for a specific business need, this generalist model needs to become a specialist. The old-school way to do this is called full fine-tuning.

What Is Full Fine-Tuning?

Full fine-tuning takes a pre-trained model and adjusts all of its billions of parameters using a new, smaller dataset tailored to a specific task. You're essentially retraining the entire model, just on a more focused subject. While this method works, it comes with a few gigantic, often deal-breaking, problems.

- Mind-Blowing Computational Costs: Tweaking every single parameter in a model like Llama 3 8B, which has 8 billion of them, requires a colossal amount of computing power. We're talking about needing fleets of expensive, high-end GPUs running for days or weeks. For most companies, the price tag is simply out of reach.

- Massive Storage Headaches: Every time you fully fine-tune a model for a different task, you're creating a brand new, full-sized copy. If the base model is 30GB, creating five specialized versions means you suddenly need 150GB of storage. This gets out of hand very, very quickly.

- The Risk of "Catastrophic Forgetting": When you alter all of a model's parameters to master a new skill, you can accidentally overwrite the general knowledge it learned during pre-training. The model might become a world-class expert on legal documents but forget how to have a simple, coherent conversation. It’s like our chef perfecting a single, complex soufflé recipe but forgetting how to chop an onion.

The bottom line is that full fine-tuning is powerful but brutally inefficient. It's like tearing down and rebuilding an entire house just to repaint one room.

This inefficiency has been a major roadblock, stopping countless developers and businesses from tailoring these incredible models to their own unique problems.

This is exactly the problem that Parameter-Efficient Fine-Tuning (PEFT) was created to solve. It provides a much smarter way forward: a way to teach our expert chef that new recipe without sending them back to square one. It makes customizing AI faster, cheaper, and far more accessible.

Understanding Parameter-Efficient Fine-Tuning

So, what's the magic behind this approach? The core idea of parameter-efficient fine-tuning is surprisingly straightforward: instead of updating every one of the billions of parameters inside a massive pre-trained model, you freeze almost all of them. Then you train only a tiny, strategically chosen set of parameters, either a small subset of the existing ones or a handful of new ones added for the task.

Think of it this way. Full fine-tuning is like customizing a complex piece of software by rewriting its entire source code. It's a massive, expensive, and often risky job. Parameter-efficient fine-tuning, or PEFT, is the smarter play. It's more like installing a lightweight plugin that adds a feature or modifies the software's behavior for a specific task, all without touching the core code.

This "plugin" is a small set of trainable parameters that work in harmony with the original, frozen ones. Because there are several ways to choose or add those parameters, PEFT is a whole family of techniques rather than a single method.

The Power of Selective Training

By freezing most of the model, PEFT preserves the vast general knowledge the model learned during its initial, expensive training run. This greatly reduces the risk of "catastrophic forgetting," where a model gets so good at one niche task that it loses its broader skills. You're not retraining the model from the ground up; you're teaching it a new trick in a very targeted way.

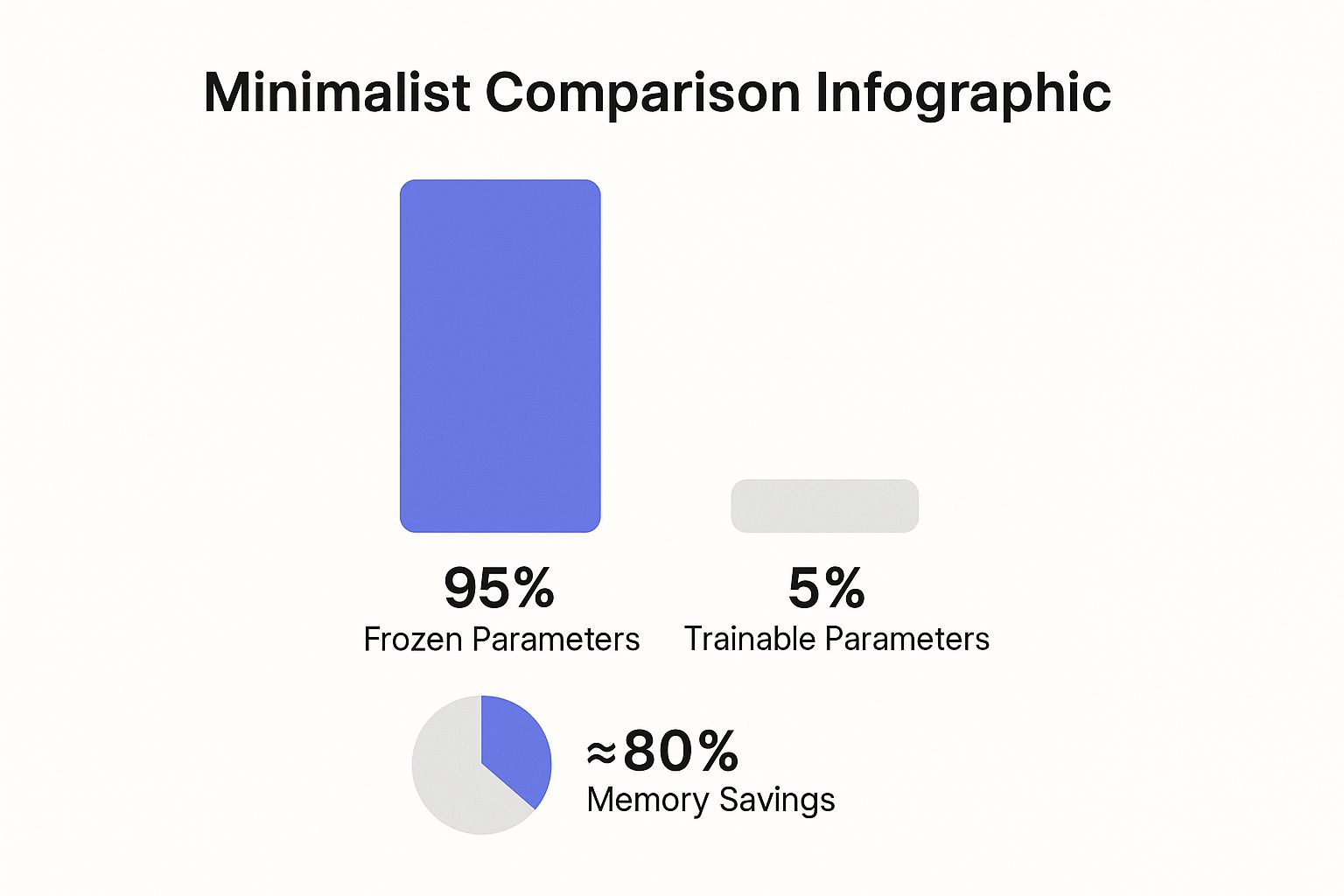

This infographic really drives home the difference in the number of parameters being trained and the memory savings that result.

As you can see, the efficiency boost is massive. It allows teams to fine-tune these huge models on hardware that's much more accessible and affordable.

PEFT methods have fundamentally changed how we adapt large language models for new jobs by slashing computational and memory costs. Some PEFT techniques, for instance, can cut the number of trainable parameters down to just 0.1% of the original model's size. That means you're adjusting 1,000 times fewer parameters than in a full fine-tuning run. This opens the door to training on modest hardware that would have been a non-starter for models of this scale.

Core PEFT Methodologies

While there are many specific PEFT techniques out there, they generally fall into a few main categories. Getting a handle on these categories gives you a solid framework for picking the right tool for your project.

- Additive Methods: These approaches add small, new layers, modules, or trainable vectors to the model. Classic examples are adapter layers inserted between a model's existing layers and the soft-prompt methods (Prefix-Tuning and Prompt Tuning) covered below. Only the new pieces are trained, leaving the original weights completely untouched. It's like adding a small set of precision dials to the model's existing machinery.

- Selective Methods: These methods don't add anything new. Instead, they unfreeze and train a tiny subset of the model's original parameters. This might mean training just a few specific layers or only certain parts of those layers; BitFit, for example, trains only the bias terms.

- Reparameterization-Based Methods: These methods change how the weight update is represented instead of training it directly. LoRA is the classic example: rather than learning a full-size update for each huge weight matrix, it learns that update as the product of two much smaller matrices (a low-rank decomposition), which is far cheaper to train and store.
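For readers who want the formula behind the low-rank idea, this is the LoRA update as written in the original LoRA paper, for one frozen weight matrix $W_0$ and input $x$. The learned change $\Delta W$ is the product of two thin matrices $B$ and $A$, scaled by $\alpha / r$, where the rank $r$ and the scaling factor $\alpha$ are hyperparameters. $B$ is initialized to zero, so training starts from the unchanged pre-trained model.

$$
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad
W_0 \in \mathbb{R}^{d \times k},\;
B \in \mathbb{R}^{d \times r},\;
A \in \mathbb{R}^{r \times k},\;
r \ll \min(d, k)
$$

Only $A$ and $B$ are trained, so one $d \times k$ matrix needs $r(d + k)$ trainable values instead of $dk$. With illustrative sizes $d = k = 4096$ and $r = 8$:

$$
r(d + k) = 8 \times 8192 = 65{,}536
\quad\text{vs.}\quad
dk = 16{,}777{,}216
\quad(\approx 0.39\%)
$$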

PEFT isn't about compromising on performance. On many tasks it matches the results of full fine-tuning, and occasionally beats them, with a fraction of the computational and financial resources.

By concentrating the training process on a small, manageable set of parameters, PEFT makes custom AI development faster, cheaper, and more sustainable. This has allowed many more organizations, including those with modest budgets, to start adapting powerful AI models for their own needs.

Popular PEFT Techniques Explained

The world of parameter-efficient fine-tuning isn't a one-size-fits-all solution. It's a collection of clever strategies, each taking a slightly different angle on how to adapt these massive models. While new methods pop up all the time, a few have really stood out and become the go-to choices for developers.

Let's break down three of the most popular PEFT methods. We'll skip the dense math and use some simple analogies to get to the heart of how they work.

LoRA: Low-Rank Adaptation

Picture a massive, complex piece of machinery—say, a factory assembly line. This represents our pre-trained model, with thousands of settings (parameters) all perfectly calibrated for a wide range of jobs. If you wanted to start producing a slightly different product, you wouldn't tear down and rebuild the entire line. That would be insane.

Instead, you'd just add a few small, precise control dials at key points. That’s the core idea behind LoRA (Low-Rank Adaptation).

LoRA works by injecting tiny, trainable "adapter" modules into the model's architecture, usually alongside the weight matrices in its attention layers. Each module is a pair of small, low-rank matrices whose product is added to the output of the frozen matrix it sits next to, so it holds very few parameters compared to the giant original.

During fine-tuning, the original model's massive machinery is completely frozen; it doesn't change at all. We train only the new, tiny dials: the LoRA adapters. On very large models the savings are enormous; the original LoRA paper reported roughly 10,000 times fewer trainable parameters than full fine-tuning of GPT-3 175B.

The best part? Once training is done, the adjustments from these new dials can be mathematically merged back into the main machinery. You're left with a single, updated model that has the new skill baked in, with no extra parts to lug around. This makes LoRA incredibly efficient for both training and deployment.
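To make the "dials" and the merge step concrete, here is a minimal pure-Python sketch of one LoRA-adapted linear layer. It is not the Hugging Face PEFT implementation: the class and helper names are hypothetical, the matrices are tiny, and "training" is faked by assigning random values to `B`. Following the LoRA paper, `A` starts small and random, `B` starts at zero (so the adapter initially changes nothing), and the update is scaled by `alpha / r`. The last lines check that the adapter path and the merged matrix produce the same output.

```python
import random

Matrix = list[list[float]]
Vector = list[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


class LoRALinear:
    """A frozen weight W (d_out x d_in) plus trainable factors B (d_out x r) and A (r x d_in)."""

    def __init__(self, weight: Matrix, r: int, alpha: float, seed: int = 0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen: never touched by the optimizer
        self.A = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]  # zeros, so the adapter starts as a no-op
        self.scale = alpha / r

    def forward(self, x: Vector) -> Vector:
        """Unmerged path: W x + (alpha / r) * B (A x)."""
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def merged_weight(self) -> Matrix:
        """Fold the adapter into a single matrix: W + (alpha / r) * B A."""
        delta = matmul(self.B, self.A)
        return [[w + self.scale * d for w, d in zip(w_row, d_row)]
                for w_row, d_row in zip(self.weight, delta)]


rng = random.Random(42)
W = [[rng.uniform(-1, 1) for _ in range(6)] for _ in range(4)]
layer = LoRALinear(W, r=2, alpha=4.0)
# Stand-in for training: a real run would learn B (and A) by gradient descent.
layer.B = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(4)]
x = [rng.uniform(-1, 1) for _ in range(6)]
y_adapter = layer.forward(x)
y_merged = matvec(layer.merged_weight(), x)
print(max(abs(a - b) for a, b in zip(y_adapter, y_merged)))  # only floating-point rounding
```

Merging works because `W x + (alpha / r) B (A x)` equals `(W + (alpha / r) B A) x`, so the merged layer costs exactly as much to run as the original one.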

Prefix-Tuning

Now, imagine you're working with a brilliant but very literal assistant. To get the best results, you can't just blurt out a question. You need to give them some context first—a little preamble that sets the stage. This is exactly how Prefix-Tuning operates.

This technique freezes the entire pre-trained model and instead learns a prefix: a short sequence of continuous, trainable vectors. At every layer of the model, these vectors are prepended to the attention keys and values, so each layer attends to them as if they were extra tokens at the start of the input.

Think of it as a small, sticky-note "instruction manual" that the model re-reads at every step of its process. It doesn’t change the model’s internal knowledge, but it steers its attention and guides its calculations for the specific task you’re working on. A prefix tuned for summarization will constantly nudge the model to focus on finding and condensing the most important information.

By only training this tiny prefix, we avoid touching any of the original model's billions of parameters, making it another highly efficient approach.
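Here is a minimal pure-Python sketch of what a prefix does inside one layer, assuming a single attention head and tiny, hypothetical sizes. The keys and values computed from the real tokens stay fixed; the only trainable pieces are the prefix keys and values, which are randomly initialized here as a stand-in for trained ones. The original Prefix-Tuning paper also produces the prefix through a small MLP during training for stability; this sketch skips that and stores the vectors directly.

```python
import math
import random

Vector = list[float]


def attend(query: Vector, keys: list[Vector], values: list[Vector]) -> Vector:
    """Single-head scaled dot-product attention for one query position."""
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(len(query)) for key in keys]
    peak = max(scores)
    weights = [math.exp(s - peak) for s in scores]  # numerically stable softmax
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(len(values[0]))]


def prefix_param_count(prefix_len: int, n_layers: int, hidden: int) -> int:
    """Each layer learns prefix_len key vectors and prefix_len value vectors of size hidden."""
    return prefix_len * n_layers * 2 * hidden


rng = random.Random(0)
dim, prefix_len = 4, 2

# Keys and values the frozen model computed for 3 real input tokens at one layer.
keys = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(3)]
values = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(3)]
query = [rng.uniform(-1, 1) for _ in range(dim)]

# The trainable part: this layer's prefix keys and values.
prefix_keys = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(prefix_len)]
prefix_values = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(prefix_len)]

plain = attend(query, keys, values)
steered = attend(query, prefix_keys + keys, prefix_values + values)
print(plain)
print(steered)  # same frozen keys/values, different output because of the prefix

# Hypothetical size: 10 prefix positions, 32 layers, hidden size 4096.
print(prefix_param_count(10, 32, 4096))  # 2621440
```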

Prompt Tuning

If Prefix-Tuning is like writing a detailed instruction manual, then Prompt Tuning is like finding the perfect "magic words" to get exactly what you need. It’s a simpler, more streamlined take on the same concept.

Just like Prefix-Tuning, this method keeps the entire language model frozen. But instead of adding a trainable prefix to every layer, Prompt Tuning adds a small set of trainable "soft prompts" only to the very beginning, at the input layer.

These aren't prompts you can read. They're learned numerical vectors (embeddings) that are optimized to nudge the model's output in the right direction. In effect, it's an automated way of finding a potent set of instructions for the model, one that can encode signals that are hard to express in hand-written text.

Because it only trains these few "magic words" at the input, Prompt Tuning is one of the most lightweight PEFT methods out there. All you need to store for each new task is a tiny file containing that specific prompt.
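A minimal sketch of where the soft prompt enters: it is simply prepended to the embedded input tokens, and only those prepended vectors would be trained. The function names, the 20-token prompt length, the 4096-dimensional embeddings, and 16-bit storage are illustrative assumptions, not values taken from any particular model.

```python
import random

Vector = list[float]


def init_soft_prompt(n_tokens: int, dim: int, seed: int = 0) -> list[Vector]:
    """Trainable soft-prompt vectors; these are the only parameters Prompt Tuning updates."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(n_tokens)]


def prepend_soft_prompt(soft_prompt: list[Vector], token_embeddings: list[Vector]) -> list[Vector]:
    """Input layer only: the frozen model receives [soft prompt ; real token embeddings]."""
    return soft_prompt + token_embeddings


def soft_prompt_bytes(n_tokens: int, dim: int, bytes_per_value: int = 2) -> int:
    """Storage for one task's prompt, assuming 16-bit values by default."""
    return n_tokens * dim * bytes_per_value


dim = 8
token_embeddings = [[0.1 * i] * dim for i in range(3)]  # stand-in for 3 embedded input tokens
soft_prompt = init_soft_prompt(n_tokens=5, dim=dim)
model_input = prepend_soft_prompt(soft_prompt, token_embeddings)
print(len(model_input))  # 8 positions: 5 soft-prompt vectors + 3 tokens

# Hypothetical task: 20 soft tokens for a model with 4096-dimensional embeddings.
print(soft_prompt_bytes(20, 4096))  # 163840 bytes, about 160 KiB
```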

Comparing Popular PEFT Methods

So, you have LoRA, Prefix-Tuning, and Prompt Tuning. Each one offers a different trade-off between performance, complexity, and efficiency. LoRA often gets the nod for its raw performance across many different tasks, while Prompt and Prefix-Tuning are champions of lean, mean efficiency.

To make the choice a little clearer, here’s a quick side-by-side comparison.


| Technique | Mechanism | Trainable Parameters | Best For |
|---|---|---|---|
| LoRA | Adds small, trainable low-rank matrices alongside existing weight matrices. | Very few (often well under 1% of total, depending on rank) | Getting strong performance on a wide variety of tasks with minimal parameter overhead. |
| Prefix-Tuning | Prepends trainable prefix vectors to the attention keys and values at every layer. | Very few (about 0.1% in the original paper) | Complex generation tasks where you need consistent guidance throughout the model's computation. |
| Prompt Tuning | Prepends a small set of trainable "soft prompts" to the input embeddings. | Fewest of the three (only the soft-prompt vectors) | Natural language understanding tasks where efficiency and minimal storage are the absolute top priorities. |

Ultimately, what these methods represent is a huge shift in how we think about specializing AI. Instead of building monolithic models for every task, we can now create whole fleets of customized experts for a fraction of the cost. This opens the door to applying powerful AI to all sorts of niche and specific business problems that were previously out of reach.

The Business Case: Why PEFT is More Than Just a Technical Trick

Let's move beyond the clever math and engineering for a moment. The real reason PEFT is taking the AI world by storm boils down to its impact on business. This isn't just about adopting a new technology; it's a strategic move that directly affects your budget, your team's speed, and your position in the market. It fundamentally changes the economics of building custom AI.

The most obvious win is the massive cost saving. Full fine-tuning is an expensive habit. It often requires renting out entire clusters of high-end, top-dollar GPUs for days or weeks at a time. Historically, this put bespoke AI models out of reach for anyone but the biggest, most well-funded tech giants.

PEFT dramatically lowers that financial barrier. Since you're only training a tiny fraction of the model's parameters, the need for raw computational horsepower drops sharply. Your development team can get strong results using far more accessible and significantly cheaper hardware.

Move Faster, Innovate More

This cost reduction naturally leads to a second, equally powerful benefit: unprecedented speed. Projects that used to involve months of painstaking planning and execution can now be turned around in a matter of weeks, sometimes even days. For any business trying to keep up with a fast-moving market, that kind of agility is a huge advantage.

Instead of one single, high-stakes training run, teams can finally afford to experiment. They can iterate quickly on different datasets, try out various PEFT methods like LoRA, and spin up multiple specialized models for different tasks—all without getting an angry call from the finance department.

Parameter-efficient fine-tuning transforms AI development from a slow, monolithic process into a rapid, iterative cycle of innovation. It empowers teams to fail fast, learn faster, and get solutions to market before the competition.

This rapid iteration is critical for staying relevant. PEFT adoption is surging because it hits a sweet spot between performance and resource efficiency. In industries like finance, healthcare, and software, where data is always changing, PEFT makes it possible to retrain models quickly, cutting timelines from weeks to days without expensive GPU clusters. This isn't just an incremental improvement; it's democratizing AI, letting more companies build and maintain advanced models sustainably.

The Scalability and Sustainability Edge

Finally, there’s a sustainability angle here that is becoming more important every day. The huge energy footprint of training large AI models is a serious and growing concern. PEFT offers a much greener path forward.

- Lower Energy Bills: Less computation means less electricity burned during training. It's that simple.

- A Smaller Carbon Footprint: By cutting down on resource-heavy training runs, companies can genuinely reduce the environmental impact of their AI work.

- Smarter Scalability: Managing and storing your models also becomes ridiculously easy. Instead of saving a new multi-gigabyte copy of a model for every single task, you just have to store the small, lightweight adapter weights.

This approach makes it truly practical to deploy and maintain dozens or even hundreds of specialized AI models—a logistical and financial nightmare if you were attempting it with full fine-tuning.
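The storage argument is simple arithmetic. This sketch reuses the 30GB base model from the earlier example and assumes a hypothetical 30 MB adapter per task; real adapter sizes depend on the method, the rank, and which layers are adapted.

```python
def full_finetune_storage_mb(n_tasks: int, model_mb: int) -> int:
    """Full fine-tuning: every task gets its own full-sized copy of the model."""
    return n_tasks * model_mb


def peft_storage_mb(n_tasks: int, model_mb: int, adapter_mb: int) -> int:
    """PEFT: one shared frozen base model plus one small adapter per task."""
    return model_mb + n_tasks * adapter_mb


MODEL_MB = 30_000  # the 30GB base model from the earlier example
ADAPTER_MB = 30    # hypothetical adapter size

for n_tasks in (5, 50):
    full = full_finetune_storage_mb(n_tasks, MODEL_MB)
    peft = peft_storage_mb(n_tasks, MODEL_MB, ADAPTER_MB)
    print(n_tasks, full, peft)
```

With five tasks that is 150,000 MB of full copies versus 30,150 MB for one base model plus adapters; at fifty tasks the gap grows to 1,500,000 MB versus 31,500 MB.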

In the end, PEFT isn't just a clever optimization. It's a strategic tool that slashes costs, supercharges development, and encourages more responsible AI practices. It gives more organizations a real shot at building a competitive advantage with custom AI, all in a way that’s both affordable and sustainable.

How PEFT Is Used in The Real World

Theory is one thing, but seeing parameter efficient fine tuning in action is where its real power becomes clear. Let's move past the concepts and look at how PEFT is solving tangible business problems, turning massive, generalist models into highly specialized and cost-effective tools.

PEFT really shines in situations where a full fine-tune is just not practical, either due to a lack of data or sky-high costs. One of the most compelling examples comes from the world of remote sensing and satellite imagery.

Transforming Earth Observation with Limited Data

Picture an organization trying to monitor global deforestation or identify specific crop types from space. They have a powerful, pre-trained vision model at their disposal, but their collection of labeled satellite images for "diseased soybean fields in Brazil" is tiny.

Trying to fully fine-tune a massive model on such a small, niche dataset would be incredibly expensive. Worse, it would almost certainly cause the model to "overfit"—it would memorize the training images and perform poorly on any new ones it sees.

This is exactly where PEFT becomes a game-changer. Using a method like LoRA, they can freeze the model's core understanding of the visual world and train only a small set of new adapter layers. This lets the model learn the specific visual signatures of diseased soybeans without messing with its general knowledge of landscapes, clouds, and terrain.

The efficiency of PEFT has huge implications for any field with limited or imbalanced data. In remote sensing, for example, labeled data is scarce and you often have many rare categories to identify. By smartly adjusting where tunable modules are inserted and tweaking learning rates for each, PEFT can dramatically improve accuracy on these rare classes. This shows how parameter efficient fine tuning not only slashes costs but also solves the critical challenge of adapting models to limited data—a major hurdle in fields like agriculture and environmental monitoring.

Customizing Customer Service Chatbots

Another fantastic application is in customer service. Imagine a large telecom company using a general-purpose chatbot built on a foundation model like Llama 3. The bot is great at handling common questions like "what's my bill?" but it gets lost when faced with the company's specific, jargon-filled technical support problems.

A full fine-tune is off the table. It would be costly, would need a large and carefully balanced dataset of conversations, and would risk making the bot "forget" how to handle basic conversational flows. So, what do they do? They use PEFT to create a whole team of specialized "expert" bots.

- Billing Bot: Fine-tuned with a LoRA adapter trained purely on billing inquiries.

- Technical Support Bot: Another adapter trained on router setup guides and troubleshooting logs.

- Sales Bot: A third adapter trained on product features and current promotions.

Each of these adapters is tiny, often just a few megabytes. The company can load the right one on the fly based on what a customer asks, instantly turning its generalist chatbot into a focused specialist. This approach is not only efficient but also highly scalable, letting the company roll out dozens of expert bots without storing dozens of separate multi-billion-parameter models.
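Choosing an adapter on the fly can be sketched as a small routing step that runs before generation. Everything here is hypothetical: the adapter names and paths, and the keyword matching, which a production system would likely replace with a trained intent classifier. In the Hugging Face PEFT library, a `PeftModel` can hold several named adapters and switch between them with `set_adapter`; this sketch covers only the decision of which adapter to activate, returning `None` when the shared base model should answer on its own.

```python
from typing import Optional

# Hypothetical adapter names mapped to hypothetical local paths, one LoRA adapter per specialty.
ADAPTER_PATHS = {
    "billing": "adapters/billing",
    "tech_support": "adapters/tech_support",
    "sales": "adapters/sales",
}

# Hypothetical keyword lists; a production router might use a small classifier instead.
KEYWORDS = {
    "billing": ("bill", "invoice", "charge", "refund", "payment"),
    "tech_support": ("router", "wifi", "outage", "reset", "connection"),
    "sales": ("upgrade", "promotion", "deal", "new phone"),
}


def route(message: str) -> Optional[str]:
    """Pick the adapter whose keywords best match the message, or None for the base model."""
    text = message.lower()
    scores = {name: sum(word in text for word in words) for name, words in KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def adapter_for(message: str) -> Optional[str]:
    """Return the adapter path to activate, or None to answer with the shared base model alone."""
    name = route(message)
    return ADAPTER_PATHS[name] if name else None


for msg in ("Why is my bill so high?", "My router keeps dropping the connection", "Hi there!"):
    print(msg, "->", adapter_for(msg))
```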

Streamlining Healthcare Documentation

In healthcare, doctors spend hours writing detailed clinical notes. A hospital wants to use an LLM to automatically summarize these notes into a clean, concise format for patient charts, freeing up precious time. The problem is that medical language is notoriously complex, packed with unique acronyms and phrasing.

A general model might summarize a clinical note by saying, "The patient's heart is beating fast." A properly fine-tuned model would know to use the correct clinical term: "The patient is experiencing tachycardia."

By using PEFT, the hospital can adapt a powerful language model to its specific terminology and documentation style. They can train it on a secure, anonymized dataset of their own clinical notes, creating a summarization tool that is both accurate and suited to their compliance requirements. Because PEFT needs far less compute, that training can run on the hospital's own hardware, so patient data can stay inside its own environment, and the result is a small, separate adapter file that is easy to store and access-control. That is a huge win for privacy.

How to Choose Your PEFT Strategy

So, you’re ready to try Parameter-Efficient Fine-Tuning. Picking a method can feel a bit like standing in front of a giant toolbox—each tool is specialized for a particular job. The trick isn't finding the single "best" method, but rather matching the right tool to your specific project.

This really boils down to three things: what task are you trying to accomplish, what model architecture are you using, and what kind of hardware are you working with?

Don't worry, this isn't as complicated as it sounds. You can use a simple framework to map the most common PEFT methods to your goals. This gives you a solid starting point so you can get from theory to practice without getting lost.

A Simple Decision Framework

For most projects, you can follow a straightforward hierarchy to land on the right method pretty quickly. This approach is all about balancing performance, ease of use, and computational cost.

A fantastic place to start for almost any use case is LoRA (Low-Rank Adaptation). It’s the workhorse of the PEFT world, delivering consistently strong results across a huge range of tasks without forcing you to mess with the model’s core architecture. If you're aiming for a general performance boost on something like text classification or summarization, LoRA is almost always your best bet.

Key Takeaway: When in doubt, start with LoRA. There's a reason it's the most popular PEFT method—it just works. Its blend of high performance and efficiency makes it incredibly versatile.

Now, if you want to steer the style of generated text across many tasks, such as creative writing, a specific brand voice, or several chatbot personalities, Prompt Tuning is a great choice. It's incredibly lightweight because you're only training a tiny set of "soft prompts," which makes it perfect for juggling many different tasks without burning through storage. Keep in mind that it tends to shine on larger models; in the original Prompt Tuning experiments, it closed the gap with full fine-tuning only as models grew into the billions of parameters.

For more nuanced sequence-to-sequence tasks like machine translation or really sophisticated summarization, you might want to look at Prefix-Tuning. By adding a trainable prefix to every layer of the model, it gives you much finer control over its internal workings. This can be a game-changer for tasks that need consistent, step-by-step guidance to get right.

Essential Tools for Implementation

Getting your hands dirty with PEFT is easier than ever, thanks to some brilliant open-source libraries. The absolute must-have in your toolkit is the PEFT library from Hugging Face. It offers clean, high-level APIs for implementing LoRA, Prompt Tuning, Prefix-Tuning, and more.

What's great about this library is that it handles most of the heavy lifting behind the scenes. You can add powerful fine-tuning capabilities to your training script with just a few lines of code. It also plays nicely with the rest of the Hugging Face ecosystem, like Transformers and Accelerate, which makes for a really smooth development process. With these tools in hand, you can start experimenting and putting PEFT to work on your own projects with confidence.

Got Questions About PEFT? We've Got Answers

As you start digging into parameter-efficient fine-tuning, you're bound to have some questions. It’s a big shift from the old way of doing things. Let's tackle some of the most common ones that come up.

Does PEFT Actually Hurt Model Performance?

This is the big one, the question on everyone's mind. And the answer is, for the most part, no. It’s a common misconception that tuning fewer parameters must lead to worse results, but that’s not what we see in practice.

For most real-world tasks, a well-tuned PEFT method like LoRA can perform just as well as full fine-tuning. Sometimes, it even does a little better. You're not starting from scratch; you're just teaching an already brilliant model a new, specific skill. By freezing most of the original weights, you protect its incredible base knowledge from being overwritten or forgotten.

So, Which PEFT Method Should I Use?

There's no single "best" method for every situation. The right choice really depends on your project's needs, your data, and what kind of hardware you're working with.

That said, if you're looking for a solid starting point, begin with LoRA (Low-Rank Adaptation). It’s the go-to for a reason. LoRA hits that sweet spot between high performance and resource efficiency, making it a reliable and versatile choice for a huge variety of tasks.

- If you're juggling dozens of tasks and need to keep your storage footprint absolutely minimal, Prompt Tuning is a fantastic, lightweight option.

- For more intricate text-generation tasks where you need a bit more control over the model's behavior, Prefix-Tuning might give you the edge.

How Much Memory Are We Really Saving Here?

The savings are dramatic. This is often the killer feature that convinces teams to switch to PEFT. With full fine-tuning, you have to load the entire model, plus its gradients and optimizer states, into your GPU's VRAM. It adds up fast.

PEFT completely changes the game.

Because you're only training a tiny sliver of the total parameters, often as little as 0.1%, you can slash memory usage during training by 80% or more. This is what makes it possible to fine-tune a large model on a single GPU, in many cases a consumer card when combined with quantization, instead of needing a whole rack of expensive, enterprise-grade hardware.
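A back-of-the-envelope estimate shows where the savings come from. This sketch uses a common accounting for mixed-precision training with the Adam optimizer: about 16 bytes per trainable parameter (16-bit weights and gradients, plus a 32-bit master copy of the weights and Adam's two 32-bit moment estimates). It assumes an 8-billion-parameter model with adapters at 0.1% of its size, and it deliberately ignores activations and framework overhead, which add real memory on top, so treat the numbers as illustrative.

```python
GB = 10**9


def full_finetune_bytes(n_params: int) -> int:
    """Mixed-precision Adam: fp16 weights (2) + fp16 grads (2) + fp32 master, momentum, variance (12)."""
    return 16 * n_params


def lora_finetune_bytes(n_params: int, n_trainable: int) -> int:
    """Frozen fp16 base weights need no grads or optimizer state; only adapter params pay 16 bytes."""
    return 2 * n_params + 16 * n_trainable


n_params = 8_000_000_000        # an 8B-parameter model
n_trainable = n_params // 1000  # adapters at 0.1% of the base model's size
full = full_finetune_bytes(n_params)
lora = lora_finetune_bytes(n_params, n_trainable)
print(full / GB, lora / GB, 1 - lora / full)  # about 128 GB vs 16.1 GB: roughly 87% less
```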

Can I Mix PEFT with Other Optimization Tricks?

Absolutely! This is where you can unlock some serious efficiency gains. PEFT plays incredibly well with other optimization techniques, especially quantization.

Quantization shrinks a model by using lower-precision numbers for its weights (like 8-bit or 4-bit integers instead of the standard 32-bit floats). You can take a pre-quantized base model and then apply a PEFT adapter like LoRA right on top. This one-two punch creates an unbelievably efficient setup for both fine-tuning and serving your model, demanding a fraction of the resources you'd normally need.
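This pairing of a frozen, quantized base model with full-precision LoRA adapters is the idea behind QLoRA. The sketch below shows the structure in pure Python but swaps in a simpler scheme than QLoRA's 4-bit NormalFloat (NF4) format: per-row 8-bit absmax quantization. Names and sizes are hypothetical. The base weights are stored as small integers plus one scale per row and dequantized on the fly, while the adapter factors `A` and `B` stay in full precision; because `B` starts at zero, the only difference from the exact float output here is quantization error.

```python
import random


def quantize_int8(row: list[float]) -> tuple[list[int], float]:
    """Absmax quantization of one row: scale floats so the largest magnitude maps to 127."""
    peak = max(abs(w) for w in row)
    scale = peak / 127 if peak > 0 else 1.0  # avoid dividing by zero on an all-zero row
    return [round(w / scale) for w in row], scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map stored integers back to approximate float weights."""
    return [v * scale for v in q]


class QuantizedLoRALinear:
    """Frozen int8 base weights plus full-precision LoRA factors B and A."""

    def __init__(self, weight: list[list[float]], r: int, alpha: float, seed: int = 0):
        rng = random.Random(seed)
        self.rows = [quantize_int8(row) for row in weight]  # frozen, stored as int8 + scale
        d_out, d_in = len(weight), len(weight[0])
        self.A = [[rng.gauss(0.0, 0.02) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x: list[float]) -> list[float]:
        # Dequantize each frozen row on the fly; only A and B would receive gradients.
        base = [sum(w * xi for w, xi in zip(dequantize(q, s), x)) for q, s in self.rows]
        ax = [sum(a * xi for a, xi in zip(row, x)) for row in self.A]
        update = [sum(b * v for b, v in zip(row, ax)) for row in self.B]
        return [b + self.scale * u for b, u in zip(base, update)]


rng = random.Random(7)
W = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(4)]
x = [rng.uniform(-1, 1) for _ in range(8)]
layer = QuantizedLoRALinear(W, r=2, alpha=4.0)
exact = [sum(w * xi for w, xi in zip(row, x)) for row in W]
approx = layer.forward(x)
print(max(abs(a - e) for a, e in zip(approx, exact)))  # small quantization error
```

Each stored weight is off by at most half a quantization step, so the error in each output stays bounded by that step size times the magnitude of the input.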