Master Natural Language Processing Basics Today

Natural Language Processing (NLP) is a fascinating branch of artificial intelligence that essentially teaches computers how to read, understand, and make sense of human language. It’s the magic that bridges the gap between how we talk and how computers think.

You're already using it every single day, probably without even realizing it. Think about your phone's predictive text or the spam filters that miraculously keep your inbox clean. That's NLP at work.

What Is Natural Language Processing

So, what’s actually happening under the hood? Imagine trying to explain a sarcastic comment to a robot. You can't just define the words; the robot needs to get the context, the tone, and the shared understanding that makes the comment funny. That’s the core challenge of Natural Language Processing. It's way more than just vocabulary—it's about teaching machines to navigate the beautifully messy and ambiguous world of human communication.

At its core, NLP breaks our language down into pieces that a computer can actually process. An NLP model doesn't see "The quick brown fox jumps over the lazy dog." Instead, it sees a puzzle of grammatical structures, word relationships, and potential meanings that it needs to solve.

Bridging The Human-Computer Gap

The main point of NLP is to make our interactions with technology feel less like we're typing commands and more like we're having a conversation. We shouldn't have to learn "computer-speak"; the goal is to teach computers to understand us.

This really boils down to a few key goals. To make it clearer, here’s a quick breakdown of what NLP systems are trying to accomplish.

Core Goals of Natural Language Processing

| Objective | Simple Explanation | Everyday Example |
|---|---|---|
| Understand Intent | Figuring out what someone really means, even if they phrase it weirdly. | Asking your phone "Where's a good place for coffee?" or "I need caffeine" and getting the same coffee shop results. |
| Extract Information | Pulling out specific, structured facts from a big block of text. | Scanning a news article to automatically identify the people, organizations, and locations mentioned. |
| Generate Responses | Creating natural-sounding text to answer questions or hold a conversation. | Chatbots that provide customer support or a voice assistant telling you the weather forecast. |

These objectives are what allow NLP to power so many of the tools we now take for granted.

The real power of understanding natural language processing basics is realizing it’s less about technology and more about teaching machines the art of human context.

When your email client flags a sketchy message or a voice assistant schedules your next meeting, that’s NLP doing the heavy lifting behind the scenes. It's that invisible layer of intelligence making our tech just a little more human.

The First Steps in Teaching Machines to Talk

To really get a feel for how far we've come with natural language processing, it helps to rewind the clock. The whole journey kicked off in the 1950s, a time when researchers first started to seriously ponder whether a machine could actually understand the way we talk. Alan Turing famously raised the underlying question, whether machines can think, in his 1950 paper "Computing Machinery and Intelligence," and researchers soon began putting it to the test.

One of the most significant early milestones was the 1954 Georgetown-IBM experiment. This pioneering effort managed to automatically translate more than 60 Russian sentences into English—a huge feat for its time.

The Age of Rules and Phrasebooks

So, how did these early systems work? Imagine giving a tourist a phrasebook. They were built on a similar idea: a rigid set of grammatical rules and a very limited vocabulary. This approach works just fine for straightforward, predictable sentences like, "Where is the library?"

But the moment you throw in slang, a metaphor, or just the natural, messy flow of a real conversation, the whole thing grinds to a halt.

These rule-based systems were incredibly labor-intensive. Linguists had to sit down and manually code every single grammatical possibility they could think of. This made the systems extremely brittle; a single unexpected word could break the entire process.
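To make that brittleness concrete, here is a minimal sketch of the phrasebook idea. It is not a reconstruction of any historical system; the `PHRASEBOOK` table and the `translate` helper are hypothetical names invented for illustration, and the Spanish phrases are just sample data.

```python
# Hypothetical phrasebook: every sentence the system "knows" must be listed by hand.
PHRASEBOOK = {
    "where is the library": "¿Dónde está la biblioteca?",
    "thank you": "Gracias.",
}

def translate(sentence: str):
    """Look up a sentence after light normalization; return None if it is not listed."""
    key = sentence.lower().strip(" ?!.")
    return PHRASEBOOK.get(key)

print(translate("Where is the library?"))        # ¿Dónde está la biblioteca?
print(translate("Yo, where's the library at?"))  # None
```

The second call fails even though a person would read both sentences as the same question, which is the kind of breakage described above.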

These first attempts hammered home a fundamental truth about language: it's far too complex, messy, and creative to be boxed in by a finite set of rules.

This realization was a turning point. While these rule-based systems were a necessary first step, their shortcomings made it clear that a new path was needed. Machines didn't just need to follow instructions; they needed a way to learn.

This insight set the stage for a massive pivot, moving away from rigid rules and toward the flexible, data-hungry models that define modern NLP. For a look at how we now guide these sophisticated models, you can explore our guide on the different types of prompting.

How NLP Takes Human Language Apart

Before a machine can ever hope to understand a sentence, it has to tear it down to its basic components. Think of it like a mechanic taking an engine apart piece by piece to see how it works. This is the very first, non-negotiable step in all of NLP.

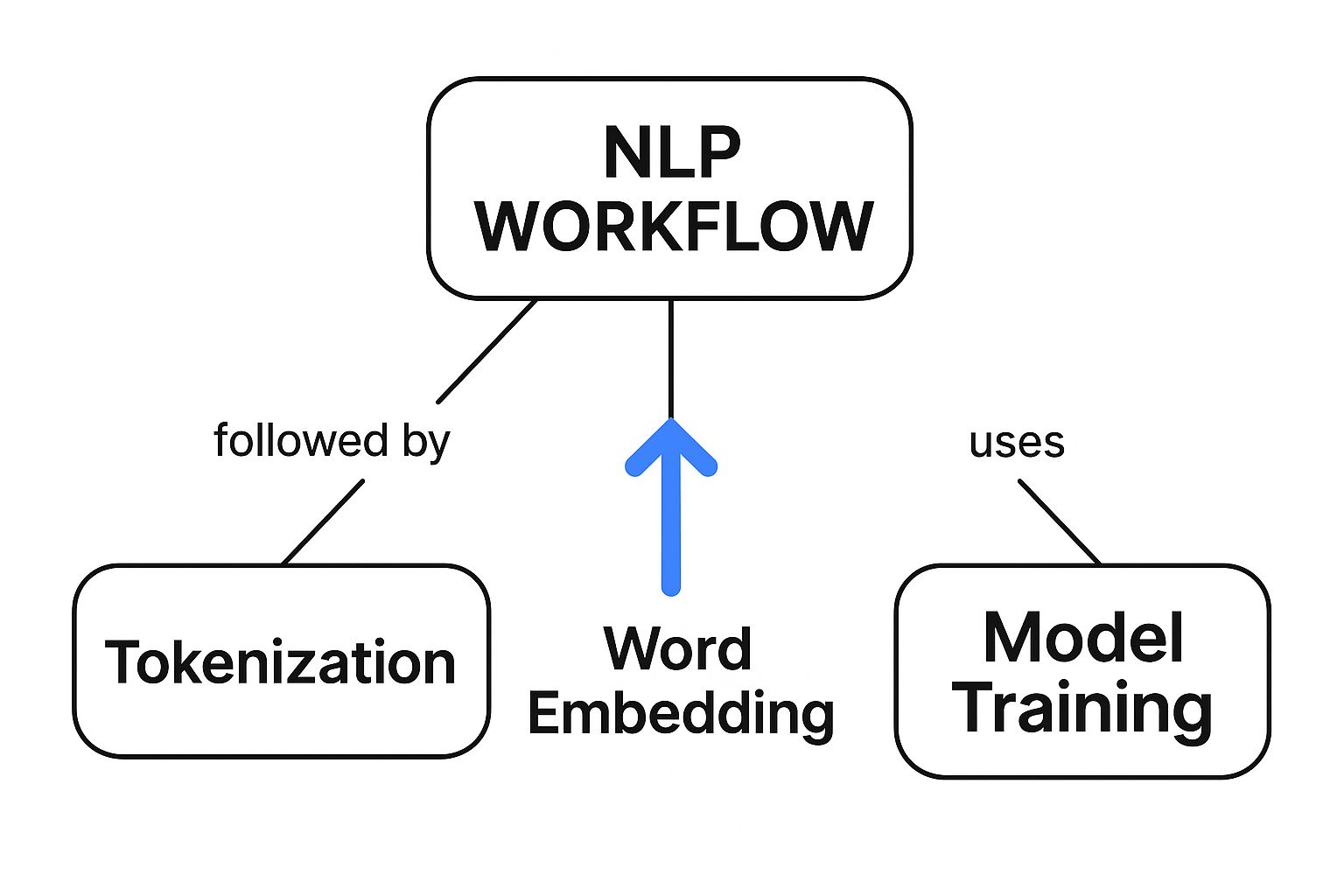

The process kicks off with something called tokenization. It sounds technical, but it’s really just about chopping up a sentence or a paragraph into smaller units called "tokens."

So, the sentence "The dogs are barking loudly" is broken into five individual tokens: "The", "dogs", "are", "barking", and "loudly". Each word becomes a distinct piece for the machine to examine.

Tokenization is a lot like separating Lego bricks from a completed model. You can't grasp how the whole thing was built until you look at each individual brick.
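As a rough illustration of tokenization, the sketch below splits text with a single regular expression. The `tokenize` function is a hypothetical helper, and real tokenizers handle contractions, URLs, and sub-word units far more carefully than this.

```python
import re

def tokenize(text: str):
    """Split text into word tokens and standalone punctuation marks."""
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)

print(tokenize("The dogs are barking loudly"))
# ['The', 'dogs', 'are', 'barking', 'loudly']
```

Punctuation becomes its own token here, so "Hi, there!" would yield four tokens rather than two.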

Finding Meaning in the Pieces

Once the text is broken into a neat pile of tokens, the real work of figuring out the meaning can start. It’s not enough to just have the words; the machine needs to understand how they fit together. This is where a few key techniques come into play.

- Part-of-Speech (POS) Tagging: Just like we learned in school, every word has a grammatical job to do. POS tagging assigns a label to each token. For instance, "dogs" gets tagged as a noun, "are" and "barking" as verbs, and "loudly" as an adverb. This grammatical context is crucial for understanding the sentence's structure.

- Lemmatization: This is the process of getting to the root of a word. The words "running," "ran," and "runs" all share a core meaning, and lemmatization boils them all down to their dictionary form, or "lemma": "run". This stops the machine from treating variations of the same word as completely different ideas, which is essential for accurate analysis.
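The two techniques above can be sketched with a tiny hand-written lookup table. This is a toy under stated assumptions: the `LEXICON` entries and the `analyze` function are hypothetical, and production systems learn tags from annotated data and use context rather than a fixed dictionary.

```python
# Hypothetical mini-lexicon: token -> (part-of-speech tag, lemma).
LEXICON = {
    "the": ("DET", "the"),
    "dogs": ("NOUN", "dog"),
    "are": ("VERB", "be"),
    "barking": ("VERB", "bark"),
    "loudly": ("ADV", "loudly"),
    "running": ("VERB", "run"),
    "ran": ("VERB", "run"),
    "runs": ("VERB", "run"),
}

def analyze(tokens):
    """Return (token, tag, lemma) triples; unknown words get the tag 'UNK'."""
    result = []
    for tok in tokens:
        tag, lemma = LEXICON.get(tok.lower(), ("UNK", tok.lower()))
        result.append((tok, tag, lemma))
    return result

for row in analyze(["The", "dogs", "are", "barking", "loudly"]):
    print(row)
```

Calling `analyze(["running", "ran", "runs"])` maps all three tokens to the lemma "run", which is the grouping effect lemmatization is meant to provide.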

These fundamental steps are what turn the messy, nuanced flow of human language into organized data that a computer can actually work with.

By first breaking sentences into tokens, then figuring out each word's grammatical role, and finally finding their root meanings, NLP builds a solid, structured foundation. Only then can a machine move on to more complex jobs like figuring out user intent, analyzing customer sentiment, or answering a question.

The Big Shift: From Following Rules to Learning Patterns

Early NLP systems were a bit like a tourist trying to get by with a clunky, old-fashioned phrasebook. Developers painstakingly tried to hand-code a massive set of grammatical rules. But language is messy. It has endless exceptions, evolving slang, and creative phrasing that constantly breaks the rules. This rigid, rule-based approach was fragile and just couldn't keep up.

A major change was needed. Instead of trying to teach computers every single rule, a new idea started to take hold: what if machines could learn the patterns themselves? This was the pivotal moment when the field shifted from programming explicit instructions to learning directly from raw data.

This infographic breaks down the modern, pattern-based approach, showing how text is deconstructed, turned into numbers, and then used to train a model.

As you can see, modern NLP is a multi-stage process. It's all about systematically converting our messy, human language into clean, statistical patterns that a machine can actually understand and learn from.

From Manual Rules to Statistical Insights

This move away from labor-intensive, rule-based systems really picked up steam in the 1980s and 1990s. As huge digital text collections, known as corpora, became available, developers finally had the fuel they needed. For a deeper dive into this history, the University of Washington's computer science page offers a great overview.

Think of it as the difference between memorizing a dictionary and actually learning a language by immersing yourself in it. Instead of just knowing definitions, you start to get a feel for how words work together.

This leap allowed machines to figure out the probabilities and connections between words entirely on their own. By analyzing millions of sentences, an algorithm could learn that the word "queen" is statistically very likely to appear near words like "king," "royal," or "England." No one had to program that rule; the machine discovered the pattern from the data.
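Here is a small sketch of that idea: counting which words appear near "queen" in a corpus. The four-sentence `corpus` is made up for illustration, and `cooccurrence_counts` is a hypothetical helper; real systems work with millions of sentences and more refined statistics.

```python
from collections import Counter

# Tiny invented corpus, standing in for a large text collection.
corpus = [
    "the queen met the king at the royal palace",
    "the king and the queen rule england",
    "the royal queen visited england",
    "the dog chased the ball",
]

def cooccurrence_counts(sentences, target, window=3):
    """Count words appearing within `window` positions of each occurrence of `target`."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            if word != target:
                continue
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[words[j]] += 1
    return counts

counts = cooccurrence_counts(corpus, "queen")
print(counts["king"], counts["england"], counts["dog"])  # 2 2 0
```

Nobody told the program that "queen" and "king" are related; the association falls out of the counts alone.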

This conceptual shift is arguably the most important development in the history of NLP. It’s the engine that powers the sophisticated language tools we use every single day.

By learning from data, models became far more flexible and robust. They could finally handle much of the nuance and ambiguity that rule-based systems never could. This evolution paved the way for the powerful models we have today. In fact, the differences between large language models and generative AI highlight just how far this data-first approach has taken us.

Seeing NLP in Action All Around You

The theory behind natural language processing can feel a bit distant, but you're probably interacting with it dozens of times a day without even realizing it. NLP is the quiet, invisible engine running behind many of the digital tools we now take for granted, making them feel more intuitive and genuinely helpful.

Once you know what to look for, you'll start spotting it everywhere. From your email inbox to your favorite shopping app, NLP is constantly working to understand what we mean and what we want.

Decoding Customer Opinions

Ever wonder how an e-commerce site can sift through thousands of product reviews and slap a simple "85% positive" rating on a product? That’s Sentiment Analysis at work. It's a classic NLP task designed to figure out the emotional tone behind a piece of text.

The system reads a review like, "I absolutely loved this product, it works perfectly!" and knows it's positive. On the flip side, it sees "This was a complete waste of money and broke after one use" and tags it as negative. By doing this at a massive scale, businesses get an instant pulse check on how people feel.
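One very simple way to approximate this is a word-list scorer, sketched below. The `POSITIVE` and `NEGATIVE` sets and the `sentiment` function are hypothetical and deliberately tiny; modern systems typically use trained models that handle negation, sarcasm, and context, which this sketch does not.

```python
import re

# Hypothetical word lists; a real lexicon would contain thousands of entries.
POSITIVE = {"loved", "love", "perfectly", "great", "excellent"}
NEGATIVE = {"waste", "broke", "terrible", "awful", "bad"}

def sentiment(text: str) -> str:
    """Label text by comparing counts of positive and negative words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("I absolutely loved this product, it works perfectly!"))  # positive
print(sentiment("This was a complete waste of money and broke after one use"))  # negative
```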

Identifying Key Information Instantly

Another cool application you’ve definitely seen is Named Entity Recognition (NER). Think about reading a news article on your phone and seeing names of people, companies, and places automatically highlighted or linked. NER is the magic behind that.

An NER model scans text and pulls out important "entities." For example, in the sentence, "Tim Cook announced Apple's latest product from their headquarters in Cupertino," the model would pick out:

- Person: Tim Cook

- Organization: Apple

- Location: Cupertino

This simple act allows apps to categorize articles, pull up contextual information, or link you to related stories, making content much more dynamic.
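The example above can be imitated with a lookup list of known names, sometimes called a gazetteer. This is only a sketch: the `GAZETTEER` entries and `find_entities` are hypothetical, and real NER models use context to recognize names they have never seen before.

```python
# Hypothetical gazetteer: known entity names and their categories.
GAZETTEER = {
    "Tim Cook": "Person",
    "Apple": "Organization",
    "Cupertino": "Location",
}

def find_entities(text: str):
    """Return (name, label) pairs for gazetteer entries found in text, in reading order."""
    found = []
    for name, label in GAZETTEER.items():
        start = text.find(name)
        if start != -1:
            found.append((start, name, label))
    return [(name, label) for _, name, label in sorted(found)]

sentence = "Tim Cook announced Apple's latest product from their headquarters in Cupertino"
print(find_entities(sentence))
# [('Tim Cook', 'Person'), ('Apple', 'Organization'), ('Cupertino', 'Location')]
```

A pure lookup like this cannot tell Apple the company from apple the fruit, which is one reason trained models are preferred in practice.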

NLP isn't just about understanding words; it's about extracting meaningful, structured information from the chaotic flow of human communication. This ability to find signals in the noise is what makes it so valuable.

Breaking Down Language Barriers

Maybe the most obvious example is Machine Translation. Services like Google Translate use incredibly sophisticated NLP models to convert text from one language to another almost instantly. This is a far cry from the clunky, word-for-word substitutions of the past.

Modern translation systems analyze the grammar and context of the entire sentence to create a translation that sounds natural, not just technically correct. The fact that you can have a near real-time conversation with someone on the other side of the world is a direct result of decades of progress in natural language processing.

You might be surprised by just how many of your daily digital interactions are powered by these core NLP tasks. Here’s a quick look at a few common examples.

NLP Tasks and Their Everyday Applications

| NLP Task | What It Does | Where You See It |
|---|---|---|
| Sentiment Analysis | Gauges the emotional tone (positive, negative, neutral) of text. | Product review summaries on Amazon, social media monitoring tools. |
| Named Entity Recognition | Identifies and categorizes key entities like names, places, and brands. | Highlighting people and places in news articles, organizing your email inbox. |
| Machine Translation | Automatically translates text from a source language to a target language. | Google Translate, live translation features in apps like Skype. |
| Text Summarization | Condenses a long document into a short, coherent summary. | News apps that provide a "TL;DR" version of long articles. |
| Spam Detection | Classifies incoming emails as either "spam" or "not spam." | The spam filter in your Gmail or Outlook account. |

As you can see, NLP isn't some far-off future technology. It’s a practical and powerful tool that's already deeply embedded in the services we use every single day, making them smarter and more efficient.

What's Next for Natural Language Processing?

Teaching machines to understand us is a journey, not a destination. The foundational concepts of NLP paved the way for today's AI, but the field is always pushing forward, moving beyond just breaking down sentences to generating truly coherent, context-aware language.

This massive leap forward is thanks in large part to a groundbreaking architecture called the Transformer. First introduced in the now-famous 2017 paper "Attention Is All You Need," Transformers changed the game by letting a model weigh the importance of every word in a sentence relative to every other word. This is known as an "attention" mechanism, and it lets the model focus on the most relevant words to grasp the meaning.

This was a huge departure from older models that had to process words one by one, in a rigid order.

A Transformer doesn't just read a sentence; it understands the web of relationships between every word, no matter how far apart they are. This ability to see the "big picture" is what unlocked a new level of language comprehension.
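The weighting idea can be sketched in a few lines of plain Python. This is a simplified, single-query version of scaled dot-product attention with made-up two-dimensional vectors; a real Transformer learns its query, key, and value vectors and runs many attention heads in parallel. The function names here are illustrative, not from any library.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Weight each value by how well its key matches the query."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy vectors: the query matches the first key more closely than the second.
weights, output = attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 2.0], [3.0, 4.0]])
print(weights)
print(output)
```

The first weight comes out larger because the query points in the same direction as the first key, so the output leans toward the first value vector.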

The Rise of Large Language Models

This powerful Transformer architecture is the engine humming inside today’s Large Language Models (LLMs). The name is pretty descriptive: an LLM is a massive neural network trained on a staggering amount of text data scraped from the internet, books, and countless other sources.

By sifting through all this information, models like the ones powering ChatGPT learn the intricate patterns, grammar, facts, and even the reasoning styles embedded in human language. They get exceptionally good at one core task: predicting the next word (more precisely, the next token) in a sequence. That simple-sounding skill is the key to unlocking a huge range of abilities:

- Generating Human-Like Text: They can draft everything from professional emails and detailed articles to creative poems and functional code.

- Answering Complex Questions: Instead of just finding a link, they synthesize information to give you a complete, conversational answer.

- Translating Languages: They go beyond word-for-word translation to capture the subtle nuances of context and tone.

- Summarizing Long Documents: Need the highlights from a dense report? An LLM can distill the key points for you in seconds.

These capabilities are a quantum leap forward, making our interactions with technology feel more natural and intuitive than ever before. Getting a handle on the natural language processing basics is the first step to truly appreciating how these incredible tools will continue to shape our world, from smarter personal assistants to more capable creative partners.
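The next-word prediction task at the heart of LLMs can be illustrated with a far simpler model: counting which word most often follows another. This bigram sketch is not how an LLM works internally (LLMs use neural networks over long contexts); the training sentence and the helper names `train_bigrams` and `predict_next` are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text: str):
    """For each word, count the words that immediately follow it."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model, word: str):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

model = train_bigrams("the cat sat on the mat the cat ate the fish")
print(predict_next(model, "the"))    # cat
print(predict_next(model, "zebra"))  # None
```

Scaling the same objective up to enormous datasets, and conditioning on long contexts instead of one word, is what separates a toy like this from a modern language model.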

A Few Common Questions About NLP

As we wrap up this introduction to natural language processing, let's clear up a few questions that often come up.

Is NLP the Same as AI and Machine Learning?

It's a common point of confusion, but they're not the same thing. The easiest way to think about it is like a set of nesting dolls.

Artificial Intelligence (AI) is the biggest doll: it's the entire field of creating intelligent machines. Inside that doll, you'll find Machine Learning (ML), which is a specific approach to AI that involves training systems on data. NLP is another doll inside AI, one that’s focused entirely on language. The analogy isn't perfectly tidy, though: modern NLP leans heavily on ML, but the early rule-based systems were NLP without any machine learning at all.

Why Is NLP Considered So Difficult?

Human language is messy and beautiful, but for a computer, it’s a nightmare. It’s filled with ambiguity, context, slang, and sarcasm that we humans navigate effortlessly.

Think about a simple word like "set." The Oxford English Dictionary lists more than 400 senses for it! Computers, which thrive on rules and logic, have a tough time with that kind of nuance. This is why models can sometimes get things wrong or misunderstand what you mean. You can dive deeper into this issue in our guide on how to reduce hallucinations in LLMs.
