LLM for Code: Your New AI Coding Partner

So, what exactly is an LLM for code? Put simply, it’s an AI model that has been trained intensively on massive amounts of source code written in many programming languages, which makes it fluent in languages such as Python, JavaScript, and C++.

Think of it as a specialized language expert, but for software.

Your New Partner in Software Development

We've all been there—stuck on a tricky bug, hours ticking by as you scour forums for a solution. Now, imagine an AI coding partner suggesting a fix in seconds. This isn’t a far-off dream; it's what developers are experiencing right now with specialized Large Language Models (LLMs).

These aren't just glorified autocomplete tools. They're becoming genuine collaborators in the software development lifecycle.

An LLM for code is like a GPS for your programming journey. It doesn't just give you the final destination (a working feature); it suggests the fastest routes, warns you about potential "traffic jams" (bugs), and might even point out a scenic detour—a creative solution you hadn't thought of. It's a fundamental shift that's quickly making these AI models essential for developers everywhere.

Redefining the Developer Workflow

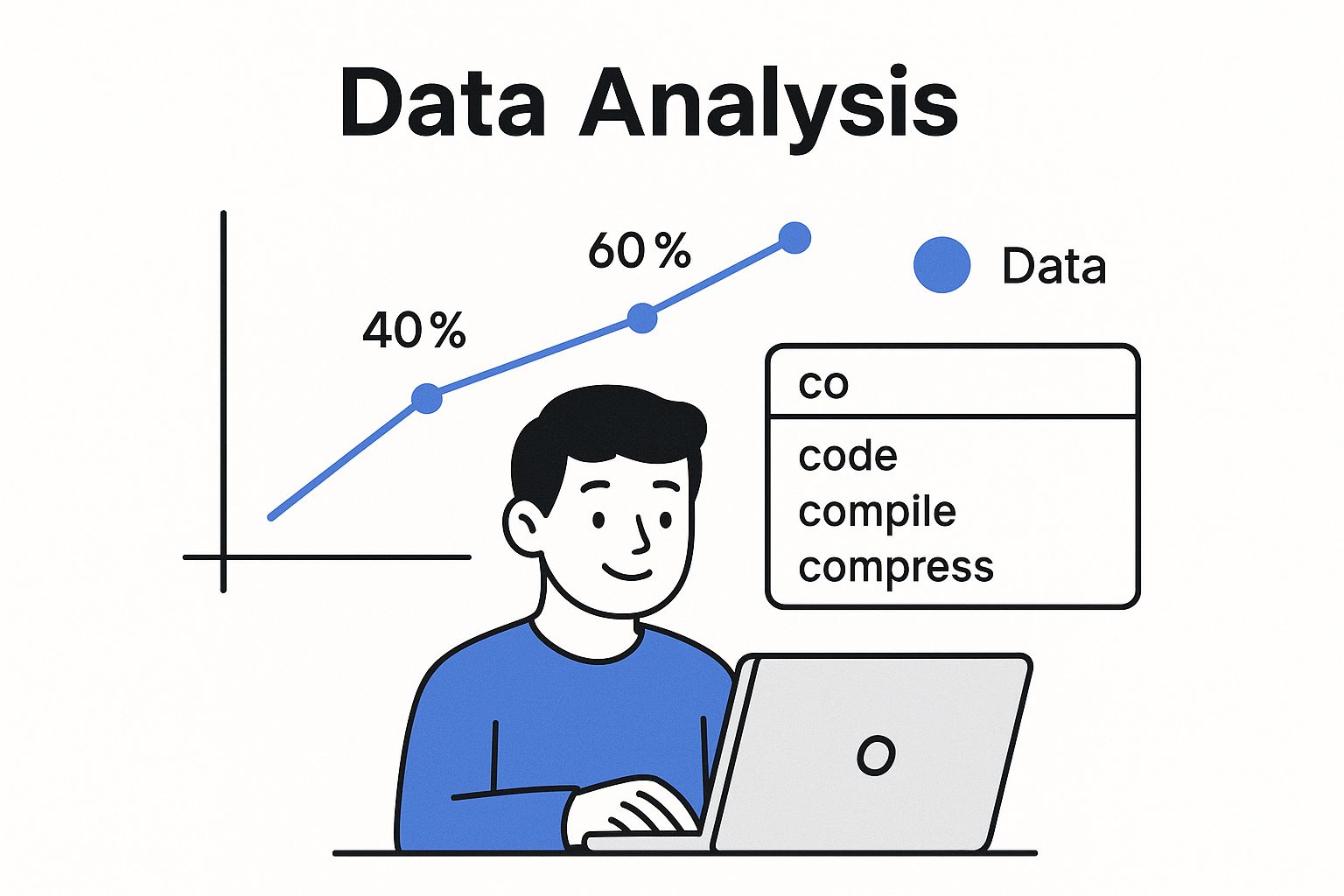

The impact is already huge. By 2024, AI tools were estimated to generate roughly 41% of all code worldwide, or about 256 billion lines, a number that speaks volumes about how rapidly they have been adopted. You can find more stats on AI-generated code over at elitebrains.com.

This integration lets developers hand off the tedious, repetitive work. Their role changes. Instead of manually writing boilerplate code or hunting down basic syntax, they can think at a higher, more strategic level. The job evolves from being a coder to being an architect who directs the AI to build better software, faster.

An LLM for code acts as a force multiplier, automating the mundane aspects of programming. This frees up developers' cognitive energy for what truly matters: creative problem-solving, system design, and innovation.

Ultimately, using an LLM for code is about augmenting human intelligence, not replacing it. It’s a partnership where your expertise and creativity are amplified by the speed and knowledge of a powerful AI. The result? More efficient workflows and more sophisticated software.

How AI Learns the Language of Code

So, how does an AI actually write functional Python or figure out what’s wrong with a messy block of JavaScript? It’s a lot like how we learn a new spoken language. An LLM for code doesn't just have a giant database of answers; it genuinely learns the patterns, the structure, and the logic that make code work.

It all starts with the absolute basics. Just as a language student first learns the alphabet and simple words, an LLM breaks code down into small units called tokens. A token might be a keyword like function, an operator, a semicolon, or a fragment of a longer variable name, since unusual identifiers are often split into several tokens.
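Many tokenizers learn their vocabulary of subword pieces from data rather than using a language's own lexer. To make that concrete, here is a toy byte-pair encoding (BPE) sketch: it starts from single characters and repeatedly merges the most frequent adjacent pair. Production tokenizers work on bytes, train on enormous corpora, and differ in many details, so treat this only as an illustration; the names `learn_bpe`, `apply_merge`, and `encode` are hypothetical.

```python
from collections import Counter


def apply_merge(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return out


def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a whitespace-separated corpus."""
    # Each word starts as a tuple of single characters, weighted by its frequency.
    words = Counter(tuple(word) for word in corpus.split())
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs across the whole vocabulary.
        pairs = Counter()
        for symbols, freq in words.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single token
        best = pairs.most_common(1)[0][0]
        merges.append(best)
        # Rebuild the vocabulary with the new merge applied.
        merged = Counter()
        for symbols, freq in words.items():
            merged[tuple(apply_merge(list(symbols), best))] += freq
        words = merged
    return merges


def encode(word, merges):
    """Tokenize a word by replaying the learned merges in order."""
    symbols = list(word)
    for pair in merges:
        symbols = apply_merge(symbols, pair)
    return symbols


merges = learn_bpe("return return return", num_merges=10)
print(encode("return", merges))  # ['return']
print(encode("retina", merges))  # ['ret', 'i', 'n', 'a']
```

The familiar word becomes a single token, while the unseen word is split into a learned piece plus single characters, which is why a long or unusual identifier can cost several tokens.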

From there, the AI starts to learn grammar—or in the coding world, syntax. It analyzes how all those tokens are supposed to fit together in a specific programming language. It learns that a for loop in Python looks different from one in JavaScript, and it internalizes the rules that make a piece of code valid and ready to run.

The World's Largest Code Library

The final, and most important, part of its education is reading. The AI is trained on an unbelievable amount of code, literally billions of lines from public repositories like GitHub. By churning through this massive dataset, the LLM learns common patterns, popular idioms, and the best practices used in countless real-world projects.

This massive exposure gives the AI an incredible knack for prediction. Think about how your phone’s keyboard suggests the next word in your text message. An LLM for code does the same thing, but for code—it predicts the next logical token. That predictive power is exactly what lets it complete lines of code, write entire functions from scratch, and even suggest fixes for bugs. If you want to get into the nuts and bolts of the technology, our article comparing large language models vs generative AI is a great place to start.
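The phone-keyboard analogy can be sketched with a bigram model that simply counts which token tends to follow which, then greedily predicts the most frequent successor. This is a deliberate simplification: real code LLMs use neural networks that condition on far more than the previous token, and the names `train_bigrams`, `predict_next`, and `complete` are hypothetical. The core loop of "predict the next token, append it, repeat" is the same idea.

```python
from collections import Counter, defaultdict


def train_bigrams(snippets):
    """Count, for each token, which tokens follow it in the training snippets."""
    follows = defaultdict(Counter)
    for snippet in snippets:
        tokens = snippet.split()  # toy tokenizer: whitespace-separated tokens
        for prev, nxt in zip(tokens, tokens[1:]):
            follows[prev][nxt] += 1
    return follows


def predict_next(follows, token):
    """Return the most frequent successor of `token`, or None if it was never seen."""
    if token not in follows:
        return None
    return follows[token].most_common(1)[0][0]


def complete(follows, prefix, max_tokens=10):
    """Greedily extend `prefix` one predicted token at a time."""
    tokens = prefix.split()
    for _ in range(max_tokens):
        nxt = predict_next(follows, tokens[-1])
        if nxt is None:
            break  # nothing ever followed this token in training
        tokens.append(nxt)
    return " ".join(tokens)


training = [
    "for i in range ( n ) :",
    "for j in range ( 10 ) :",
    "for x in items :",
    "if x in seen :",
]
model = train_bigrams(training)
print(predict_next(model, "in"))   # range
print(complete(model, "for i"))    # for i in range ( n ) :
```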

Adapting to Your Project

This training isn't just about general programming knowledge. The really powerful models can adapt to the specific context of your project. Two key ideas make this possible:

- Context Window: You can think of this as the AI's short-term memory. It's the amount of code, comments, and conversation history the model can look at all at once to understand what you're trying to do. A bigger context window means it can see the bigger picture of your project, not just the single file you have open.

- Fine-Tuning: This is a more specialized training process where a model is fed a specific dataset, like your company's private codebase. Fine-tuning teaches the LLM your team's unique coding style, your internal conventions, and how your proprietary libraries work. This makes its suggestions feel like they came from a seasoned member of your own team.
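A rough sketch of how a tool might decide what fits into a context window: rank candidate files by relevance and add them until a token budget runs out. Everything here is an assumption for illustration. The four-characters-per-token estimate is only a common rule of thumb (a real tool would use the model's own tokenizer), the relevance scores are supplied by the caller rather than computed, and `Snippet` and `pack_context` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Snippet:
    path: str
    text: str
    relevance: float  # higher = more relevant to the current task (caller-supplied)


def estimate_tokens(text: str) -> int:
    """Crude estimate: roughly four characters per token (an assumption, not a real tokenizer)."""
    return max(1, len(text) // 4)


def pack_context(snippets: List[Snippet], budget: int) -> List[str]:
    """Greedily select the most relevant snippets that fit within `budget` tokens."""
    chosen, used = [], 0
    for snip in sorted(snippets, key=lambda s: s.relevance, reverse=True):
        cost = estimate_tokens(snip.text)
        if used + cost <= budget:
            chosen.append(snip.path)
            used += cost
    return chosen


files = [
    Snippet("models/user.py", "x" * 400, relevance=0.9),   # ~100 tokens
    Snippet("README.md", "x" * 2000, relevance=0.2),       # ~500 tokens
    Snippet("api/upload.py", "x" * 800, relevance=0.8),    # ~200 tokens
]
print(pack_context(files, budget=350))  # ['models/user.py', 'api/upload.py']
```

A bigger context window simply raises `budget`, so more of the project makes the cut and the model sees more of the bigger picture.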

These advanced techniques have truly changed the game. Models can now generate executable code directly from natural language descriptions, thanks to improvements like fine-tuning, expanded context windows, and adding domain-specific knowledge to their training. You can explore the technical details in this recent research paper.
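As a hedged illustration of how natural-language-to-code training pairs might be harvested from an existing Python codebase for fine-tuning, the sketch below pairs each documented function's docstring (the description) with the function's source (the target). It uses only the standard library's `ast` and `json` modules. The `prompt`/`completion` field names are a generic assumption; fine-tuning providers each define their own required schema, so check their documentation before using anything like this.

```python
import ast
import json
from typing import Dict, List


def docstring_pairs(source: str) -> List[Dict[str, str]]:
    """Pair each documented function's docstring with the function's source code."""
    tree = ast.parse(source)
    records = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            doc = ast.get_docstring(node)
            if not doc:
                continue  # undocumented functions give us no natural-language description
            records.append({
                "prompt": f"Write a Python function named {node.name}. {doc}",
                "completion": ast.get_source_segment(source, node),
            })
    return records


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize records as JSON Lines, one object per line."""
    return "\n".join(json.dumps(record) for record in records)


code = '''
def slugify(title):
    """Lowercase a title and replace spaces with hyphens."""
    return title.lower().replace(" ", "-")

def _helper(x):
    return x * 2
'''
print(to_jsonl(docstring_pairs(code)))
```

Only `slugify` produces a record, because `_helper` has no docstring to serve as the description.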

By combining this incredibly broad training with a sharp focus on the details of a specific project, an LLM for code becomes a genuinely helpful partner in the development process.

Choosing Your AI Coding Assistant

With so many AI coding assistants popping up, picking the right one can feel a bit overwhelming. The secret is to cut through the hype and zero in on what actually matters in your day-to-day work. Think of an LLM for code as a new teammate—the best fit depends entirely on your project's specific needs.

Whether you're flying solo on a personal project or working within a large enterprise team, the decision really boils down to a few key things. You need to consider its raw coding accuracy, how well it can grasp your project's context, its integration with your favorite tools, and, of course, the price tag.

Developer surveys show a clear trend: more and more of us are leaning on AI for everyday tasks like generating code and getting smart autocompletions. In Stack Overflow's annual Developer Survey, 70% of respondents in 2023 said they were using or planning to use AI tools in their development process, and that share rose to 76% in 2024.

This shift really highlights how these models are becoming true pair-programming partners, helping us build faster and better.

The Top Contenders

A few big names dominate the conversation, each bringing something different to the table. You've got the high-performance proprietary models from the tech giants on one side and the flexible, open-source alternatives on the other.

- OpenAI's GPT-4: Often seen as the gold standard, GPT-4 is a powerhouse for both general reasoning and code generation. It’s known for its rock-solid performance across tons of programming languages and its knack for untangling complex problems.

- Google's Gemini 1.5 Pro: A serious competitor, Gemini's biggest claim to fame is its massive context window. It's fantastic at making sense of huge, multi-file codebases where understanding the bigger picture is critical.

- Anthropic's Claude 3: Also known for its enormous context window, Claude has built a reputation for its powerful reasoning abilities. It's a go-to for developers who need an AI that can essentially "read" and digest an entire project at once.

- Meta's Llama 3: As the leading open-source family of models, Llama gives you the ultimate freedom. You can host it yourself and fine-tune it on your own data, which is a huge win for privacy and customization.

A Head-to-Head Comparison

To really get a feel for the differences, it helps to see these models stacked up against each other. The field is moving incredibly fast, with new versions constantly pushing the limits of what these tools can do.

Below is a quick comparison of some of the top models available right now. This table helps break down their performance on a common benchmark, how much information they can handle at once (context window), and what makes each one stand out.

Top LLM for Code Comparison

| Model | Developer | HumanEval Score (Approx.) | Max Context Window | Key Strengths |
|---|---|---|---|---|
| GPT-4o | OpenAI | 90.2% | 128,000 tokens | Top-tier reasoning, great all-around performance. |
| Gemini 1.5 Pro | Google | 84.9% | 1,000,000+ tokens | Massive context, ideal for large codebase analysis. |
| Claude 3 Opus | Anthropic | 84.9% | 200,000 tokens | Strong reasoning, great for complex documentation. |
| Llama 3 70B | Meta | 81.7% | 8,192 tokens | Best-in-class open-source, highly customizable. |

Note: HumanEval scores are from published reports and can vary. For the latest numbers, check out resources that track current LLM benchmarks.
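HumanEval, introduced with OpenAI's Codex work, is a set of 164 hand-written Python problems checked by unit tests, and results are usually reported as pass@k: the probability that at least one of k generated samples passes. The estimator commonly used for it computes, from n samples of which c pass, pass@k = 1 - C(n - c, k) / C(n, k). A minimal Python version is below; it does not tell you which k or sampling settings each vendor used for the figures in the table, and `benchmark_score` is a hypothetical helper.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples generated, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset must contain at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: List[Tuple[int, int]], k: int) -> float:
    """Average pass@k over all problems, given (n, c) for each problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Three hypothetical problems, 10 samples each, with 10, 3 and 0 passing samples.
print(benchmark_score([(10, 10), (10, 3), (10, 0)], k=1))  # about 0.433
```

For k = 1 the formula reduces to c / n, the plain fraction of samples that pass.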

As you can see, the "best" model isn't a one-size-fits-all answer. A freelance developer might gravitate toward the flexibility and absence of licensing fees of an open-source model like Llama 3, though self-hosting still costs compute. On the other hand, a large company might need the absolute peak performance and security assurances that come with a proprietary tool like Gemini or GPT-4.

Ultimately, the right choice comes down to balancing performance, features, and cost. The best advice? Try a couple of them out. See which one clicks with your personal coding style and the demands of your projects.

Unlocking Your LLM's Full Potential

Thinking an LLM for code is just for autocompleting lines is like thinking a smartphone is only for making calls. You're missing the whole point. The real power comes from the applications that go way beyond simple suggestions. These tools are becoming genuine force multipliers across the entire development lifecycle.

This is about handing off the grunt work—the tedious, repetitive tasks that eat up your day—so you can focus on what you do best: creative problem-solving and building incredible software. You shift from being a manual coder to a high-level architect who guides an AI to execute a vision.

Supercharge Your Daily Workflow

Let's get practical. A code-focused LLM isn't just a helper; it's like having a team of specialists on call. Imagine pasting a buggy function into your AI assistant and getting back a corrected version just moments later, complete with a clear explanation of what went wrong and how the fix works.

That feature alone can slash your debugging time. But it's really just the tip of the iceberg. These models can touch nearly every part of the software creation process.

Here are a few game-changing ways to use them:

- Automated Debugging: Before you set breakpoints and step through code line by line, try giving the model your error logs and the problematic code snippet. It can analyze the context, point to a likely root cause, and suggest a specific fix, sometimes catching subtle logic errors a human might overlook.

- Cross-Language Translation: Need to convert a Python data processing script into JavaScript for a new web app? A task that used to take a full day of painstaking manual rewriting can now be drafted in minutes. The LLM handles the syntax, suggests equivalent libraries, and accounts for many idiomatic differences between languages, giving you a massive head start.

- Documentation Generation: We all know writing clear, thorough documentation is essential, but it’s often the first thing to get skipped under pressure. You can feed a function or an entire class to an LLM and ask it to generate detailed docstrings, explanations for each parameter, and even practical usage examples. This keeps your codebase clean, maintainable, and easy for others to jump into.
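For automated debugging, much of the value comes from handing the model the right evidence. A minimal sketch, assuming you paste the result into whatever assistant you use: the hypothetical helper `build_debug_prompt` bundles the intended behavior, the code, and the full traceback (formatted with Python's standard `traceback` module) into a single prompt.

```python
import traceback


def build_debug_prompt(code: str, exc: BaseException, goal: str) -> str:
    """Combine the intended behavior, the failing code, and its traceback into one prompt."""
    # format_exception(type, value, tb) works on all Python 3 versions.
    tb = "".join(traceback.format_exception(type(exc), exc, exc.__traceback__))
    return (
        f"Intended behavior: {goal}\n\n"
        f"--- CODE ---\n{code}\n"
        f"--- TRACEBACK ---\n{tb}\n"
        "Explain the root cause, then propose a minimal fix."
    )


buggy = "def average(values):\n    return sum(values) / len(values)\n"
namespace = {}
exec(buggy, namespace)  # load the snippet so the failure can be reproduced

try:
    namespace["average"]([])
except ZeroDivisionError as exc:
    prompt = build_debug_prompt(buggy, exc, "average([]) should return 0.0")
    print(prompt)
```

Stating the intended behavior matters: the traceback shows what broke, but only you know what "correct" means here.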

Think of an LLM as a tireless pair programmer. It’s always ready to tackle complex refactoring, write a suite of robust unit tests, or even help you brainstorm different architectural patterns. When you offload these tasks, you free up your own mental energy for real innovation.

Of course, it’s not magic. It's crucial to remember that these models can sometimes generate plausible-sounding code that is subtly—or completely—wrong. While incredibly powerful, they aren't perfect. Learning how to reduce hallucinations in LLM outputs is a key skill for using them effectively and safely.
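One cheap first line of defense is to check a draft before reading it closely: does it parse, and does every module it imports actually exist in your environment? Invented package names are one known way hallucinations show up. The sketch below uses only the standard library's `ast` and `importlib.util.find_spec`; the name `precheck` is hypothetical, and passing it proves very little about correctness. It only surfaces the most obvious problems early, before human review and testing.

```python
import ast
import importlib.util
from typing import List


def precheck(draft: str) -> List[str]:
    """Return problems found in an LLM-generated Python draft (an empty list means none found)."""
    try:
        tree = ast.parse(draft)
    except SyntaxError as exc:
        return [f"syntax error on line {exc.lineno}: {exc.msg}"]

    problems = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            names = [node.module]  # relative imports (level > 0) are skipped
        else:
            continue
        for name in names:
            top_level = name.split(".")[0]  # check only the top-level package
            if importlib.util.find_spec(top_level) is None:
                problems.append(f"module not found: {top_level}")
    return problems


draft = "import json\nimport quickjson_magic_xyz\n\ndata = json.loads('{}')\n"
print(precheck(draft))  # ['module not found: quickjson_magic_xyz']
```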

By weaving these advanced capabilities into your daily routine, you’re not just coding faster. You’re building better, higher-quality software more efficiently and changing your entire development process from the ground up.

Working Smarter with Your AI Partner

To really get value out of an LLM for code, you can't just toss it a problem and hope for the best. You need a smart workflow. It's helpful to think of these tools less like a magic box and more like a junior developer—one who types incredibly fast but needs clear direction and a watchful eye.

The entire partnership hinges on the quality of your prompts. A lazy request like "write a function to handle user uploads" is going to get you a generic, probably useless, piece of code. You have to feed the AI specific, context-rich instructions to guide it toward the solution you actually have in mind.

That means getting explicit with your requirements. Spell out the programming language. List the function's parameters and what types they should be. Describe exactly what you expect the function to return. If you need it to use a particular library or follow a certain error-handling pattern, you have to say so upfront.
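One way to make that explicitness a habit is to fill in a structured template instead of typing free-form requests. In the hypothetical sketch below, the fields of `CodeRequest` are simply the checklist from the paragraph above turned into code; the exact wording of the rendered prompt is only an example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CodeRequest:
    language: str
    task: str
    parameters: List[str]  # e.g. "data: bytes - raw file contents"
    returns: str
    libraries: List[str] = field(default_factory=list)
    error_handling: str = ""

    def render(self) -> str:
        """Turn the structured request into an explicit, context-rich prompt."""
        lines = [
            f"Language: {self.language}",
            f"Task: {self.task}",
            "Parameters:",
            *[f"  - {p}" for p in self.parameters],
            f"Returns: {self.returns}",
        ]
        if self.libraries:
            lines.append("Use these libraries: " + ", ".join(self.libraries))
        if self.error_handling:
            lines.append(f"Error handling: {self.error_handling}")
        return "\n".join(lines)


request = CodeRequest(
    language="Python 3.11",
    task="Validate and store a user-uploaded image file.",
    parameters=["data: bytes - raw file contents", "max_bytes: int - size limit"],
    returns="str - the storage key of the saved file",
    libraries=["Pillow"],
    error_handling="raise ValueError for files over max_bytes or non-image data",
)
print(request.render())
```

Compared with "write a function to handle user uploads," the rendered prompt leaves the model far less to guess.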

Treat AI Code as a First Draft

Here's the most important rule of thumb when using a code-generating LLM: never trust, always verify. Think of the output as a first draft, not a finished product. It's a fantastic starting point, but you're still the senior developer in the room, and it's your job to review, test, and polish it.

You’ve always been responsible for what you merge to main. You were five years ago, and you will be tomorrow, whether or not you use an LLM. If you build something with an LLM that people will depend on, read the code.

This human oversight isn't optional. Your experience is what catches subtle logic errors, ensures the new code fits your project's architecture, and confirms it doesn't open up any security holes. The AI gives you speed; you provide the critical judgment and quality control.

Master the Art of Pair Programming

The best way to approach this is to treat your sessions with the LLM like pair programming. You're the navigator, setting the high-level strategy and providing the specific instructions. The AI is the driver, handling the grunt work of typing and implementing the details.

This back-and-forth works best when you keep it iterative:

- Give a clear, chunked task: Don't ask it to build a whole feature at once. Break the problem down into a small, manageable piece, like writing a single function or building one UI component.

- Provide relevant context: Paste in related code snippets, data structures, or API documentation right in your prompt. Give the LLM the full picture to work with.

- Review and refine: Look over the code it generates. If something isn't quite right, give it specific feedback and ask for a revision: "That's good, but can you refactor it to use a switch statement instead?"

- Test rigorously: Once you have a draft you're happy with, run your tests. If any fail, copy the error message and feed it back to the LLM. You'll be surprised how often it can debug its own mistakes.
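The generate, test, and feed-back-the-errors loop from this list can be sketched as follows. Assumptions: `generate` stands in for whatever call you make to your assistant (here a fake function so the example runs offline), tests are plain assert statements, and drafts run through `exec`, which you should only do inside a sandbox or disposable environment because generated code is untrusted. `run_tests`, `refine`, and `fake_generate` are hypothetical names.

```python
from typing import Callable, List, Optional


def run_tests(source: str, tests: List[str]) -> List[str]:
    """Execute a draft, then each test statement; return a list of failure messages."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # WARNING: runs untrusted code; sandbox this in practice
    except Exception as exc:
        return [f"draft failed to load: {exc!r}"]
    failures = []
    for test in tests:
        try:
            exec(test, namespace)
        except AssertionError:
            failures.append(f"failed: {test}")
        except Exception as exc:
            failures.append(f"error in {test}: {exc!r}")
    return failures


def refine(task: str, tests: List[str],
           generate: Callable[[str, str], str], max_rounds: int = 3) -> Optional[str]:
    """Ask for a draft, test it, and feed failures back until the tests pass."""
    feedback = ""
    for _ in range(max_rounds):
        draft = generate(task, feedback)
        failures = run_tests(draft, tests)
        if not failures:
            return draft
        feedback = "\n".join(failures)  # becomes part of the next request
    return None  # give up and hand the problem back to the human


def fake_generate(task: str, feedback: str) -> str:
    """Stand-in for an LLM call: the first draft is buggy, the revision fixes it."""
    if not feedback:
        return "def clamp(x, lo, hi):\n    return max(lo, x)\n"
    return "def clamp(x, lo, hi):\n    return max(lo, min(x, hi))\n"


tests = ["assert clamp(5, 0, 3) == 3", "assert clamp(-1, 0, 3) == 0"]
print(refine("Write clamp(x, lo, hi)", tests, fake_generate))
```

Capping the loop with `max_rounds` keeps a stuck model from cycling forever; when it returns `None`, the problem comes back to you.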

This iterative loop turns a simple code generator into a genuine collaborator. It can help you brainstorm, build, and debug much faster than you could alone. The secret is to stay in charge, guiding the AI with your expertise every step of the way.

The Evolving Role of the Modern Developer

With an LLM for code becoming a standard part of the developer's toolkit, the conversation isn't about replacement—it's about evolution. We're not looking at a future of humans versus machines, but a powerful new partnership. A developer's value is quickly moving beyond their typing speed or their ability to memorize obscure syntax.

Instead, the modern developer is becoming more of an architect and an AI collaborator. Their focus is shifting from the low-level "how" to the high-level "why." This means they can spend far more time on the uniquely human skills that AI simply can't touch.

The most crucial tasks will be designing robust system architectures, solving complex business problems, and guiding powerful AI assistants to execute a clear vision. It’s a transition from builder to director.

Augmentation Over Automation

This shift is incredibly empowering. When you can hand off the repetitive, time-consuming tasks—like writing boilerplate code, generating unit tests, or drafting initial documentation—you free up valuable mental bandwidth for what really matters: innovation.

Developers can then tackle bigger, more interesting challenges and build more sophisticated applications in a fraction of the time.

This human-AI collaboration is already unlocking new possibilities. Developers who learn to work with these tools effectively will find their productivity skyrocketing, allowing them to bring ambitious ideas to life with incredible speed. For a deeper dive into what's on the horizon, check out our guide on exploring the future of AI code writers.

The core idea is simple. The rise of the coding LLM is augmenting our abilities, not automating us out of a job. It's a chance to elevate the craft of software engineering, focusing our creativity and critical thinking on building the next wave of technology with AI as an indispensable partner.

Common Questions About Using LLMs for Code

Whenever developers start bringing an LLM for code into their daily routine, the same questions always seem to pop up. Getting a handle on the answers is key to making these tools work for you, not against you.

Let's get right to it and address the big ones.

The elephant in the room is always job security. Will AI coders put us all out of a job? The short answer is no, but our jobs are changing. Think of these models less as replacements and more as hyper-efficient junior partners. They excel at the repetitive, boilerplate stuff that bogs us down.

This shift lets you spend more time on what actually matters: architecting complex systems, untangling tricky business logic, and creative problem-solving. It’s the kind of work that still requires a human brain. The role is evolving, not disappearing. We're becoming AI collaborators.

Who Owns the Code? And Can You Even Trust It?

Two other huge concerns are ownership and reliability. If an AI writes the code, whose is it? And is it good enough to ship?

Ownership is a tricky one, and it all comes down to the fine print. The policy depends entirely on the tool you're using, so you absolutely have to read the terms of service. With most public tools, you generally own the output. But if you're working on sensitive or proprietary code, you’ll want to look at enterprise-level or self-hosted models that offer clear-cut ownership and privacy.

As for reliability? Never, ever blindly trust AI-generated code.

It's best to treat anything an LLM spits out as a first draft from a talented but inexperienced new hire. It might look perfect, but it still needs a senior developer—you—to review it, test it, and run a security analysis before it goes anywhere near production. Your expertise is the final, non-negotiable quality check.

Ready to organize and improve your own coding prompts? Join the community at Promptaa and start building a powerful, personal library of AI instructions. Get started for free at https://promptaa.com.