Large Language Models vs Generative AI in 2024

The simplest way to put it is this: LLMs are a type of generative AI that works primarily with text, while generative AI is the bigger picture. It’s the broad category for any AI that creates new content, whether that’s images, music, code, or video.

Think of it like this: Generative AI is the entire artist's studio, full of different tools for painting, sculpting, and composing. An LLM is just one of those tools—a very powerful, specialized pen for writing.

Understanding Generative AI and LLMs

People throw these terms around interchangeably, but knowing the difference is key to picking the right tool for the job. Generative AI is the whole field of artificial intelligence focused on creating something from scratch. It learns the patterns in a dataset and then uses that knowledge to generate completely new, original outputs.

Large Language Models (LLMs) are one of the most visible and powerful applications of generative AI today. Their entire world is language. By training on enormous amounts of text and code, models like OpenAI's GPT series have become incredibly good at understanding, summarizing, translating, and generating text that feels like it was written by a person.

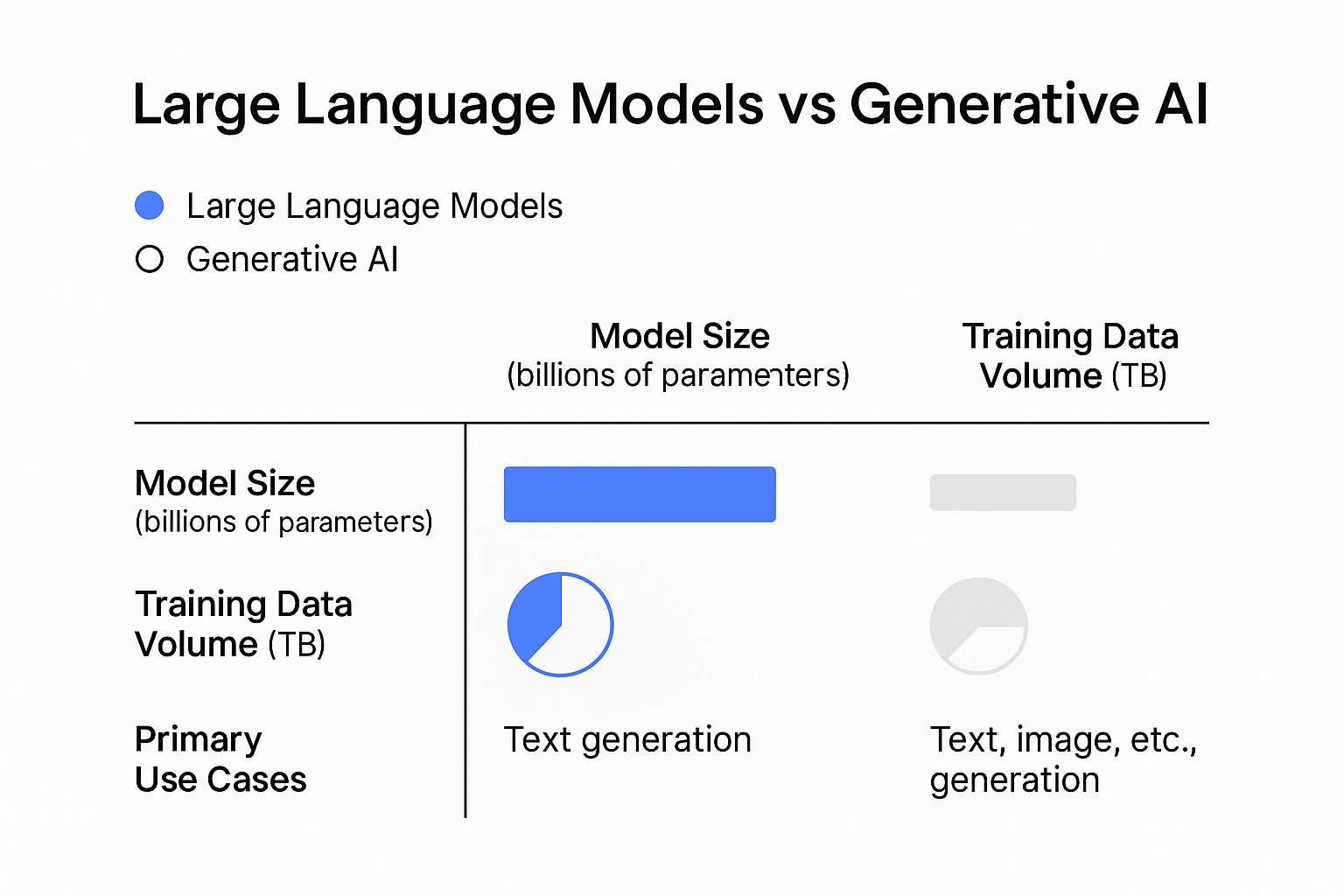

The image below gives you a sense of how they stack up in terms of model size, training data, and what they're typically used for.

As you can see, even though LLMs require massive amounts of data and computing power, they are purpose-built for language tasks. This is a much narrower focus compared to the wide-open, multi-modal world of generative AI.

Key Foundational Differences

To really get it, you have to see their core functions side-by-side. The two work together in many modern applications, but they aren't the same thing. An LLM might power a chatbot, but a different generative AI model is needed to create a video or a piece of music. For a closer look at how businesses are integrating these tools, you can explore the practical impact on Appian.com.

This table breaks down the core distinctions in a simple format.

Quick Comparison: Generative AI vs. Large Language Models

To help clarify where each technology fits, here’s a quick rundown of their primary attributes and roles.

| Attribute | Generative AI | Large Language Models (LLMs) |
|---|---|---|
| Primary Scope | A broad field of AI that creates any new content (images, audio, text, etc.). | A specific subset of generative AI focused primarily on text. |
| Common Outputs | Images, music, synthetic data, 3D models, code, and text. | Essays, emails, summaries, translations, conversations, and code. |
| Core Technology | Includes LLMs, GANs, VAEs, and Diffusion Models. | Almost always built on the Transformer architecture. |

This table makes it clear that LLMs are a specialized tool within a much larger toolbox.

The easiest way to remember this is that all LLMs are a form of generative AI, but not all generative AI is an LLM. This simple hierarchy is everything. An LLM can't paint you a picture, but another type of generative AI model definitely can. Knowing this is the first step in picking the right technology for what you're trying to accomplish.

Core Architectural and Functional Differences

To really get the difference between large language models and generative AI, you have to look under the hood at how they're built. The core architecture dictates how a model learns, how it creates, and what it is ultimately capable of. They aren’t just different in what they do; they are fundamentally different in their design.

Large Language Models almost always run on a specific framework called the transformer architecture. First introduced in 2017, this design changed how machines handle language by relying entirely on a mechanism known as self-attention instead of processing words strictly one after another.

Self-attention lets an LLM weigh how relevant every other word in a passage is to the word it is currently processing, regardless of how far apart they are. For instance, in "The cat, which had chased the mouse all day, was finally tired," the model can learn that "was finally tired" describes the "cat," not the "mouse." That contextual awareness is the magic behind generating text that actually makes sense.
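The idea can be sketched in a few lines of Python. This is a minimal, assumption-heavy version of scaled dot-product self-attention: it skips the learned query/key/value projections, multiple attention heads, and every other layer of a real transformer, and the 2-D word vectors are hand-picked, hypothetical values chosen so that "tired" resembles "cat". Each word scores every word in the sequence, softmax turns the scores into weights, and each output mixes all the words according to those weights:

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention where queries, keys, and values
    are all the raw input vectors (no learned projection matrices)."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # Score this word against every word in the sequence, regardless of distance.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # The output is the attention-weighted average of all value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy embeddings: "tired" points in nearly the same direction as "cat".
tokens = ["cat", "mouse", "tired"]
vecs = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
_, weights = self_attention(vecs)
for tok, w in zip(tokens, weights[2]):
    # "cat" receives the largest weight from "tired"; "mouse" the smallest.
    print(f"tired -> {tok}: {w:.2f}")
```

In a trained model, the vectors and projections are learned, so these weights emerge from data rather than from hand-picked numbers.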

The Engine of Language LLMs

At its core, an LLM’s primary job is surprisingly straightforward: next-token prediction. Trained on a staggering amount of text from books, articles, and websites, it becomes an expert at estimating which token (a word or word fragment) is most likely to come next in a given sequence.

It isn’t “thinking” like a person. It's performing an incredibly sophisticated probabilistic calculation. This process is what makes LLMs so good at tasks like:

- Content Creation: Churning out articles, emails, or marketing copy by continuously predicting the next logical word.

- Conversational AI: Acting as the brains for chatbots that can understand what you’re asking and give a relevant, contextual reply.

- Text Summarization: Condensing a long document into a short, accurate summary that captures its key points.

This laser focus on sequential text prediction is both the LLM's greatest strength and its biggest limitation. It has an incredible command of language, but it's stuck in that domain.
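To make next-token prediction concrete, here is a deliberately tiny sketch in Python. It is a word-level bigram counter, not a transformer: it tallies which word follows which in a hypothetical three-sentence corpus and then greedily appends the most frequent continuation. Real LLMs work on subword tokens, learn their probabilities with billions of parameters, and often sample rather than always taking the top choice; the function names here are illustrative only.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count which word follows which: a toy stand-in for an LLM's learned probabilities."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def next_word_probs(follows, word):
    """Turn raw follow-up counts into probabilities for the next word."""
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def generate(follows, start, max_words=5):
    """Greedy decoding: repeatedly append the single most likely next word."""
    words = [start]
    for _ in range(max_words):
        counts = follows.get(words[-1])
        if not counts:
            break  # no known continuation for this word
        words.append(counts.most_common(1)[0][0])
    return " ".join(words)

corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))  # "cat" is the most likely word after "the"
print(generate(model, "the", max_words=4))
```

Even at this scale, the output is driven purely by statistics of the training text, which is the same reason a full LLM's fluency does not guarantee factual accuracy.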

The Diverse World of Generative AI Architectures

Generative AI, on the other hand, isn't married to a single architecture. It’s more of a diverse toolbox of models, each one built for a specific kind of creative work. This is why generative AI can produce so much more than just text.

Two of the most well-known non-LLM architectures are Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). These models work on principles that are worlds away from the next-token prediction of an LLM.

A GAN operates through a fascinating "artist and critic" dynamic. One neural network, the generator, creates something new (like an image). A second network, the discriminator, then tries to tell if that creation is real or fake. This back-and-forth competition forces the generator to get better and better, producing incredibly realistic results.

This adversarial process is completely different from an LLM’s predictive method. An LLM builds text one token at a time, while a GAN's generator, sharpened during training by competing against a critical judge, produces a complete output in a single pass.
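For readers who want the formal version, the "artist and critic" game from the original GAN paper (Goodfellow et al., 2014) is usually written as a minimax objective. Here G is the generator, D is the discriminator (outputting the probability that its input is real), x is a real training example, and z is random noise fed to the generator:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to push V up by scoring real data near 1 and generated data near 0; the generator tries to push it down by making D(G(z)) approach 1. Nothing in the objective involves predicting a next token, which is exactly the contrast with an LLM.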

A Tale of Two Models

The best way to see the functional differences is to compare how each model would handle a creative request.

| Task Comparison | Large Language Model (LLM) | Generative AI (GAN) |
|---|---|---|
| Input | A text prompt, like "Write a poem about a lonely robot." | A text prompt, like "Create a photorealistic image of a lonely robot sitting in a field." |
| Core Process | Predicts the most likely sequence of words to create a coherent poem. | A generator, trained in competition with a discriminator, turns the prompt into an image in a single pass. |
| Output | A block of text containing the poem. | A final, high-resolution image file (e.g., JPEG or PNG). |
| Underlying Goal | Linguistic coherence: making sure the language patterns make sense. | Visual realism: matching the statistical properties of the images in its training data. |

This comparison puts the core divergence in sharp focus. An LLM's world is governed by the sequential logic of language and the transformer architecture. The rest of generative AI uses a variety of models—often competitive or reconstructive—to create all sorts of non-textual data, making it a much broader field. Understanding this essential difference is the key to knowing which tool to use for which job.

Comparing Real-World Business Applications

Understanding the technical nuts and bolts of these models is one thing, but the real test is seeing them in action. Knowing when to use a large language model versus a broader generative AI tool is what separates a successful project from a frustrating one. The right choice always comes down to your specific business goal, whether that’s making internal workflows run smoother or launching a truly innovative marketing campaign.

An LLM is your specialist. Think of it as the go-to expert for any task that revolves around understanding, processing, or generating text. Its applications are laser-focused and incredibly good at boosting efficiency and clarifying communication.

On the other hand, the wider field of generative AI is your creative engine. It’s the tool you reach for when you need to create something entirely new that isn’t just words on a page. It can produce visuals, audio, and even complex data, opening up entirely new possibilities in product design and brand strategy.

When to Deploy a Large Language Model

LLMs truly shine in situations that demand a deep understanding of language and context. At their core, they are tools built to automate and augment any workflow that relies on text.

Think of an LLM as a highly skilled digital assistant who can read, write, and summarize information at an unbelievable speed. Its main job is to take over repetitive language-based tasks, freeing up your team to focus on work that requires strategic human insight.

Here are a few classic business cases where an LLM is the perfect fit:

- Automated Customer Support: Powering chatbots that can instantly answer common questions, guide users through troubleshooting, and know exactly when to hand off a conversation to a human agent. This dramatically cuts down on wait times and frees up your support team.

- Internal Knowledge Management: Creating a smart search function for your company’s internal documents or automatically summarizing long meeting transcripts. This means your team can find the information they need in seconds instead of digging through endless files.

- Content and Marketing Copy Generation: Drafting blog posts, social media updates, email newsletters, or product descriptions to get the creative process started. It's a fantastic way to beat writer's block and speed up your content pipeline. For a closer look at how different models tackle this, our comparison of ChatGPT and Claude models (https://promptaa.com/blog/comparison-of-chatgpt-and-claude-models) offers some great insights.

This sharp focus on language is fueling incredible growth. By 2025, an estimated 750 million applications are expected to have LLM technology built in. The global market itself is projected to jump from $1.59 billion in 2023 to a staggering $259.8 billion by 2030, all driven by the need for more sophisticated text processing. You can find more details on this explosive growth in recent LLM market statistics.
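Taking those projections at face value, a quick compound annual growth rate (CAGR) calculation shows how steep they are. This sketch only does the arithmetic on the two quoted figures; it says nothing about whether the forecast itself is realistic:

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start value, an end value, and a span in years."""
    return (end_value / start_value) ** (1 / years) - 1

# Figures quoted above, in billions of USD; 2023 to 2030 is 7 years of growth.
rate = cagr(1.59, 259.8, 2030 - 2023)
print(f"Implied annual growth: {rate:.0%}")
```

An implied rate of roughly 107% means the market would have to more than double every year for seven straight years.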

When Your Project Needs Broader Generative AI

If LLMs are the text specialists, then generative AI is the multidisciplinary artist. You turn to these tools when your goal is to create something from scratch that goes beyond text. These applications are all about ideation, design, and multi-modal creation.

Generative AI is the answer when you need pure imagination combined with visual or auditory output. It doesn't just process what's already there; it synthesizes information into entirely new forms.

Generative AI is the engine for innovation when you need to visualize an idea, create a new sound, or generate a dataset that doesn't exist yet. It breaks free from the constraints of language to build tangible, creative assets from the ground up.

Think about these scenarios where a broader generative AI tool is essential:

- Visual Asset Creation: Generating unique logos, brand imagery, and marketing visuals from a simple text prompt. This lets design teams explore dozens of creative directions in a fraction of the time.

- Product Prototyping and Design: Creating realistic 3D models of new products or architectural concepts. This helps everyone visualize the final product long before a single physical component is made.

- Synthetic Data Generation: Producing large, realistic datasets to train other machine learning models, which is crucial in fields like healthcare or finance where real-world data is either scarce or highly sensitive.

- Video and Media Production: Creating animated storyboards from a script, generating custom background music, or even producing short promotional videos.
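Production synthetic-data tools typically rely on models such as GANs or VAEs, but the underlying idea (learn the statistical shape of real data, then sample brand-new records from it) can be sketched with a deliberately simple stand-in. The Python below fits a mean and standard deviation to each numeric column and draws fresh rows from independent normal distributions. The column names and values are hypothetical, and unlike real generators this toy ignores correlations between columns:

```python
import random
import statistics

def fit_columns(rows):
    """Learn a mean and standard deviation for each numeric column."""
    columns = rows[0].keys()
    return {
        col: (statistics.mean(r[col] for r in rows), statistics.stdev(r[col] for r in rows))
        for col in columns
    }

def sample_rows(params, n, seed=0):
    """Draw n synthetic rows, sampling each column independently from a normal distribution."""
    rng = random.Random(seed)
    return [
        {col: round(rng.gauss(mu, sigma), 1) for col, (mu, sigma) in params.items()}
        for _ in range(n)
    ]

# Hypothetical "real" records, e.g. patient vitals that cannot be shared directly.
real = [
    {"age": 34, "resting_hr": 62},
    {"age": 51, "resting_hr": 70},
    {"age": 45, "resting_hr": 75},
    {"age": 29, "resting_hr": 58},
]
params = fit_columns(real)
synthetic = sample_rows(params, n=3)
print(synthetic)
```

None of the sampled rows is a copy of a real record, yet together they follow roughly the same distribution, which is the property that makes synthetic data useful when the originals are sensitive.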

Making the Right Choice for Your Business Goal

The decision really boils down to one simple question: what do you need the AI to produce?

| Business Goal | Primary Output | Recommended Technology | Example Application |
|---|---|---|---|
| Improve Operational Efficiency | Text | Large Language Model (LLM) | An AI chatbot for instant customer support. |
| Accelerate Creative Workflows | Images, Video | Generative AI | A tool that generates ad visuals from a text prompt. |
| Enhance Data Analysis & Reporting | Text, Code | Large Language Model (LLM) | An assistant that summarizes sales data and writes reports. |
| Innovate Product Design | 3D Models | Generative AI | Software that creates product prototypes from sketches. |

If you map your business objective directly to the required output, the path becomes clear. If the problem is rooted in language, an LLM is your tool. If you need to create something visual, auditory, or entirely new, then you need the expansive toolkit of generative AI.

A Nuanced Look at Strengths and Limitations

To get the most out of any AI project, we have to move beyond a simple pros and cons list and set some realistic expectations. When you put large language models vs generative AI side-by-side, you'll see that each one has unique strengths and very real limitations that are tied directly to how they were built.

An LLM's superpower is its incredible command of language. After being trained on mountains of text, it has a deep grasp of context, nuance, and the subtle ways we communicate. This makes it a fantastic tool for tasks that demand serious language comprehension, like summarizing a dense legal contract or writing marketing copy that actually connects with a specific audience.

But that strength is also its biggest weakness. An LLM is trapped in the world of text it was trained on. It has no direct access to the physical world and no built-in way to check its statements against it, which is why it can confidently state things that are completely wrong. This is the phenomenon we all know as "hallucination."

The LLM's Factual Grounding Problem

The risk of an LLM inventing plausible-sounding nonsense is the single biggest barrier to its adoption in the business world. Since they work by predicting the next most likely word in a sequence, they can easily make up facts, statistics, or sources that are pure fiction. This makes them fundamentally unreliable for any job where factual accuracy is a must-have, at least without some serious guardrails in place.

An LLM’s output is a mirror of its training data, not a window into real-world truth. This is a critical distinction. While it can write beautifully about a topic, it has no built-in fact-checker, which leaves the door wide open for significant errors.

Thankfully, the industry is working hard on this. If you want to dive deeper into the problem, our guide on how to reduce hallucinations in LLMs offers some practical strategies. These fixes are essential for making text-based AI something businesses can truly trust.

Generative AI: The Price of Creativity

Generative AI's main draw is its sheer versatility. Using different architectures like GANs and diffusion models, it can create brand-new images, music, code, and even 3D models from a simple text prompt. This ability to generate non-text assets from scratch opens up a ton of creative possibilities, letting teams visualize new products or marketing campaigns in a matter of minutes.

The trade-off for all this creative power is the massive computational cost. Training a high-quality model to generate images or video takes an enormous amount of processing power and energy, and producing each high-resolution output is computationally heavy as well. Those costs ultimately get passed on to the user, which is why creating a high-resolution image is often more expensive than generating a block of text.

On top of that, keeping quality consistent can be a real headache. A model might generate a perfect image one minute, but a tiny tweak to the prompt could spit out something bizarre or completely unusable. This unpredictability means a human still needs to be in the loop, refining and iterating to get the right result, which adds an extra step to the creative process.

We're seeing a major push toward more accountable AI. Techniques like retrieval-augmented generation (RAG) are becoming standard practice for reducing hallucinations: before answering, the system retrieves relevant passages from verified, external sources and instructs the model to base its response on them. As these tools become more integrated into daily business operations, the focus has shifted from just creating cool things to delivering provably accurate results. You can read more about this shift in generative AI trends on ArtificialIntelligence-News.com.
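The RAG pattern can be sketched in Python as two steps: retrieve the most relevant passages, then wrap them in a prompt that tells the model to answer only from them. This is a simplified sketch under clear assumptions: real systems usually rank passages with embedding-based vector search rather than the word-overlap score used here, the knowledge-base entries are hypothetical, and the final call to an LLM is deliberately left out because it depends on the provider.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, k=2):
    """Rank documents by word overlap with the question (a stand-in for vector search)."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_grounded_prompt(question, documents, k=2):
    """Assemble a prompt that restricts the model to the retrieved passages."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )

# Hypothetical knowledge-base entries.
kb = [
    "Refunds are issued within 14 days of receiving a returned item.",
    "Standard shipping takes 3 to 5 business days.",
    "Gift cards cannot be exchanged for cash.",
]
print(build_grounded_prompt("How many days until refunds are issued?", kb, k=1))
# The resulting prompt would then be sent to an LLM (call not shown).
```

The "say you don't know" instruction matters as much as the retrieval: it gives the model an explicit alternative to inventing an answer when the sources come up empty.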

Situational Strengths and Limitations

To make this more concrete, let's look at how these trade-offs play out in the real world. The right tool always depends on the job at hand.

This table breaks down which technology shines—and where it might stumble—in a few common business scenarios.

| Scenario | Optimal Technology | Key Strength | Potential Limitation |
|---|---|---|---|
| Drafting a Legal Brief | Large Language Model | Deep understanding of complex legal terminology and document structure. | Risk of "hallucinating" case law or misinterpreting critical statutes. |
| Creating Ad Campaign Visuals | Generative AI | Ability to rapidly generate dozens of unique, on-brand images from text. | Inconsistent quality and high computational cost for high-res outputs. |
| Automating Customer FAQs | Large Language Model | Can provide instant, conversational, and context-aware answers to queries. | May provide incorrect information if not grounded in a verified knowledge base. |
| Prototyping a New Product | Generative AI | Can create realistic 3D models and designs to visualize concepts quickly. | Generated designs may not be physically viable without expert human review. |

Ultimately, choosing between an LLM and a broader generative AI tool isn't about picking a "winner." It's about understanding the specific strengths of each and aligning them with your goals. For text-heavy tasks requiring nuance, an LLM is your go-to. For creating new, non-textual assets, generative AI is the clear choice.

How to Choose the Right AI for Your Project

Deciding between a large language model and a broader generative AI tool can feel overwhelming. The best way to cut through the noise is to ask one simple question: What is the final output you need? Your end goal is the single most important factor.

If your project lives and breathes text, an LLM is almost always the right tool for the job. But if you're creating something new and tangible—an image, a piece of music, a 3D model—you'll need the much broader capabilities found across the generative AI landscape. Making the right choice upfront saves you from the headache of a tool-task mismatch down the road.

Define Your Primary Output

First things first, get crystal clear on your deliverable. Are you automating a workflow, creating new assets from scratch, or analyzing existing data? Your answer will immediately guide you toward the right technology.

Let’s break this down into a simple decision-making framework.

You probably need a Large Language Model if your project is about:

- Automating Communication: Think customer service chatbots that handle common questions or an internal helpdesk assistant.

- Summarizing Information: Condensing lengthy reports, academic papers, or meeting notes into quick, digestible summaries.

- Generating Written Content: This is the bread and butter of LLMs—drafting emails, blog posts, marketing copy, or technical documentation.

- Analyzing Text Data: Running sentiment analysis on customer reviews or pulling key themes from a mountain of user feedback.

You're better off with a broader Generative AI tool if your project involves:

- Creating Visuals: Generating brand logos, social media graphics, or custom illustrations for a presentation.

- Product Design and Prototyping: Creating realistic 3D renderings of a new product or architectural mockups.

- Multimedia Production: Composing background music for a video or generating storyboard images directly from a script.

- Synthetic Data Generation: Creating unique datasets to train other machine learning models, especially when real-world data is scarce or sensitive.

This initial sort helps you figure out if you need a language specialist (an LLM) or a multi-talented creator (a generative AI tool).
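The sort above boils down to a lookup on the primary output. Here is a toy Python encoding of it; the category labels are hypothetical shorthand drawn from the two lists, and real projects that mix outputs still need human judgment rather than a lookup table:

```python
# Hypothetical output labels, grouped the same way as the two lists above.
LLM_OUTPUTS = {"text", "summary", "email", "code", "sentiment"}
GENAI_OUTPUTS = {"image", "video", "audio", "music", "3d_model", "synthetic_data"}

def recommend_tool(primary_output):
    """Map a project's primary deliverable to the kind of model the framework suggests."""
    output = primary_output.strip().lower()
    if output in LLM_OUTPUTS:
        return "Large Language Model"
    if output in GENAI_OUTPUTS:
        return "Broader generative AI model"
    return "Unclear: define the primary output first"

for deliverable in ["summary", "Image", "spreadsheet"]:
    print(deliverable, "->", recommend_tool(deliverable))
```

The fallback branch is the useful part: if you cannot name the deliverable, you are not ready to choose a tool yet.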

Assess Your Need for Accuracy vs. Creativity

The next big consideration is the trade-off between factual accuracy and creative freedom. This is really at the heart of the large language models vs generative AI debate, as each technology leans heavily in one direction.

LLMs are incredibly powerful, but they are known to "hallucinate"—that is, they can state incorrect information with absolute confidence. If your project demands rock-solid factual accuracy, like a medical knowledge base or a financial reporting tool, you absolutely must have a human-in-the-loop verification process or use an LLM specifically grounded in a verified dataset.

The catch with using an LLM is that you have to write with extreme clarity to get clear results. Poorly structured prompts lead to vague or unreliable outputs. Success depends on your ability to provide specific, well-defined instructions.

On the other hand, generative AI tools for images or music are built for creative exploration. Their entire purpose is to produce novel outputs that spark new ideas. Factual accuracy isn't the goal; originality and aesthetic appeal are. This makes them perfect for brainstorming, design ideation, and artistic projects where you're exploring what's possible, not just stating what is.

If you need a tool for creative ideation, a generative model is your best bet. If you need a tool to process and generate fact-based text, an LLM is the right choice, but you have to manage it carefully to ensure reliability. Honing your ability to write clear instructions is essential. You can learn more about the different types of prompting and how they impact AI outputs in our detailed guide.
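One practical way to enforce that clarity is to assemble prompts from explicit sections instead of writing them freehand. The Python sketch below uses a hypothetical helper and one common convention (role, task, context, constraints, output format); it is not a required format for any particular model:

```python
def build_prompt(role, task, context, constraints, output_format):
    """Assemble a structured prompt with clearly separated sections."""
    lines = [
        f"You are {role}.",
        f"Task: {task}",
        f"Context: {context}",
        "Constraints:",
        *[f"- {c}" for c in constraints],
        f"Output format: {output_format}",
    ]
    return "\n".join(lines)

# Hypothetical example for a fact-sensitive reporting task.
prompt = build_prompt(
    role="a financial analyst writing for executives",
    task="Summarize the attached quarterly sales figures.",
    context="Figures are in USD and cover Q1 to Q3.",
    constraints=[
        "Use only numbers that appear in the provided data.",
        "If a figure is missing, say so instead of estimating it.",
    ],
    output_format="Three bullet points, each under 25 words.",
)
print(prompt)
```

Keeping prompts in a structure like this also makes them easier to reuse, review, and store in a shared library.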

The Future of LLMs and Generative AI

The next big leap is already here: multi-modal LLMs. These models are designed to understand and generate content across text, images, and audio, effectively blurring the lines between different types of data. This isn't just a small step forward; it’s a new phase in how AI operates.

We're also seeing efficiency and reasoning abilities improve rapidly, by some estimates about 40% per year. This is thanks to smarter model compression techniques and more refined architectures. In practical terms, this means we can expect AI agents to handle increasingly complex, multi-step tasks with far greater reliability.

Key Multi-Modal Advances

So, what's happening under the hood? A few key developments are driving this shift:

- Unified Embeddings: Mapping images and text into a shared embedding space lets models find the contextual links between them, leading to much richer, more relevant outputs.

- Cross-Modal Prompts: You can now describe a scenario using a mix of text, audio, and visuals. The AI gets the full picture, not just one piece of it.

- On-Device Inference: Processing happens locally on the device, which cuts latency, enables real-time responses, and keeps sensitive data from leaving the device.
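The unified-embeddings idea can be illustrated with cosine similarity. In a shared embedding space (for example, the kind produced by CLIP-style image and text encoders), an image and a caption that describe the same thing end up as vectors pointing in similar directions. In the Python sketch below, the 3-D vectors are hypothetical, hand-made values rather than the output of any real model, which would use hundreds of dimensions:

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical embeddings in a shared image-text space.
image_embedding = [0.9, 0.1, 0.3]  # e.g. a photo of a robot in a field
captions = {
    "a lonely robot sitting in a field": [0.8, 0.2, 0.4],
    "a bowl of fruit on a table": [0.1, 0.9, 0.2],
}
best = max(captions, key=lambda c: cosine_similarity(image_embedding, captions[c]))
print(best)
```

Because both modalities live in the same space, the same comparison works in either direction: finding the best caption for an image or the best image for a text query.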

These advances are turning LLMs from text-based specialists into genuinely versatile partners. Imagine an AI that can write a code snippet, annotate a diagram you provide, and then compose a short audio summary of the changes—all in one seamless workflow.

“Multi-modal LLMs are setting the stage for AI that thinks and perceives more like humans,” an industry architect notes.

This is where things get really interesting. AI agents are moving beyond executing single, isolated tasks. They're starting to orchestrate entire processes from start to finish, like automating a full report generation cycle or coordinating a design and deployment workflow without anyone needing to step in manually.

Emerging Opportunities for Automation

For businesses, this opens up a whole new world of operational efficiency. Think about a supply chain team that can auto-generate shipment reports complete with image-based inspections and text summaries.

Here are just a few possibilities:

- Inventory Audits: Using image recognition to count stock and then generating a text summary of the findings.

- Customer Onboarding: Creating an interactive experience that uses chat, voice commands, and visual guides.

- Automated Compliance: Combining document analysis with visual anomaly detection to flag issues instantly.

To get ready, it’s a good idea to start auditing your current workflows. Pinpoint the tasks that could benefit from this kind of AI orchestration. Building out prompt libraries and establishing strong governance frameworks will be essential for deploying these systems securely and at scale.

| Opportunity | Benefit |
|---|---|
| Multi-modal customer support | Higher resolution rates and faster issue triage |
| Automated design iterations | Shorter creative cycles and cost savings |
| Real-time data visualization with narration | Improved decision-making with context |

Preparing for Next-Generation AI

Your old prompting strategies won't be enough. Teams need to learn how to create prompts that weave together different types of input. As AI agents gain more autonomy, putting clear ethical guardrails and governance in place becomes non-negotiable.

Here are some practical steps to take:

- Get your designers, developers, and data scientists talking. Cross-functional collaboration is a must.

- Start training your staff on how to write effective prompts that combine text, image, and audio cues.

- Keep a close eye on performance metrics across all modalities to continuously refine your models.

Using a tool like Promptaa to build a centralized prompt repository can make a huge difference in getting everyone on the same page and accelerating adoption.

Unified prompt management is key to maintaining consistency across AI-driven workflows.

Organizations that get ahead will be those that invest in fine-tuning and transfer learning, allowing them to adapt their models quickly. Start with small pilot projects to identify integration challenges and figure out what it will take to scale. A continuous learning loop, where the agents improve based on real-world feedback, is the only way to go.

Finally, don't forget the fundamentals:

- Evaluate the data privacy risks that come with new multi-modal inputs.

- Define clear KPIs to measure agent performance and user satisfaction.

- Schedule regular audits to ensure everything remains compliant and accurate.

Frequently Asked Questions

It's easy to get tangled up in AI terminology. Let's clear up a few of the most common questions people have when trying to sort out the difference between large language models and generative AI.

Is ChatGPT An LLM Or Generative AI?

It’s both. Think of it this way: at its heart, ChatGPT is powered by a Large Language Model—one of OpenAI's GPT models. That LLM is the engine doing the heavy lifting, understanding language, and figuring out what to say next.

But because the application creates original text, it is, by definition, a form of Generative AI. ChatGPT is a perfect illustration of how these two concepts fit together. It's a generative AI tool that uses an LLM to perform its specific task.

Can Generative AI Work Without An LLM?

Absolutely. It’s a common misconception that all generative AI relies on language models, but that’s not the case at all. Many of the most popular generative tools use completely different architectures to produce anything but text.

For example:

- Image Generators like Stable Diffusion are built on diffusion models, and Midjourney is widely reported to use a similar approach. Earlier image generators often relied on Generative Adversarial Networks (GANs).

- Music Composition Tools use unique models designed specifically to generate harmonies and melodies.

These models are masters of their own craft, creating brand new content without ever touching an LLM. This really highlights just how diverse the generative AI field has become.

The key takeaway is that an LLM is the right tool for generating language. For everything else—from images to synthetic data—other specialized generative AI models are required.

What Is The Main Cost Difference Between The Two?

The biggest cost difference really boils down to output complexity and the raw computing power needed to create it. As a general rule, generating text with an LLM is far less computationally demanding than creating a high-resolution image or a piece of video with a different generative model.

While training any of these massive models is a pricey endeavor, the day-to-day operational cost—what we call the "inference" cost—is where the difference becomes clear. The cost to generate a paragraph of text is typically much lower than producing a detailed 4K image. That’s because visual data is incredibly dense and requires a ton more processing, making multi-modal generative AI applications more expensive to run at scale.
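A back-of-the-envelope comparison of raw data sizes shows why visual output is so much heavier. This Python sketch counts bytes only, under stated assumptions (an uncompressed 8-bit RGB 4K frame, and roughly 600 ASCII characters for a 100-word paragraph). It is not a cost model: many image generators work in a compressed latent space, and LLM costs depend on model size and token count.

```python
# Uncompressed 4K UHD frame: 3840 x 2160 pixels, 3 color channels, 1 byte per channel.
image_bytes = 3840 * 2160 * 3

# Assumption: a paragraph of roughly 100 words is about 600 characters of plain ASCII text.
paragraph_bytes = 600

print(f"4K image: {image_bytes:,} bytes")
print(f"Paragraph: {paragraph_bytes:,} bytes")
print(f"Ratio: about {image_bytes // paragraph_bytes:,}x")
```

Under these assumptions, a single uncompressed 4K frame holds about 41,000 times as much raw data as a paragraph, and a video multiplies that by every frame.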

Ready to organize and optimize your own AI prompts? Promptaa provides a central library to create, share, and enhance your prompts for better results. Start building your collection and master your AI interactions today at https://promptaa.com.