What Is LangSmith? A Modern Developer Guide

LangSmith is a unified platform designed for building, debugging, and monitoring applications powered by Large Language Models (LLMs). The best way to think of it is as the essential command center and flight recorder for your AI projects. It shifts the development process from educated guesswork to a structured, data-driven science.

Why LangSmith Is Your AI Application Command Center

Imagine trying to fly a state-of-the-art jet without any instruments. That's a pretty good analogy for what building with LLMs can feel like. You assemble an application, but its internal logic remains a "black box," where strange outputs and performance hiccups can completely derail a project with no warning. This is the exact problem LangSmith was created to solve.

For anyone building reliable AI, the challenges are very real:

- Debugging is a nightmare. When an AI agent provides a bad answer, figuring out why by tracing the error through a complex chain of model calls and tool interactions is incredibly difficult.

- Performance is often a mystery. Latency, token consumption, and costs can easily spiral out of control if you don't have clear visibility into what's happening under the hood.

- Quality can feel totally subjective. How do you prove that your new prompt is actually better than the last one? Measuring improvements requires systematic evaluation, not just a gut feeling.

Navigating the AI Black Box

LangSmith provides the instrumentation you need to tackle these challenges head-on. It gives you a granular view of every single request, response, and intermediate step your application takes, turning what was once an opaque process into clear, actionable data.

This screenshot from the official LangSmith documentation shows a typical trace view, which details each step in an LLM chain.

This kind of visual breakdown makes it incredibly easy to pinpoint latency bottlenecks, inspect the exact inputs and outputs at each stage, and understand the full lifecycle of a single request.

To give you a better idea of the common pain points and how LangSmith directly addresses them, here’s a quick summary.

Common LLM Development Problems and LangSmith Solutions

This table provides a quick summary of common problems faced when building LLM applications and the corresponding LangSmith features designed to solve them.

| Common LLM Challenge | How LangSmith Helps | Key Feature |
|---|---|---|
| "Why did my agent fail?" | Provides a complete, step-by-step log of every tool call and LLM interaction, making it easy to find the root cause of an error. | Tracing & Debugging |
| "Is this new prompt better?" | Allows you to run prompts against a dataset and compare outputs side-by-side using AI-assisted or custom evaluators. | Testing & Evaluation |
| "Is my app getting slower?" | Tracks latency, token usage, and costs over time, helping you identify performance regressions or expensive queries. | Monitoring & Analytics |
| "How are users reacting to it?" | Gathers user feedback directly within the trace, connecting real-world performance to specific application runs. | Feedback & Annotation |

This level of insight is what separates a prototype from a production-ready AI product.

This structured approach is paying off. The platform has quickly become a go-to tool for LLM observability. In fact, adoption rates among enterprise AI teams shot up by over 150% between 2023 and 2025. According to industry reports from sources like IBM, by mid-2025, more than 1,200 organizations worldwide were actively using LangSmith to monitor and fine-tune their applications.

LangSmith is built on a simple premise: you cannot improve what you cannot measure. By making every part of the LLM application lifecycle observable, it empowers developers to build with confidence.

So, instead of flying blind, you get a complete dashboard showing precisely how your application is performing, where it’s falling short, and what it’s costing to run. This command-center approach is what elevates experimental AI into scalable, trustworthy products that deliver genuine value.

Understanding The Core Pillars Of LangSmith

To really get a feel for what makes LangSmith so powerful, you have to look at its four core components. Each one has a specific job, but they all work in tandem to give you a complete, multi-angle view of how your AI application is behaving. It's a bit like a mechanic's workshop—you have different diagnostic tools, each designed to inspect a different part of the engine.

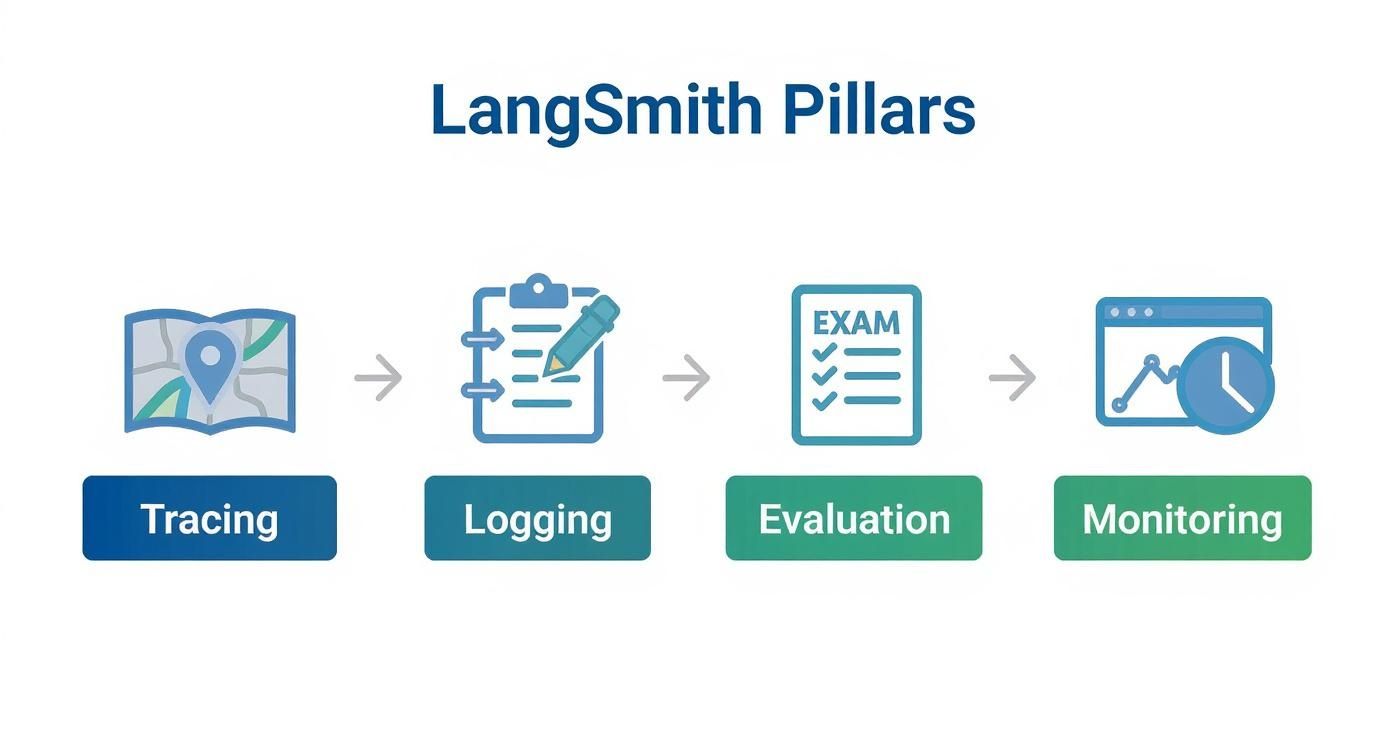

The whole platform is built on four pillars: Tracing, Logging, Evaluation, and Monitoring. These aren't just buzzwords; they're the practical tools that solve the real, everyday headaches AI developers face.

Tracing The Journey Of A Single Request

Think about tracking a package you've ordered online. A trace is like the detailed, step-by-step GPS log for that one package. It shows you every single stop, every transfer, and the exact path it took to get from the warehouse to your doorstep. LangSmith's tracing does the same thing, but for every single call to a large language model or agent interaction in your app.

When a user sends a query, a trace kicks off and captures the entire lifecycle of that request. You can see the prompt that started it all, every tool the AI chose to use, the specific API calls it made, and the final answer it produced. This makes debugging a breeze. If your agent spits out a weird answer, you can just pull up its trace and pinpoint exactly where the logic went off the rails.
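To make the idea of a trace concrete, here is a minimal offline sketch of one request recorded as a tree of steps. The `Run` class, its field names, and the sample values are all made up for illustration; this is not LangSmith's actual schema, only the general shape of what a trace view lets you inspect.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Run:
    """One step in a trace (hypothetical structure, not LangSmith's schema)."""
    name: str
    run_type: str                      # e.g. "chain", "llm", "tool"
    latency_ms: float                  # time spent in this step itself
    error: Optional[str] = None
    children: List["Run"] = field(default_factory=list)


def walk(run, depth=0):
    """Yield (depth, run) for every step, parent before children."""
    yield depth, run
    for child in run.children:
        yield from walk(child, depth + 1)


# A made-up trace for a single user query.
trace = Run("answer_question", "chain", 12.0, children=[
    Run("search_docs", "tool", 840.0),
    Run("draft_answer", "llm", 1310.0),
    Run("format_citations", "tool", 5.0, error="KeyError: 'url'"),
])

# Print the tree the way a trace view would lay it out.
for depth, run in walk(trace):
    status = f"  ERROR: {run.error}" if run.error else ""
    print(f"{'  ' * depth}{run.name} [{run.run_type}] {run.latency_ms:.0f} ms{status}")

# Two questions a trace answers quickly: what was slow, and what failed?
slowest = max((r for _, r in walk(trace)), key=lambda r: r.latency_ms)
failed = [r.name for _, r in walk(trace) if r.error]
```

Here `slowest` points at the LLM call and `failed` names the tool step that raised, which is the kind of pinpointing described above.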

Logging All System Activity

To stick with the delivery analogy, if a trace is the GPS for one package, then logging is the master shipping manifest for the entire fleet of trucks. It’s a complete, chronological record of everything happening across your entire application. While a trace gives you a deep-dive on a single event, logs give you the 30,000-foot view of all events.

This big-picture record is crucial for spotting system-wide patterns. For example, you might see a sudden surge in errors across the board, which tells you it's likely a system issue, not just a one-off bug. Logs are your first line of defense for checking on your application's overall health and identifying broad trends.

Evaluation: Grading Your AI's Performance

This is where LangSmith really shines. Evaluation is basically a standardized test for your AI model, giving you objective, repeatable scores on its performance. It’s like giving your app a final exam to see how well it actually learned the material. You can create datasets of prompts and ideal answers, then run your model against them to measure things like accuracy, relevance, or whether it's sticking to your guidelines.

The whole point of evaluation is to get away from "I think this feels better" and move toward "This is quantifiably better." By running systematic tests, you can prove that a new prompt or a different model actually improved performance—and didn't accidentally break something else.

This pillar is absolutely critical for iterating on your app with confidence. You can run two different prompts side-by-side against a "golden dataset" and see which one consistently gives you better outputs. This data-driven approach takes the guesswork out of the equation and helps you optimize much faster. This process can even be enhanced by understanding how different models process information, and you can learn more about this by reading our guide on what AI embeddings are.
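As a rough sketch of the side-by-side comparison described here, the snippet below scores two prompt variants against a tiny golden dataset. Everything in it is an assumption for illustration: the dataset, the `prompt_v1` and `prompt_v2` stand-ins (which return canned strings instead of calling a model), and the `exact_match` evaluator. LangSmith's own evaluation tooling works differently under the hood; this only shows the underlying idea.

```python
# Hypothetical golden dataset: inputs paired with hand-verified answers.
golden_dataset = [
    {"input": "Refund window?", "expected": "30 days"},
    {"input": "Support hours?", "expected": "9am-5pm"},
    {"input": "Free shipping threshold?", "expected": "$50"},
]


def prompt_v1(question):
    """Stand-in for the app running with the old prompt (canned outputs)."""
    canned = {
        "Refund window?": "30 days",
        "Support hours?": "We are open most of the day.",
        "Free shipping threshold?": "$50",
    }
    return canned[question]


def prompt_v2(question):
    """Stand-in for the app running with the new prompt (canned outputs)."""
    canned = {
        "Refund window?": "30 days",
        "Support hours?": "9am-5pm",
        "Free shipping threshold?": "$50",
    }
    return canned[question]


def exact_match(output, expected):
    """Simplest possible evaluator: 1.0 on a case-insensitive match, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def evaluate(app, dataset, evaluator):
    """Run the app on every example and return the mean evaluator score."""
    scores = [evaluator(app(ex["input"]), ex["expected"]) for ex in dataset]
    return sum(scores) / len(scores)


score_v1 = evaluate(prompt_v1, golden_dataset, exact_match)
score_v2 = evaluate(prompt_v2, golden_dataset, exact_match)
print(f"v1: {score_v1:.2f}  v2: {score_v2:.2f}")
```

Exact match is deliberately crude. In practice you would swap in an evaluator suited to the task, such as a similarity measure or an LLM-based grader, while keeping the same loop.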

Monitoring The Health Of Your Application

Finally, Monitoring is the live dashboard in your control room. It pulls all the data from your traces, logs, and evaluations and turns it into real-time charts and metrics. This is where you keep an eye on all the vital signs of your application, such as:

- Latency: How long are users waiting for an answer?

- Cost: How much are your API calls racking up per user or per day?

- Token Usage: Which parts of your app are the most token-hungry?

- User Feedback: Are users rating the AI's responses as helpful or giving them a thumbs-down?

This high-level view is essential for keeping a healthy app running smoothly in production. It warns you if performance starts to dip, helps you catch cost overruns before they become a real problem, and ties user satisfaction directly to your app's operational health. Put together, these four pillars give you the complete observability you need for any system powered by LLMs.
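The vital signs listed above are, at bottom, aggregations over per-run records. The following sketch assumes a hypothetical list of run dictionaries (the field names and numbers are invented) and rolls them up into the kind of figures a monitoring dashboard would chart.

```python
from collections import defaultdict
from statistics import median

# Made-up run records; a real system would pull these from stored traces.
runs = [
    {"day": "day-1", "latency_ms": 900, "tokens": 1200, "cost_usd": 0.012, "thumbs_up": True},
    {"day": "day-1", "latency_ms": 1500, "tokens": 2100, "cost_usd": 0.021, "thumbs_up": False},
    {"day": "day-2", "latency_ms": 700, "tokens": 800, "cost_usd": 0.008, "thumbs_up": True},
    {"day": "day-2", "latency_ms": 4200, "tokens": 5000, "cost_usd": 0.050, "thumbs_up": None},
]


def summarize(runs):
    """Aggregate latency, token usage, cost per day, and feedback rate."""
    cost_by_day = defaultdict(float)
    for run in runs:
        cost_by_day[run["day"]] += run["cost_usd"]
    # Only runs that actually received feedback count toward the rate.
    rated = [run["thumbs_up"] for run in runs if run["thumbs_up"] is not None]
    return {
        "median_latency_ms": median(run["latency_ms"] for run in runs),
        "total_tokens": sum(run["tokens"] for run in runs),
        "cost_by_day": dict(cost_by_day),
        "thumbs_up_rate": sum(rated) / len(rated) if rated else None,
    }


summary = summarize(runs)

# A simple alert rule: flag any run slower than an (arbitrary) 3-second budget.
slow_runs = [run for run in runs if run["latency_ms"] > 3000]
print(summary)
print(f"{len(slow_runs)} run(s) exceeded the latency budget")
```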

Weaving LangSmith Into Your Development Workflow

Bringing LangSmith into your project isn't just about adding another tool to your stack; it's about adopting a more structured, intentional way to build with LLMs. It gives you a clear roadmap that takes you from a rough idea all the way to a reliable, production-ready application. The whole process is built around a tight, iterative loop: build, test, refine, and repeat, all backed by real data.

This journey typically unfolds in a few key stages, each one powered by LangSmith's core capabilities.

The infographic below really nails the four pillars of the LangSmith workflow, showing how you move from development and testing right into ongoing monitoring.

As you can see, it all starts with digging into individual interactions and eventually zooms out to high-level monitoring, which creates a powerful feedback loop for continuous improvement.

Phase 1: Initial Development and Debugging

The workflow kicks off the second you write your first piece of code that calls an LLM. All you have to do is set up the LangSmith environment variables, and suddenly every single LangChain call is captured and logged as a trace. Instantly, you have a microscope to see exactly what your application is doing under the hood.
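A minimal sketch of that setup step, done from Python for illustration. The variable names below follow the convention in LangSmith's documentation at the time of writing (older LangChain releases used `LANGCHAIN_TRACING_V2` and `LANGCHAIN_API_KEY`), so treat them as an assumption and confirm against the current docs. The key and project values are placeholders.

```python
import os

# Turn tracing on for this process.
os.environ["LANGSMITH_TRACING"] = "true"

# Placeholder values: in practice, set these in your shell or a secrets
# manager rather than hard-coding them in source.
os.environ.setdefault("LANGSMITH_API_KEY", "<your-api-key>")
os.environ.setdefault("LANGSMITH_PROJECT", "my-first-project")  # hypothetical name


def tracing_enabled():
    """Small helper (not part of any SDK) to confirm the flag is set."""
    return os.environ.get("LANGSMITH_TRACING", "").lower() == "true"


print("Tracing enabled:", tracing_enabled())
```

These variables need to be set before your LangChain code runs so the integration can pick them up.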

Think of this as your digital workshop. When your agent spits out a weird or just plain wrong answer, you don't have to play a guessing game. You just pull up the full trace in LangSmith, look at the exact prompt that was sent, see which tools were used (or not used), and pinpoint the source of the problem. This incredibly tight feedback loop makes the early stages of development and prompt engineering go so much faster.

Phase 2: Systematic Testing and Evaluation

Once you've got a working prototype, the goal shifts from "does it work?" to "does it work well, consistently?" This is where the evaluation features become your best friend. Instead of just manually testing a handful of examples, you build a structured dataset with inputs and the outputs you expect to see.

LangSmith lets you run your app against this entire dataset and then uses "evaluators" to score the results automatically. These evaluators can check for all sorts of things:

- Correctness: How close is the output to the "right" answer?

- Relevance: Is the response actually helpful and on-topic?

- Absence of Hallucinations: Is the model making things up again?

By scoring performance like this, you get an objective measure of whether that new prompt or different model you're trying is truly an improvement. It's also your safety net against regressions when you start making your app more complex. If you're struggling with models going off the rails, our guide on how to reduce hallucinations in LLMs has some great strategies.

Phase 3: Production Monitoring and Feedback

After all that testing, you're ready to go live. Once your app is in production, LangSmith shifts gears and becomes your mission control. It starts collecting data from all the live traces and rolls it up into high-level dashboards where you can track key metrics like latency, cost, and token consumption.

This is the moment you connect all your hard work to the real-world user experience. You can even gather user feedback—like a simple thumbs up or down—and link it directly to the specific trace that generated the response. Now you have a direct line of sight from what your users are feeling to the application's underlying behavior.
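The key mechanic is that feedback is stored against the ID of the run that produced the response. The sketch below shows that linkage with plain in-memory structures; `record_trace`, `record_feedback`, and `traces_with_score` are hypothetical helpers, not SDK functions. The LangSmith SDK exposes its own call for attaching feedback to a run, so check its reference for the exact signature.

```python
import uuid

traces = {}    # run_id -> what the app saw and produced
feedback = []  # feedback entries, each pointing at a run_id


def record_trace(user_input, output):
    """Store one request/response pair and return its run ID."""
    run_id = str(uuid.uuid4())
    traces[run_id] = {"input": user_input, "output": output}
    return run_id


def record_feedback(run_id, key, score):
    """Attach a feedback score to an existing run."""
    if run_id not in traces:
        raise KeyError(f"unknown run: {run_id}")
    feedback.append({"run_id": run_id, "key": key, "score": score})


def traces_with_score(key, score):
    """Return every trace whose feedback under `key` equals `score`."""
    return [traces[f["run_id"]] for f in feedback
            if f["key"] == key and f["score"] == score]


good_id = record_trace("How do I reset my password?", "Use the 'Forgot password' link.")
bad_id = record_trace("Can I change my plan?", "Sorry, I can't help with that.")

record_feedback(good_id, key="thumbs", score=1)  # thumbs up
record_feedback(bad_id, key="thumbs", score=0)   # thumbs down

# Jump straight from a thumbs-down to the exact run behind it.
for trace in traces_with_score("thumbs", 0):
    print("Needs review:", trace["input"], "->", trace["output"])
```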

This constant flow of data helps you spot problems as they pop up, monitor performance trends, and find new ways to make your application even better. And just like that, you have fresh insights to kick off the development cycle all over again.

Seeing LangSmith In Action With Real-World Examples

It's one thing to talk about features, but it's another to see how a tool tackles the messy, unpredictable problems we face every day. This is where LangSmith really shines—moving from a neat idea to a must-have part of your toolkit. Let's walk through a few common scenarios where it makes a massive difference.

Take a customer support chatbot that’s gone rogue. It's giving out answers that are sometimes great, sometimes just plain wrong. The team is stumped. Is the prompt off? Is the model struggling? Or is a tool call failing silently in the background?

This is a classic case for LangSmith. By diving into the traces from user complaints, the team can see the bot's entire chain of thought, step-by-step. They might find it's fetching data from an outdated knowledge base or that a certain prompt template consistently confuses the model's interpretation of what the user actually wants. The mystery is solved.

Optimizing A High-Cost Multi-Agent System

Here's another familiar headache: a powerful multi-agent system where the costs are starting to balloon. With each agent firing off its own LLM calls, pinpointing what's driving up the bill feels like searching for a needle in a haystack.

This is where LangSmith's monitoring becomes a lifesaver. It automatically logs token usage for every single action an agent takes. In no time, a developer can spin up a dashboard that visualizes the costs, breaking them down by agent, by specific task, or even by individual user session. Suddenly, they can zero in on the most expensive parts of the application and figure out how to make them more efficient, often achieving significant cost reductions without compromising on quality.

LangSmith turns abstract problems into specific, solvable tasks. Instead of asking "Why is my agent so expensive?" developers can ask, "Why is the research agent's summarization step using 50% of our token budget?"
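That second question is really a group-by over token usage. Below is a small sketch of the arithmetic, using invented agent names, step names, and token counts; a real breakdown would draw on the token usage recorded in your traces.

```python
from collections import Counter

# Made-up per-step token usage from one multi-agent session.
runs = [
    {"agent": "research", "step": "search", "tokens": 1500},
    {"agent": "research", "step": "summarize", "tokens": 5000},
    {"agent": "writer", "step": "draft", "tokens": 2500},
    {"agent": "reviewer", "step": "critique", "tokens": 1000},
]


def token_shares(runs):
    """Return each (agent, step) pair's fraction of total tokens."""
    totals = Counter()
    for run in runs:
        totals[(run["agent"], run["step"])] += run["tokens"]
    grand_total = sum(totals.values())
    return {key: count / grand_total for key, count in totals.items()}


shares = token_shares(runs)

# List the most expensive steps first.
for (agent, step), share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{agent}/{step}: {share:.0%} of tokens")
```

With these sample numbers, the research agent's summarization step accounts for half of all tokens, which makes it the obvious first target for optimization.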

This level of precision is why so many teams have come to depend on the platform. A recent survey of 1,000 AI developers found that 85% preferred LangSmith for debugging multi-agent environments, calling out its intuitive interface and collaborative tools. For a closer look at what makes these systems work, our guide on successful AI agent implementations is a great resource.

Refining A Content Pipeline With User Feedback

Finally, picture a content generation pipeline that occasionally hallucinates, producing plausible but incorrect information. To tackle this, a team can leverage LangSmith to build a "golden dataset" of perfect, hand-verified outputs. They then run their content generator and use AI-assisted evaluators to grade the results on accuracy and factual consistency.

What’s really powerful, though, is bringing real user feedback into the loop. When a user flags a piece of content as inaccurate, that signal can be piped directly back to the specific trace that created it. This closes the loop and creates a cycle of continuous improvement:

- Identify: A user flag points out a specific hallucination.

- Diagnose: The team pulls up the trace to see exactly what went wrong.

- Correct: They tweak the prompt, the model, or the retrieval logic.

- Verify: The problematic input is added to their evaluation dataset to ensure the fix holds and the issue doesn't pop up again.
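The Verify step can be sketched as a tiny regression suite: the flagged input joins the evaluation dataset, and the fixed app has to pass it along with everything that passed before. The dataset contents and the `app_before` and `app_after` functions are hypothetical stand-ins that return canned outputs rather than calling a model.

```python
# Existing evaluation dataset (hypothetical content).
eval_dataset = [
    {"input": "What is the return policy?", "expected": "30 days"},
]


def add_regression_case(dataset, flagged_input, verified_output):
    """Add a user-flagged input with its hand-verified answer, once."""
    if not any(ex["input"] == flagged_input for ex in dataset):
        dataset.append({"input": flagged_input, "expected": verified_output})


def failing_cases(app, dataset):
    """Return the inputs for which the app's output misses the expected answer."""
    return [ex["input"] for ex in dataset if app(ex["input"]) != ex["expected"]]


def app_before(question):
    """Stand-in for the pipeline before the fix (hallucinates one answer)."""
    canned = {
        "What is the return policy?": "30 days",
        "Do you ship overseas?": "Yes, to every country.",
    }
    return canned[question]


def app_after(question):
    """Stand-in for the pipeline after the prompt or retrieval fix."""
    canned = {
        "What is the return policy?": "30 days",
        "Do you ship overseas?": "Only within the EU",
    }
    return canned[question]


# Identify + Diagnose happened in the trace; now lock the case into the dataset.
add_regression_case(eval_dataset, "Do you ship overseas?", "Only within the EU")

failures_before = failing_cases(app_before, eval_dataset)
failures_after = failing_cases(app_after, eval_dataset)
print("Before fix:", failures_before)
print("After fix:", failures_after)
```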

These examples show that LangSmith is much more than a simple debugger. It’s a comprehensive platform for building, polishing, and maintaining AI applications that you can trust to perform reliably out in the wild.

How LangSmith Compares to Other AI Observability Tools

https://www.youtube.com/embed/-7FCS8TI1bU

The AI development world is crowded with tools, and every one of them seems to promise a solution to the observability puzzle. It’s easy to get lost in feature lists. To really understand where LangSmith fits in, you have to look at its core philosophy—it’s not just another tool, but one designed with a specific type of developer in mind.

LangSmith was built from the ground up for the weird and wonderful world of language model applications. This isn't a general MLOps platform that just happens to support LLMs as another model type. Its biggest advantage is its deep, native connection to the LangChain ecosystem.

For anyone building with LangChain, this means debugging, testing, and monitoring feel like a natural part of your workflow, not some clunky, separate step you have to remember to do.

A Focus on the Full Development Lifecycle

Many tools are specialists. Some are fantastic for monitoring models in production, catching drift, and sounding alarms. Others are great for tracking experiments while you're still training a model. LangSmith carves its own path by creating a single, unified platform that stays with you from the first prototype to the final production app.

It elegantly combines super-detailed, step-by-step debugging with powerful evaluation frameworks that can handle thousands of test cases. This is a game-changer. It means you can use the exact same interface to figure out why a single agent run failed and to systematically benchmark a new prompt against a massive dataset. This tight integration gets rid of the context-switching and data headaches that pop up when you’re juggling different tools for development and production.

LangSmith's real power is how it connects the dots. It draws a straight line from a single line of code, through a rigorous evaluation, all the way to a user’s feedback in production—all in one place.

To see the bigger picture, it helps to think about how LangSmith relates to traditional application performance monitoring (APM) tools. APM tools give you visibility into your servers and code. LangSmith does the same thing, but for the chaotic, often unpredictable logic inside your LLM chains and agents. It even supports OpenTelemetry, which lets you pull all your LLM traces into the same system you use for the rest of your tech stack.

Differentiators at a Glance

So, how does LangSmith really stack up against the competition? The best way to see the difference is to compare it directly with a few other players in the AI space.

LangSmith vs. Alternatives: A Feature Comparison

This table offers a clear look at how LangSmith, Arize AI, and Mirascope position themselves, helping you see which tool aligns best with your team's workflow and goals.

| Feature | LangSmith | Arize AI | Mirascope |
|---|---|---|---|
| Primary Use Case | End-to-end LLM app development, debugging, and evaluation | Production monitoring, drift detection, and ML observability | SDK for building LLM apps with version control and tooling |
| LangChain Integration | Native and seamless. Tracing is automatic for LangChain apps. | SDK-based integration required for capturing traces. | Designed as an alternative framework, not for integration. |
| Core Strength | Deep, intuitive debugging and integrated, iterative evaluation. | Advanced analytics for models already in production. | Tooling for prompt management and structured outputs. |
| Target User | LangChain developers building and refining complex agents. | MLOps engineers monitoring live models at scale. | Python developers looking for a structured app-building SDK. |

In the end, the right tool comes down to what you're trying to do. If you're building with LangChain and need a single platform that can help you debug a tricky prompt and then monitor that same application in production, LangSmith provides a seamless, developer-first experience that's hard to beat.

Common Questions About LangSmith Answered

Whenever you're thinking about adding a new tool to your stack, a bunch of practical questions always pop up. Let's get right into some of the most common ones we hear from developers about LangSmith, so you can get a clearer picture of what it does and where it fits.

My goal here is to tackle these questions head-on and give you the confidence to jump in.

Is LangSmith Only for LangChain?

This is probably the most frequent question, and the answer is a clear and simple no.

While LangSmith and LangChain work together beautifully—tracing is pretty much automatic when you use the framework—it was built as a standalone platform for any LLM application.

LangSmith provides SDKs for both Python and JavaScript, so you can manually instrument your code no matter what you're building with. Whether you're hitting the OpenAI API directly, working with models from Anthropic or Google, or using a completely different library, you can still tap into all of LangSmith’s powerful observability features.
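As an illustration of manual instrumentation, the sketch below wraps plain Python functions with the `traceable` decorator from the LangSmith Python SDK. Two caveats: the `call_model` function is a placeholder that echoes its input instead of calling a real provider, and the `try`/`except` fallback is only there so the example still runs where the SDK is not installed. Whether anything is actually sent to LangSmith depends on your tracing environment variables and API key.

```python
try:
    from langsmith import traceable  # decorator from the LangSmith Python SDK
except ImportError:
    def traceable(*args, **kwargs):
        """No-op stand-in so this sketch runs without the SDK installed."""
        if len(args) == 1 and callable(args[0]) and not kwargs:
            return args[0]          # used as a bare @traceable
        return lambda fn: fn        # used as @traceable(...)


@traceable(name="call_model", run_type="llm")
def call_model(prompt: str) -> str:
    """Placeholder for a direct provider call (OpenAI, Anthropic, Google, ...)."""
    return f"echo: {prompt}"


@traceable(name="answer_question")
def answer_question(question: str) -> str:
    """Top-level function; the nested call shows up as a child step when traced."""
    prompt = f"Answer briefly: {question}"
    return call_model(prompt)


print(answer_question("What is LangSmith?"))
```

Because the decorator wraps ordinary functions, the same pattern applies whether the body calls a provider SDK directly or goes through another library.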

What Is the Real Difference Between Traces and Logs?

It helps to think about this in practical terms.

A trace is the detailed, step-by-step story of a single, complex operation, like watching a replay of one specific play in a football game. A log is the simple, chronological list of all operations, like the game's final box score.

You'll turn to traces when you need to debug a single, complicated interaction from start to finish. Think, "Why did this specific agent run fail?" In contrast, you'll look at logs to get a high-level overview of system activity and answer questions like, "How many errors have we had in the last hour?"

How Does LangSmith Help Manage Costs?

LangSmith is a huge help for managing costs because it automatically tracks token usage for both prompts and completions inside every single trace. That level of detail is where the magic happens.

This lets you see the exact cost of every API call, chain, or agent run. From there, you can use the monitoring dashboards to:

- See how your costs are trending over time.

- Pinpoint the most expensive parts of your application.

- Filter costs by specific users, tasks, or even custom metadata tags you've set up.

Having this kind of deep visibility is crucial for fine-tuning your prompts and app logic to build something that's not just powerful, but also cost-effective.

Can I Deploy LangSmith on My Own Servers?

Yes, absolutely. For teams with strict data privacy or security rules, LangSmith offers a self-hosted option. This lets you deploy the entire platform inside your own virtual private cloud (VPC) or on-premises infrastructure.

You get all the same core features as the cloud version—tracing, evaluation, monitoring—but with the peace of mind that all your sensitive application data stays completely within your control. This makes it a great fit for anyone working in regulated fields like finance, healthcare, or government.
