How to Reduce Hallucinations in LLMs: Essential Tips

If you want to get a handle on LLM hallucinations, you need to go beyond just writing simple prompts. The real wins come from a mix of grounded, verifiable strategies. I've found the most effective approach combines precise prompt engineering, anchoring the model in real data with Retrieval-Augmented Generation (RAG), and sharpening its knowledge through targeted fine-tuning.

Think of it like this: these methods work together to put guardrails on the model, forcing it to lean on actual information instead of just making things up from its internal memory, which can be surprisingly unreliable.

What Are LLM Hallucinations and Why They Matter

An LLM hallucination is when an AI states something with complete confidence, but the information is factually wrong, nonsensical, or just plain made up. It's not really a "bug" like you'd find in traditional software. It’s a natural side effect of how these models work.

At their core, LLMs are next-word prediction machines, not databases of truth. Their main job is to string together words that are grammatically correct and statistically likely, based on the mountains of text they were trained on. This is precisely what can lead them off track, especially when a question is a bit ambiguous or pushes the limits of what they've learned.

The Root Causes of AI Fabrication

To stop hallucinations, you first have to understand why they happen. The problem really comes down to a few core limitations in how LLMs are built today.

- Parametric Knowledge Gaps: All the "knowledge" an LLM has is stored as complex mathematical patterns, not a searchable database. If a piece of information wasn't in its training data (or wasn't there very often), the model might just invent a plausible-sounding answer to fill that gap.

- Training Data Biases and Errors: These models learn from a massive slice of the internet, which is full of biased opinions, outdated information, and straight-up falsehoods. It's almost inevitable that the LLM will absorb and repeat some of these inaccuracies.

- Over-optimization for Coherence: The model is trained to produce a smooth, coherent response above all else. This means it can sometimes weave fabricated details into a block of correct information just to make the whole thing read better.

The real danger of hallucinations isn’t just getting a wrong answer; it’s getting a wrong answer that sounds authoritative and convincing. This erodes user trust and can introduce significant risks in business applications, from providing incorrect legal advice to generating flawed financial reports.

These errors aren't just minor quirks; they're major hurdles to building AI systems you can actually rely on. Tackling them means getting smarter about how we interact with the models and making deeper changes to the models themselves.

Throughout this guide, we'll walk through the practical, proven techniques—from prompt engineering to RAG and fine-tuning—that give you more control over your model's outputs and help you build more trustworthy AI applications.

Fine-Tuning Your Prompts for Factual Accuracy

When it comes to fighting hallucinations, prompt engineering is your first and most powerful line of defense. It's all about how you frame your requests to steer the model toward answers that are grounded in fact. A sloppy, open-ended prompt is practically an invitation for an LLM to start making things up.

Think of it this way: giving a vague prompt is like telling a new assistant to "handle marketing." You'll get something, but who knows what. A well-crafted prompt, on the other hand, is like giving them a detailed creative brief, complete with brand guidelines, key messaging, and examples of what you're looking for. The results are worlds apart.

From Vague Questions to Direct Instructions

The secret is to leave as little to the imagination as possible. You need to shift your mindset from asking a question to directing a process. This means being crystal clear about the context, the format you want, and the guardrails the model should operate within.

Let’s look at a simple marketing example.

Vague Prompt (High Hallucination Risk): "Write an ad for our new running shoe."

This is asking for trouble. The model might invent features that don't exist, create a fictional brand name, or guess at the target audience. It's a total crapshoot.

Precise Prompt (Low Hallucination Risk): "You are a marketing copywriter. Using *only* the product details below, write three ad headlines for the 'AeroStride 5' running shoe. Your target audience is marathon runners. Each headline must be under 10 words and highlight the shoe's lightweight carbon-fiber plate. Do not mention price.

Product Details:

- Name: AeroStride 5

- Key Feature: Full-length carbon-fiber plate for energy return

- Weight: 7.5 oz

- Target Runner: Competitive marathoners"

See the difference? This prompt provides a role, context, specific constraints, and source material. We've dramatically shrunk the space where the model could go off the rails and invent something.
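If you send prompts like this from code, it helps to assemble them from parts so the role, constraints, and source facts never get dropped. Here's a minimal sketch; the function name `build_constrained_prompt` and its parameters are my own illustration, not part of any library.

```python
def build_constrained_prompt(role, task, constraints, facts):
    """Assemble a prompt from a role, a task, explicit constraints, and source facts.

    Hypothetical helper for illustration only.
    """
    lines = [f"You are {role}. {task}"]
    lines.extend(constraints)                      # one rule per sentence
    lines.append("")                               # blank line before the source material
    lines.append("Product Details:")
    lines.extend(f"- {key}: {value}" for key, value in facts.items())
    return "\n".join(lines)


ad_prompt = build_constrained_prompt(
    role="a marketing copywriter",
    task="Using only the product details below, write three ad headlines "
         "for the 'AeroStride 5' running shoe.",
    constraints=[
        "Your target audience is marathon runners.",
        "Each headline must be under 10 words and highlight the shoe's "
        "lightweight carbon-fiber plate.",
        "Do not mention price.",
    ],
    facts={
        "Name": "AeroStride 5",
        "Key Feature": "Full-length carbon-fiber plate for energy return",
        "Weight": "7.5 oz",
        "Target Runner": "Competitive marathoners",
    },
)
print(ad_prompt)
```

Keeping the facts in a dictionary means the only product claims the model ever sees are the ones you typed in yourself.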

Leveling Up with Advanced Prompting Techniques

Getting specific is just the start. A few structured prompting techniques can really boost factual accuracy, especially when you're building more sophisticated AI applications. It's worth getting familiar with the different types of prompting techniques to see what works best for your needs.

Here are a few of the most battle-tested strategies I use all the time:

- Few-Shot Prompting: This is my go-to for locking in a specific format. You give the LLM a couple of quick examples of what you want before making your actual request. It’s like saying, “Here’s what a good answer looks like. Now you do one.” It works wonders.

- Chain-of-Thought (CoT) Prompting: For anything that requires reasoning, ask the model to "think step-by-step." Forcing it to lay out its logic often helps it catch its own flawed assumptions before it spits out a final, wrong answer.

- Instructional Guardrails: This is just adding direct, non-negotiable rules right into your prompt. You’d be surprised how well a model follows a direct order.

Here's a simple instruction I add to nearly all my fact-based prompts: "If you do not know the answer or if the information is not in the provided context, respond with 'I do not have enough information to answer.'" This little phrase is a powerful safety net, stopping the model from just guessing when it hits a knowledge gap.
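The three techniques above can be combined in a single prompt. The sketch below shows one way to do it, assuming a simple Q/A text layout; the helper name `build_few_shot_prompt` and the example data are hypothetical.

```python
REFUSAL = "I do not have enough information to answer."


def build_few_shot_prompt(examples, question, context, step_by_step=False):
    """Combine a guardrail, optional chain-of-thought cue, and few-shot examples."""
    parts = [
        "Answer using only the provided context. "
        "If you do not know the answer or if the information is not in the "
        f"provided context, respond with '{REFUSAL}'"
    ]
    if step_by_step:
        # Chain-of-thought cue: ask for the reasoning before the final answer.
        parts.append("Think step-by-step before giving your final answer.")
    # Few-shot examples show the model what a good answer looks like.
    for example_question, example_answer in examples:
        parts.append(f"Q: {example_question}\nA: {example_answer}")
    parts.append(f"Context:\n{context}")
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


few_shot_prompt = build_few_shot_prompt(
    examples=[
        ("What does the shoe weigh?", "7.5 oz"),
        ("What colors does it come in?", REFUSAL),
    ],
    question="What is the key feature of the AeroStride 5?",
    context="Name: AeroStride 5. Key Feature: Full-length carbon-fiber plate. Weight: 7.5 oz.",
    step_by_step=True,
)
print(few_shot_prompt)
```

Notice that one of the examples demonstrates the refusal itself. Showing the model a case where "I don't know" is the right answer tends to reinforce the rule better than stating it alone.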

Putting Instructional Guardrails into Practice

Let’s see how this works in a real-world scenario. Imagine you're building a customer support bot that needs to answer questions based only on an internal company policy document.

Without a Guardrail:

A customer asks, "What's your policy for international returns?" If that specific detail isn't in the document, a normal LLM will often try to be "helpful" by making up a policy that sounds reasonable but is completely wrong. This is a huge liability.

With a Guardrail:

You’d structure your prompt like this: "Based *only* on the text below, answer the user's question. If the answer is not in the text, say 'I cannot find information on that topic in our policy guide.'

[Insert policy document text here]

User Question: What is the policy for international returns?"

That small tweak fundamentally changes the model’s behavior. It teaches the LLM to stick to the provided source material and admit when it doesn't know something. Mastering these prompt engineering skills gives you a solid foundation for building AI systems people can actually trust.
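In a support bot, it's also useful to detect when the model used the fallback phrase so you can route the customer to a human. Here's a small sketch under that assumption; `build_policy_prompt` and `is_fallback` are illustrative names, and the sample policy text is made up.

```python
FALLBACK = "I cannot find information on that topic in our policy guide."


def build_policy_prompt(policy_text, question):
    """Wrap the policy document and the user's question in the guardrail prompt."""
    return (
        "Based only on the text below, answer the user's question. "
        f"If the answer is not in the text, say '{FALLBACK}'\n\n"
        f"{policy_text}\n\n"
        f"User Question: {question}"
    )


def is_fallback(response):
    """Return True when the model declined instead of answering."""
    return FALLBACK.rstrip(".").lower() in response.lower()


policy_prompt = build_policy_prompt(
    policy_text="Domestic returns are accepted within 30 days with a receipt.",  # made-up sample
    question="What is the policy for international returns?",
)

# Pretend this came back from the model:
model_reply = "I cannot find information on that topic in our policy guide."
if is_fallback(model_reply):
    print("Escalating to a human agent.")
```

The check is a plain substring match, so it only works if the model repeats the fallback sentence close to verbatim. That's one more reason to spell the exact wording out in the prompt.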

Grounding Your LLM with Retrieval-Augmented Generation

While smart prompting puts guardrails on your LLM, Retrieval-Augmented Generation (RAG) gives it a library card to your own curated source of truth. It's one of the most effective ways to reduce hallucinations in LLM applications because it forces the model to base its answers on real, verifiable data instead of just its internal, often flawed, memory.

Think of it as giving your AI an open-book test. Instead of asking it to recall a fact from its vast but imperfect training, you're telling it: "Go look this up in our approved documents, find the answer, and then tell me what you found." This simple shift from recall to retrieval dramatically improves factual accuracy.

The RAG process connects your LLM to an external knowledge base—this could be your company's internal wiki, a database of product specs, or a curated set of research papers. When a user asks a question, the system first retrieves relevant chunks of information from this knowledge base. It then feeds those chunks to the LLM as context, right alongside the original prompt.

The Core Components of a RAG System

Putting together a RAG pipeline involves a few key pieces that work in tandem to ground the model's output. The beauty of this approach is its modularity; you can tweak each part to better suit your specific needs.

Here's how it usually flows:

- Knowledge Base Creation: You start by gathering your trusted documents (like PDFs, text files, or database entries) and breaking them down into smaller, manageable chunks.

- Vectorization: Each chunk is converted into a numerical representation called a vector embedding. This process captures the semantic meaning of the text, allowing the system to find conceptually similar information, not just matching keywords.

- User Query and Retrieval: When a user submits a prompt, it's also converted into a vector embedding. The system then performs a similarity search to find the chunks in your knowledge base that are most relevant to the query.

- Augmented Prompt Generation: The top-matching text chunks are combined with the user's original question to create a new, context-rich prompt.

- LLM Response: Finally, this augmented prompt is sent to the LLM, which uses the provided context to formulate a factually grounded answer.
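The flow above can be sketched end to end in a few dozen lines. To keep it self-contained, this toy version swaps the embedding model for a bag-of-words count vector and stops at the augmented prompt instead of calling an LLM. Every function name and the sample documents are assumptions for illustration; a real system would use a proper embedding model and a vector store.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Knowledge base creation: split a document into chunks of at most max_words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Vectorization: toy stand-in for an embedding model (word counts)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, chunks, top_k=2):
    """Retrieval: rank chunks by similarity to the query and keep the best ones."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine_similarity(query_vec, embed(c)), reverse=True)
    return ranked[:top_k]


def build_augmented_prompt(query, retrieved):
    """Augmented prompt generation: number the chunks so the model can cite them."""
    context = "\n\n".join(f"[{i + 1}] {chunk}" for i, chunk in enumerate(retrieved))
    return (
        "Answer the question using only the numbered context below and cite the "
        "passage numbers you relied on. If the context does not contain the answer, "
        "say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


documents = [
    "Eligible employees receive 20 paid days of paternity leave, taken within six months of the birth.",
    "The office is closed on public holidays and during the last week of December.",
    "Expense reports must be submitted within 30 days of purchase.",
]
chunks = [chunk for doc in documents for chunk in chunk_text(doc)]
question = "How many paternity leave days do I get?"
top_chunks = retrieve(question, chunks, top_k=1)
augmented_prompt = build_augmented_prompt(question, top_chunks)
print(augmented_prompt)  # this is what you would send to the LLM
```

Word counts only match shared keywords, which is exactly the limitation real embeddings are meant to overcome, so treat this as a map of the moving parts and not a retriever you'd ship.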

The real magic of RAG is that it makes the LLM's "thinking" process transparent. By asking it to cite its sources from the retrieved context, you not only get a more accurate answer but also a way to verify where the information came from.

RAG in Action: A Before-and-After Scenario

Imagine you’re building a chatbot to help employees understand your company's benefits policy, which is buried in a 100-page PDF.

Without RAG:

An employee asks, "How many paternity leave days do I get?" A standard LLM, relying on its general training data, might confidently guess with a generic answer like, "Most companies offer two weeks of paternity leave." That's a huge problem if your policy is different.

With RAG:

The RAG system first finds the exact section from the benefits PDF that discusses parental leave. It then provides this text to the LLM and instructs it to answer the question based only on that context.

Now, the model responds: "According to our policy, eligible employees receive 20 paid days of paternity leave, which must be taken within the first six months after the child's birth."

The difference is night and day. The RAG-powered response is specific, accurate, and directly tied to your company's actual documents.

This technique is a proven method for stopping fabrication in its tracks. Research shows that integrating RAG can significantly cut down on confident but incorrect answers because the model is forced to reference real data. One analysis found that using RAG with GPT-4o lowered hallucination rates to 20.7% in longer responses and 24.7% in shorter ones—a major improvement. You can see how Mount Sinai experts compared LLM hallucinations and check the data for yourself.

But remember, the quality of your RAG system hinges entirely on the quality of your knowledge base. If your source documents are outdated or inaccurate, the LLM will just generate answers based on that faulty information. Keeping your knowledge source clean, current, and well-organized is the most important part of building a reliable RAG-powered application.

The Impact of High-Quality Data and Fine-Tuning

While prompt engineering and RAG are fantastic for steering a model’s output in real time, they don't change the model's fundamental knowledge. To truly get to the root of hallucinations, you have to look at what the model was built on: its training data. At the end of the day, an LLM is a mirror reflecting the information it learned from. If that information is garbage, well, you know the old saying.

This is where rolling up your sleeves with data governance and targeted fine-tuning really pays off. By feeding a base model a steady diet of high-quality, domain-specific data, you can mold it into a reliable expert for your exact needs.

Clean Data is the Bedrock of Truthful AI

You wouldn't build a house on a shaky foundation, and the same logic applies here. Building a factually accurate LLM starts with clean, reliable data. Honestly, the time you spend curating your dataset is probably the single best investment you can make in reducing hallucinations.

This isn't just about grabbing a bunch of documents. It's a careful, deliberate process.

- Vet Your Sources: Start by identifying authoritative, accurate, and current information for your domain. Think internal company knowledge bases, peer-reviewed studies, or verified industry reports.

- Scrub and Polish: This is the tedious but critical part. You need to hunt down and remove duplicate entries, fix factual errors, and standardize the formatting. It also means filtering out biased or irrelevant content that could poison the well. For little things that add up, our guide on crafting an AI prompt to remove em dashes and en dashes can help keep your text consistent.

- Structure for Success: Organize the data logically. If you're building a question-answering bot, this could mean creating clean, verified pairs of questions and their corresponding correct answers.
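As a small illustration of the "scrub and polish" and "structure" steps, here's a sketch that normalizes whitespace and Unicode, drops empty rows, and removes duplicate questions from a list of question-answer pairs. The function names and sample rows are hypothetical, and real pipelines usually need fuzzier duplicate detection than this exact-match version.

```python
import re
import unicodedata


def normalize(text):
    """Standardize Unicode forms and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def clean_qa_pairs(pairs):
    """Return deduplicated, normalized question/answer records."""
    seen_questions = set()
    cleaned = []
    for question, answer in pairs:
        q, a = normalize(question), normalize(answer)
        if not q or not a:
            continue                      # drop rows with a missing side
        key = q.casefold()                # case-insensitive duplicate check
        if key in seen_questions:
            continue
        seen_questions.add(key)
        cleaned.append({"question": q, "answer": a})
    return cleaned


raw_pairs = [
    ("What is the return window?", "30 days."),
    ("what is the  return window? ", "30 days."),   # duplicate with messy spacing
    ("", "Orphaned answer with no question."),
]
dataset = clean_qa_pairs(raw_pairs)
print(dataset)
```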

The link between data quality and model reliability isn’t just anecdotal. Study after study confirms that hallucination rates are directly tied to noise, gaps, and biases in the training data. A strict data pipeline is your best defense, especially in high-stakes fields like finance or medicine.

Fine-Tuning for Specialized Accuracy

Once you have a pristine, curated dataset, you can use it to fine-tune a general-purpose LLM. Fine-tuning is essentially continuing a pre-trained model's education, but with a highly specialized curriculum—your data.

This process doesn't teach the model brand new concepts from scratch. Instead, it refines its existing knowledge and tailors its responses to fit the nuances of your specific domain.

A base LLM is like a brilliant liberal arts grad; it knows a bit about everything. Fine-tuning is like sending that grad to law school. You're taking that broad intelligence and focusing it, creating a specialist with deep, reliable expertise in one area.

For instance, a generic LLM might give a vague, and possibly wrong, answer to a complex medical question. But a model fine-tuned on a dataset of peer-reviewed medical journals and clinical trial data will give you a far more accurate, nuanced, and contextually aware response.

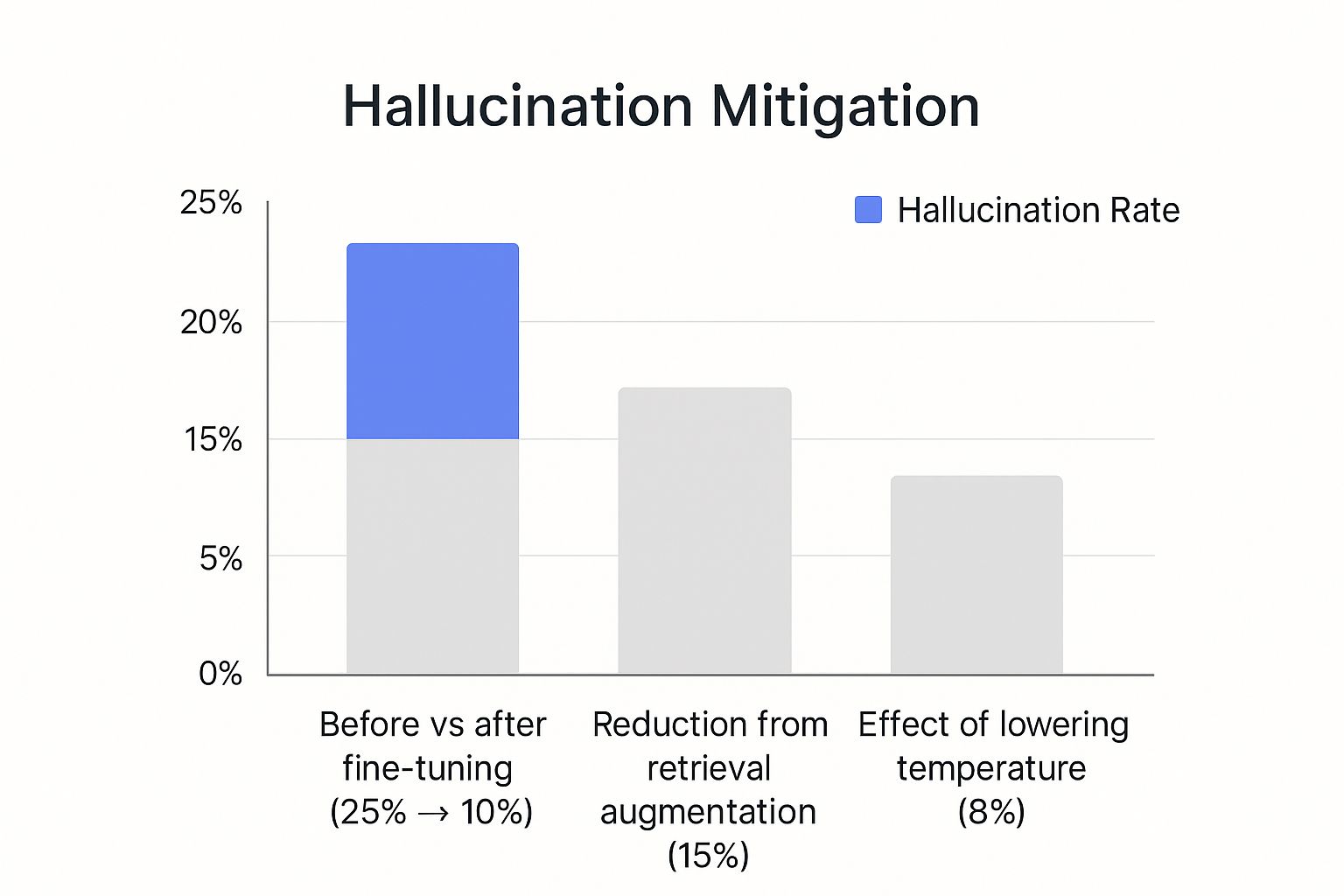

This visual shows just how much of a difference the right techniques, including fine-tuning, can make.

As you can see, fine-tuning can slash hallucination rates, often cutting the baseline error rate by more than half.

Now, let's pull these techniques together to see how they stack up.

Comparing Hallucination Reduction Techniques

This table compares the primary methods for reducing LLM hallucinations, outlining their approach, complexity, and ideal use cases.

| Technique | Core Approach | Implementation Complexity | Best For |
|---|---|---|---|
| Prompt Engineering | Guiding the model's output at inference time with clear instructions and context. | Low | Quick adjustments, controlling tone, and providing immediate context. |
| Retrieval-Augmented Generation (RAG) | Providing the model with external, verified information to use when generating a response. | Medium | Grounding outputs in specific, up-to-date documents or knowledge bases. |
| Fine-Tuning | Continuing the training of a base model on a smaller, curated, domain-specific dataset. | High | Embedding deep domain expertise and specialized knowledge into the model itself. |
| Reinforcement Learning from Human Feedback (RLHF) | Aligning the model's behavior with human preferences for truthfulness and helpfulness. | Very High | Polishing a model to be safer, more helpful, and less prone to making things up. |

Each of these methods plays a distinct role, and they often work best when used together. Fine-tuning provides the specialized knowledge, RAG supplies the real-time facts, and good prompting guides the final output.

Aligning Models with Human Feedback

There's another powerful technique that works hand-in-glove with fine-tuning: Reinforcement Learning from Human Feedback (RLHF). This method moves beyond just teaching the model facts; it teaches the model what we consider a "good" and "truthful" answer.

Here’s the gist of how it works:

- Gather Human Preferences: First, you have human reviewers look at multiple answers the model gives for the same prompt. They rank these answers from best to worst based on accuracy, helpfulness, and safety.

- Train a Reward Model: All that ranking data is used to train a separate "reward model." Its only job is to learn to predict which kind of response a human would prefer.

- Optimize the LLM: The original LLM is then fine-tuned using the reward model as a guide. It generates an answer, the reward model "scores" it, and the LLM learns to produce outputs that get higher and higher scores.
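To make the reward-model step a little more concrete: one common way to train on ranked pairs is a pairwise loss that shrinks as the reward model scores the human-preferred answer higher than the rejected one. The sketch below shows that loss, -log(sigmoid(preferred - rejected)), on plain numbers. It's an assumption about how such a model might be trained, not a description of how any particular vendor does it.

```python
import math


def pairwise_preference_loss(score_preferred, score_rejected):
    """Compute -log(sigmoid(score_preferred - score_rejected)).

    Written as softplus(-margin) in a numerically stable form.
    """
    margin = score_preferred - score_rejected
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# The reward model agrees with the human ranking: small loss.
agree = pairwise_preference_loss(score_preferred=2.0, score_rejected=-1.0)

# The reward model prefers the answer humans rejected: large loss.
disagree = pairwise_preference_loss(score_preferred=-1.0, score_rejected=2.0)

print(f"agrees with humans:    {agree:.3f}")
print(f"disagrees with humans: {disagree:.3f}")
```

Minimizing this loss over many ranked pairs is what pushes the reward model's scores toward the reviewers' preferences.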

While it’s a complex and resource-intensive process, RLHF is a key ingredient in what makes leading models like ChatGPT and Claude feel so reliable. It creates a powerful incentive for the model to avoid making things up, providing a critical last line of defense against hallucinations.

How to Measure and Evaluate LLM Hallucinations

You can't fix what you can't measure. It’s a simple truth. While things like prompt engineering and RAG are fantastic tools for taming hallucinations, without a solid evaluation process, you’re just making educated guesses. A systematic way to measure these errors is what separates wishful thinking from reliable engineering.

The old-school machine learning metrics, things like accuracy or BLEU scores, just don't cut it here. BLEU rewards word overlap with a reference text, and a plain accuracy number assumes there is one exact right output, so neither checks whether a free-form answer is actually true. A model can spit out a perfectly fluent, high-scoring sentence that's pure fiction. This is exactly why we need a more specialized playbook for measurement.

Establishing a Ground Truth Dataset

Everything starts with creating a "ground truth" dataset. Think of it as the ultimate answer key that you'll grade your LLM against. This is simply a hand-picked collection of prompts paired with their factually correct, verified answers.

This dataset becomes your single source of truth. When you test a model’s output against it, you're not just looking for similar wording; you're confirming that the facts line up.

A strong ground truth dataset is your most valuable asset in the fight against hallucinations. It needs to be diverse, covering the topics and question types your application will actually face, and meticulously vetted by human experts.

Putting one together takes real effort, but skipping this step is not an option. A great way to start is by logging real-world user questions and having domain experts write out the ideal, verified answers. This ensures your tests reflect the real challenges your system will encounter in the wild.

Key Metrics for Quantifying Hallucinations

With your ground truth in hand, you need the right metrics to actually score your LLM's performance. Relying on standard metrics is a trap many people fall into.

One 2024 study of systematic reviews laid bare just how misleading those metrics can be. It found some pretty shocking hallucination rates that would otherwise go unnoticed. GPT-3.5, for instance, hallucinated 39.6% of its references. Bard (now Gemini) was even worse, with a massive 91.4% hallucination rate in the same task.

Even the mighty GPT-4 only managed a factual precision of 13.4%. That means only about one in every seven or eight references it cited was actually correct.

Here's a look at the data from that study by Chelli et al.:

LLM Hallucination Rates in Systematic Reviews

| LLM Model | Reference Hallucination Rate (%) | Factual Precision Rate (%) |
|---|---|---|
| GPT-3.5 | 39.6 | 23.3 |
| GPT-4 | 28.4 | 13.4 |
| BARD (Gemini) | 91.4 | 2.6 |
| BING CHAT (Copilot) | 56.8 | 9.9 |

These numbers drive home why specialized evaluation is so critical. To get a real sense of what's going on, you need to focus on more targeted methods:

- Factual Consistency Scoring: This is a direct comparison. You take the claims made in the LLM’s response and check them against your ground truth or a source document. The goal is simple: spot any contradictions or made-up "facts."

- Negation Detection: This targets a more subtle but common form of hallucination. The technique specifically hunts for instances where the model incorrectly denies something true or confirms something false.

- Information Retrieval Metrics (for RAG systems): If you're using a RAG setup, you have two moving parts to evaluate: the retriever and the generator. You'll want to track metrics like context precision (did the documents it pulled up actually contain the answer?) and context recall (did it find all the relevant documents?).
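For the retrieval side, the two metrics are easy to compute once you've labeled which documents are relevant to each test question. Here's a simplified, set-based sketch. Some evaluation toolkits define context precision in a rank-aware way, so treat these definitions and the document IDs as assumptions for illustration.

```python
def context_precision(retrieved_ids, relevant_ids):
    """Share of retrieved documents that are actually relevant."""
    if not retrieved_ids:
        return 0.0
    relevant = set(relevant_ids)
    hits = sum(1 for doc_id in retrieved_ids if doc_id in relevant)
    return hits / len(retrieved_ids)


def context_recall(retrieved_ids, relevant_ids):
    """Share of relevant documents that the retriever managed to find."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    found = relevant & set(retrieved_ids)
    return len(found) / len(relevant)


retrieved = ["doc_3", "doc_7", "doc_9", "doc_1"]   # what the retriever returned
relevant = ["doc_3", "doc_1", "doc_4"]             # what a human marked as relevant

precision = context_precision(retrieved, relevant)  # 2 of 4 retrieved are relevant
recall = context_recall(retrieved, relevant)        # 2 of 3 relevant were found
print(f"context precision: {precision:.2f}, context recall: {recall:.2f}")
```

Low precision means the generator is wading through noise, while low recall means the answer may never have reached it at all. The two failures call for different fixes, which is why it pays to track them separately.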

Implementing an Evaluation Pipeline

A proper evaluation pipeline automates this whole measurement process, giving you a way to track your progress as you make changes. This usually involves a mix of automated tools and human-in-the-loop validation.

One surprisingly effective technique is to use another, more powerful LLM as a "judge." You can craft a prompt that instructs the judge LLM to compare your model's output to the ground truth and score its factual accuracy. It's not perfect, but it can help you scale your testing efforts dramatically. You can learn more about the concepts behind this in our guide to understanding perplexity and ChatGPT differences.
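Here's a rough sketch of what the judge setup might look like: one function builds the grading prompt, and another pulls a numeric score out of the judge's reply. The 1 to 5 scale, the `SCORE:` reply format, and the function names are all my own assumptions. The call to the judge model itself is left out because it depends on your provider.

```python
import re

JUDGE_TEMPLATE = (
    "You are grading an answer for factual accuracy.\n"
    "Question: {question}\n"
    "Verified answer: {reference}\n"
    "Answer to grade: {candidate}\n"
    "Compare the answer to grade against the verified answer. "
    "Reply with 'SCORE: N' where N is 1 (contradicts the verified answer) "
    "to 5 (fully consistent), followed by a one-sentence reason."
)


def build_judge_prompt(question, reference, candidate):
    """Fill the grading template for one test case."""
    return JUDGE_TEMPLATE.format(question=question, reference=reference, candidate=candidate)


def parse_judge_score(judge_reply):
    """Extract the 1-5 score, or return None if the judge ignored the format."""
    match = re.search(r"SCORE:\s*([1-5])\b", judge_reply)
    return int(match.group(1)) if match else None


judge_prompt = build_judge_prompt(
    question="How many paternity leave days do I get?",
    reference="Eligible employees receive 20 paid days of paternity leave.",
    candidate="Most companies offer two weeks of paternity leave.",
)

# Pretend this came back from the judge model:
judge_reply = "SCORE: 1\nThe answer gives a generic figure that contradicts the policy."
print(parse_judge_score(judge_reply))
```

Returning `None` for unparseable replies matters in practice. You want malformed judge output to show up as a gap in your logs, not to be silently counted as a score.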

A typical evaluation pipeline breaks down into a few clear steps:

- Input a Prompt: Grab a prompt from your ground truth dataset.

- Generate a Response: Feed it to the LLM you're testing and get its output.

- Compare and Score: Use your chosen method—an automated script, a judge LLM, or a human reviewer—to score the response against the verified answer.

- Log the Results: Keep the scores in a structured format. This lets you see trends over time and compare different model versions or prompt strategies.
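Those four steps map onto a short loop. The sketch below uses a canned stand-in for the model under test and a deliberately crude substring scorer, so every name and data row here is an assumption for illustration. In a real pipeline you'd plug in your actual model call and a stronger scorer, such as a judge LLM or a human review queue.

```python
import csv
import io


def substring_score(response, verified_answer):
    """Crude automated scorer: 1.0 if the verified answer appears in the response."""
    return 1.0 if verified_answer.lower() in response.lower() else 0.0


def run_evaluation(ground_truth, generate, score=substring_score):
    """Input a prompt, generate a response, compare and score, collect the results."""
    results = []
    for item in ground_truth:
        response = generate(item["prompt"])
        results.append({
            "prompt": item["prompt"],
            "response": response,
            "score": score(response, item["answer"]),
        })
    return results


def results_to_csv(results):
    """Log the results in a structured format you can diff between runs."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["prompt", "response", "score"])
    writer.writeheader()
    writer.writerows(results)
    return buffer.getvalue()


def fake_model(prompt):
    """Stand-in for the LLM under test."""
    canned = {
        "How many paid paternity leave days are offered?":
            "Eligible employees receive 20 paid days.",
    }
    return canned.get(prompt, "Most companies offer two weeks.")


ground_truth = [
    {"prompt": "How many paid paternity leave days are offered?", "answer": "20 paid days"},
    {"prompt": "What is the policy for international returns?", "answer": "I cannot find information"},
]
results = run_evaluation(ground_truth, fake_model)
mean_score = sum(row["score"] for row in results) / len(results)
print(results_to_csv(results))
print(f"mean score: {mean_score:.2f}")
```

Because the scorer is passed in as a parameter, you can start with the cheap substring check and swap in a better one later without touching the loop.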

This isn't a one-and-done setup. It’s a continuous feedback loop. Rigorous measurement turns the abstract problem of "hallucinations" into a concrete engineering challenge you can actually solve, one iteration at a time.

Frequently Asked Questions About LLM Hallucinations

As you start putting these strategies into practice, you're bound to run into some real-world questions. Let's tackle some of the most common ones that come up when trying to get a handle on LLM hallucinations.

Which Hallucination Reduction Technique Should I Start With?

Always, always start with prompt engineering. It's the lowest-hanging fruit—the fastest, cheapest, and most straightforward way to get more control over what your model puts out. You'd be surprised how often you can get a dramatic boost in factual accuracy just by being more deliberate in how you ask for something.

Think of a well-crafted prompt as your first line of defense. Before you even think about investing the time and resources into more involved methods like Retrieval-Augmented Generation (RAG) or fine-tuning, you need to be sure you've pushed your prompting skills as far as they can go.

Can LLM Hallucinations Ever Be Completely Eliminated?

In a word, no. The way these models work is fundamentally probabilistic; they're built to predict the next most likely word in a sequence, not to query a database of facts. Because of this, a small risk of hallucination will always be part of the equation.

The goal isn't to chase the impossible dream of 100% elimination. Instead, you should focus on effective mitigation. It's all about reducing the frequency and impact of hallucinations to a level that's acceptable and predictable for your specific application.

This means building a robust system, often with several layers of defense, and having a process for continuously monitoring and correcting errors when they pop up.

Is RAG Better Than Fine-Tuning for Reducing Hallucinations?

This is a really common question, but it’s a bit like asking if a hammer is better than a screwdriver. They’re both great tools, but they’re designed for different jobs. Neither one is universally "better"—they actually work incredibly well together.

Here’s a practical way to think about it:

- Use RAG when… you need to ground your model in specific, verifiable, and constantly changing information. Think of things like your company's latest support articles, current product specs, or real-time inventory data. It's fantastic for this because updating a document in your RAG knowledge base is much easier than re-training a model.

- Use Fine-Tuning when… you need to teach the model a particular style, tone, or a complex way of reasoning that's hard to explain in a prompt. This is how you embed deep domain expertise or get the model to adopt a very specific persona.

In high-stakes, complex systems, the best approach is often a hybrid one. You can use RAG to feed the model the latest, most accurate facts, while a fine-tuned model knows exactly how to interpret and communicate that information like a seasoned expert.

What’s the First Step if My Live AI Application Starts Hallucinating?

When you spot a hallucination in a live system, you need a calm, structured plan. The key is to act quickly to maintain user trust without panicking.

Here’s a simple three-step response I recommend:

- Log and Analyze. Your immediate first step is to capture the user's input and the model's bad output. Keep a running log of these incidents. This data is pure gold because it helps you spot patterns. Are hallucinations clustering around a certain topic? A specific type of question? This is your starting point for a real fix.

- Deploy a Quick Fix. You can't always roll out a major system update on the spot. Your priority is to stop the problem from continuing. This is where prompt engineering comes in handy again. You can often push a quick update to your system prompt, adding a guardrail that tells the model to be more cautious about the problem area or to explicitly say when it doesn't know something.

- Plan a Long-Term Solution. Once the immediate fire is out, use the data you logged to figure out a more permanent solution. If you identified a pattern, the fix might be updating a specific document in your RAG system, adding new examples to your fine-tuning dataset, or tweaking your evaluation metrics to catch this kind of error before it ever gets to a user.

Following a process like this turns a stressful incident into a valuable feedback loop that makes your application stronger over time.

Ready to create better, more effective prompts that reduce hallucinations from the start? With Promptaa, you can organize, refine, and share prompts that deliver more accurate results. Build your library of high-quality prompts and supercharge your AI interactions today.