How to Create Effective AI Prompts for Better Results

Crafting a great AI prompt is all about giving the model clear, contextual, and goal-oriented instructions. It's not about chasing the newest, shiniest AI model; it's about mastering the art of the ask.

Why Better Prompts Beat Better Models

Ever asked a super-smart AI a question and gotten back a bland, useless answer? It’s a common frustration, and it highlights a core truth of working with generative AI: the quality of what you get out is directly tied to the quality of what you put in.

Many people think the secret to better AI results is simply upgrading to the latest, most powerful model. And while new models are more capable, they aren't a silver bullet. The real magic happens when you learn how to write a better prompt.

Think of an AI as a brilliant but incredibly literal assistant. If you give it a vague command like, "write about marketing," you'll get a vague, textbook-style summary. The AI has no context for what you really need, who it's for, or what you want to achieve. It did what you asked, but the result is pretty much worthless.

The Overlooked Power of Prompting

The gap between a mediocre AI response and a brilliant one is almost always in the details you provide. A well-constructed prompt is like a detailed creative brief; it gives the AI all the ingredients it needs to cook up something great.

This isn't just a gut feeling—it's backed by data.

Researchers from MIT Sloan ran a large-scale experiment and uncovered something fascinating. They found that when people switched to a more advanced AI model, only about half of the performance gain came from the better model itself. The other half came from people getting better at prompting: they learned to adapt, add more context, and rephrase their requests.

The takeaway is simple: if you invest in the latest AI tools without also building your prompt engineering skills, you leave a large share of the potential gains on the table. Your ability to communicate clearly with the AI is a massive force multiplier.

From Vague to Valuable: An AI Prompt Transformation

Let's look at how this plays out with a real-world example. Imagine you need a social media post for a new app launch. A simple change in your approach can make all the difference.

The table below breaks down the anatomy of a prompt, showing how adding specific elements transforms a weak request into a powerful instruction.

| Element | Ineffective Prompt Example | Effective Prompt Example |
|---|---|---|
| Role/Persona | (None provided) | Act as a social media marketing expert... |
| Target Audience | (None specified) | ...targeting busy professionals on LinkedIn. |
| Task & Format | Write a social media post... | Create an engaging post (under 150 words)... |
| Context & Key Info | ...about our new productivity app. | ...announcing our new app, 'FocusFlow.' Highlight its key features: AI-powered task prioritization and distraction blocking. |
| Goal & CTA | (None provided) | End with a question to encourage comments and a link to our website. |

See the difference? The first prompt is a gamble. The second is a direct order. It provides a persona, an audience, a specific task, critical information, and a clear call to action. This eliminates the guesswork and guides the AI toward creating content that isn't just written but is strategically crafted.

If you're interested in the technology that makes this all possible, you can dive deeper into large language models vs. generative AI.

Throughout this guide, we'll keep breaking down these core principles to help you move from being a casual user to a skilled prompt engineer who gets exactly what they want from AI.

The Anatomy of a High-Performing Prompt

To consistently get great results from AI, you have to stop thinking of prompts as simple questions. Start seeing them as structured instructions. A truly effective prompt isn't just a request; it's a detailed brief that leaves almost nothing to chance. Forgetting a key element is like asking a chef to cook without telling them the main ingredient.

The best prompts are built from several distinct pieces that all work together. When you consciously include each one, you clear up any ambiguity and steer the AI exactly where you want it to go. This turns prompting from a frustrating guessing game into a repeatable skill.

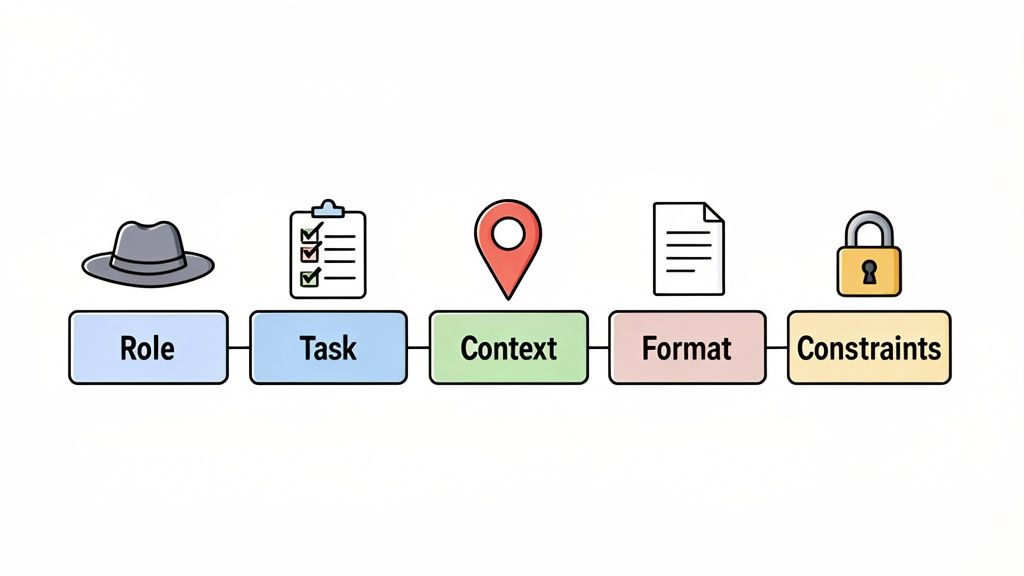

Let's break down the five core pillars that I use to build prompts that actually work.

Assigning a Specific Role

This one is my favorite trick. Giving the AI a role or persona is the fastest way to influence its tone, style, and expertise. Instead of letting it default to its standard "helpful assistant" voice, you can frame its knowledge before it even starts writing.

Think of it as casting an actor for a part. You wouldn't ask a stand-up comedian to deliver a serious legal argument, right? The same logic applies here.

For example, instead of this:

"Explain the benefits of content marketing."

Try this:

"Act as a seasoned digital marketing consultant explaining the long-term ROI of content marketing to a skeptical small business owner."

See the difference? That single instruction immediately sets the tone (professional, persuasive), establishes the audience (a non-expert), and focuses the expertise (digital marketing, ROI).

Defining a Clear Task

The task is the verb of your prompt—it’s the specific action you want the AI to perform. I can't stress this enough: vague tasks lead to vague, useless outputs.

Don't just say "write about" or "discuss." Get in the habit of using strong action verbs that describe the exact end product you have in mind.

- Analyze this customer feedback and identify the top three complaints.

- Summarize the attached transcript into five key bullet points.

- Draft a professional email to a client who missed a payment deadline.

- Compare the features of Product A and Product B in a table format.

Each of these commands is direct and unambiguous. The AI knows precisely what its job is, which stops it from wandering off on weird, irrelevant tangents.

Key Takeaway: The more specific your task, the less the AI has to guess. It can focus all its processing power on executing your command instead of trying to figure out what you meant.

Providing Essential Context

Context is the background information—the "why" behind your request. In my experience, this is the single most powerful element for turning a decent prompt into a fantastic one. Without it, the AI is just working in a vacuum.

This is where you drop in all the crucial details:

- Who is the target audience for this content?

- What is the ultimate goal? (e.g., to persuade, to inform, to sell)

- Is there any key information, data, or brand guidelines to include?

- What's the background of the project or problem?

Imagine asking a developer for code. A bad prompt is "Write a Python script." A great prompt provides context: "Write a Python script to scrape the titles from a webpage. The script needs to handle potential timeout errors and save the titles to a CSV file." That context makes all the difference.

Specifying the Output Format

Telling the AI how you want the information presented is just as important as telling it what to provide. If you skip this, the AI will almost always default to a standard wall of text, which you'll then have to spend time fixing.

Be explicit about the structure you need for the final output. It will save you a ton of editing time later.

Here are a few examples of formatting instructions I use all the time:

- "Present the answer as a Markdown table."

- "Write the output as a JSON object."

- "Use H2 headings for main sections and bullet points for lists."

- "Create a numbered list of actionable steps."
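If you ask for JSON, it helps to confirm the reply really is JSON before you build on it. The sketch below uses Python's standard `json` module; the reply string and the `title`/`bullets` keys are hypothetical stand-ins for whatever your prompt requests, and the function name `parse_json_reply` is our own.

```python
import json

# Hypothetical model reply to a prompt that said:
# "Write the output as a JSON object with 'title' and 'bullets' keys."
reply = '{"title": "Q3 Summary", "bullets": ["Revenue up", "Churn down"]}'


def parse_json_reply(text: str, required: set) -> dict:
    """Parse a model reply as JSON and confirm the expected keys are present.

    Raises ValueError when the reply is not a JSON object or is missing keys,
    which is your cue to tighten the format instruction and try again.
    """
    try:
        data = json.loads(text)
    except json.JSONDecodeError as err:
        raise ValueError(f"Reply is not valid JSON: {err}") from err
    if not isinstance(data, dict):
        raise ValueError("Reply is valid JSON but not an object")
    missing = required - set(data)
    if missing:
        raise ValueError(f"Reply is missing keys: {sorted(missing)}")
    return data


result = parse_json_reply(reply, {"title", "bullets"})
print(result["title"])  # Q3 Summary
```

If parsing fails, the usual fix is on the prompt side: restate the exact keys you expect, or paste an example object into the prompt.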

For a deeper dive, explore our guide covering AI prompt best practices to see how formatting can dramatically improve your results.

Setting Clear Constraints and Rules

Finally, constraints are the guardrails that keep the AI on track. Think of them as the rules of the road—the limitations and boundaries you set to prevent unwanted behavior and really dial in the output.

Constraints can be about what to do or, just as importantly, what not to do.

- "Keep the response under 200 words."

- "Do not use any technical jargon."

- "Write in a formal and professional tone."

- "Avoid mentioning our competitors by name."
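Some constraints, like a word limit or a banned-names list, can also be checked mechanically once the AI responds, so you are not relying on eyeballing the output. This is a minimal sketch under simple assumptions: words are counted as whitespace-separated tokens, and both `check_constraints` and the competitor name "Acme" are hypothetical.

```python
import re


def check_constraints(text: str, max_words: int, banned_terms: list) -> list:
    """Return human-readable constraint violations (an empty list means none)."""
    problems = []
    word_count = len(text.split())  # rough count: whitespace-separated tokens
    if word_count > max_words:
        problems.append(f"{word_count} words (limit {max_words})")
    for term in banned_terms:
        # Whole-word, case-insensitive match, so "acme" is caught as well as "Acme".
        if re.search(rf"\b{re.escape(term)}\b", text, flags=re.IGNORECASE):
            problems.append(f"mentions banned term: {term!r}")
    return problems


# "Acme" is a hypothetical competitor name used only for illustration.
draft = "FocusFlow beats Acme at task prioritization."
print(check_constraints(draft, max_words=200, banned_terms=["Acme"]))
# ["mentions banned term: 'Acme'"]
```

Tone rules such as "Write in a formal and professional tone" don't reduce to a simple check like this; those still need a human read.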

By combining all five of these elements—Role, Task, Context, Format, and Constraints—you build a comprehensive instruction that gives the AI everything it needs to nail your request on the first try.
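One way to make sure none of the five elements gets forgotten is to treat them as fields you must fill in. The sketch below is a hypothetical `PromptSpec` helper, not a standard tool; the labeled-section layout is just one reasonable choice, and the example values reuse the FocusFlow prompt from earlier.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """One field per pillar: Role, Task, Context, Format, Constraints."""
    role: str
    task: str
    context: str
    output_format: str
    constraints: list = field(default_factory=list)

    def render(self) -> str:
        """Join the pillars into a single prompt with one labeled section each."""
        lines = [
            f"Role: {self.role}",
            f"Task: {self.task}",
            f"Context: {self.context}",
            f"Format: {self.output_format}",
        ]
        if self.constraints:
            lines.append("Constraints:")
            lines.extend(f"- {rule}" for rule in self.constraints)
        return "\n".join(lines)


spec = PromptSpec(
    role="Act as a social media marketing expert.",
    task="Create an engaging LinkedIn post announcing our new app, 'FocusFlow.'",
    context="The audience is busy professionals. Key features: AI-powered task "
            "prioritization and distraction blocking.",
    output_format="A single post that ends with a question to encourage comments.",
    constraints=["Keep the post under 150 words.", "Do not use any technical jargon."],
)
print(spec.render())
```

Because every field is required except the constraints, Python refuses to build a spec with a missing role, task, context, or format.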

Using Frameworks for Predictable AI Results

Trying to nail the perfect prompt from scratch every single time is a recipe for frustration. It's like building a house without a blueprint—sure, you might get a wall up, but the whole structure will be shaky. If you want consistent, high-quality results from AI, you need to stop thinking in terms of one-off commands and start using reusable frameworks.

Frameworks are just pre-built structures that give the AI guardrails. They guide its response, so you get the kind of output you actually need, every single time. It’s a simple shift, but it moves you from basic prompting to a much more reliable and efficient way of working with AI. Instead of staring at a blank page, you start with a proven formula.

Give the AI Examples with Few-Shot Prompting

One of the most effective frameworks is also one of the simplest: Few-Shot Prompting. The idea is that you show the AI a few examples of what you want before you ask it to do the work. The model doesn't get retrained; it picks up your specific style, tone, and format from the examples in the prompt itself.

Think of it like getting a new junior employee up to speed. You wouldn’t just say, "Go write a marketing email." You’d show them a few of your best-performing emails first so they could internalize the brand voice and see what works.

Let’s see how this plays out. Say you need a punchy subject line for a new productivity app.

- Without Examples (Zero-Shot):

"Write a subject line for our new productivity app."

The AI might give you: "Check Out Our New App", which is bland and totally forgettable.

- With Examples (Few-Shot):

"Write a subject line for our new productivity app. Match this tone and style:
Example 1: Finally, a to-do list that does the work for you.
Example 2: Is your calendar secretly running your life?
Example 3: Reclaim 5 hours of your week. Seriously.
Your turn:"

Now the AI gives you: "Stop letting busywork win your day." Much better, right? It's benefit-driven and captures the exact vibe you were going for.

Just three little examples gave the AI a clear pattern to follow, and the result is worlds apart.
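Because a few-shot prompt is just an instruction plus examples in a fixed order, it is easy to generate from a list you maintain. A minimal sketch, assuming plain-text prompts; `build_few_shot_prompt` is a hypothetical helper that reproduces the subject-line prompt above.

```python
def build_few_shot_prompt(instruction: str, examples: list) -> str:
    """Instruction first, then numbered examples, then an open slot for the AI."""
    parts = [f"{instruction} Match this tone and style:"]
    for i, example in enumerate(examples, start=1):
        parts.append(f"Example {i}: {example}")
    parts.append("Your turn:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Write a subject line for our new productivity app.",
    [
        "Finally, a to-do list that does the work for you.",
        "Is your calendar secretly running your life?",
        "Reclaim 5 hours of your week. Seriously.",
    ],
)
print(prompt)
```

Swapping in your own best-performing examples is then a one-line change, and the layout stays identical every time.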

Make the AI "Show Its Work" with Chain-of-Thought

Another go-to framework is Chain-of-Thought (CoT) prompting. This one is a lifesaver for any task that involves logic, math, or multiple steps. Instead of just asking for the final answer, you tell the AI to "think step-by-step."

This simple instruction prompts the AI to break the problem into steps, which often reduces the odds of it making a silly math error or just making stuff up.

Forcing an AI to show its work makes the final answer more accurate. It’s a simple trick that improves reasoning by making the AI follow a logical path instead of just jumping to a conclusion.

Imagine a business analyst needs a quick calculation.

- Without CoT:

"If a company has 5,000 users and a 4% monthly churn rate, and it acquires 500 new users this month, what is the net user growth?"

The AI could easily just spit out a number. If it’s wrong, you have no idea why.

- With CoT:

"If a company has 5,000 users and a 4% monthly churn rate, and it acquires 500 new users this month, calculate the net user growth. Show your reasoning step-by-step."

AI Output:

- Calculate users lost to churn: 5,000 users * 0.04 = 200 users lost.

- Calculate net change: 500 new users - 200 lost users = 300 net new users.

- Final Answer: The net user growth is 300 users.

The second prompt is much more trustworthy because you can see the logic. If there were a mistake, you could spot exactly which step went wrong.
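Since the model laid out its steps, each one can be checked independently. The few lines below redo the same arithmetic in Python as a sanity check. Like the AI's answer, this simple model assumes none of this month's 500 new users churn in the same month, and the function name is our own.

```python
def net_user_growth(users: int, churn_percent: int, new_users: int) -> int:
    """Net monthly change in users, mirroring the AI's two steps.

    The churn rate is passed as a whole-number percent (4 for 4%) so the
    calculation stays in integers; fractional users are rounded down.
    """
    lost = users * churn_percent // 100  # Step 1: users lost to churn
    return new_users - lost              # Step 2: net change


print(net_user_growth(5_000, 4, 500))  # 300
```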

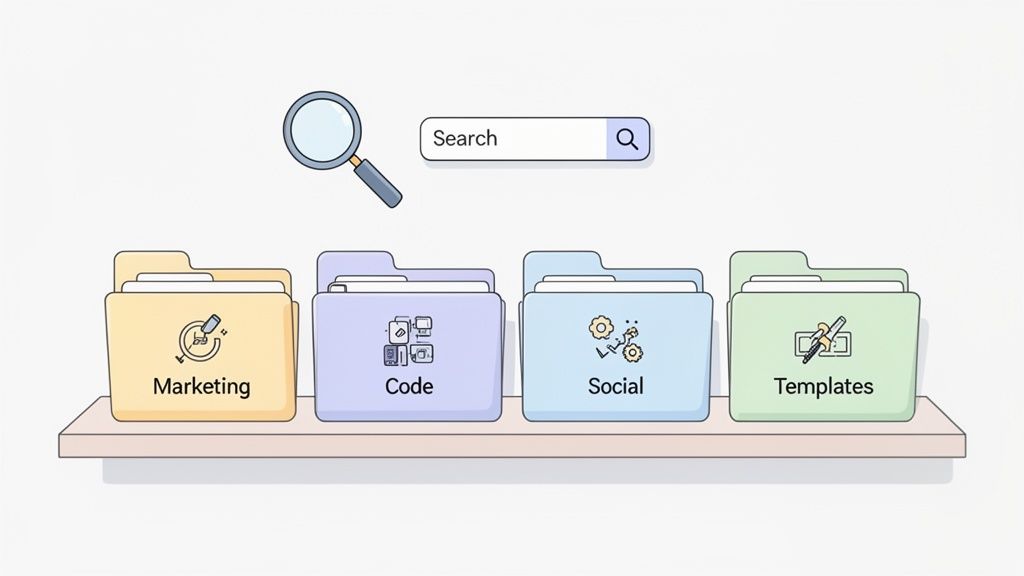

Build a Library of Your Best Prompts

The real magic happens when you start saving and reusing these frameworks. Instead of typing out your Few-Shot examples every time, you build a library of templates for your most common tasks. This is how you and your team can really start to scale up your AI use.

A content team, for instance, could have ready-to-go templates for:

- Generating blog post outlines

- Drafting social media posts in the company voice

- Summarizing articles into key bullet points

- Writing product descriptions that hit all the right notes

This is where a tool like Promptaa really shines. Forget about keeping your best prompts in a messy Google Doc; you can organize them into a clean, searchable library that everyone can use.

An organized library means you stop reinventing the wheel. Everyone on the team can grab a proven prompt and generate work that consistently meets a high standard.
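At its simplest, a reusable template is a prompt with named blanks. This sketch uses Python's built-in `string.Template`; the template names and wording are hypothetical examples, not Promptaa's format or any other tool's API.

```python
from string import Template

# Hypothetical team templates: each name maps to a prompt with $placeholders.
TEMPLATES = {
    "blog-outline": Template(
        "Act as a senior content strategist. Create a blog post outline about "
        "$topic for $audience. Use H2 headings for main sections."
    ),
    "article-summary": Template(
        "Summarize the article below into $n key bullet points.\n\n$article"
    ),
}


def fill(name: str, **values: str) -> str:
    """Look up a template by name and fill in every placeholder."""
    return TEMPLATES[name].substitute(**values)


print(fill("blog-outline", topic="remote onboarding", audience="HR managers"))
```

`substitute` raises a `KeyError` if a blank is left unfilled, which is a cheap guard against sending a half-finished prompt.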

These frameworks are the foundation for getting real work done. They're what power specialized tools like an AI-powered LinkedIn post generator, which needs structured input to create good content. And if you really want to systematize your workflow, you can even learn how to use an AI prompt to create mini-frameworks for your specific needs.

Ultimately, by adopting these structured approaches, you turn AI from a fun but unpredictable toy into a reliable workhorse you can count on.

How to Test and Refine Your Prompts

Your first prompt is rarely your best one. The real magic happens in the refinement stage. Think of your initial prompt as a first draft—it’s a solid starting point, but it almost always needs a few tweaks to go from "decent" to "wow, this is exactly what I wanted."

The key is to shift from a "one-and-done" mindset to a continuous loop of testing, learning, and improving. This iterative approach is what separates amateurs from the pros who get consistently great results from AI. It’s all about making small, intentional changes and seeing how they affect the AI’s response.

Troubleshooting Common AI Frustrations

If you’ve used AI for more than five minutes, you’ve probably run into some common roadblocks. Maybe the output is too generic, riddled with factual errors (hallucinations), or formatted in a way that’s completely unusable. Before blaming the AI, check the prompt: more often than not, that's where the problem starts.

Here are a few common problems I see and how to start fixing them:

- Generic Responses: If the output is bland and lacks depth, your prompt was likely too vague. Try adding more context, assigning a more specific persona, or even providing examples of the tone you're after.

- Factual Errors: To cut down on hallucinations, you have to give the AI source material. You can paste in an article or report and specifically instruct it to "only use the information provided" when answering your question.

- Weird Formatting: Ever get a giant wall of text? You probably forgot to specify the format. Be explicit. Say things like, "Format the output as a Markdown table with three columns," or "Use H2 headings for each section and bullet points for key details."

A huge part of refining your prompts is learning how to avoid AI detection and humanize your content. This helps ensure the final output feels authentic and actually connects with your audience, which is what this whole process is about.

The Power of Iterative Prompting

Here’s my best piece of advice: change only one variable at a time. If you rewrite the entire prompt at once, you'll have no idea which specific change made the difference. This systematic approach is the core of effective prompt engineering.

Think of it like A/B testing an email headline. You create two versions with one small difference and see which one performs better. You can do the exact same thing with your prompts.

I like to keep a simple log of my prompt versions and the AI's responses. Just a quick note in a document or spreadsheet can reveal patterns over time, helping you understand what works best for specific tasks.

For example, you could test:

- Version A: "Summarize this article."

- Version B: "Summarize this article for a busy executive, focusing on the three most important takeaways. Present them as a numbered list."

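Version B will almost certainly give you a more useful, targeted result. Notice, though, that it changes three things at once: the audience, the focus, and the format. To learn which of those made the difference, test them one at a time (add only the audience, then only the numbered-list format) and compare each against Version A.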
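Version B actually differs from Version A in several components at once. One way to keep yourself honest is to store each prompt version as named components and compare them before you test. The component names and the way Version B is split up below are our own hypothetical breakdown.

```python
def changed_components(old: dict, new: dict) -> list:
    """Names of prompt components that differ between two versions, sorted."""
    keys = set(old) | set(new)
    return sorted(k for k in keys if old.get(k) != new.get(k))


def render(components: dict) -> str:
    """Join the components into the prompt text you would actually send."""
    return " ".join(components.values())


version_a = {"task": "Summarize this article."}
version_b = {
    "task": "Summarize this article.",
    "audience": "Write for a busy executive.",
    "focus": "Focus on the three most important takeaways.",
    "format": "Present them as a numbered list.",
}
print(changed_components(version_a, version_b))  # ['audience', 'focus', 'format']

# A cleaner A/B test changes exactly one component:
version_a2 = {**version_a, "audience": "Write for a busy executive."}
print(changed_components(version_a, version_a2))  # ['audience']
```

Logging each rendered prompt next to its notes in your spreadsheet then tells you precisely which change you were testing.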

Using Metrics for High-Stakes Prompts

For everyday tasks, "looks good to me" is a perfectly fine metric. But when you’re working on business-critical applications, you need a more structured approach. This is where systematic prompt engineering comes in, using templates and objective metrics to get more reliable results.

For high-stakes work, many teams I know maintain a ‘prompt testing dataset’ with known inputs and their expected outputs. This lets them benchmark every prompt change against the same cases and catch regressions, such as new hallucinations, before they reach real users.
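A prompt testing dataset can start as a short list of inputs, each paired with something a correct answer must contain. The sketch below assumes a `model` function that takes a prompt string and returns the reply; `fake_model` is a stand-in so the code runs offline, and the test cases are illustrative, not real benchmark data.

```python
from typing import Callable

# Known inputs, each paired with text a correct answer must contain.
TEST_CASES = [
    {"input": "What is 5,000 * 0.04?", "must_contain": "200"},
    {"input": "Net growth if 500 users join and 200 churn?", "must_contain": "300"},
]


def run_benchmark(prompt_template: str, model: Callable[[str], str], cases: list) -> float:
    """Fill the template with each input, call the model, and return the pass rate."""
    passed = 0
    for case in cases:
        reply = model(prompt_template.format(input=case["input"]))
        if case["must_contain"] in reply:
            passed += 1
    return passed / len(cases)


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real API call.
    return "The answer is 200." if "0.04" in prompt else "I am not sure."


print(run_benchmark("Answer concisely: {input}", fake_model, TEST_CASES))  # 0.5
```

Substring checks are crude, but they are enough to spot when a prompt change makes previously correct answers disappear. Rerun the same cases after every edit and compare pass rates.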

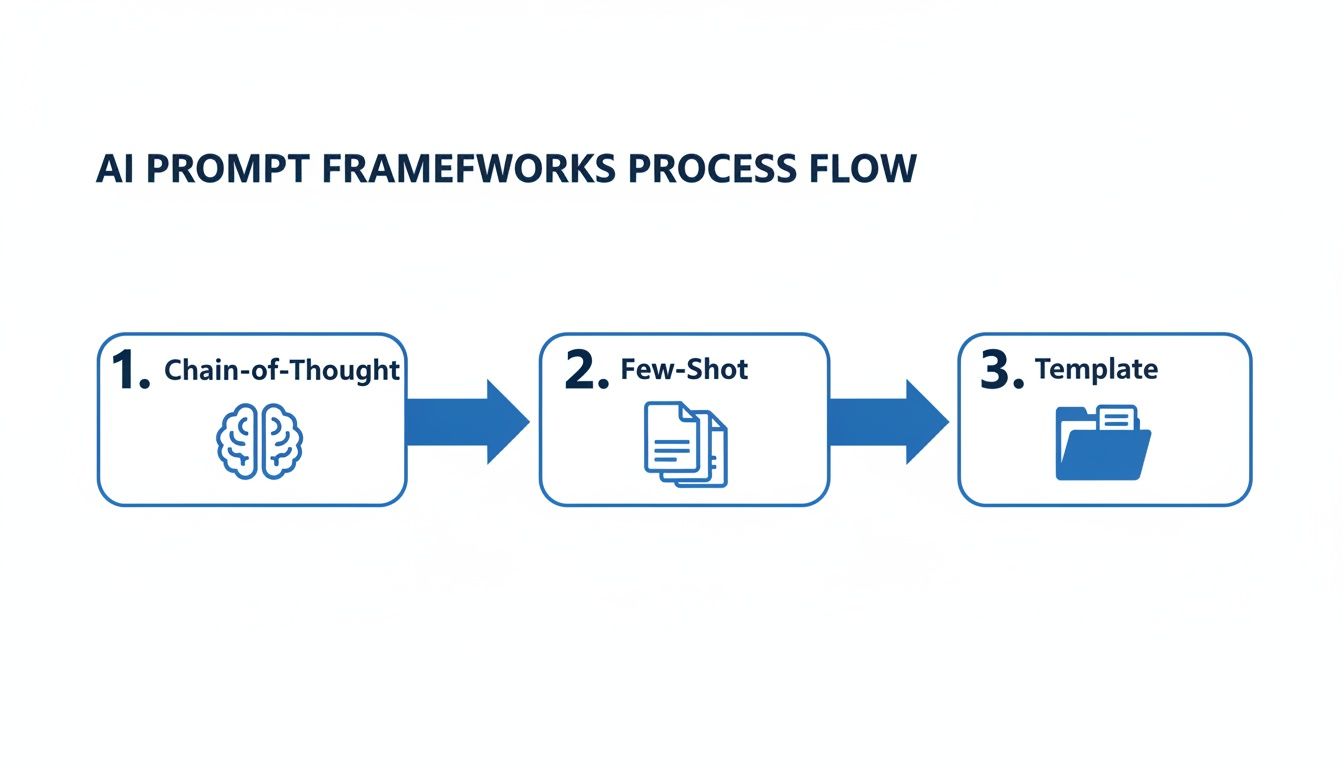

The flowchart above shows how more structured frameworks, like Chain-of-Thought and Few-Shot prompting, fit into this systematic process.

As you can see, moving from simple requests to structured frameworks is a key step in creating more reliable and effective prompts.

Refining for Tone and Style

Beyond just getting the facts right, you can also refine your prompts to perfect the AI’s tone and style. This is where subtle word choices in your prompt can have a huge impact.

Check out these minor tweaks and the difference they make:

| Initial Phrase in Prompt | Refined Phrase for Better Tone | Expected Outcome |
|---|---|---|
| Write a paragraph... | Draft a persuasive paragraph... | Shifts the tone from purely informative to sales-oriented. |
| Explain the concept. | Explain the concept like I'm 15. | Simplifies the language and encourages relatable analogies. |
| Use a professional tone. | Use an authoritative but approachable tone. | Balances expertise with readability, making it less stuffy. |

Each of these small changes nudges the AI toward a more specific personality. Don’t be afraid to experiment with different adjectives and adverbs in your instructions. It’s a low-effort way to fine-tune the output until it sounds just right.

Building an Organized Prompt Library That Saves Time

So, you’ve spent hours crafting the perfect prompt. It’s a work of art—nuanced, specific, and it delivers exactly what you need every single time. But what good is it if you can't find it next week?

Crafting great AI prompts is only half the job. The other half is building a smart system to manage them. This is where you shift from one-off creative sparks to a scalable, efficient process. Without a system, you’re just reinventing the wheel every time you open a chat window, trying to remember that magic phrase you stumbled upon last Tuesday.

A central prompt library is the answer. Think of it as your team’s single source of truth for your best work.

This becomes absolutely essential when you’re working with a team. Imagine a marketing department where everyone has their own slightly different version of a "social media post" prompt. The result? A mess of inconsistent brand voice, unpredictable quality, and a ton of wasted time. A shared library gets everyone on the same page, working from the same proven templates.

How to Structure Your Prompt Library

There's no single "right" way to organize your library. The best system is simply the one you and your team will actually stick with. The main goal here is to make finding the right prompt second nature.

A simple, effective method is to categorize prompts by their function or project. This creates a logical folder structure that anyone can understand instantly.

Here are a few common ways people set things up:

- By Task: Group prompts based on what they do (e.g., "Summarize Text," "Generate Blog Outlines," "Write Python Code").

- By Project or Client: If you juggle multiple projects, keeping all the related prompts in a dedicated folder ensures total consistency for that specific work.

- By Department: For bigger teams, organizing by department like "Marketing," "Sales," or "Engineering" helps keep things from getting cluttered.

- By AI Model: Let's be real, some prompts just work better on certain models. You might have a folder for your go-to GPT-4 prompts and another for Claude 3 Opus.

Within each category, get specific with your naming conventions. "Social Media Post Prompt" is okay, but "LinkedIn Post Prompt - Thought Leadership - Authoritative Tone" is infinitely better. It tells you everything you need to know without even opening it.
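At its core, a prompt library can be modeled as named entries with a category and tags, plus a search. This in-memory sketch is hypothetical (the class names and fields are our own, not how Promptaa or any other tool stores prompts) and simply shows the categories and descriptive names described above working together. The prompt texts ending in "..." are placeholders.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PromptEntry:
    name: str      # descriptive name that explains the prompt at a glance
    category: str  # top-level grouping: task, project, department, or model
    text: str
    tags: set = field(default_factory=set)


class PromptLibrary:
    """In-memory prompt library with name search plus category and tag filters."""

    def __init__(self) -> None:
        self._entries: List[PromptEntry] = []

    def add(self, entry: PromptEntry) -> None:
        self._entries.append(entry)

    def search(self, query: str = "", category: Optional[str] = None,
               tag: Optional[str] = None) -> List[PromptEntry]:
        """Case-insensitive substring match on the name; other filters are exact."""
        q = query.lower()
        return [
            e for e in self._entries
            if q in e.name.lower()
            and (category is None or e.category == category)
            and (tag is None or tag in e.tags)
        ]


lib = PromptLibrary()
lib.add(PromptEntry("LinkedIn Post Prompt - Thought Leadership - Authoritative Tone",
                    "Marketing", "Act as a B2B thought leader...", {"linkedin", "social"}))
lib.add(PromptEntry("Python Code Review - Security Focus",
                    "Engineering", "Act as a senior Python reviewer...", {"code"}))
print([e.name for e in lib.search("linkedin")])
```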

The Business Case for Systematized Prompting

Organizing prompts isn't just about personal neatness; it's a powerful business strategy. As companies move AI from small experiments to everyday tools, the productivity gains are becoming impossible to ignore.

Global surveys show that 65–78% of organizations are already using or experimenting with AI. The reported efficiency gains are large, too: in one widely cited developer survey, 88% of programmers said they felt more productive, and consultants using AI finished tasks about 25% faster with 40% higher-quality results. But here’s the catch: these gains depend on how well people actually use the tools, and systematizing your prompting with a well-organized library is one practical way to make good usage the norm across a team. You can dig into more of these AI adoption and productivity findings from Stanford's AI Index Report.

By creating a shared prompt library, you turn an individual skill into a scalable team asset. It’s the difference between one person knowing how to fish and the entire village having a fleet of GPS-equipped fishing boats.

Tools for Building Your Library

Sure, you could start with a simple spreadsheet or a Google Doc, and for a solo user, that might work for a while. But for teams, dedicated tools offer way more power. Platforms like Promptaa are built specifically for this.

They bring features to the table that go way beyond just storing text:

- Advanced Categorization and Tagging: So you can sort, filter, and find exactly what you need in seconds.

- Team Sharing and Collaboration: Give your whole team access to a curated library, ensuring everyone is working from the best-of-the-best prompts.

- AI Enhancement: Some tools can even analyze your existing prompts and suggest tweaks to make them clearer and more effective.

At the end of the day, a well-organized prompt library does more than save a few minutes. It builds consistency, scales expertise across your team, and turns the art of prompt engineering into a repeatable science that drives real results.

Got Questions About Writing AI Prompts?

As you start getting your hands dirty with prompt engineering, you're going to have questions. Everyone does. It's just part of figuring out how to get these tools to work for you. Let's walk through a few of the most common hurdles people face when they're learning how to create effective AI prompts.

Getting a handle on these basics is key. Don't think of them as rigid rules, but more like a compass to help you point the AI in the right direction.

What's the "Right" Length for a Prompt?

Honestly, there's no magic word count. A good prompt is as long as it needs to be to get the job done—and not a word longer.

Instead of counting words, ask yourself: "Have I given the AI everything it needs to deliver what I want?"

- A quick request for a blog post title might just be a single, punchy sentence.

- But if you're asking for a detailed business analysis, you'll probably need a few paragraphs to lay out the background, provide key data points, and specify the format.

The real goal here is complete information. It's all about clarity, not hitting some arbitrary length.

The single biggest mistake I see people make is being too vague. They expect the AI to be a mind reader, but it can only work with what you give it. This inevitably leads to bland, generic results. When in doubt, add more detail.

Do I Need to Write Different Prompts for Different AI Models?

In short, yes. While the core principles—giving the AI a role, context, and clear instructions—are pretty universal, every AI model has its own quirks and strengths. Think of them as different employees; you wouldn't manage them all in exactly the same way.

For instance, some models really shine with a conversational, friendly tone. Others perform better when you give them structured, almost code-like commands. The only way to know for sure is to experiment. Try running the same prompt through a few different models and see what you get back. You’ll quickly get a feel for which tool is best for which task.

How Do I Stop the AI from Just Making Stuff Up?

AI "hallucinations"—where the model confidently states something that isn't true—are a real challenge. While you can't get the risk down to zero, you can definitely manage it.

The best way to keep an AI grounded is to give it trusted source material to work with.

- Copy and paste the text from a reliable article, a report, or an internal company document right into your prompt.

- Then, give it a direct order: "Answer the following question using only the information provided in the text above."

- You can even add a fallback command, like: "If the answer cannot be found in the provided text, respond with 'I do not have enough information to answer.'"

This builds a fence around the AI, steering it toward the facts you’ve provided instead of its massive (and sometimes flawed) training data. And always, always fact-check any critical information the AI produces. For any professional work, that final check is non-negotiable.
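If you use this grounding pattern often, it's worth wrapping it in a small helper so the wording never drifts. A minimal sketch: the function name, the `---` delimiters around the source, and the sample text are assumptions, while the instruction and fallback wording come straight from the steps above.

```python
# Exact fallback sentence from the grounding steps.
FALLBACK = "I do not have enough information to answer."


def build_grounded_prompt(source_text: str, question: str) -> str:
    """Wrap trusted source text and a question in 'use only this text' instructions."""
    return (
        "Source text:\n---\n"
        f"{source_text}\n---\n\n"
        "Answer the following question using only the information provided "
        "in the text above. If the answer cannot be found in the provided text, "
        f"respond with '{FALLBACK}'\n\n"
        f"Question: {question}"
    )


# Hypothetical internal note used as the trusted source.
prompt = build_grounded_prompt(
    "FocusFlow launched in March and supports distraction blocking.",
    "Does FocusFlow integrate with Slack?",
)
print(prompt)
```

Because the fallback is a fixed sentence, your own code can compare the reply against `FALLBACK` and route unanswered questions to a person.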

Tired of your best prompts getting lost in random notes and documents? With Promptaa, you can build a clean, searchable library that makes your prompt skills a real asset for you and your team. Start building your library for free and feel the difference.