Gemma 3 12B: A Practical Guide to Google's 12B Model

Google's Gemma 3 12B is a fresh take on open models, built to understand more than just words. It can process images, code, and documents, making it a powerful tool for developers and tech enthusiasts looking to build some seriously smart applications.

What Is the Gemma 3 12B Model

Imagine a top-tier research assistant who suddenly gains the ability to see. That's a good way to think about Gemma 3 12B. While older models were stuck just reading text, this one can interpret visual information right alongside it, which unlocks a whole new world of possibilities. It’s the latest addition to Google's open model family, sharing some of the same DNA as their premier Gemini models.

So what's the "12B" all about? It stands for 12 billion parameters. Think of parameters as the internal knobs and dials the model adjusts to make predictions and come up with responses. This size hits a real sweet spot—it’s powerful enough for heavy-duty tasks but doesn't demand the massive, expensive hardware that larger, proprietary models do.

A New Generation of Accessible AI

The real magic of Gemma 3 12B is that it's both powerful and open. Because it's an open model, developers can freely tinker with it, customize it, and run it wherever they want. This kind of access is what really pushes the whole industry forward.

When powerful models like Gemma 3 12B are made available to everyone, it lowers the bar for creating sophisticated AI. Suddenly, smaller teams and even solo creators can get in on the action and build amazing things.

The model was designed with a few key upgrades that make it especially useful:

- Multimodal Capabilities: It's a natural at handling text and images together. You can feed it a picture and ask questions about it or have it analyze visual data from a report.

- Expanded Context: It can juggle a much larger amount of information in one go. This is a game-changer for tasks like summarizing a dense annual report or debugging a huge chunk of code.

- Enhanced Performance: Even with its manageable size, it holds its own against bigger models, often outperforming them on standard industry tests for logic and accuracy.

To see what sets Gemma 3 12B apart, it helps to understand how AI language models work under the hood. Different model families are built to solve different kinds of problems, and our guide on the different types of LLMs can give you a better sense of where Gemma fits in the grand scheme of things.

A Look Under the Hood: Core Architecture and Capabilities

So, what actually makes Gemma 3 12B tick? To really get a feel for why it’s a big deal, we have to pop the hood and look at the engine. The model's real power comes from a few key design choices that lead to some seriously useful benefits for anyone building with it.

First up, Gemma 3 12B is built with 12 billion parameters. You can think of parameters like the tiny connections in a brain that learn and store information. This number isn't random; it hits a sweet spot. It's beefy enough to handle complex reasoning but doesn't demand the kind of eye-watering, expensive hardware that much larger models need.

To fuel all those parameters, the model was trained on a staggering 12 trillion tokens of data. A "token" is basically a piece of a word, and training on such a massive and diverse dataset—including text, images, and code—gives the model a rich, deep understanding of how we communicate, think, and create.

Let's take a quick look at the core specs that define Gemma 3 12B's power.

Gemma 3 12B Key Specifications at a Glance

This table gives you a snapshot of the model's fundamental technical details.

| Specification | Detail |
|---|---|
| Model Parameters | 12 Billion |
| Training Dataset Size | 12 Trillion Tokens |
| Max Context Window | 128,000 Tokens |
| Capabilities | Text, Image, and Code |
| Multilingual Support | Pretrained on over 140 languages |

These numbers aren't just for show; they directly translate into what you can do with the model. Let's break down what a couple of these mean in practice.

The Power of a Massive Context Window

One of the standout features here is the enormous 128,000-token context window. A context window is basically the model's short-term memory. It determines how much information the model can keep in mind at once when you give it a task.

To give you a sense of scale, 128,000 tokens is roughly the equivalent of a 300-page book. This means you can hand the model a massive document and it can process the entire thing in one go. This completely changes the game for tasks that rely on understanding long, detailed source material.

Think of it this way: with a huge context window, the model isn't just reading one sentence at a time. It’s seeing the whole story, which allows it to maintain plot, track characters, and connect ideas from chapter one to the final page without getting lost.

For instance, a developer could drop in an entire codebase and ask it to hunt for bugs. Or a financial analyst could upload a company's lengthy annual report and ask for a summary of the key risks mentioned. Because the model holds all that context, its answers are far more accurate and insightful.
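Before handing the model a long document, it can help to estimate whether it is likely to fit. The sketch below assumes a rough average of four characters per token for English text, which is a common rule of thumb and not a property of Gemma's actual tokenizer. The function names and the amount reserved for the reply are illustrative choices, not part of any official API.

```python
# Rough pre-flight check: will this text fit in a 128,000-token window?
# ASSUMPTION: ~4 characters per token is only a heuristic for English text.
# The real count depends on the model's tokenizer.

CONTEXT_WINDOW_TOKENS = 128_000
CHARS_PER_TOKEN = 4  # heuristic, not a Gemma constant


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate (rounded up) for a piece of text."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text: str, reserved_for_output: int = 2_000) -> bool:
    """True if the estimated prompt size still leaves room for the reply."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    report = "word " * 90_000  # stand-in for a long report
    print(estimate_tokens(report), fits_in_context(report))
```

For anything close to the limit, counting tokens with the model's real tokenizer is the safer choice, since the heuristic can be off by a wide margin for code or non-English text.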

True Multimodal Understanding

Gemma 3 12B isn't just a text model with image capabilities bolted on; it was designed from day one to process and understand text and images together. Its architecture has an image encoder built in, allowing it to "see" and reason about what's in a picture.

This is a major advance, especially since the model can handle both text and image inputs within that 128,000-token context window. That's a 16-fold increase over earlier Gemma models, which were limited to roughly 8,000 tokens.

This expanded capacity means you can feed it hundreds of images or multiple long documents in a single prompt. For things like document analysis or visual Q&A, it can handle up to 131,072 input tokens (128 × 1,024), which is in line with the 300-page figure mentioned earlier. If you want to dive deeper, you can see detailed model statistics and benchmarks.
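To see where "hundreds of images" comes from, here is a back-of-the-envelope budget. It assumes each image costs a fixed 256 tokens, which is the figure Google's Gemma 3 technical report gives for the vision encoder's output when no extra cropping is applied. Treat that constant, the reserved output budget, and the helper name as assumptions to check against the current documentation.

```python
# Back-of-the-envelope image budget for a 128,000-token context window.
# ASSUMPTION: each image is encoded into a fixed 256 tokens.

CONTEXT_WINDOW_TOKENS = 128_000
TOKENS_PER_IMAGE = 256  # assumed per-image cost


def max_images(text_tokens: int, reserved_for_output: int = 1_000) -> int:
    """How many images fit alongside a text prompt of the given size."""
    remaining = CONTEXT_WINDOW_TOKENS - text_tokens - reserved_for_output
    return max(remaining, 0) // TOKENS_PER_IMAGE


if __name__ == "__main__":
    # A 2,000-token instruction plus as many images as will fit.
    print(max_images(text_tokens=2_000))
```

Under these assumptions an empty prompt with no reserved output leaves room for 500 images, so a realistic prompt still has space for several hundred.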

When you put all these pieces together—the parameter count, the massive training data, the giant context window, and the native multimodal design—you get a model that’s not just powerful, but incredibly versatile.

How Does Gemma 3 12B Stack Up? Performance and Safety

A model’s architecture is just a blueprint. What really matters is how it performs in the real world—how it handles complex reasoning, tricky math problems, and generating clean code. This is where benchmarks come in.

Think of benchmarks as a standardized exam for AI. They give us a common yardstick to measure different models, showing us exactly where each one shines and where it might struggle. For Gemma 3 12B, the results are pretty clear: this model punches well above its weight, delivering serious performance without needing the massive computational power of its bigger cousins.

Putting Numbers into Context

Gemma 3 12B really holds its own, especially when it comes to accuracy and efficiency in multilingual and multimodal tasks. It was trained on an enormous dataset of 12 trillion tokens from a wide variety of text sources, which is key to its strong performance across different languages and subjects.

In Google's published evaluations of the pre-trained model, the numbers speak for themselves. Gemma 3 12B scored 57.4% on AGIEval (3-5-shot), 43.3% on MATH (4-shot), and 45.7% on HumanEval (0-shot). These scores show it has a solid grasp of reasoning, coding, and general knowledge, making it a powerful option for global applications.

This chart breaks down the key specs that fuel its performance.

It’s this combination—a healthy parameter count, a gigantic training dataset, and a generous context window—that gives Gemma 3 12B the ability to tackle complex, multi-step problems so effectively.

To get a feel for how models like this are making an impact, it helps to use the most accurate AI visibility metrics software to see how they're being adopted and used across the industry, especially as big players like Google keep releasing new models.

A Strong Focus on Safety and Responsibility

But raw performance is only half the equation. In the world of AI, safety and ethics are non-negotiable. Google has made responsible development a core part of the Gemma 3 series, baking in rigorous safety protocols from the very beginning.

This isn't just an afterthought; it’s a multi-layered approach:

- Careful Data Curation: The model was trained on data that was carefully filtered to reduce biases and screen out harmful content right from the start.

- Safety-Focused Fine-Tuning: After the initial training, the model went through an alignment process to make sure its responses line up with established safety policies.

- Tough Safety Evaluations: Gemma 3 12B was put through its paces against a whole battery of safety benchmarks designed to spot potential for misuse, like generating dangerous content or reinforcing harmful stereotypes.

Google’s approach is smart. Because Gemma 3 showed a real knack for STEM subjects, they ran specific tests to see if it could be misused to create harmful substances. The results showed a low risk, which is exactly what you want to see.

This proactive stance on safety is crucial. While no model is perfect, these built-in guardrails help minimize risks and ensure the outputs are not just correct, but also responsible. For anyone building on top of this model, it offers a much more reliable foundation. Of course, a big part of that reliability also comes down to knowing how to reduce hallucinations in LLM outputs, which works hand-in-hand with the model’s safety features.

By pairing impressive performance with a solid ethical framework, Gemma 3 12B stands out as a capable and trustworthy tool for all sorts of applications. It’s powerful, accessible, and built with responsible AI principles at its core.

Exploring Real-World Use Cases

While the specs and benchmarks for Gemma 3 12B are impressive on paper, what really matters is what you can do with it. The model's combination of a huge context window, multimodal understanding, and language fluency isn't just a technical achievement; it unlocks practical solutions for real problems.

Let's move past the theory and look at how these features translate into tools that can help developers, analysts, and creators get things done.

Digging Deep into Document Analysis

One of the most powerful things you can do with Gemma 3 12B is complex document analysis. This is where its massive 128,000-token context window really shines. Professionals in fields like law, finance, and research are constantly buried in long, dense documents.

Think about a legal team reviewing a 200-page contract. They need to find every single clause related to liability. Instead of spending hours manually combing through the text, they can drop the entire document into the model and ask for a summary of the relevant sections. What used to take half a day can now be done in minutes.

The same goes for a financial analyst. They can feed the model an entire annual report and ask it to pull out key financial metrics, flag potential risks, and summarize the management discussion—all from a single prompt.

Being able to process an entire document at once is a genuine game-changer. The model isn't just reading sentences in isolation; it understands the whole story, connecting ideas from page 1 to page 200 for far more accurate insights.

Multimodal E-commerce and Customer Support

Gemma 3 12B’s ability to understand images and text together opens up entirely new ways to interact with users, especially in e-commerce. It can reason about visual information alongside a text query, which makes for a much more intuitive customer experience.

Here are a couple of examples:

- Visual Question Answering (VQA): A customer uploads a photo of a chair and asks, "What kind of wood is this, and would it go well with my oak floors?" The model can analyze the wood grain in the image and give a genuinely helpful, detailed answer.

- Product Support: Someone takes a picture of their router with a blinking light and asks, "What does this light pattern mean?" Gemma 3 12B can interpret the visual cue and provide the correct troubleshooting steps.

This is a huge step up from text-only chatbots. You get a richer, more effective support system that solves problems faster because it can see what the user sees.

Smarter Code Generation and Debugging

For developers, Gemma 3 12B is a seriously powerful coding partner. The large context window is perfect for understanding the full scope of a codebase, not just a single function. A developer can paste in a large, messy file and ask the model to spot bugs, suggest ways to make it faster, or even translate it into a different language.

It's also great for generating code from plain English. A programmer can just describe what a function needs to do, and the model can spit out a working block of code, often complete with comments and good practices.

This ability to grasp so much context makes it a fantastic tool for working on old, legacy codebases. It can get a holistic view of thousands of lines of interconnected logic, helping developers refactor and maintain complex software much more efficiently.

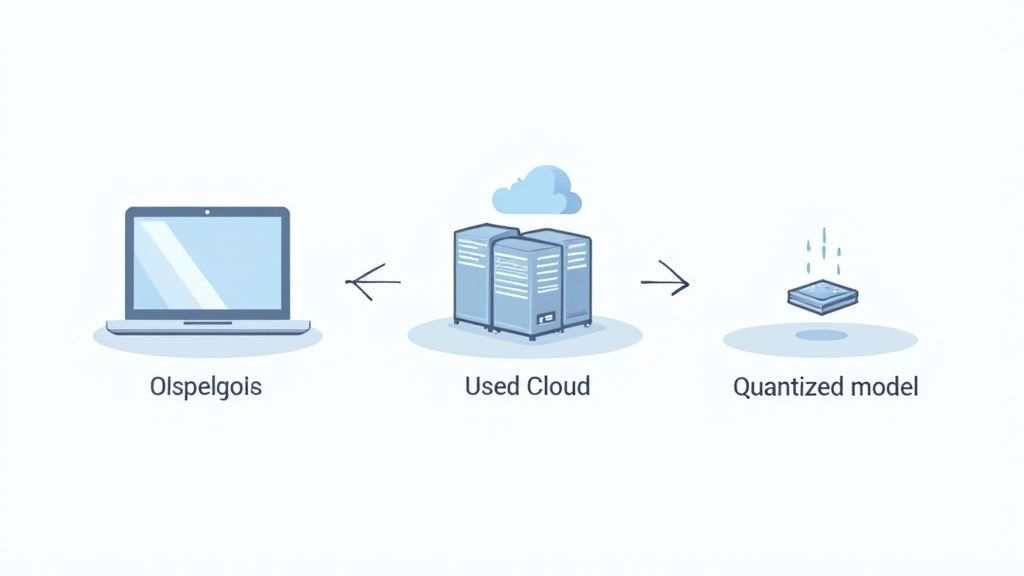

How to Deploy and Run Gemma 3 12B

Getting Gemma 3 12B up and running is surprisingly straightforward. You don't need a massive data center to start experimenting. Whether you want to build a scalable cloud app or just tinker on your own machine, there's a clear path forward. The right choice really just boils down to what you need in terms of power, cost, and hands-on control.

If you're building something for production, a managed cloud service is usually the best bet. Platforms like Google's Vertex AI handle all the heavy lifting, letting you deploy Gemma 3 12B without worrying about the underlying servers. This route gives you the reliability and scale needed for serious, business-critical applications.

But what makes the Gemma family really special is how well it runs locally. Thanks to its efficient architecture, you can run this 12B model right on your own hardware, even on a decent laptop. This is perfect for development, research, or any task where you want total control and don't need to be online.

Understanding Quantization for Local Use

Running a model with 12 billion parameters on a personal computer might sound impossible, but a clever technique called quantization makes it happen.

Think of it like this: quantization is to an AI model what compressing a giant RAW photo into a JPEG is for an image. It makes the file much smaller without losing much of the important detail. The process shrinks the model's memory footprint and computational needs by using simpler, lower-precision numbers for its internal parameters. You end up with a much lighter model that runs smoothly on consumer hardware, often with a barely noticeable drop in performance.
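To make the idea concrete, here is a toy version of symmetric 8-bit quantization with a single shared scale. This is a simplified illustration of the general technique, and it is not meant to describe the exact scheme used for any official quantized Gemma checkpoint. The function names are made up for this example.

```python
# Toy symmetric int8 quantization: store small integers plus one scale
# factor instead of full-precision floats.
# ASSUMPTION: one scale for the whole list; real schemes are more elaborate.


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.0, 0.49]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_error = max(abs(a - b) for a, b in zip(weights, restored))
    print(q)
    print(f"largest rounding error: {max_error:.5f}")
```

Each restored value differs from the original by at most half of the scale, which is the "barely noticeable" loss the JPEG analogy is pointing at.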

Quantization is the bridge that connects state-of-the-art AI with everyday hardware. It democratizes access, allowing developers and enthusiasts to experiment with powerful models like Gemma 3 12B without needing a data center.

The good news is that Google already provides officially quantized versions of Gemma 3. This means you don't have to do the complicated work yourself and can get started much faster on a wider variety of machines.

Hardware Requirements and Essential Tools

To run a quantized version of Gemma 3 12B locally, you’ll need a solid setup, but nothing too extreme. Here's a good baseline to aim for:

- GPU: An NVIDIA GPU is your best friend here. Aim for at least 12 GB of VRAM, and ideally 16 GB, for a smooth experience.

- CPU and RAM: A modern multi-core CPU and 16-32 GB of system RAM will help ensure your computer doesn't bottleneck the GPU.
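A little arithmetic shows why quantization matters for these requirements. The sketch below estimates the memory needed for the weights alone at different precisions. It treats the parameter count as exactly 12 billion and ignores everything else that uses memory at run time (activations, the cache for long contexts, framework overhead), so real usage will be higher.

```python
# Approximate memory for the model weights alone at different precisions.
# ASSUMPTION: exactly 12 billion parameters; runtime overhead is ignored.

PARAMS = 12_000_000_000


def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Weights-only memory in gigabytes (1 GB = 10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"{bits}-bit: {weight_memory_gb(PARAMS, bits):.0f} GB")
    # 16-bit: 24 GB
    # 8-bit: 12 GB
    # 4-bit: 6 GB
```

At 16-bit precision the weights alone exceed a 16 GB card, while a 4-bit version leaves headroom for the context and other overhead.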

The community has built some fantastic tools that make this whole process much easier. The Hugging Face Transformers library is the industry standard for a reason; it gives you a simple Python interface to download and run models like Gemma.
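As a rough idea of what that looks like, here is a minimal sketch using the Transformers `pipeline` helper. It assumes the instruction-tuned checkpoint is published on the Hugging Face Hub as `google/gemma-3-12b-it`, that you have accepted the model's license there, and that recent versions of `transformers`, `torch`, and `accelerate` are installed. The helper names `build_chat` and `generate` are made up for this example.

```python
# Minimal local-inference sketch with Hugging Face Transformers.
# ASSUMPTIONS: model id "google/gemma-3-12b-it", a recent transformers
# release with Gemma 3 support, and enough GPU memory for bfloat16 weights.


def build_chat(prompt: str) -> list:
    """Wrap a plain-text prompt in the chat-message structure."""
    return [{"role": "user", "content": [{"type": "text", "text": prompt}]}]


def generate(prompt: str,
             model_id: str = "google/gemma-3-12b-it",
             max_new_tokens: int = 200) -> str:
    """Load the model and return its reply to a single prompt."""
    # Imported lazily so build_chat() works without the heavy libraries.
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "image-text-to-text",       # Gemma 3 12B is a vision-language model
        model=model_id,
        device_map="auto",          # requires the accelerate package
        torch_dtype=torch.bfloat16,
    )
    output = pipe(text=build_chat(prompt), max_new_tokens=max_new_tokens)
    # The pipeline returns the whole conversation; the last turn is the reply.
    return output[0]["generated_text"][-1]["content"]
```

Calling `generate("Explain what a context window is in two sentences.")` downloads the weights on first use, which takes a while and a fair amount of disk space. In a real application you would create the pipeline once and reuse it rather than reloading it on every call.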

For those who prefer a more visual approach, tools like LM Studio provide a graphical interface for running models locally with just a few clicks. If you're new to this, checking out a guide on how to select a model in LM Studio can be a great first step. These resources take a lot of the technical headache out of the equation.

Effective Prompting Strategies

Having a powerful model like Gemma 3 12B is one thing, but knowing how to talk to it is what truly unlocks its potential. Getting the right output is all about crafting the right instructions. It's less of a magic formula and more about learning how to guide the model's thinking process.

Think of it like giving directions to a very smart, very literal assistant. If you just say, "Tell me about cars," you'll get a vague, encyclopedia-style answer. But if you ask, "Explain the key differences between a hybrid and an all-electric vehicle for someone who isn't a car expert," you'll get a focused, genuinely useful response. Specificity and context are your best friends here.

Start with the Basics: Clarity and Context

The foundation of any great prompt is clarity. Your instructions need to be direct and leave no room for guessing. You have to give the model all the information it needs to succeed.

This includes defining the format, tone, and even the audience for the response. For example, instead of just asking for a summary, specify what you need: "Give me a three-bullet-point summary written in a professional tone for our marketing team."

Adding context is just as crucial. The model needs some background to frame its response correctly. When you provide relevant details, examples, or constraints, you help narrow its focus and stop it from going off on a tangent.

A well-crafted prompt acts as a set of guardrails for the AI. It steers the model toward the desired outcome by clearly defining the scope of the task and providing the necessary background information to execute it accurately.
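One way to make these habits stick is to build prompts from named parts, so the task, audience, tone, and format are never left out by accident. The helper below is a small sketch of that idea. The field names and layout are illustrative choices, not a format that Gemma requires.

```python
# Assemble a prompt from explicit parts so nothing is left to guesswork.
# ASSUMPTION: the labels and layout here are just one reasonable convention.


def build_prompt(task: str, audience: str, tone: str,
                 output_format: str, context: str = "") -> str:
    """Return a prompt that states context, task, audience, tone, and format."""
    parts = []
    if context:
        parts.append(f"Context:\n{context}")
    parts.append(f"Task: {task}")
    parts.append(f"Audience: {audience}")
    parts.append(f"Tone: {tone}")
    parts.append(f"Format: {output_format}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(
        task="Summarize the attached quarterly report.",
        audience="our marketing team",
        tone="professional",
        output_format="three bullet points",
    ))
```

Keeping the parts separate also makes it easy to change one of them, such as the audience, without rewriting the whole prompt.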

Once you nail these fundamentals, you can move on to more advanced techniques that handle even the most complex jobs.

Advanced Techniques for Precision

With the basics covered, you can start using more structured prompting methods to guide Gemma 3 12B toward higher-quality results. These techniques really shine when you need a specific style or a multi-step thought process.

Here are three powerful approaches you can use:

- Zero-Shot Prompting: This is the most straightforward method. You ask the model to do something without giving it any examples. For instance: "Translate the following sentence into French: 'The quick brown fox jumps over the lazy dog.'" This works great for simple tasks where the model's general training is enough.

- One-Shot Prompting: Here, you provide a single example to show the model the input-output format you want. This helps it understand the exact structure you're looking for. For example: "Translate English to French. English: 'Hello, world.' French: 'Bonjour, le monde.' Now translate this: 'I love programming.'"

- Few-Shot Prompting: This is your go-to for complex or nuanced tasks. You provide several examples ("shots") to teach the model the pattern you want it to follow. This is incredibly effective for things like sentiment analysis, generating code in a specific style, or pulling structured data from messy text.

Using few-shot prompting is like giving the model a mini-training session right inside the prompt. It can dramatically improve the accuracy and relevance of its answers.
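The three approaches above differ only in how many examples you include, so a single helper can cover all of them. This is a minimal sketch. The function name and the "English:" / "French:" labels are illustrative and simply follow the translation example used above.

```python
# One builder for zero-, one-, and few-shot prompts: the number of
# (input, output) example pairs decides which one you get.
# ASSUMPTION: the label-per-line layout is just one common convention.


def build_few_shot_prompt(instruction, examples, query,
                          input_label="English", output_label="French"):
    """Return a prompt with the instruction, any examples, then the query."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"{input_label}: {source}")
        lines.append(f"{output_label}: {target}")
        lines.append("")
    lines.append(f"{input_label}: {query}")
    lines.append(f"{output_label}:")  # left open for the model to complete
    return "\n".join(lines)


if __name__ == "__main__":
    one_shot = build_few_shot_prompt(
        "Translate English to French.",
        [("Hello, world.", "Bonjour, le monde.")],
        "I love programming.",
    )
    print(one_shot)
```

Passing an empty list of examples gives a zero-shot prompt, and passing several pairs gives a few-shot prompt with the same structure.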

Prompting for Multimodal Tasks

Gemma 3 12B can process both images and text, which opens up some exciting possibilities. But it requires a slightly different way of thinking about your prompts. You have to structure your request to clearly reference the visual information.

When you're working with images, be as descriptive as you can in your text. For instance, instead of uploading a photo of a circuit board and just asking "What's wrong?", a much better prompt would be: "Analyze the attached image of this circuit board. Can you identify the component labeled 'U3' and explain what it probably does, based on its location near the power input?"

This combined approach—visual data plus a specific text instruction—lets the model connect the dots and give you a far more insightful answer.
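In code, a multimodal prompt is usually a single user message that carries both the image and the instruction. The sketch below follows the message layout used in Hugging Face's chat examples for vision-language models. The exact keys can vary between libraries and versions, and the image URL is a placeholder, so treat both as assumptions.

```python
# Build a single user turn that pairs an image with a specific instruction.
# ASSUMPTION: the {"type": "image", "url": ...} layout follows Hugging Face
# chat examples; other runtimes may expect a different structure.


def build_image_message(image_url: str, instruction: str) -> list:
    """Return a chat message list containing one image and one text part."""
    return [{
        "role": "user",
        "content": [
            {"type": "image", "url": image_url},
            {"type": "text", "text": instruction},
        ],
    }]


if __name__ == "__main__":
    messages = build_image_message(
        "https://example.com/circuit-board.jpg",  # placeholder URL
        "Analyze the attached image of this circuit board. Identify the "
        "component labeled 'U3' and explain what it probably does, based "
        "on its location near the power input.",
    )
    print(messages[0]["content"][1]["text"])
```

Putting the image first and the instruction second mirrors how you would naturally ask the question: here is the picture, and here is what I want to know about it.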

Practical Do's and Don'ts

To get the best results, it helps to follow a few simple rules. Keeping these in mind will help you sidestep common mistakes and write instructions that get you what you want, consistently.

Do's for Effective Prompts

- Be Specific and Direct: Clearly state your goal.

- Provide Context: Give the model the necessary background info.

- Define the Persona and Tone: Tell it who to be (e.g., "Act as a senior software engineer").

- Set Constraints: Specify the length, format (like JSON or a markdown table), or style.

- Use Examples (Few-Shot): Show the model exactly what a good answer looks like.

Don'ts to Avoid

- Avoid Ambiguity: Don't use vague or open-ended language.

- Don't Assume Knowledge: Put all the details the model needs right in the prompt.

- Avoid Overly Complex Sentences: Keep your language simple and clear.

- Don't Ask Multiple Questions in One Go: Break down big tasks into smaller, separate prompts.

By following these guidelines, you can build a more effective workflow with Gemma 3 12B, turning it from a general tool into a specialized assistant fine-tuned for your exact needs.

Got Questions? We've Got Answers

Diving into a new model always sparks a few questions. Let's clear up some of the common ones about Gemma 3 12B so you can get a better handle on what it can do.

What Does "Multimodal" Actually Mean for Gemma 3 12B?

Simply put, a multimodal model can chew on more than one type of data at once. For Gemma 3 12B, this means it was designed from the get-go to understand both text and images together in a single prompt.

You’re not just limited to text. You can drop in an image and ask it a question, and the model will actually see the image to figure out the answer. This is all thanks to a built-in image encoder that makes it a genuine vision-language model.

Why Is the 12B Parameter Size a Big Deal?

The "12B" stands for 12 billion parameters, which are essentially the knobs and dials the model uses to learn and make connections. This size is something of a sweet spot.

It’s beefy enough to tackle tough reasoning, coding, and analysis but still lean enough to run on a single powerful GPU. With a bit of optimization, you could even get it running on a high-spec laptop. This makes serious AI power much more accessible, without needing a whole server farm to get started.

What Can You Do With a 128K Context Window?

Think of the 128,000-token context window as the model's working memory. It's the total amount of information—text, code, instructions—it can keep in mind for a single task.

In real-world terms, that’s enough to process a 300-page book in one go. You can throw entire documents, complex codebases, or long reports at it for a full analysis without the model forgetting what it saw at the beginning. It's perfect for tasks that require understanding the big picture.

Ready to organize your prompts and get better AI results? With Promptaa, you can create, store, and even enhance your instructions for models like Gemma 3 12B. Start building your perfect prompt library today at https://promptaa.com.