Few-Shot Prompting: Master Few-Shot Prompting in 3 Steps

Ever tried to teach someone a new card game? You wouldn't just read the rulebook aloud. You'd deal a few hands and play them out, showing them how it's done. That’s the core idea behind few-shot prompting. You're giving an AI model a handful of examples—or "shots"—right inside your prompt to show it exactly what you want.

Why Few-Shot Prompting Is a Big Deal

Few-shot prompting gives the AI a crash course in the pattern, style, and format you're looking for, all without retraining or fine-tuning the model. It’s about getting precise results by showing, not just telling. That shift makes large language models (LLMs) far more practical for everyday use.

This is about working smarter. Instead of crossing your fingers and hoping the AI gets what you mean, you’re handing it a clear blueprint for success.

The Magic of In-Context Learning

The secret sauce here is something called in-context learning. The LLM isn’t permanently updating its brain with your examples. Instead, it uses them only for that one request to figure out the task. It looks at the examples you gave, recognizes the pattern, and applies that logic to your new input.

It's like giving someone a temporary cheat sheet for a specific quiz. They use it to ace the test, but once the quiz is over, they toss the sheet. This makes the whole process incredibly flexible and perfect for unique or varied tasks.

This adaptability is what makes few-shot prompting so powerful. You can steer the model’s behavior for a specific job without the massive cost and time commitment of formal fine-tuning. For a deeper dive into other methods, you can explore the various types of prompting techniques in our detailed guide.

Getting Better Results, Faster

The most obvious win here is a big jump in output quality. With just a few well-chosen examples, you can get models to handle complex tasks like sentiment analysis, code generation, or content creation far more accurately than with instructions alone.

For certain tasks, some sources report that this method can boost model performance by up to 90%. Gains like that matter to anyone trying to get reliable results from AI without wasting resources. You can read more about how few-shot prompting boosts AI accuracy on addlly.ai.

Ultimately, this saves a ton of time and frustration. By providing a clear mini-lesson in your prompt, you're essentially programming the AI's output on the fly to get exactly what you need.

Choosing Your Prompting Strategy

Picking the right prompting strategy is a bit like choosing the right tool for a job. You wouldn't use a sledgehammer to hang a picture, and you don't always need a bunch of examples for a simple AI request. The trick is to match your approach to the complexity of what you're trying to get done.

You have three main methods to work with: zero-shot, one-shot, and the incredibly useful few-shot prompting. Each one strikes a different balance between simplicity, upfront effort, and the precision of the final output. Understanding what makes each one tick is the key to getting better, more consistent results from your AI.

Zero-Shot Prompting: The Direct Command

Zero-shot prompting is as straightforward as it gets. You just tell the AI what to do, without giving it any examples to work from. Think of it as giving a direct order to a skilled assistant who already gets the gist of your requests.

For example, if you wanted a simple translation, you'd just ask:

Translate 'dog' to French.

This approach works great for common tasks that the AI has been heavily trained on. It’s fast and requires almost no setup, but its success completely depends on the model's built-in knowledge. If your task is a little quirky or specialized, the results can be a total crapshoot.

One-Shot Prompting: A Single Clue

With one-shot prompting, you nudge the AI in the right direction. Instead of just asking, you provide a single, complete example to give the model a hint about the format or pattern you have in mind. It's like showing someone one piece of a jigsaw puzzle to help them figure out what the next one should look like.

Let's stick with our translation example. A one-shot prompt would be:

English: cat -> French: chat
English: dog -> French:

By providing just one correct pair, you've handed the model a crucial piece of context. It now sees the pattern (English word -> French word) and is far more likely to give you the correct answer, "chien." This is perfect for steering the AI toward a specific output style without overcomplicating things.

Few-Shot Prompting: Establishing a Clear Pattern

This is where you really start to see the magic of in-context learning. Few-shot prompting means giving the model multiple examples (usually two to five) to lock in a clear, undeniable pattern for it to follow. It’s essentially teaching by example—showing someone how a rule works by demonstrating it in a few different situations.

A few-shot prompt for our translation task would leave no room for doubt:

English: cat -> French: chat
English: house -> French: maison
English: bread -> French: pain
English: dog -> French:

With three solid examples, the AI gets a much richer understanding of the assignment. It learns the pattern, recognizes the specific language pair, and internalizes the format you want. This dramatically boosts the odds of getting the right answer, formatted exactly how you need it. Few-shot prompting is your go-to strategy for nuanced, complex, or highly specific tasks where you can't afford to get it wrong.
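To make the difference between the three approaches concrete, here is a minimal Python sketch that builds zero-, one-, and few-shot versions of the translation prompt from a single pool of examples. The function name `build_translation_prompt` and the instruction wording are illustrative assumptions, not part of any library; the sketch only assembles the prompt text and does not call a model.

```python
# Illustrative example pool, reused for every prompt variant.
EXAMPLES = [
    ("cat", "chat"),
    ("house", "maison"),
    ("bread", "pain"),
]

INSTRUCTION = "Translate the English word to French."


def build_translation_prompt(query: str, k: int) -> str:
    """Return the instruction, the first k examples, and an open final line."""
    lines = [INSTRUCTION]
    lines += [f"English: {en} -> French: {fr}" for en, fr in EXAMPLES[:k]]
    # Leave the last line unfinished so the model completes the pattern.
    lines.append(f"English: {query} -> French:")
    return "\n".join(lines)


# k=0 is zero-shot, k=1 is one-shot, k=3 is few-shot.
for k in (0, 1, 3):
    print(f"--- {k}-shot ---")
    print(build_translation_prompt("dog", k))
```

Because the examples live in one list, moving from zero-shot to few-shot is just a matter of changing `k`, which makes it easy to compare outputs side by side.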

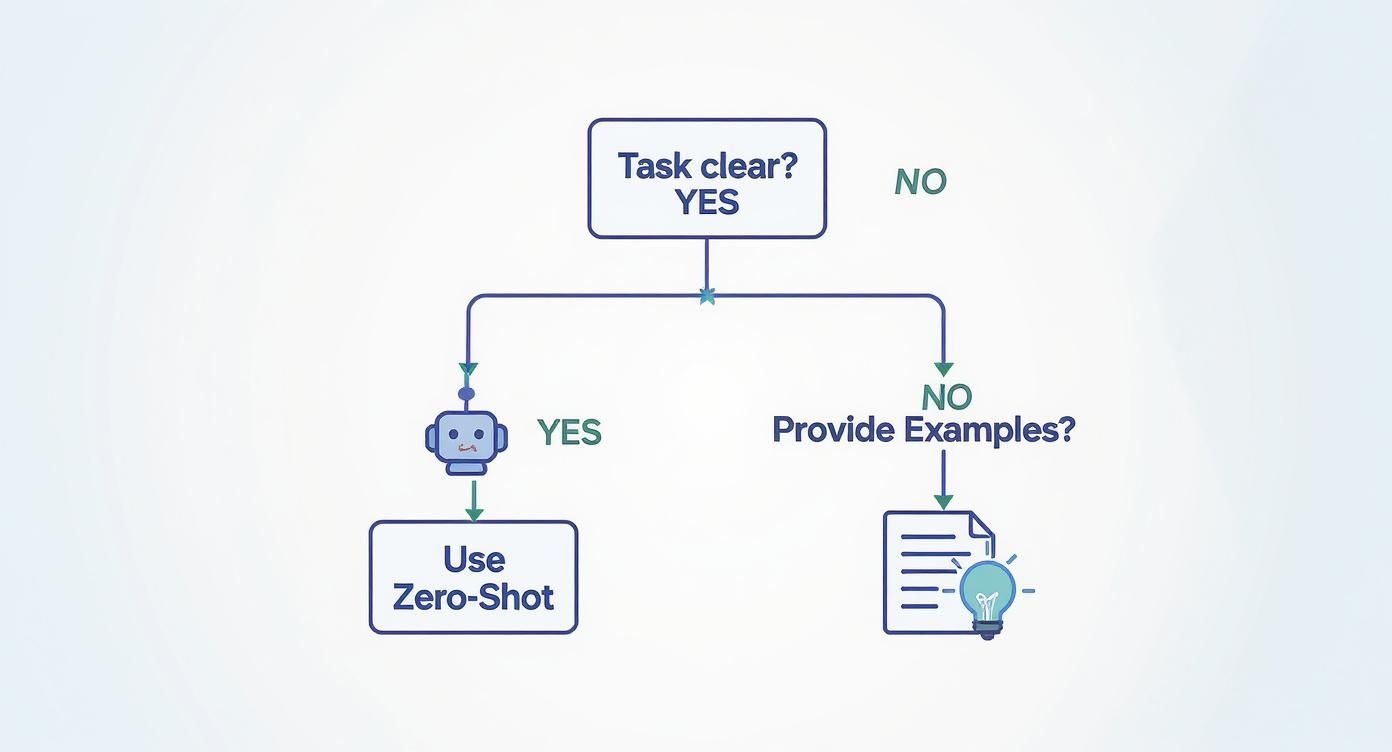

This simple decision tree helps visualize when to stick with a direct zero-shot command versus when it’s time to bring in more context with few-shot prompting.

As the decision tree shows, if your task is simple and clear, a zero-shot prompt is efficient. But for anything that needs a more specific or reliable output, providing a few examples is the smarter path.

To really nail down the differences, a side-by-side comparison can be incredibly helpful. This table breaks down when and why you'd choose one method over the others.

Prompting Techniques At a Glance

| Technique | Number of Examples | Best For | Pros | Cons |
|---|---|---|---|---|
| Zero-Shot | None | Simple, common tasks like basic Q&A or summarization. | Fast, easy to write, requires no example data. | Unreliable for complex or nuanced requests. |
| One-Shot | One | Tasks requiring a specific format or style. | Provides helpful context with minimal effort. | A single example might not be enough for complex patterns. |
| Few-Shot | Two or more | Complex, specialized, or multi-step tasks needing high accuracy. | High accuracy and reliability; establishes clear patterns. | Requires more effort to create high-quality examples. |

Ultimately, the number of examples you provide directly shapes the model's ability to grasp the context. While zero-shot is great for quick, simple tasks, few-shot prompting gives you far more control and accuracy when the stakes are higher.

How Few-Shot Prompting Actually Works

To really get what’s happening with few-shot prompting, let's use an analogy. Think of a Large Language Model (LLM) as a brilliant but junior detective. If you just hand it a case file and ask, "Who's the culprit?" (a zero-shot prompt), it'll make a guess based on its vast but general knowledge of past cases. It might be right, but it's just a shot in the dark.

Now, with few-shot prompting, you’re not just giving it the case file. You're also slipping in a few solved "mini-cases" that have a similar pattern—a specific modus operandi. The detective sees these examples, instantly picks up on the logic you're hinting at, and applies that exact same reasoning to solve the new case.

This whole process hinges on a concept called in-context learning.

The Power of In-Context Learning

In-context learning is what lets an LLM change its behavior for a single task without actually reprogramming its core. The model isn’t “learning” like we do, where new information gets filed away for later. It’s more like it’s using the examples you provide as a temporary instruction manual for one specific job.

The model sees your entire prompt—the instructions, the examples, and your final question—as one long string of text. By analyzing the input -> output pairs you've laid out, it figures out the relationship you want it to copy. It learns the "rules of the game" for just this one request and makes its next move based entirely on that immediate context.

Think of it like giving the AI a cheat sheet for a very specific pop quiz. It uses the sheet to ace your question but forgets everything on it the second the quiz is over. This is what makes the technique so powerful for one-off or highly customized tasks.

This temporary adaptation is a game-changer. It means you can steer the model toward very niche tasks—like mimicking a quirky brand voice or pulling data into a weirdly specific format—without the massive cost and effort of fully retraining the base model.

The Attention Mechanism at Its Core

So, how does the model know which parts of your examples matter most? That’s the job of the attention mechanism. It is a core component of modern LLMs, allowing the model to weigh how relevant each token (a word or word fragment) in your prompt is when it generates each part of its response.

When you feed it few-shot examples, the attention mechanism helps the model zero in on the key parts. It learns to "pay attention" to the connection between the inputs and outputs you’ve shown it. For example, if you're doing sentiment analysis, it will notice how certain words in your examples lead to a "Positive" or "Negative" label.
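For intuition only, the sketch below computes scaled dot-product attention weights in plain Python for a single query over three tokens. The two-dimensional vectors are invented for illustration; real LLMs learn high-dimensional query, key, and value projections and run many attention heads across many layers, so treat this as a toy picture of the idea rather than how any particular model is implemented.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention: softmax(q . k / sqrt(d)) over all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)


def attend(query, keys, values):
    """Blend the value vectors, weighting each by its attention weight."""
    weights = attention_weights(query, keys)
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


# Toy 2-d vectors (invented): the first dimension stands in for "sentiment signal".
tokens = ["movie", "was", "terrible"]
keys = [[0.3, 0.5], [0.1, 0.9], [2.0, 0.1]]
query = [1.0, 0.0]  # a query that "looks for" sentiment

weights = attention_weights(query, keys)
for token, w in zip(tokens, weights):
    print(f"{token:>9}: {w:.2f}")
print("blended vector:", attend(query, keys, keys))
```

The token whose key lines up best with the query ("terrible" here) receives the largest weight, a simplified picture of how a model can lean on the most relevant parts of your examples.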

By choosing your examples carefully, you are quite literally directing the model’s attention, guiding it to focus on the patterns you want it to follow. This is also why high-quality, consistent examples are so important. Giving it confusing or contradictory examples will just throw it off track, leading to unreliable or fabricated answers. To get a better handle on this problem, you can read our guide on how to reduce hallucinations in LLMs.

How to Craft Effective Few-Shot Prompts

Alright, let's move from theory to practice. Getting consistent, high-quality results isn't about some secret engineering trick; it’s about being thoughtful in how you design your prompts. Think of it as part art, part science.

The golden rule is simple: quality and consistency trump quantity. You'll get far better results from two or three perfectly crafted examples than you will from a dozen random ones. Your whole goal is to create a crystal-clear pattern that the AI can instantly pick up on and replicate for your task.

The Foundation: Quality Examples

The examples you choose are the very foundation of your prompt. If that foundation is wobbly, the entire thing will fall apart. Each example needs to be a perfect miniature of the output you want, nailing the exact format, tone, and logic you're after.

For instance, if you're trying to classify customer feedback, make sure your examples cover the main sentiment categories you expect. If you're generating marketing copy, every example should sound exactly like your brand's unique voice.
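Before sending a prompt, it can help to check your examples mechanically. This hypothetical helper counts the labels in a set of classification examples and reports any expected category that is missing, plus any label that does not match your list (a stray lowercase "positive", for example). The example texts and label set are placeholders for your own data.

```python
from collections import Counter

# Placeholder few-shot examples for a feedback classifier: (text, label).
EXAMPLES = [
    ("Shipping was fast and the item works great.", "Positive"),
    ("The app crashes every time I open it.", "Negative"),
    ("The package arrived on Tuesday.", "Neutral"),
]

EXPECTED_LABELS = {"Positive", "Negative", "Neutral"}


def check_coverage(examples, expected_labels):
    """Report labels that are missing from, or not allowed in, the examples."""
    counts = Counter(label for _, label in examples)
    present = set(counts)
    missing = expected_labels - present
    unexpected = present - expected_labels  # typos, inconsistent casing, etc.
    return missing, unexpected, counts


missing, unexpected, counts = check_coverage(EXAMPLES, EXPECTED_LABELS)
print("missing:", missing or "none")
print("unexpected:", unexpected or "none")
print("counts:", dict(counts))
```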

Key Takeaway: Think of each example as a mini-lesson. A great example teaches the AI the structure, style, and content it needs to know, making its final output predictable and on-point.

To really get this right, you need a solid grasp of the general principles of how to write prompts that get results. This foundational skill is what separates a frustrating experience from a successful one.

Keep Your Instructions Clear and Concise

While your examples do most of the heavy lifting, your initial instruction is what sets the stage. Ambiguity is your enemy here. A vague instruction, even with great examples, can still lead the AI down the wrong path.

Tell the model exactly what you want it to do. Be direct.

- Weak Instruction: "Look at these reviews and tell me what they mean."

- Strong Instruction: "Classify the following customer reviews as 'Positive', 'Negative', or 'Neutral'."

That simple tweak removes all the guesswork. It gives the model a single, well-defined job. Getting this clarity right is a huge first step, and for a deeper dive, you can check out our guide on how to write AI prompts that actually work.
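Here is one way to combine the strong instruction with a few labeled examples, sketched in Python. The example reviews and the `Review:`/`Sentiment:` layout are assumptions chosen for illustration, and the model call itself is left out; `parse_label` simply rejects any reply that is not one of the three allowed labels, which is where a clear instruction pays off.

```python
ALLOWED = ("Positive", "Negative", "Neutral")

# The strong instruction, followed by illustrative labeled examples.
INSTRUCTION = "Classify the following customer reviews as 'Positive', 'Negative', or 'Neutral'."
EXAMPLES = [
    ("I love it, it exceeded my expectations!", "Positive"),
    ("The product broke after two days.", "Negative"),
    ("It does what the description says.", "Neutral"),
]


def build_prompt(review: str) -> str:
    """Instruction, then one Review/Sentiment pair per example, then the open case."""
    parts = [INSTRUCTION, ""]
    for text, label in EXAMPLES:
        parts.append(f"Review: {text}\nSentiment: {label}\n")
    parts.append(f"Review: {review}\nSentiment:")
    return "\n".join(parts)


def parse_label(model_output: str) -> str:
    """Accept the reply only if it is exactly one of the allowed labels."""
    label = model_output.strip().strip(".'\"")
    if label not in ALLOWED:
        raise ValueError(f"Unexpected label: {model_output!r}")
    return label


print(build_prompt("Shipping took forever."))
```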

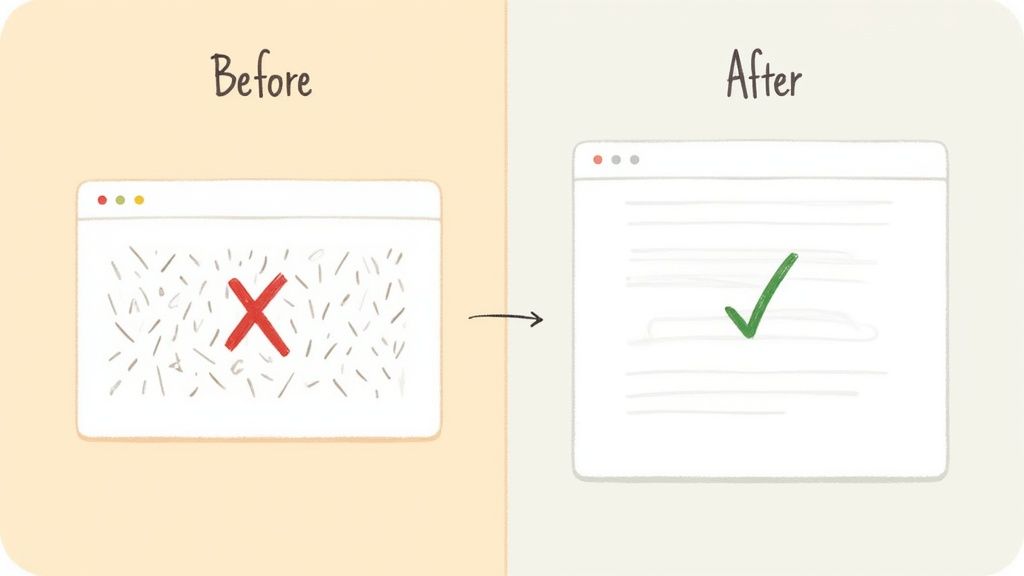

Practical Examples: Before and After

Let's look at a real-world scenario to see how this plays out. Imagine you need to pull specific data points—like material and color—from unstructured product descriptions.

Before (A Weak Prompt):

A weak, zero-shot attempt might look like this:

From this text, what is the product made of and what color is it? "The sleek Summit Backpack is made from water-resistant nylon and comes in a classic black."

Sure, this might work sometimes. But the output format will be all over the place. You might get a full sentence one time and bullet points the next.

After (An Effective Few-Shot Prompt):

Now, let’s build a powerful few-shot prompt that demands a specific JSON output.

Extract the material and color from the product description and format it as JSON.

Description: The elegant Alpine Tote features a durable canvas body with rich leather straps and is available in navy blue.
Output: {"material": "canvas", "color": "navy blue"}

Description: Our Trailblazer Jacket is crafted from breathable polyester and is offered in a vibrant forest green.
Output: {"material": "polyester", "color": "forest green"}

Description: The sleek Summit Backpack is made from water-resistant nylon and comes in a classic black.
Output:

See the difference? By providing just two clear examples, you've taught the AI the exact pattern. It now knows its job isn't just to find the info, but to structure it perfectly into a JSON object. That makes the output immediately usable for any app you're building. This is the real power of few-shot prompting.
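In an application you would typically assemble this prompt in code and validate the reply before using it. The minimal sketch below does both with Python's standard `json` module, reusing the two product examples from the prompt above. Sending the prompt to a model is left out, and the reply string in the test is hypothetical.

```python
import json

INSTRUCTION = "Extract the material and color from the product description and format it as JSON."

EXAMPLES = [
    (
        "The elegant Alpine Tote features a durable canvas body with rich leather "
        "straps and is available in navy blue.",
        {"material": "canvas", "color": "navy blue"},
    ),
    (
        "Our Trailblazer Jacket is crafted from breathable polyester and is offered "
        "in a vibrant forest green.",
        {"material": "polyester", "color": "forest green"},
    ),
]

REQUIRED_KEYS = {"material", "color"}


def build_prompt(description: str) -> str:
    """Instruction, the worked examples, then the open description."""
    blocks = [INSTRUCTION]
    for text, output in EXAMPLES:
        # json.dumps keeps every example output valid, consistently formatted JSON.
        blocks.append(f"Description: {text}\nOutput: {json.dumps(output)}")
    blocks.append(f"Description: {description}\nOutput:")
    return "\n\n".join(blocks)


def parse_output(model_output: str) -> dict:
    """Parse the reply and insist on exactly the keys the examples use."""
    data = json.loads(model_output)
    if not isinstance(data, dict) or set(data) != REQUIRED_KEYS:
        raise ValueError(f"Unexpected JSON shape: {model_output!r}")
    return data


print(build_prompt("The sleek Summit Backpack is made from water-resistant nylon "
                   "and comes in a classic black."))
```

Serializing the example outputs with `json.dumps` guarantees they are valid JSON, so the pattern the model sees matches exactly what `parse_output` will accept.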

Real-World Applications of Few-Shot Prompting


While the theory behind few-shot prompting is interesting, its real value comes to life when you see it applied to actual business problems. This isn't just a clever trick; it's a practical tool that companies are using right now to get more done, improve efficiency, and tackle projects that used to be too expensive or complicated.

The core idea is simple but powerful: give the AI a handful of good examples, and it can learn to handle a very specific task without needing to be completely retrained. Let's dig into some of the most common ways this technique is making a real impact today.

A Game-Changer for Marketing and Customer Support

In marketing, you need to be fast and relevant. Few-shot prompting helps teams create high-quality, on-brand content in a fraction of the time. Instead of starting from scratch, a marketer can show the AI a few of their best-performing email subject lines, and it will generate dozens of new ideas that capture the same tone and style.

The same concept works wonders for customer support. Say your team is drowning in customer feedback forms. A simple prompt with a couple of examples can teach an AI to instantly classify what people are saying.

- Example 1 Input: "The checkout process was confusing and I almost gave up."

- Example 1 Output: {"Category": "User Experience", "Sentiment": "Negative"}

- Example 2 Input: "I love the new design, it's so much easier to find what I need!"

- Example 2 Output: {"Category": "UI/UX Redesign", "Sentiment": "Positive"}

Just like that, the AI learns how to structure the feedback. What was once a messy pile of text becomes organized, actionable data in seconds.
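Once replies come back in a consistent JSON shape, turning them into numbers takes only a few lines. This sketch assumes you have already collected the raw model replies as strings (the three below are made up) and tallies category and sentiment pairs with `collections.Counter`, skipping anything that fails to parse.

```python
import json
from collections import Counter

# Hypothetical raw replies from a model prompted with the feedback examples.
replies = [
    '{"Category": "User Experience", "Sentiment": "Negative"}',
    '{"Category": "UI/UX Redesign", "Sentiment": "Positive"}',
    '{"Category": "User Experience", "Sentiment": "Negative"}',
]


def tally(raw_replies):
    """Count (category, sentiment) pairs; malformed replies are counted as skipped."""
    counts = Counter()
    skipped = 0
    for raw in raw_replies:
        try:
            item = json.loads(raw)
            counts[(item["Category"], item["Sentiment"])] += 1
        except (json.JSONDecodeError, KeyError, TypeError):
            skipped += 1
    return counts, skipped


counts, skipped = tally(replies)
for (category, sentiment), n in counts.most_common():
    print(f"{category} / {sentiment}: {n}")
print(f"skipped: {skipped}")
```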

Speeding Up Development and Data Analysis

For developers and data analysts, few-shot prompting is a huge productivity hack. It cuts down the time spent writing repetitive code snippets and standard functions. By showing the AI an example or two of what they need, developers can have it generate the rest of the code, letting them focus on the hard stuff.

One of the most valuable uses is turning plain English into code. An analyst can provide a few examples of a question paired with the matching SQL query, effectively turning the model into a data assistant without any retraining.

This approach opens up data access to far more people. Team members without technical skills can get information from a database just by asking a question, which speeds up decision-making across the organization.
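A few-shot text-to-SQL prompt can be sketched as follows, using Python's built-in `sqlite3` module and an in-memory database. The `orders` table, the example questions, and the "generated" query are all hypothetical. Because generated SQL should not be trusted blindly, `run_readonly` only executes a single statement that starts with `SELECT`; this is a deliberately conservative check, not a complete safety layer.

```python
import sqlite3

# Hypothetical schema and question/SQL pairs; replace with your own.
SCHEMA = "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL, created TEXT);"
EXAMPLES = [
    ("How many orders are there?", "SELECT COUNT(*) FROM orders;"),
    ("What is the total revenue?", "SELECT SUM(total) FROM orders;"),
]


def build_sql_prompt(question: str) -> str:
    """Schema, instruction, worked examples, then the open question."""
    lines = [f"Schema: {SCHEMA}", "Write one SQLite SELECT query that answers the question.", ""]
    for q, sql in EXAMPLES:
        lines += [f"Question: {q}", f"SQL: {sql}", ""]
    lines += [f"Question: {question}", "SQL:"]
    return "\n".join(lines)


def run_readonly(conn: sqlite3.Connection, sql: str) -> list:
    """Execute a generated query only if it is a single SELECT statement."""
    stripped = sql.strip().rstrip(";").strip()
    if not stripped.lower().startswith("select") or ";" in stripped:
        raise ValueError(f"Refusing to run: {sql!r}")
    return conn.execute(stripped).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO orders (customer, total, created) VALUES (?, ?, ?)",
    [("Ana", 40.0, "2024-01-03"), ("Ben", 25.5, "2024-01-04")],
)
print(build_sql_prompt("Which customer spent the most?"))

# Pretend this came back from the model (hypothetical reply).
generated = "SELECT customer FROM orders ORDER BY total DESC LIMIT 1;"
print(run_readonly(conn, generated))
```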

The technique's benefits (less data required, quicker adaptation, and more flexibility) are also making AI practical in fields where large datasets are hard to come by, like healthcare and finance.

Taming Complex Legal and Financial Work

The legal and financial industries are built on dense, specialized documents that demand careful review. Few-shot prompting offers a way to quickly pull out key information or summarize contracts, reports, and legal filings. A lawyer could show an AI how to spot specific clauses in a contract, turning hours of manual reading into a job that takes minutes.

In finance, the same method can standardize data from different reports or flag potential risks by learning from a small set of examples. Many complex systems already benefit from this kind of AI integration, which is why so many companies are building generative AI applications to solve these problems.

The advantage is always the same: it makes expert knowledge scalable, saving time and cutting down on human error in tedious tasks.

Putting It All Together

Think of mastering few-shot prompting less as memorizing a strict set of rules and more like developing a feel for how to guide an AI. We've walked through how this technique gives a model a handful of high-quality examples, essentially teaching it what you want on the fly through in-context learning. This simple shift from just telling the AI what to do to showing it transforms your interactions from a vague guessing game into a precise, collaborative effort.

The real secret sauce is in the quality and consistency of the examples you provide. Each one needs to be a perfect miniature of the output you're aiming for. This creates an unmistakable pattern that the model can lock onto. Always remember, two or three stellar examples will get you far better results than a dozen mediocre ones ever will.

Your Next Steps in Prompting

This guide is just the starting point. The real learning happens when you roll up your sleeves and start applying these ideas to your own work. The best way to get good at this is to treat prompting as a continuous cycle of testing and tweaking.

- Start Small: Don't try to boil the ocean. Pick a simple, specific task you handle regularly.

- Build Your Examples: Carefully craft two or three crystal-clear, consistent examples that show exactly what you want.

- Test and Refine: Run the prompt. If the output is off, look closely at your examples. Is there any ambiguity? Is the pattern you're trying to show actually clear?

- Iterate: Adjust your examples or fine-tune your instructions, then run it again.
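The test-and-refine loop is easier when you score each version of a prompt against a small set of cases you already know the answers to. The sketch below assumes a generic `model` function that takes a prompt string and returns the reply text; `fake_model` is a keyword-matching stand-in used only so the example runs, and you would swap in a call to whatever model you actually use.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (input text, expected output)


def build_prompt(instruction: str, shots: List[Example], query: str) -> str:
    """Instruction, the few-shot examples, then the open query."""
    parts = [instruction]
    parts += [f"Input: {x}\nOutput: {y}" for x, y in shots]
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


def accuracy(model: Callable[[str], str], instruction: str,
             shots: List[Example], test_set: List[Example]) -> float:
    """Fraction of held-out cases where the reply exactly matches the expected output."""
    hits = 0
    for x, expected in test_set:
        if model(build_prompt(instruction, shots, x)).strip() == expected:
            hits += 1
    return hits / len(test_set)


def fake_model(prompt: str) -> str:
    # Stand-in for a real LLM call: it only looks at the final input.
    last_input = prompt.rsplit("Input: ", 1)[1]
    return "Positive" if "love" in last_input.lower() else "Negative"


instruction = "Classify the sentiment of the input as Positive or Negative."
shots = [("I love this phone.", "Positive"), ("Battery died in an hour.", "Negative")]
test_set = [
    ("Love the screen!", "Positive"),
    ("Terrible support.", "Negative"),
    ("Works fine, I guess.", "Positive"),
]
print(f"accuracy: {accuracy(fake_model, instruction, shots, test_set):.2f}")
```

When a case fails (here, the mild "Works fine, I guess."), inspect it, adjust your examples or instruction, and run the score again.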

This hands-on, trial-and-error approach is genuinely the fastest way to build your prompting intuition. Every prompt you write is a chance to better understand how the model "thinks" and reacts to the context you provide.

At the end of the day, few-shot prompting is a powerful skill that gives you a whole new level of control and precision when working with AI. By getting comfortable with experimentation, you can leave generic outputs behind and start producing results that are genuinely useful and specific to your needs. Start putting these techniques into practice today, and you'll find much more effective ways to collaborate with AI.

Got Questions? Let's Talk Specifics

As you start to wrap your head around few-shot prompting, it's normal for some practical questions to pop up. How many examples do I really need? And when should I just fine-tune a model instead?

Let's dig into a couple of the most common questions. Getting these details right is what separates a basic attempt from a truly strategic approach.

What’s the Magic Number for Examples?

There isn't a single "magic number," but a good rule of thumb is to stick with two to five examples. This little window seems to be the sweet spot—it's just enough context for the model to lock onto a pattern without muddying the waters with too much information.

Of course, the ideal number really comes down to what you're asking the model to do.

- Simple Formatting: If you're just reformatting text or extracting data, two or three solid examples will probably do the trick.

- Nuanced Classification: For trickier tasks like detailed sentiment analysis with multiple shades of meaning, you might need to lean closer to four or five examples to show the model the full range of possibilities.

The real secret is consistency. A few crystal-clear, consistent examples will always beat a dozen that are confusing or contradictory. A great starting point is to try three, see what happens, and tweak from there.
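If you keep a larger pool of candidate examples, a small helper can pick a starting set of three and make sure every label is represented before you add more. This is a hypothetical sketch: it groups examples by label and takes one from each label in turn until it reaches `k`.

```python
from collections import defaultdict
from itertools import zip_longest


def pick_examples(pool, k=3):
    """Pick up to k (text, label) pairs, taking one per label in turn."""
    by_label = defaultdict(list)
    for text, label in pool:
        by_label[label].append((text, label))
    picked = []
    # Each round yields the next unused example for every label (None if exhausted).
    for round_ in zip_longest(*by_label.values()):
        for ex in round_:
            if ex is not None and len(picked) < k:
                picked.append(ex)
    return picked


pool = [
    ("Great value for the price.", "Positive"),
    ("Five stars, would buy again.", "Positive"),
    ("Stopped working after a week.", "Negative"),
    ("It is a phone case.", "Neutral"),
    ("Exactly what I wanted.", "Positive"),
]
for text, label in pick_examples(pool):
    print(f"{label}: {text}")
```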

Few-Shot Prompting vs. Fine-Tuning: What's the Difference?

This is a big one. While both techniques help a model get better at a specific task, they go about it in completely different ways. Think of it like this: few-shot prompting is like giving a student a cheat sheet for a pop quiz, while fine-tuning is like enrolling them in a full-semester course.

Few-shot prompting is all about quick, in-the-moment learning. The model looks at the examples you provide for a single task, gets the job done, and then moves on, completely "forgetting" what it just learned. It's fast, it's cheap, and it's perfect for one-off tasks or when you're just experimenting to see what works.

Fine-tuning, on the other hand, is a much deeper, more permanent kind of training. You feed the model a far larger dataset, often hundreds or even thousands of examples, to create a new, specialized version of the original model. It costs more and takes longer, but you end up with a model that has truly internalized the nuances of your specific domain. You'd go this route for core business functions where you need consistent, expert-level performance every single time.

Ready to stop guessing and start building better prompts? Promptaa provides an organized library to create, test, and share your best few-shot examples, helping you get consistent, high-quality results from any AI model. Organize your prompts with Promptaa today!