Claude vs ChatGPT A Practical AI Showdown

When you get down to it, the core of the Claude vs ChatGPT debate is pretty straightforward. Think of Claude as a specialist: it shines at nuanced, creative, and analytical tasks, which makes it a strong partner for writers and researchers. ChatGPT, on the other hand, is the powerhouse generalist, offering remarkable versatility and speed across a huge range of applications.

At a Glance: The Core Differences

Picking the right AI assistant can feel like choosing between a finely tuned instrument and a feature-packed multi-tool. Both Claude, from Anthropic, and ChatGPT, from OpenAI, are at the absolute peak of language model technology, but they were built with slightly different philosophies in mind. I'm going to break down their key distinctions to help you figure out which one will fit seamlessly into your workflow.

While ChatGPT has a massive head start, Claude is gaining ground fast. Its global market share recently jumped to 3.8%, up from just 2.1% the year before. That's not just a number; it shows a real and growing demand for what Claude brings to the table. For a wider view of how they stack up against others like Gemini, it's worth reading this comprehensive AI chatbot comparison.

So, what's driving this growth? People are starting to value Claude's specific strengths—things like deep analysis and a more natural, human-like way of writing.

Let's kick things off with a quick, high-level overview to frame the discussion.

Claude 3 vs ChatGPT-4 At a Glance

This table cuts right to the chase, comparing the flagship models from both companies. It’s designed to give you a quick snapshot so you can immediately see which AI might be a better fit for what you do every day.

| Feature | Claude 3 Opus | ChatGPT-4 Turbo |
|---|---|---|
| Primary Strength | Deep analysis, nuanced writing, and handling long, complex documents with ease. | All-around versatility, raw speed, and a wide array of features like image creation. |
| Writing Style | Feels more natural and human-like, and is much less prone to generic, "AI-sounding" phrases. | Very direct and structured. It's highly efficient, but can sometimes come off as a bit robotic. |
| Context Window | A massive 200K tokens, enough to process and recall information from an entire book. | A still-impressive 128K tokens, enough for most long documents but smaller than Claude's. |
| Best For | Writers, academics, researchers, and developers who need in-depth textual analysis. | Anyone looking for a powerful multi-purpose tool for coding, brainstorming, and quick creative tasks. |
| Key Differentiator | Its ethical framework ("Constitutional AI") results in more cautious and thoughtful responses. | An extensive ecosystem of custom GPTs and third-party integrations, plus broader multimodal features such as image generation. |

This side-by-side look really highlights the different philosophies. Claude is geared towards depth and quality of interaction, while ChatGPT focuses on breadth and capability.

Comparing the Core AI Philosophies

To really get to the bottom of the Claude vs ChatGPT debate, you have to look past the surface-level features and understand what makes these models tick. Their core design philosophies are what shape their personalities, their strengths, and their quirks.

Anthropic, the creators of Claude, was started by a group of former OpenAI researchers. Their mission from day one was different: build AI that was fundamentally safe, controllable, and transparent. The secret sauce here is a training method they pioneered called Constitutional AI.

Instead of just learning from vast amounts of human feedback, Claude is guided by a "constitution"—a set of principles based on concepts like the UN Universal Declaration of Human Rights. This approach bakes ethical guidelines right into the model's core, aiming to make it helpful, harmless, and honest by design. You can feel this in its output; it often comes across as more cautious, thoughtful, and even a bit reflective.
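To make the idea more concrete, here is a rough sketch of the critique-and-revision loop that Anthropic's Constitutional AI research describes for its supervised training phase: the model drafts an answer, critiques it against a randomly sampled principle, and rewrites it. This is a simplified illustration, not Anthropic's training code. The `model` callable is a hypothetical stand-in for a language model, the principles are paraphrased examples rather than quotes from Claude's constitution, and the later reinforcement-learning phase (where AI feedback trains a preference model) is left out entirely.

```python
import random
from typing import Callable, List

# Hypothetical stand-in for a language model: prompt text in, generated text out.
Model = Callable[[str], str]

# Illustrative principles, paraphrased for this sketch; not quoted from Claude's constitution.
PRINCIPLES: List[str] = [
    "Point out any ways the response is harmful, unethical, or dishonest.",
    "Point out any ways the response could be more helpful without causing harm.",
    "Point out any ways the response fails to respect human rights or dignity.",
]


def critique_and_revise(model: Model, prompt: str, rounds: int = 2, seed: int = 0) -> str:
    """Draft an answer, then repeatedly critique and revise it against sampled principles."""
    rng = random.Random(seed)  # seeded so the principle sampling is reproducible
    response = model(prompt)  # initial draft
    for _ in range(rounds):
        principle = rng.choice(PRINCIPLES)
        # Ask the model to critique its own answer against one principle...
        critique = model(
            f"Prompt: {prompt}\nResponse: {response}\nCritique request: {principle}"
        )
        # ...then to rewrite the answer so it addresses that critique.
        response = model(
            f"Prompt: {prompt}\nResponse: {response}\nCritique: {critique}\n"
            "Rewrite the response to address the critique."
        )
    return response  # in training, this final revision becomes a fine-tuning target
```

In the research, the revised answers are collected as fine-tuning data, so this loop shapes the model during training rather than running on each chat request.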

Claude and Its Constitutional Approach

Claude's distinct "personality" is a direct result of this ethical framework. It’s built to be more of a collaborative partner, especially for nuanced tasks like writing, editing, and deep analysis where understanding context is everything.

You can see its constitutional training shine through in a few key ways:

- More Expressive Language: Claude's writing often feels more human. It has a knack for avoiding those tell-tale robotic phrases and can slip into different tones and styles with surprising authenticity.

- Built-in Ethical Guardrails: You'll find that Claude is quick to decline requests that sit in a grey area, and it's usually good about explaining why. This transparency makes it a trustworthy choice for professional settings where brand safety is a concern.

- Focus on Depth: The model really excels when you throw long, complex documents at it. It can summarize dense research papers or give detailed feedback on a 50-page report without losing the plot.

The big idea with Constitutional AI is that the model's values aren't just a layer added on top; they're woven into its very fabric. This creates a much more predictable and reliable tool, which is a massive advantage for professional use.

This safety-first design explains why Claude can sometimes feel a bit more "reserved" than ChatGPT. That's not a flaw; it's a feature. For anyone who prioritizes reliability, ethical consistency, and high-quality, natural-sounding prose, Claude is an outstanding choice.

ChatGPT and the Pursuit of Capability

OpenAI's philosophy with ChatGPT, on the other hand, has always been about pushing the absolute limits of what AI can do. Their strategy is centered on massive scale to build a model with powerful, general-purpose intelligence that can tackle just about any task you throw at it. While safety is a huge priority, the primary driver has always been to create a universally competent AI.

This focus on raw capability is obvious when you look at ChatGPT's feature set. It’s a true powerhouse, engineered for speed, versatility, and deep integration into other workflows.

Here’s what that philosophy looks like in practice:

- Broad Functionality: ChatGPT is the Swiss Army knife of AI. It writes code, generates images with DALL-E, analyzes data, browses the web—it's designed to be an all-in-one assistant.

- Rapid Iteration: OpenAI is constantly shipping updates and rolling out new features. Their strategy is one of relentless innovation, always pushing to stay ahead in the market.

- Extensive Integrations: The GPT Store and a powerful API have spawned a massive ecosystem of custom GPTs and third-party tools, dramatically extending what you can do with the model.

This relentless pursuit of capability is why ChatGPT can feel more direct and to the point. It’s optimized to give you a functional answer fast, making it the go-to for developers, marketers, and anyone who needs a powerful, do-it-all toolkit for a huge variety of applications.

AI Performance in Real-World Scenarios

Specs on paper are one thing, but how these models perform on the tasks you actually care about is what truly matters. Let's move past the theory and see how Claude and ChatGPT stack up when we put them to work on real-world professional challenges.

We're going to put them head-to-head in four common scenarios: hammering out a business strategy, crafting creative content, writing code, and analyzing dense documents. The differences in their outputs might seem subtle at first, but they reveal a lot about which tool is the right fit for your specific workflow.
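If you want to run a comparison like this yourself, the simplest route is to send the identical prompt to both providers' APIs. The sketch below assumes the official `anthropic` and `openai` Python SDKs are installed and that `ANTHROPIC_API_KEY` and `OPENAI_API_KEY` are set; the model IDs are examples that may since have been superseded. API responses can also differ from what you see in the Claude and ChatGPT apps, which may add their own system prompts and features.

```python
from typing import Callable, Dict


def ask_claude(prompt: str, model: str = "claude-3-opus-20240229") -> str:
    """Send one user message to Claude via Anthropic's Messages API."""
    import anthropic  # lazy import: only needed when the function is actually called

    client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment
    message = client.messages.create(
        model=model,
        max_tokens=1024,  # the Messages API requires an explicit output cap
        messages=[{"role": "user", "content": prompt}],
    )
    return message.content[0].text  # first content block holds the text reply


def ask_chatgpt(prompt: str, model: str = "gpt-4-turbo") -> str:
    """Send one user message to OpenAI's Chat Completions API."""
    from openai import OpenAI  # lazy import: only needed when the function is actually called

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content


def compare(prompt: str, askers: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Run the same prompt through each named model function and collect the answers."""
    return {name: ask(prompt) for name, ask in askers.items()}
```

Calling `compare(prompt, {"Claude": ask_claude, "ChatGPT": ask_chatgpt})` returns both answers in one dict, ready to read side by side. Because `compare` accepts any callables, you can swap in other model IDs or providers without changing it.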

Complex Reasoning and Strategy

When you're trying to map out a high-level strategy, the gap between a decent answer and a great one comes down to nuance and structure. To test this, we gave both models a fairly demanding business prompt.

Prompt: "Develop a go-to-market strategy for a new B2B SaaS product that uses AI to automate customer support tickets for small e-commerce businesses. Include target audience, key messaging, channel strategy, and a 3-month launch plan."

ChatGPT-4 produced a solid, actionable framework. Its response was logically laid out, ticking every box in the prompt with clean, clear bullet points. It felt like a well-organized checklist—incredibly efficient and perfect for a team that's ready to hit the ground running.

Claude 3 Opus, on the other hand, gave a more narrative, almost consultative, response. It didn't just list recommendations; it framed the strategy within the broader market, explaining the why behind each move. For example, instead of just listing "SEO" as a channel, it explained why a content-led strategy would be crucial for building long-term trust with skeptical small business owners.

Key Insight: ChatGPT is your go-to for structured, tactical plans that you can act on immediately. Claude feels more like a strategic partner, helping you brainstorm and understand the underlying dynamics of the market before you commit.

Creative and Long-Form Content

For anyone who writes for a living, an AI's knack for generating natural, engaging text is everything. We tested both on their creative writing chops with a blog post prompt.

Prompt: "Write the introductory 500 words of a blog post titled 'The Future of Remote Work is Asynchronous.' Adopt a thoughtful, slightly provocative tone aimed at tech leaders."

This is where Claude really started to shine. Its output was fluid, capturing the requested tone perfectly with a distinct, human-like style. The prose felt original, skillfully avoiding those tell-tale AI phrases and hooking the reader from the very first sentence. It felt less like a machine's output and more like an essay from a seasoned thought leader.

ChatGPT's response was well-organized and informative, but it didn't have the same creative flair. The tone was a bit more formal and academic, and it leaned on familiar openings like "In today's evolving landscape..." While factually sound, the writing required a heavier hand in editing to inject a real voice and personality.

Whichever model you use, keep an eye on accuracy. Language models sometimes produce confident-sounding but incorrect information, a problem known as hallucination, and getting reliable outputs means knowing how to steer the AI correctly. For anyone looking to sharpen their skills, our guide on how to reduce hallucinations in LLM outputs offers some practical techniques.

Code Generation and Troubleshooting

For developers, a good AI assistant doesn't just write code; it helps you debug and solve real problems. We threw a common coding challenge at them to see how they'd handle it.

Prompt: "I have a Python script that scrapes data from a website, but it's failing on pages that use JavaScript to load content. Write a function using Selenium to handle dynamic content, and explain the key steps."

Both models nailed the request and produced working code. Their approaches, however, were quite different.

- ChatGPT's Approach: It got straight to the point, delivering a clean, efficient code block almost instantly. The explanation that followed was a concise, step-by-step breakdown of how the Selenium WebDriver works. The focus was clearly on speed and accuracy.

- Claude's Approach: Claude also provided the correct code but took the time to weave more detailed comments directly into the code itself. Its explanation went deeper, covering potential edge cases like handling timeouts and different ways to find elements—incredibly useful for a developer who might be learning the ropes.

For experienced devs who just need a correct code snippet fast, ChatGPT is often the quicker tool. But if you're troubleshooting a tricky issue or trying to learn, Claude’s more educational, context-rich approach makes it a far better assistant.
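For reference, here is a minimal sketch of the kind of function the prompt asks for. It is our own illustration, not either model's verbatim output. It assumes Selenium 4 and a local Chrome install; the function name, the CSS-selector argument, and the 10-second default timeout are hypothetical choices. The key step is the explicit wait: instead of reading the page immediately after `driver.get()`, it blocks until the JavaScript-rendered elements exist or the timeout expires.

```python
from typing import List


def fetch_rendered_text(url: str, css_selector: str, timeout: float = 10) -> List[str]:
    """Return the text of elements matching css_selector once JavaScript has rendered them.

    Returns an empty list if no matching element appears within `timeout` seconds.
    """
    # Imported inside the function so this sketch can be loaded without Selenium installed.
    from selenium import webdriver
    from selenium.common.exceptions import TimeoutException
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")  # run Chrome without opening a window
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Explicit wait: poll until at least one matching element is present in the DOM.
        elements = WebDriverWait(driver, timeout).until(
            EC.presence_of_all_elements_located((By.CSS_SELECTOR, css_selector))
        )
        return [element.text for element in elements]
    except TimeoutException:
        return []  # content never appeared; the caller decides whether to retry
    finally:
        driver.quit()  # always release the browser process, even on errors
```

The `finally` block matters for long scraping jobs: without `driver.quit()`, every failed page would leave a headless Chrome process running in the background.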

Summarizing Dense Documents

Plowing through long, technical documents is a classic time-sink for researchers, analysts, and legal pros. We tested how well each model could distill the key takeaways from a dense, 50-page academic paper on machine learning.

Prompt: "Summarize the key findings, methodology, and limitations of the attached research paper. Extract the top three most significant conclusions and present them as bullet points."

A 50-page paper fits comfortably within both models' context windows, so the differences here came down to how well each model synthesized the material.

Claude produced a summary that was exceptionally detailed and coherent. It didn't just pull out keywords; it accurately identified the nuanced arguments running through the paper. Its analysis of the study's limitations was particularly sharp, demonstrating a genuine comprehension of the material.

ChatGPT also delivered a decent summary, but it was much more high-level. It successfully extracted the main points, but it sometimes missed the subtle threads connecting different sections. The bullet points were accurate but lacked the rich contextual depth that Claude provided.

Both models do a good job if all you need is a quick overview. But for deep, nuanced analysis where understanding subtle arguments is the whole point, Claude's ability to process and synthesize huge amounts of information gives it a clear edge.

How Each AI Handles Your Data

It’s easy to get caught up in the quality of AI-generated text, but what's happening behind the scenes is just as important. The technical nuts and bolts of Claude and ChatGPT really shape how you can use them, especially when you’re dealing with serious, professional work. Things like context windows and data privacy aren't just jargon—they determine which tool fits best into your daily workflow.

These backend details can make or break the user experience, affecting everything from speed to security. Let’s dig into what these technical differences actually mean for you.

The Critical Role of the Context Window

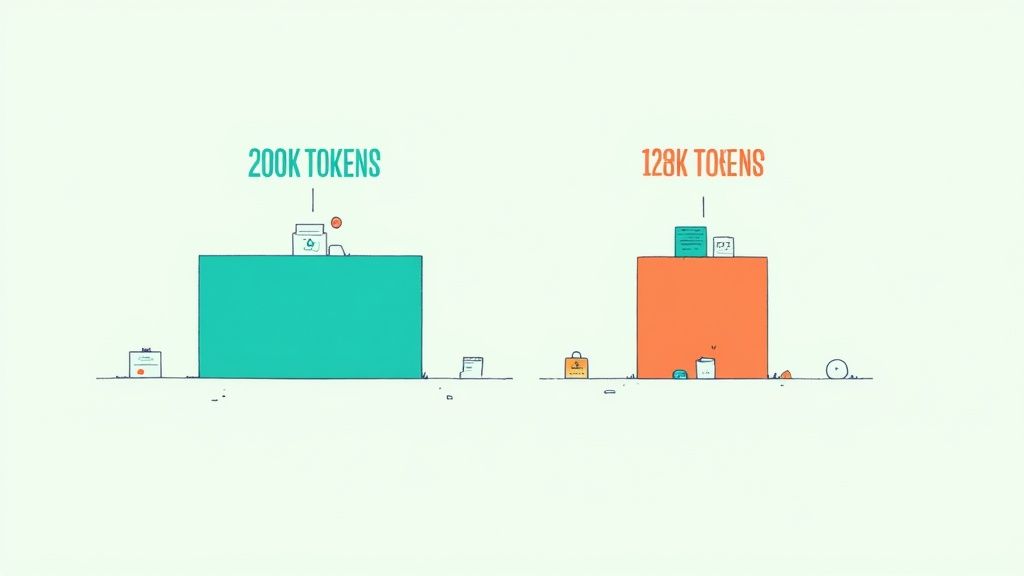

Think of an AI's context window as its short-term memory. It's the total amount of information the model can keep in mind during a single conversation, measured in "tokens" (which are basically small pieces of words). A bigger window means the AI can digest more information at once without forgetting what you told it at the beginning.

Here’s how the flagship models compare:

- Claude 3 Opus: Comes with a massive 200,000 token context window. That's about 150,000 words, enough to analyze a typical full-length novel in one pass.

- ChatGPT-4 Turbo: Offers a very respectable 128,000 token context window. This handles roughly 100,000 words, which is plenty for most long-form professional documents like detailed project briefs or hefty reports.
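A quick way to check whether a document will fit is to convert its word count into an estimated token count. The sketch below uses the ratio implied by the figures above (200,000 tokens ≈ 150,000 words, or about 0.75 words per token). That ratio is a rule of thumb for English prose, not an exact tokenizer count, and the 4,000-token reserve for the model's reply is an assumed value.

```python
import math

# Context window sizes quoted in this article, in tokens.
CONTEXT_WINDOWS = {"Claude 3 Opus": 200_000, "GPT-4 Turbo": 128_000}

# Rule of thumb implied by "200K tokens ~ 150K words"; real tokenizers vary with the text.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(word_count: int) -> int:
    """Roughly convert a word count into a token count, rounding up."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits_in_context(word_count: int, model: str, reserve_for_output: int = 4_000) -> bool:
    """True if the estimated prompt plus an assumed reply budget fits the model's window."""
    return estimate_tokens(word_count) + reserve_for_output <= CONTEXT_WINDOWS[model]


if __name__ == "__main__":
    for words in (90_000, 140_000):
        verdicts = {m: fits_in_context(words, m) for m in CONTEXT_WINDOWS}
        print(f"{words:,} words -> {verdicts}")
```

By this estimate, a 90,000-word manuscript fits in either window, while a 140,000-word one fits only in Claude 3 Opus's.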

This isn't just about big numbers; it has real-world consequences. Say you're a developer trying to refactor a complex codebase. With Claude, you can drop all the relevant files into the prompt at once. The AI sees the big picture, understands how different modules interact, and gives you a much more cohesive solution. With ChatGPT, you might have to feed it the code in smaller chunks, which risks losing crucial context and could lead to mistakes.
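When a codebase is too large for one prompt, the usual workaround is to split it into overlapping chunks so each piece carries a little of the context around it. The sketch below splits on lines, which keeps code formatting intact, and uses line counts as a simple stand-in for token limits. The function name and parameters are hypothetical; a production pipeline would measure chunks with the provider's tokenizer instead.

```python
from typing import List


def chunk_lines(text: str, max_lines: int, overlap: int = 0) -> List[str]:
    """Split text into chunks of at most max_lines lines.

    The last `overlap` lines of each chunk are repeated at the start of the next,
    so a function or class that straddles a boundary appears in both chunks.
    """
    if max_lines <= 0 or not 0 <= overlap < max_lines:
        raise ValueError("need max_lines > 0 and 0 <= overlap < max_lines")
    lines = text.splitlines()
    step = max_lines - overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(lines), step):
        chunks.append("\n".join(lines[start:start + max_lines]))
        if start + max_lines >= len(lines):
            break  # this chunk already reaches the end of the text
    return chunks
```

Overlap softens, but does not remove, the problem described above: a dependency between two distant files can still land in different chunks, which is exactly where a larger window helps.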

For tasks that demand deep analysis of huge documents—think legal contract reviews, summarizing academic research, or dissecting financial reports—Claude's larger context window gives it a clear, undeniable edge.

Comparing Response Latency

Latency—how long it takes an AI to give you an answer—is a big deal for user experience. Both models are impressively quick, but their speed can feel different depending on what you’re asking.

For short, simple questions, ChatGPT often feels faster. Its architecture seems tuned for quick back-and-forth conversations, brainstorming sessions, and generating small snippets of code or text. It feels incredibly responsive for those day-to-day tasks.

But when you get into more complex reasoning or throw a huge document at it, the lines blur. Claude's ability to process everything in one go can actually make it more efficient for those deep-dive tasks since it isn't juggling smaller pieces of information. For most people, though, both models are so fast that latency won't be the deciding factor unless you’re building a real-time application with their APIs.
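If you are building on the APIs, the number that usually matters most for perceived speed is time to first token: how long a user waits before text starts appearing. Here is a minimal sketch for measuring it, assuming you already have an iterator of text chunks from a streaming API call (each SDK exposes its stream differently, so check its documentation). The function name and return shape are our own choices.

```python
import time
from typing import Iterable, Optional, Tuple


def measure_stream(chunks: Iterable[str], start: Optional[float] = None) -> Tuple[str, float, float]:
    """Consume a stream of text chunks and time it.

    Returns (full_text, seconds_to_first_chunk, total_seconds). Pass
    start=time.perf_counter() captured just before sending the request to
    include connection time; otherwise timing starts when iteration begins.
    """
    if start is None:
        start = time.perf_counter()
    first_chunk_at = None
    parts = []
    for chunk in chunks:
        if first_chunk_at is None:
            first_chunk_at = time.perf_counter() - start
        parts.append(chunk)
    total = time.perf_counter() - start
    # An empty stream has no first chunk; report the total wait instead.
    if first_chunk_at is None:
        first_chunk_at = total
    return "".join(parts), first_chunk_at, total
```

Run the same prompts against each model several times and compare medians rather than single calls, since API response times vary with load.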

Data Privacy and Security Policies

This is a big one. For any business, how your data is handled is arguably the most important consideration. The difference between Anthropic and OpenAI's policies is a major point of separation in the Claude vs ChatGPT debate.

Anthropic's Privacy-First Stance

Anthropic has staked its reputation on being a secure, enterprise-ready platform. Their policy is refreshingly simple: they do not train their models on any data you submit via their API or paid Claude Pro and Team plans. Period. Your proprietary information and business conversations stay yours. This makes Claude a much more comfortable choice for any organization handling sensitive data, like:

- Confidential legal documents

- Proprietary source code

- Financial records

- Private customer data

OpenAI's Data Usage Policy

OpenAI's approach is a bit more complicated. They guarantee they won't use data from their API or the ChatGPT Team and Enterprise plans to train their models. However, conversations on the consumer ChatGPT Plus and free versions can be used for training unless you proactively opt out in your settings.

While OpenAI certainly has strong security, training on consumer conversations by default is a risk for businesses. Any employee using a personal account for work could unknowingly feed sensitive company information into the model's training data. It's a crucial distinction for any organization where data confidentiality is a top priority.

A Practical Breakdown of Pricing and API Costs

When you're trying to pick between Claude and ChatGPT, the decision often boils down to your wallet and what you're actually trying to accomplish. Both platforms have free versions, paid subscriptions for individuals, and pay-as-you-go API access for developers. But where they really differ is in the value you get for your money.

For everyday users, both Claude Pro and ChatGPT Plus hover around the $20 per month mark. That price gets you access to their best models, higher usage caps, and quicker responses. The real fork in the road is the feature set. ChatGPT Plus, for instance, throws in extras like DALL-E image generation, which makes it a more well-rounded creative tool.

Comparing Consumer Subscriptions

Choosing a Pro plan really depends on what your day-to-day work looks like. If you're constantly analyzing, writing, or summarizing huge documents, Claude Pro is a powerhouse. Its massive context window and natural, fluid writing style are hard to beat for text-heavy tasks.

On the other hand, if you need a jack-of-all-trades assistant for everything from brainstorming and coding to whipping up a quick image for a presentation, ChatGPT Plus gives you a wider array of tools for the same price. It's the classic specialist versus generalist dilemma.

A Deep Dive into API Pricing

For developers and businesses building on top of these models, the real conversation starts and ends with API costs. Everything is measured in "tokens," which are just small chunks of words. You pay for "input" tokens (what you send to the model) and "output" tokens (what it sends back). Lower per-token costs mean you can scale your application without breaking the bank. For a more detailed look at how this works, our comprehensive guide to Claude's pricing models breaks it all down.
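Put as a formula, the cost of a workload is each token count divided by one million, multiplied by the matching per-million rate, where $T_{\text{in}}$ and $T_{\text{out}}$ are the input and output token counts and $p_{\text{in}}$ and $p_{\text{out}}$ are the per-million prices:

$$
\text{cost (USD)} = \frac{T_{\text{in}}}{10^{6}}\, p_{\text{in}} + \frac{T_{\text{out}}}{10^{6}}\, p_{\text{out}}
$$

For example, 10,000 input tokens and 1,000 output tokens on Claude 3 Opus, at the $15 and $75 rates listed in the pricing table, cost $0.15 + $0.075 = $0.225.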

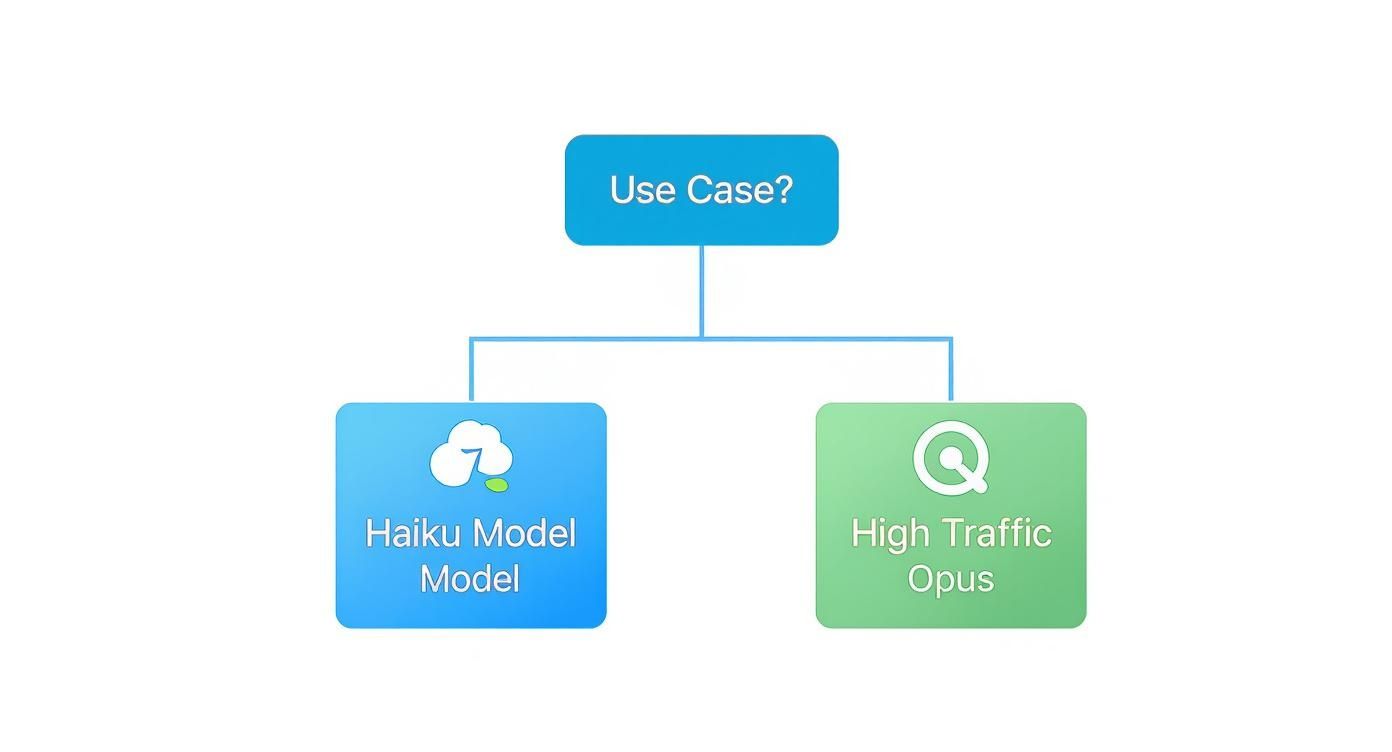

Both Anthropic and OpenAI have tiered pricing. Lighter, faster models like Claude 3 Haiku cost far less, while heavy-hitters like Claude 3 Opus come with a premium price tag; GPT-4o sits in between and undercuts the older GPT-4 Turbo. It's always a good idea to look at various pricing models in the industry to see how they stack up.

Key Takeaway: For high-volume, simpler tasks like customer service bots, a cheaper model is the smart move. But if your application needs to perform complex reasoning or in-depth analysis, you have to invest in a top-tier model like Opus, even if it costs more.

The table below gives you a clear, numbers-first look at the cost to process one million tokens across the most popular models. This is absolutely essential for forecasting your budget when you're building out AI features.

API Pricing Comparison Per Million Tokens (USD)

Here's a cost analysis for developers comparing the API pricing of the Claude 3 models with OpenAI's GPT-4o and GPT-4 Turbo.

| AI Model | Input Cost / 1M Tokens | Output Cost / 1M Tokens |
|---|---|---|
| Claude 3 Haiku | $0.25 | $1.25 |
| Claude 3 Sonnet | $3.00 | $15.00 |
| Claude 3 Opus | $15.00 | $75.00 |
| GPT-4o | $5.00 | $15.00 |
| GPT-4 Turbo | $10.00 | $30.00 |
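To turn these rates into a budget, multiply each model's prices by your expected token volumes. The sketch below does that for a hypothetical workload of 50 million input tokens and 10 million output tokens per month. The workload figures are invented for illustration, and the prices are copied from the table above, so update them if the providers change their rates.

```python
# Per-million-token prices in USD from the table above: (input, output).
PRICES = {
    "Claude 3 Haiku": (0.25, 1.25),
    "Claude 3 Sonnet": (3.00, 15.00),
    "Claude 3 Opus": (15.00, 75.00),
    "GPT-4o": (5.00, 15.00),
    "GPT-4 Turbo": (10.00, 30.00),
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD: each token count times its per-million rate, divided by one million."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical monthly workload, for illustration only.
    INPUT_TOKENS, OUTPUT_TOKENS = 50_000_000, 10_000_000
    ranked = sorted(PRICES, key=lambda m: workload_cost(m, INPUT_TOKENS, OUTPUT_TOKENS))
    for model in ranked:
        print(f"{model:16} ${workload_cost(model, INPUT_TOKENS, OUTPUT_TOKENS):>9,.2f}")
```

For that hypothetical workload, the script ranks Claude 3 Haiku at $25.00, Claude 3 Sonnet at $300.00, GPT-4o at $400.00, GPT-4 Turbo at $800.00, and Claude 3 Opus at $1,500.00: a 60x spread between the cheapest and most expensive options.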

This comparison brings a critical point in the Claude vs ChatGPT debate into sharp focus. Anthropic's Claude 3 Haiku is incredibly cheap for input-heavy jobs, which makes it a fantastic choice for apps that need to process a lot of user text. Meanwhile, OpenAI's GPT-4o hits a sweet spot between raw power and affordability, making it a go-to, all-around option for many developers.

Which AI Should You Choose for Your Role

Deciding between Claude and ChatGPT isn't about finding the "best" AI overall. It’s about picking the right tool for the job you need to do. The best choice really boils down to the specific demands of your daily work.

When you are choosing a model for an application, weigh task complexity against volume and cost.

A model like Claude 3 Haiku is a strong fit for cost-sensitive, high-volume work with simpler tasks. On the other hand, if your application depends on complex reasoning, it makes sense to invest in a premium model like Opus, even at a higher price per token.

For Content Strategists and Writers

If your world is built on words, Claude is the definitive choice. Its knack for generating natural, nuanced prose that feels genuinely human is second to none. It’s fantastic at adopting specific tones and styles, making it an incredible partner for drafting long-form content, editing tricky documents, and keeping your brand voice consistent.

Thanks to its massive context window, Claude can digest entire reports or manuscripts in one go, offering insightful feedback without losing track of the details. ChatGPT is certainly competent, but its writing can sometimes come off as a bit generic, forcing you to do more heavy lifting to inject personality. For creative pros where quality and authenticity are everything, Claude is simply the better tool.

Recommendation: Choose Claude for its expressive language, superior long-form content generation, and ability to handle deep textual analysis with nuance.

For Developers and Data Scientists

When it comes to coding and data analysis, ChatGPT often holds the edge. Its real strengths are speed, versatility, and a vast ecosystem of integrations. It spits out clean, functional code snippets in a flash and is a solid partner for brainstorming logic or cracking common programming puzzles.

That said, Claude has become a serious contender, especially for more complex projects. Anthropic has clearly focused on coding, creating features that can analyze entire codebases and help with debugging in a way that’s more contextual and even educational. Our detailed look at Claude's coding capabilities shows just how much it has grown. Still, for developers who need quick, all-purpose help and access to tons of tools, ChatGPT is usually the first stop.

- ChatGPT's Strengths: Fast code generation, a huge range of integrations, and strong performance on standard programming tasks.

- Claude's Strengths: Deep codebase analysis, helpful educational explanations, and better handling of large, complex projects.

Recommendation: Start with ChatGPT for its all-around utility and speed, but give Claude a serious look for in-depth codebase analysis and untangling complex systems.

For Business Analysts and Researchers

For analysts and researchers who spend their days digging through dense information, Claude is a much more powerful ally. Its 200K token context window is a major advantage here: you can drop in massive financial reports, long-winded market studies, or a handful of academic papers all at once and get back a coherent, detailed synthesis.

Claude has a real talent for spotting subtle trends, connections, and limitations buried within large documents. ChatGPT can summarize well enough, but it's more likely to miss those nuanced details when faced with extremely long texts. For any role where the main job is to pull actionable insights from mountains of information, Claude’s analytical depth gives it a clear advantage.

A Few Lingering Questions

When you're trying to pick the right tool for the job, a few practical questions always pop up. Let's tackle some of the most common ones that come up when comparing Claude and ChatGPT head-to-head.

This isn't about hype; it's about what works in the real world. Here are the straight answers to help you lock in your decision.

So, Who’s the Better Creative Writer?

For most creative writing, Claude tends to pull ahead. People often describe its output as more natural and less mechanical, which is a huge plus when you're drafting anything that needs a real voice—think blog posts, scripts, or marketing copy.

ChatGPT is no slouch, but it can sometimes lean into more predictable, generic phrasing. I've found that Claude is just better at picking up on subtle tones and producing writing that feels like it came from a person, not a machine. That usually means less time spent editing and more time creating.

The bottom line: If your main focus is producing high-quality, nuanced writing with a distinct style, Claude is probably your best bet.

Can I Really Feed Claude an Entire Book?

Yes, you absolutely can, and this is where Claude truly shines. The 200,000 token context window in Claude 3 Opus is a game-changer. That's about 150,000 words, which means you can drop in a full novel, a massive research paper, or even an entire codebase and ask it questions.

This massive memory allows it to perform incredibly deep analysis without forgetting what was said in chapter one. ChatGPT's 128K context window is impressive in its own right, but for anyone working with truly massive documents, Claude’s bigger capacity is a major advantage.

What’s the Real Difference on Privacy?

The biggest distinction comes down to how your data is treated. Anthropic's policy for Claude Pro and API users is straightforward: they do not use your data to train their models. This is a massive selling point for any business or individual handling sensitive information.

On the other hand, OpenAI might use your conversations from the consumer versions of ChatGPT to train its models unless you go into your settings and opt out. If data confidentiality is a non-negotiable for you or your organization, Claude’s privacy-first stance gives it a clear edge.

Ready to get more out of your AI conversations? Promptaa helps you build, organize, and perfect your prompts to get consistently better results from any model. Start building your ultimate prompt library today.