Applio: The Ultimate Guide to AI Voice Cloning

Ever heard of AI voice cloning? Applio is one of the most popular open-source platforms out there for doing just that. It uses a powerful technique called Retrieval-based Voice Conversion (RVC) to analyze the unique qualities of a voice from audio clips and then reproduce it with stunning accuracy.

What Is Applio and How Does It Work?

Think of Applio as a digital workshop for your voice. It lets you take an audio recording of someone speaking or singing, feed it to the AI, and create a "voice model." Once that model is trained, you can use it to make any other audio sound as if it were spoken or sung by that original voice.

This isn't just some novelty voice-changing app. It’s a sophisticated system that gives you the keys to the entire process. You get to train your own custom AI voice models from scratch, which means you have total creative control over the final sound.

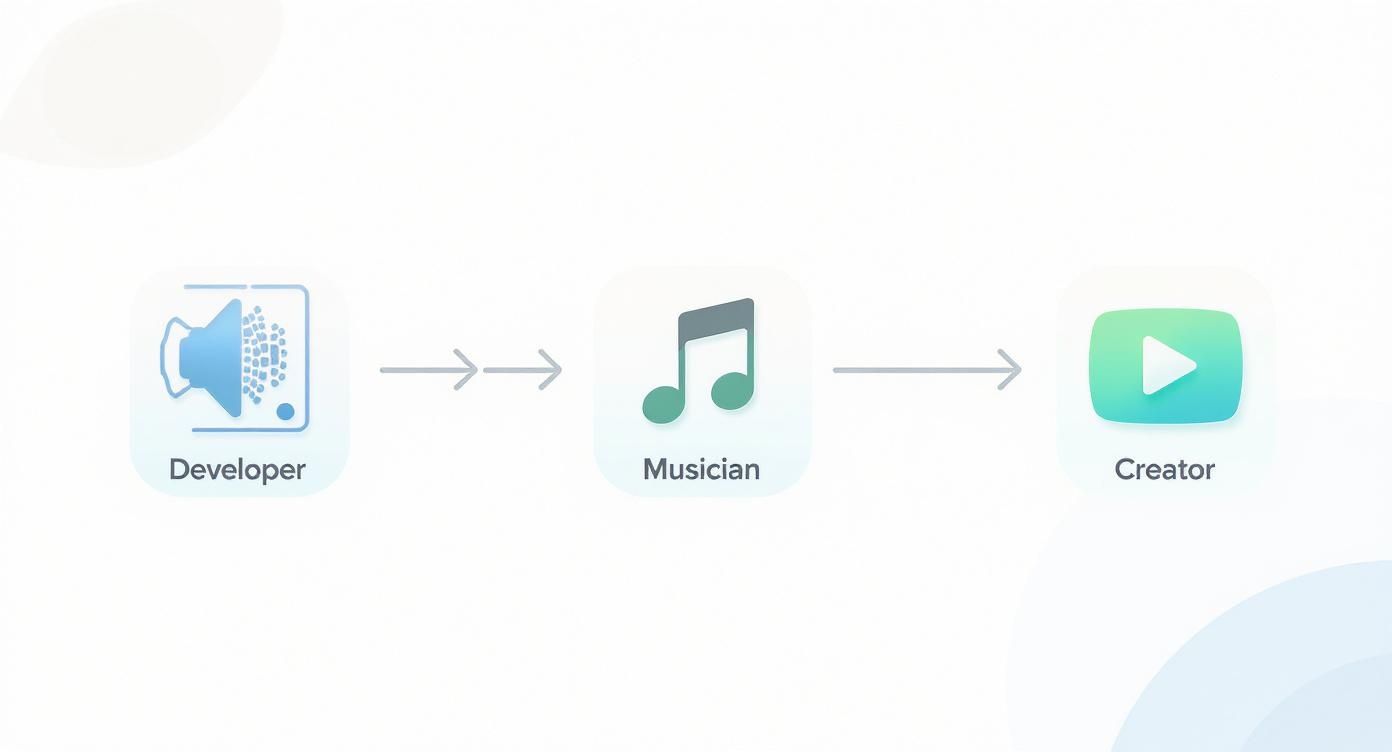

This level of control is why it's a go-to tool for creators. Musicians can experiment with new vocal textures, game developers can craft unique character voices, and podcasters can produce consistent voiceovers. Applio hands you the tools to build the exact voice you need, without being tied to a pre-packaged, subscription-based service.

The Power of Open-Source AI

The fact that Applio is open-source is a huge part of its appeal. This isn't just a technical detail; it has real, practical benefits for anyone who uses it.

- Community-Driven Development: A dedicated community of developers and fans from all over the world is constantly working to make it better. They add new features, squash bugs, and push the technology forward.

- Complete Customization: You have full access to the source code. This means you can tweak it, modify it, and even build it into your own custom projects if you have the know-how.

- It’s Free to Use: Applio itself doesn't cost anything. You won't find any subscription fees here. Your only cost is the hardware (like a good GPU) or cloud computing service needed to run the training process.

At its heart, Applio democratizes voice cloning technology. It takes a complex process once reserved for specialized labs and puts it directly into the hands of individual creators and developers, fostering innovation and creative expression.

Applio's clean interface neatly organizes all its functions into separate tabs.

The layout is designed to be straightforward: you can easily move between training a new model and converting existing audio files. This design makes the technology feel much less intimidating, even if you're not a programmer.

The entire platform is built around a logical workflow, guiding you from preparing your audio data all the way to generating the final voice output. In this guide, we'll walk through each of those steps to show you how to make this powerful tool a practical part of your creative process.

Applio at a Glance: Key Features and Benefits

To give you a clearer picture, here’s a quick breakdown of what makes Applio such a compelling tool in the AI voice space.

| Feature | Description | Primary Benefit |
|---|---|---|
| RVC v2 Models | Utilizes the latest Retrieval-based Voice Conversion technology. | Achieves high-fidelity, realistic voice clones with fewer artifacts. |
| Open-Source | The software is free and its code is publicly available. | No subscription fees, and allows for community-driven improvements and deep customization. |
| Local Installation | Can be run on your own computer hardware. | You have complete control over your data and models, ensuring privacy and security. |
| Model Sharing | A large community shares pre-trained voice models. | Access thousands of voices created by others, saving you time on training. |
| TTS Integration | Includes text-to-speech capabilities. | Generate spoken audio directly from text using your custom-trained voice models. |
| TensorBoard Support | Built-in visualization tool for monitoring training progress. | Helps you understand how well your model is learning and when to stop training for best results. |

This combination of powerful technology, community support, and user control is what really sets Applio apart. It’s a tool built for creators who want to explore the frontiers of audio production without limits.

Understanding Applio's Core Technology and Features

To really get what makes Applio tick, we have to pop the hood and look at the engine: Retrieval-based Voice Conversion (RVC). This isn't just a simple voice filter; it's a far more sophisticated way of capturing the very soul of a voice.

Think of it like a master painter creating a portrait. They don't just trace a photo; they study the person's unique expressions, the way they hold their head, and the personality in their eyes. RVC does something similar for audio, analyzing a voice's core characteristics—its pitch, tone, and timbre—to build a detailed acoustic profile.

When you feed new audio into the RVC model, it digs through that learned profile, "retrieves" the most fitting vocal traits, and skillfully applies them. The result is a voice conversion that sounds incredibly natural and authentic, sidestepping the robotic, tinny quality that you hear in a lot of other tools. If you're curious about the broader tech behind this, this comprehensive guide to AI celebrity voice generator technology is a great place to start.

The Magic Behind RVC: Vector Embeddings

So, how does this actually work? At its heart, RVC translates the unique qualities of a voice into a series of numbers called vector embeddings. You can think of these embeddings as a voice's unique digital fingerprint, captured in a mathematical language the AI can understand and work with.

During the training phase, Applio pulls these distinct features from your audio files and plots them in a kind of multi-dimensional map. The cleaner and clearer your audio, the more accurate that "fingerprint" becomes. For a closer look at this foundational AI concept, check out our guide on what embeddings are.

This is precisely why having a clean dataset is non-negotiable. Any background hum, echo, or unwanted noise gets baked right into that vocal fingerprint, which will inevitably show up as glitches or artifacts in your final output.
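To make the "retrieve" idea concrete, here is a deliberately tiny Python sketch. It is not Applio's actual code. It assumes each frame of audio has already been turned into a feature vector and keeps the training voice's vectors in a plain list (real RVC implementations search far higher-dimensional features with a dedicated nearest-neighbour index). It finds the stored vectors closest to an incoming frame and blends their average back in. The function names and the `index_rate` blending weight are illustrative assumptions.

```python
import math


def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def retrieve_and_blend(query, index, k=2, index_rate=0.75):
    """Toy retrieval step (hypothetical helper, not Applio's API).

    query      -- feature vector for one frame of the new input audio
    index      -- list of feature vectors learned from the training voice
    k          -- how many nearest stored vectors to retrieve
    index_rate -- 0.0 keeps the input features, 1.0 uses only retrieved ones
    """
    # Linear scan: rank every stored training vector by closeness to the query.
    nearest = sorted(index, key=lambda vec: distance(query, vec))[:k]
    # Average the retrieved vectors dimension by dimension.
    retrieved = [sum(vec[i] for vec in nearest) / len(nearest) for i in range(len(query))]
    # Blend the retrieved (target-voice) features with the original input features.
    return [index_rate * r + (1 - index_rate) * q for r, q in zip(retrieved, query)]


training_index = [[0.0, 0.0], [1.0, 1.0], [10.0, 10.0]]
print(retrieve_and_blend([0.2, 0.2], training_index))
```

The blend also shows why a noisy dataset hurts: whatever is captured in the stored vectors is exactly what gets pulled into the converted output.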

Keeping an Eye on Training with TensorBoard

How can you tell if your model is actually learning or just spinning its wheels? Applio has a slick solution for this by plugging directly into TensorBoard, a powerful tool for visualizing what's happening.

Instead of trying to decipher lines of code or complex log files, TensorBoard gives you a live dashboard. It’s like having a heart rate monitor hooked up to your AI as it trains. You can watch key metrics and charts unfold in real-time to see just how well it’s picking up the nuances of the target voice.

This kind of visual feedback is a lifesaver for a few reasons:

- Catching Overfitting: You can spot roughly where your model stops learning and starts simply memorizing the training data, a common issue that kills quality.

- Spotting Problems: If something’s off with your dataset, it often appears as weird spikes or flat lines on the graphs, telling you to investigate.

- Saving Time and Power: By seeing when the model has learned enough, you can stop the process and avoid running your GPU for hours on end, saving both time and electricity.
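TensorBoard draws the curves, but the "has it stopped improving?" judgement can be written down as a simple patience rule. The Python sketch below assumes you already have one loss value per epoch (for example, read off the TensorBoard charts); the function name and the `patience` and `min_delta` defaults are hypothetical choices, not Applio settings. A flat training loss is a hint rather than proof of overfitting, so it is still worth listening to the checkpoints around that point.

```python
def find_plateau_epoch(losses, patience=3, min_delta=0.01):
    """Return the last epoch that meaningfully improved the loss, once `patience`
    epochs in a row fail to beat it by at least `min_delta`; None if still improving."""
    best_loss = float("inf")
    best_epoch = None
    stale_epochs = 0
    for epoch, loss in enumerate(losses, start=1):
        if loss < best_loss - min_delta:
            # Meaningful improvement: remember it and reset the counter.
            best_loss, best_epoch, stale_epochs = loss, epoch, 0
        else:
            # No meaningful improvement this epoch.
            stale_epochs += 1
            if stale_epochs >= patience:
                return best_epoch
    return None


per_epoch_loss = [5.0, 4.0, 3.0, 3.0, 3.1, 3.05]
print(find_plateau_epoch(per_epoch_loss))  # 3
```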

The Power of the Community Model Ecosystem

One of Applio’s best features isn't code at all—it's the community. The platform is built around a central hub where people can upload, share, and download pre-trained voice models. This has created a massive, constantly growing library of voices that are ready to go right out of the box.

This shared ecosystem is a game-changer. It means you don't always have to start from square one. You can find a high-quality model that’s close to what you need and start creating right away, or even use it as a foundation for your own custom training.

This collaborative approach really speeds up experimentation and makes it much easier for newcomers to get started. Whether you need a specific vocal style for a song or a character archetype for a video game, chances are someone in the Applio community has already built something you can use. It turns Applio from a solo tool into a bustling creative marketplace.

Who Uses Applio? Real-World Examples

The technology powering Applio is cool, but where the rubber really meets the road is in its practical, everyday applications. This isn't just a toy for tech geeks; it's a serious tool for creators, developers, and businesses who need custom, high-quality audio without the traditional headaches and high costs.

Let's dive into a few real-world scenarios to see how people are putting Applio to work.

The Indie Game Developer

Picture Alex, an indie game developer pouring heart and soul into a new fantasy RPG. The team is small, the budget even smaller. Hiring a full cast of voice actors for every merchant, guard, and quest-giver is a financial impossibility. Professional voice-over can run into the thousands, and that’s just not in the cards.

This is where Applio completely changes the game.

Instead of stretching the budget thin, Alex hires just two talented actors to record a handful of core character archetypes—the gruff warrior, the wise elder, the sneaky rogue. With that source audio, Alex uses Applio to:

- Create Vocal Variety: Train multiple distinct voice models from the initial recordings, turning two actors into a dozen unique characters.

- Generate Dialogue On-Demand: When the script changes or a new quest is added, Alex can generate new lines that closely match the original actor's voice and tone, for example by converting a quick scratch recording or by using Applio's text-to-speech feature. No need to book another recording session.

- Prototype and Test: Early in development, Alex uses the AI voices as placeholders. This lets the team test the flow of conversations and cinematics long before final audio is locked in.

Suddenly, Alex's game world feels alive and populated with a rich cast of characters—a level of polish that would have been completely out of reach otherwise.

The Experimental Musician

Now let's think about Maya, a musician who produces electronic tracks. She's always hunting for unique sounds and wants to build a track around an ethereal, otherworldly choir. But booking a studio and hiring a vocal ensemble? That's a non-starter.

So, Maya turns to Applio to build her own custom vocal instrument. She records herself singing a few simple scales and melodies, creating a clean dataset of her own voice.

From there, the real magic begins. She trains a model and starts experimenting. By tweaking the pitch and tone, she can transform her single voice into a full, dynamic choir. She generates soaring soprano lines and deep, resonant bass harmonies, all from her original performance. It gives her music a signature sound that is 100% hers.

Applio lets musicians like Maya move beyond generic sample packs. It essentially turns the human voice into a new kind of synthesizer, opening up a whole new world of creative expression.

The Niche YouTuber

Finally, meet Ben. He runs a YouTube channel creating deep-dive documentaries on historical events. For his brand, consistency is everything. But his recording space isn't a professional studio. Background noise sometimes ruins a take, or his energy level varies from one day to the next, creating a jarring experience for his viewers.

Ben now uses Applio to create a flawless, consistent voice-over for his channel. He took his best recordings—the ones with perfect audio and delivery—and trained a high-quality model of his own voice.

Now, for every new script, he just feeds the text into Applio and generates the narration. This solves several problems at once. It gets rid of audio imperfections, guarantees a uniform tone across all his videos, and cuts his production time down significantly. He can spend less time re-recording and more time on research and editing.

This kind of workflow isn't just for solo creators. When you have multiple people working on content, learning how to use AI for teams can unlock massive productivity boosts, helping to create a unified brand voice and streamline the entire creative process.

How to Create Your First AI Voice Model

Alright, let's dive in and get our hands dirty. Creating your first AI voice with Applio is actually a pretty exciting process. I'll walk you through everything from the initial setup to hearing that first AI-generated audio clip, making sure each step makes sense along the way.

The first thing to know is that Applio runs right on your own computer, which is great because it gives you total control. The trade-off is that training a voice model takes some serious muscle. You’ll need a decent NVIDIA graphics card (GPU) with at least 8GB of VRAM to get solid results.

But what if your computer isn't a powerhouse? No problem at all. Cloud services like Google Colab or RunPod are fantastic alternatives. They let you "rent" powerful GPUs by the hour, so you can train top-notch models without needing to buy expensive hardware.
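Before committing to a long local training run, you can check which GPU your machine exposes. This Python sketch assumes PyTorch is installed (the script simply reports "no GPU" if the import fails). The helper names are hypothetical, and the roughly 5% slack in `has_enough_vram` is an assumption to allow for cards that report slightly less memory than their advertised size.

```python
def has_enough_vram(total_bytes, min_gib=8.0):
    """True if reported GPU memory is close enough to the min_gib target.

    Drivers usually report a little less than a card's advertised size,
    so we allow roughly 5% slack (an assumption; tune as needed).
    """
    return total_bytes / 1024 ** 3 >= min_gib * 0.95


def detect_cuda_gpu():
    """Return (name, total_memory_bytes) for CUDA device 0, or None."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(0)
    return props.name, props.total_memory


if __name__ == "__main__":
    gpu = detect_cuda_gpu()
    if gpu is None:
        print("No CUDA GPU found; a rented cloud GPU may be the better option.")
    else:
        name, total = gpu
        print(f"{name}: {total / 1024 ** 3:.1f} GiB, meets 8 GiB target: {has_enough_vram(total)}")
```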

Step 1: Curating Your Audio Dataset

This is, without a doubt, the most important part of the entire process. The audio clips you feed the AI—your "dataset"—will determine the quality of the final voice model. It's a classic case of "garbage in, garbage out."

Think of it like this: if you want to teach an AI to sing beautifully, you can't give it a bunch of scratchy, static-filled recordings. Your goal is to gather the cleanest, most consistent audio you possibly can.

Here’s what you should aim for:

- Audio Length: Shoot for a total of 15 to 30 minutes of audio. You can get something recognizable with less, but this is the sweet spot for capturing all the little details of a voice without the training process taking forever.

- Cleanliness is Critical: Your audio has to be pure. That means no background noise, no music, no echo, and definitely no other people talking. Every unwanted sound risks getting baked right into your model.

- Vocal Consistency: Try to use recordings where the tone, pitch, and volume don't jump all over the place. A dataset that goes from a whisper to a shout will just confuse the AI.
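A short script can check the first and last points before you start training. This Python sketch assumes your dataset is a folder of 16-bit PCM `.wav` files. It totals their duration against the 15-minute target and measures each file's average loudness (RMS, in dBFS) so you can spot clips that are much louder or quieter than the rest. The 6 dB spread threshold is an arbitrary assumption, and a loudness check cannot detect background noise, so you still need to listen to your clips.

```python
import math
import sys
import wave
from array import array
from pathlib import Path


def wav_stats(path):
    """Return (duration_seconds, rms_dbfs) for a 16-bit PCM WAV file."""
    with wave.open(str(path), "rb") as wf:
        if wf.getsampwidth() != 2:
            raise ValueError(f"{path}: only 16-bit PCM is handled in this sketch")
        rate = wf.getframerate()
        n_frames = wf.getnframes()
        samples = array("h", wf.readframes(n_frames))
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian
    duration = n_frames / rate
    if not samples:
        return duration, float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    # 0 dBFS corresponds to a full-scale 16-bit signal (32768).
    return duration, (20 * math.log10(rms / 32768) if rms > 0 else float("-inf"))


def audit_dataset(folder, min_minutes=15.0, max_spread_db=6.0):
    """Summarise total length and loudness consistency of every .wav in folder."""
    stats = [wav_stats(p) for p in sorted(Path(folder).glob("*.wav"))]
    total_minutes = sum(d for d, _ in stats) / 60
    levels = [db for _, db in stats if db != float("-inf")]
    spread = max(levels) - min(levels) if levels else 0.0
    return {
        "files": len(stats),
        "total_minutes": total_minutes,
        "enough_audio": total_minutes >= min_minutes,
        "loudness_spread_db": spread,
        "consistent_levels": spread <= max_spread_db,
    }
```

Usage: `print(audit_dataset("my_voice_dataset"))`, where the folder name is a placeholder for wherever your clips live.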

Step 2: Configuring the Training Session

Got your pristine audio files ready? Awesome. Now it's time to load them into Applio and set up the training parameters. This is where you tell the software exactly how to learn from your data.

You’ll start by telling Applio where your dataset folder is. The software will then get to work preprocessing the audio, slicing it into manageable chunks and analyzing its acoustic properties. It's basically doing its homework before the real training begins.
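Slicing is easy to picture in code. The Python sketch below cuts a list of samples into fixed-length, slightly overlapping chunks. The 3-second chunk length and 0.3-second overlap are assumed values for illustration, not necessarily what Applio's preprocessing uses, and real preprocessing does more than this.

```python
def slice_into_chunks(samples, sample_rate, chunk_seconds=3.0, overlap_seconds=0.3):
    """Split samples into chunk_seconds-long pieces that overlap by overlap_seconds.

    The final chunk may be shorter than the rest.
    """
    chunk_len = int(chunk_seconds * sample_rate)
    step = chunk_len - int(overlap_seconds * sample_rate)
    if chunk_len <= 0 or step <= 0:
        raise ValueError("chunk_seconds must be positive and longer than overlap_seconds")
    chunks = []
    start = 0
    while start < len(samples):
        chunks.append(samples[start:start + chunk_len])
        if start + chunk_len >= len(samples):
            break  # this chunk already reached the end of the audio
        start += step
    return chunks


# 10 seconds of dummy audio at a toy sample rate of 10 Hz.
pieces = slice_into_chunks(list(range(100)), sample_rate=10)
print([len(p) for p in pieces])  # [30, 30, 30, 19]
```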

Next, you’ll tweak a few key settings. The defaults are a pretty good starting point, but it helps to know what’s what. You’ll give your model a name, pick the RVC version (v2 is the go-to for quality), and decide how many "epochs" to train for. An epoch is just one full pass where the AI studies your entire dataset from start to finish.

Step 3: Launching and Monitoring the Training

Once everything is set, you hit the "start" button. This is where your GPU really earns its keep, cycling through the audio data over and over, getting a little bit smarter about the voice with each epoch.

Be prepared—this isn't a fast process. It can take several hours, depending on your hardware and how much audio you're using. This is where Applio's integration with TensorBoard becomes a lifesaver. It’s a dashboard that gives you a live look at how the training is going.

By keeping an eye on the graphs in TensorBoard, you can actually see the model improving in real time. This helps you find that perfect "Goldilocks zone"—where the voice sounds great but hasn't been over-trained to the point of sounding robotic. It’s a huge time-saver.


Step 4: Testing Your New Voice Model

When the training is finally done, you'll have two important files: a .pth file (the trained model itself) and a .index file (a feature index the model searches during conversion to help the output match the target voice more closely). Together, these make up your brand-new AI voice. Now for the fun part: putting it to the test.

Head over to the "Inference" or "Voice Conversion" tab in Applio. Here's how simple it is to use your model:

- Select Your Model: Just choose the .pth file you just created.

- Upload Source Audio: Grab any audio clip you want to transform. It could be your own voice, a line from a movie, or a song.

- Adjust Pitch: You can shift the pitch up or down, in semitones, to better suit the voice. For example, a +12 shift (one octave up) is common for male-to-female conversions, and -12 (one octave down) for female-to-male.

- Click Convert: Applio does its magic, swapping the voice in the source audio with the one from your model.
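Each semitone of shift multiplies the frequency by the twelfth root of two, so +12 doubles it and -12 halves it. The Python sketch below shows that arithmetic, plus a hypothetical helper that suggests a shift from the typical pitch of the source and target voices. The example frequencies are rough assumptions, and the final value is still best tuned by ear.

```python
import math


def shift_frequency(f0_hz, semitones):
    """Frequency after an equal-temperament pitch shift of `semitones`."""
    return f0_hz * 2 ** (semitones / 12)


def suggest_semitones(source_f0_hz, target_f0_hz):
    """Hypothetical helper: nearest whole-semitone shift from source to target pitch."""
    return round(12 * math.log2(target_f0_hz / source_f0_hz))


print(shift_frequency(110.0, 12))        # 220.0
print(suggest_semitones(110.0, 220.0))   # 12
print(suggest_semitones(200.0, 100.0))   # -12
```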

And that's it! You'll get a new audio file with the original performance but in your cloned voice. Congratulations, you’ve just made your first AI voice model. From here, you can go back and fine-tune your dataset or experiment with different settings to get even better results.

Getting Professional-Level Results With These Advanced Tips

Alright, so you've made your first voice model. That's a huge step! But now the real fun begins. Getting past the default settings is where you'll take a decent voice clone and turn it into something truly impressive. This means digging into the training parameters and getting serious about how you prepare your audio.

Think of it like using a professional camera. The "auto" mode works, but the best shots always come from photographers who take control in manual mode. Fine-tuning your Applio settings gives you that same level of creative control over the final voice.

Fine-Tuning Key Training Parameters

Two of the most important dials you can turn are batch size and epochs. Getting a feel for how these two work together is your ticket to a high-quality model without wasting a ton of time on training that goes nowhere.

- Batch Size: This tells the AI how many audio clips to look at in one go before it updates its learning. A bigger batch size can make training faster, but it also eats up more of your GPU's memory (VRAM). On the other hand, a smaller batch size is slower but can sometimes produce a more detailed model because the AI is learning in smaller, more careful steps.

- Epochs: An epoch is just one full pass through your entire audio dataset. If you don't run enough epochs, the model will sound robotic and unfinished. But if you run too many, you can "overfit" it—the model essentially memorizes your audio clips and loses its ability to speak new words naturally.
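How batch size and epochs interact comes down to simple arithmetic: each batch produces one weight update, so a smaller batch means more updates per epoch. This Python sketch assumes the final partial batch is kept (some training loops drop it instead), and the clip counts are made-up examples.

```python
import math


def training_updates(num_clips, batch_size, epochs):
    """Return (updates_per_epoch, total_updates) for a training run."""
    if batch_size <= 0 or epochs < 0:
        raise ValueError("batch_size must be positive and epochs non-negative")
    per_epoch = math.ceil(num_clips / batch_size)  # last partial batch still counts
    return per_epoch, per_epoch * epochs


# The same hypothetical dataset of 600 sliced clips at two batch sizes.
print(training_updates(600, 8, 300))  # (75, 22500)
print(training_updates(600, 4, 300))  # (150, 45000)
```

Halving the batch size doubles the updates per epoch, which is one reason the same epoch count can behave differently at different batch sizes.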

There's no magic formula here; the right settings really depend on how much audio data you have and what kind of hardware you're running. The secret is to experiment. Start with a reasonable number of epochs and use TensorBoard to see where the learning curve starts to flatten out. For those who want to get really nerdy about this, the concepts behind parameter-efficient fine-tuning offer a much deeper dive into making these processes work smarter, not harder.
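Raw loss curves are noisy, so it helps to smooth them before judging where they flatten out. TensorBoard's smoothing slider does something comparable; the Python sketch below is a plain exponential moving average written from scratch as an illustration, where `weight` plays the role of the smoothing amount (0 means no smoothing).

```python
def ema_smooth(values, weight=0.6):
    """Exponential moving average: higher weight = smoother but slower to react."""
    if not 0.0 <= weight < 1.0:
        raise ValueError("weight must be in [0, 1)")
    smoothed = []
    last = None
    for value in values:
        # The first point is kept as-is; later points blend with the running average.
        last = value if last is None else weight * last + (1 - weight) * value
        smoothed.append(last)
    return smoothed


print(ema_smooth([4.0, 2.0, 3.0], weight=0.5))  # [4.0, 3.0, 3.0]
```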

Best Practices for Your Audio Dataset

Let me be blunt: the quality of your training audio sets the absolute ceiling for the quality of your final model. You can't tweak your way out of a bad dataset.

To really level up your audio prep, nail these three things:

- Noise Reduction: Fire up a tool like Audacity and hunt down any background hiss, hums, or room noise. Even tiny sounds you can barely hear can create weird artifacts in the finished voice.

- Vocal Consistency: Try to make sure the volume, tone, and speaking pace are as consistent as you can get them across all your clips. A dataset that jumps from a whisper to a shout will just confuse the AI.

- Silence Trimming: Snip out all the dead air and long pauses at the beginning and end of your audio files. This makes sure the AI is learning from the voice itself, not the empty space around it.
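Silence trimming can also be scripted. This Python sketch works on a plain list of 16-bit sample values and removes leading and trailing samples quieter than a threshold. The threshold of 500 is an assumed value, and more robust tools judge silence over short windows rather than single samples, so treat this as an illustration of the idea.

```python
def trim_silence(samples, threshold=500):
    """Remove leading and trailing samples whose magnitude is below threshold."""
    start = 0
    while start < len(samples) and abs(samples[start]) < threshold:
        start += 1
    end = len(samples)
    while end > start and abs(samples[end - 1]) < threshold:
        end -= 1
    return samples[start:end]  # quiet samples in the middle are left untouched


print(trim_silence([0, 12, 800, -40, -900, 30, 0]))  # [800, -40, -900]
```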

The best Applio models are always built on audio that has been obsessively curated. See yourself as a vocal archivist, saving only the cleanest, most representative samples of the voice you're cloning. That extra effort up front pays off big time in the final product.

Troubleshooting Common Audio Problems

Even with the best preparation, you might run into a few hiccups. Here’s a quick guide to figuring out what's wrong and how to fix it.

- Problem: The voice sounds robotic and flat.
  - This is almost always a sign of undertraining. The model just hasn't had enough time to learn the little details of human speech.
  - Solution: Increase the number of epochs. You also want to make sure your dataset is big enough: aim for at least 15 minutes of clean audio.

- Problem: There are weird clicks, pops, or warbling sounds.
  - Most of the time, this is caused by a "dirty" dataset.
  - Solution: Go back to your original audio files and listen with a critical ear. Clean up any microphone pops, background noises, or other imperfections you might have missed the first time.

- Problem: The voice sounds muffled, like it's talking through a pillow.
  - One possible cause is a batch size set too high for your GPU to handle effectively.
  - Solution: Try lowering the batch size. This forces the model to learn in smaller, more manageable chunks, which can sometimes bring back the clarity.

Common Questions About Applio

Jumping into a powerful tool like Applio always sparks a few questions. From the nitty-gritty of hardware specs to the crucial ethical lines you need to know, getting good answers upfront is the key to using the platform well. Let's dig into some of the most common things people ask.

What Kind of Computer Do I Need to Run This?

This is usually the first question on everyone's mind, and for good reason—training AI models is heavy lifting. The single most important piece of gear for Applio is your Graphics Processing Unit (GPU). Sure, you can technically run the software on a regular CPU, but the training process will feel like it's moving in slow motion. We're talking days or even weeks for a job a decent GPU could knock out in a few hours.

For a good experience, you'll want an NVIDIA graphics card with at least 8GB of VRAM. Cards like the RTX 3060, RTX 4060, or anything in that family or higher will do the trick. The more VRAM you have, the bigger the batches you can train with, which seriously speeds things up.

But what if you're not working with a high-end gaming rig? No problem. Cloud platforms like Google Colab and RunPod are fantastic alternatives. They let you rent access to beefy GPUs on a pay-as-you-go basis, giving you all the power without the hefty price tag of new hardware.

Is It Legal to Clone Someone's Voice?

This one is incredibly important, and the answer is nuanced: it all comes down to consent. The laws around voice cloning are still catching up with the technology, but they generally hinge on rights of privacy and publicity, as well as laws against fraud. Using a person's voice without their direct and informed permission could get you into a world of legal trouble.

On the ethical side, the line is much brighter. The folks behind Applio and its community are huge advocates for responsible use. That means you should only be cloning:

- Your own voice.

- A voice you have clear, written permission to use.

- Voices that are specifically licensed for this type of work.

Using a voice for parody or commentary can sometimes be a legal gray area, but using it for commercial gain, to impersonate someone, or to create fake, deceptive content is a massive no-go. Always, always put consent and transparency first.

How Much Audio Do I Need for a Good Voice Model?

The quality of your AI voice is a direct reflection of your training data. You can technically get a recognizable result from just a few minutes of audio, but it will probably sound robotic and flat, lacking any real personality.

For a high-quality, natural-sounding voice, aim for 15 to 30 minutes of clean audio. And "clean" is the key word here. Your recordings need to have:

- Zero background noise or music.

- No echo or reverb.

- No other voices in the recording.

- A consistent tone and volume.

Remember this: quality always trumps quantity. A perfect 15-minute dataset will give you a much better voice model than an hour of audio filled with background chatter and sloppy delivery. Taking the time to prep and clean your audio files is the best investment you can make in this entire process.

How Is Applio Different from a Service like ElevenLabs?

This question really gets to the core of control versus convenience. Applio and commercial platforms like ElevenLabs are both incredible tools for generating AI voices, but they're built for different people with different goals. The main difference is in their fundamental approach.

| Feature | Applio | Commercial Services (e.g., ElevenLabs) |
|---|---|---|
| Cost | Free (open-source) | Limited free tier; paid plans are subscription-based (monthly fees) |
| Control | Total control over models and training | Limited to the platform's features and settings |
| Setup | Requires local or cloud installation | Web-based, ready to use in seconds |
| Customization | Hugely customizable for technical users | User-friendly but less flexible |
| Community | Strong user community for support and models | Official company customer support |

Ultimately, what you choose depends on what you want to achieve. Applio is perfect for the tinkerer, the developer, or the creator who wants to get their hands dirty and have complete ownership over their work. Services like ElevenLabs are built for people who need a polished, fast, and easy solution and are happy to pay for that simplicity.

Think of it this way: Applio is a workshop stocked with every tool you can imagine, while ElevenLabs is a finished product you can pick up right off the shelf.

Ready to create, organize, and perfect your prompts for Applio and other AI tools? Explore the extensive library and powerful features at Promptaa and start getting better results today. Visit https://promptaa.com to learn more.