A Guide to the Different Types of LLM

When you start looking into Large Language Models (LLMs), you’ll quickly see they aren't all the same. They fall into a few key categories based on how they're built, who can use them, and what they’re designed to do. We're primarily talking about the differences between autoregressive and bidirectional models, open-source and proprietary systems, and general-purpose and domain-specific LLMs.

Getting a handle on these distinctions is the first real step toward picking the right tool for the job.

What Are Large Language Models Anyway?

Before diving into the different types, let's get on the same page about what an LLM actually is. The easiest way to think about it is like an incredibly powerful autocomplete. But instead of just guessing the next word in your text message, it can generate entire articles, write computer code, or even craft a poem.

These models are a specific type of AI that has been trained on a colossal amount of text and data scraped from the internet. Through this training, they absorb the patterns, grammar, context, and subtle nuances of human language. It’s important to remember they don’t truly understand things the way a person does. Instead, they become masters at calculating the statistical probability of which words should follow others.
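
To make the "statistical probability" idea concrete, here is a minimal Python sketch. It counts which words follow which in a tiny, made-up corpus and turns those counts into probabilities. The corpus and the `next_word_probabilities` function are illustrative assumptions; a real LLM learns far richer patterns with a neural network, not a lookup table of word pairs.

```python
from collections import Counter, defaultdict


def next_word_probabilities(corpus, word):
    """Estimate P(next word | word) from raw word-pair counts."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        # Pair every word with the word that comes right after it.
        for current, following in zip(tokens, tokens[1:]):
            follows[current][following] += 1
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


# Hypothetical three-sentence "training set"
corpus = [
    "the dog chased the ball",
    "the dog chased the cat",
    "a cat chased the ball",
]
probs = next_word_probabilities(corpus, "the")
print(probs)  # "dog" and "ball" each get 0.4, "cat" gets 0.2
```

Even at this toy scale, the output is a ranking of likely continuations, which is the same kind of answer a full-size model produces.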

How LLMs Learn and Generate Text

At its heart, an LLM's job is pretty straightforward: predict the next most likely word in a sequence (strictly speaking, the next token, which can be a whole word or a fragment of one). It accomplishes this using a neural network architecture known as a Transformer, whose attention mechanism helps it weigh the importance of different words in a prompt to create responses that make sense.
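
Here is a rough sketch of what "weighing the importance of different words" can look like in code, using the scaled dot-product scoring that Transformers are built on. The two-dimensional vectors below are made up for illustration; in a real model they have hundreds or thousands of dimensions and are learned during training.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


# Made-up vectors, one per word in the prompt
words = ["river", "bank", "money"]
keys = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]
query = [1.0, 0.0]  # hypothetical vector for the word currently being processed

weights = attention_weights(query, keys)
for word, weight in zip(words, weights):
    print(f"{word}: {weight:.2f}")
```

The word whose vector lines up best with the query ("river" here) receives the largest weight, so it contributes most to what the model does next.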

Think of it like a seasoned chef who has memorized thousands of recipes. They know from experience that certain ingredients (words) work well together and the specific order they need to be combined to produce a fantastic meal (a coherent sentence).

An LLM's true power isn't just regurgitating what it has learned. It's the ability to generalize from that data, mixing and matching patterns in new ways to create something entirely original.

This is what allows a single model to tackle such a wide array of tasks. The foundational technology behind this is a fascinating field of AI, which you can learn more about in our guide on the basics of Natural Language Processing.

This remarkable flexibility has led to a massive boom in their use. In fact, it's projected that by 2025, around 750 million applications will have LLM technology baked into them, a clear signal of just how important they're becoming across almost every industry.

Autoregressive vs. Bidirectional LLMs

When you peek under the hood of a large language model, you'll find one of two core design philosophies at work. Think of it as a fundamental split in how they "think"—one approach predicts what's coming next, while the other focuses on understanding what's already there.

Getting a handle on this difference is the key to understanding why a model like GPT-4 is a creative powerhouse, while another like BERT is a master of analysis. One is a storyteller, the other is a detective.

Autoregressive Models: The Predictive Storytellers

Autoregressive models are built to do one thing with incredible skill: predict the next word in a sentence. It’s a simple concept with powerful results.

Imagine you start a sentence, "The dog chased the ___." The model looks at the words "The dog chased the" and calculates the most likely word to follow. It might be "ball," "cat," or "car." Once it adds a word, that new word becomes part of the context for predicting the next one. It's a chain reaction, building text one link at a time, always moving forward.

This left-to-right, sequential generation is what makes these models so good at tasks where coherence and flow are critical.

- Content Creation: They're the go-to for drafting articles, emails, or marketing copy that sounds natural and human.

- Creative Writing: Need a poem, a script, or a short story? This is their sweet spot.

- Chatbots & Virtual Assistants: Their knack for creating conversational, back-and-forth dialogue makes them perfect for interactive AI.

This constant focus on "what comes next?" is why autoregressive models excel at producing text that feels like it was written by a person. They’re always building on the past to create a plausible future.
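
The chain reaction described above can be sketched as a short loop. This is a toy, assuming a hand-written probability table (`toy_model`) in place of a trained network, and it only looks at the last word, whereas a real autoregressive model conditions on everything written so far.

```python
def generate(model, prompt, max_new_words=5):
    """Greedy autoregressive decoding: append the likeliest next word, repeat."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = model.get(words[-1].lower())
        if not candidates:
            break  # the toy model knows no continuation for this word
        # Pick the most probable continuation and make it part of the context.
        words.append(max(candidates, key=candidates.get))
    return " ".join(words)


# Hypothetical next-word probabilities standing in for a trained model
toy_model = {
    "the": {"ball": 0.5, "cat": 0.3, "car": 0.2},
    "chased": {"the": 0.9, "a": 0.1},
    "dog": {"chased": 0.7, "barked": 0.3},
    "ball": {"away": 0.6, "home": 0.4},
}

text = generate(toy_model, "The dog chased the")
print(text)  # The dog chased the ball away
```

Each word the loop appends immediately becomes the context for the next prediction, which is the "one link at a time" behavior in miniature.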

Bidirectional Models: The Context Detectives

On the other side of the fence, you have bidirectional models. These models, like Google's famous BERT, aren't trying to predict the future. Their mission is to deeply understand the present.

Instead of reading from left to right, a bidirectional model looks at an entire sentence all at once—forwards and backward. This allows it to grasp the intricate relationships between words and nail down their true meaning based on the full context.

Take the word "bank." In the sentence, "She sat by the river bank," it has a specific meaning. But in, "He deposited money at the bank," it means something entirely different. A bidirectional model sees the whole sentence and instantly understands which "bank" you're talking about.

This ability to analyze the full picture gives bidirectional models a profound understanding of nuance. They aren't great at writing new stories, but they are unparalleled at understanding the ones that are already written.
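
One way to picture the difference is to list which words each kind of model is allowed to look at while processing a given word. The sketch below is a simplification, and the example sentence is our own, chosen so that the clue about "bank" comes after the word itself.

```python
def visible_positions(length, position, bidirectional):
    """Indices a model may look at while processing the word at `position`."""
    if bidirectional:
        return list(range(length))      # the whole sentence
    return list(range(position + 1))    # the word itself and everything to its left


sentence = "The bank raised its interest rates".split()
target = sentence.index("bank")

left_to_right = [sentence[i] for i in visible_positions(len(sentence), target, False)]
both_ways = [sentence[i] for i in visible_positions(len(sentence), target, True)]

print(left_to_right)  # ['The', 'bank']
print(both_ways)      # ['The', 'bank', 'raised', 'its', 'interest', 'rates']
```

Only the bidirectional view includes "interest rates", the words that settle which kind of bank this is.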

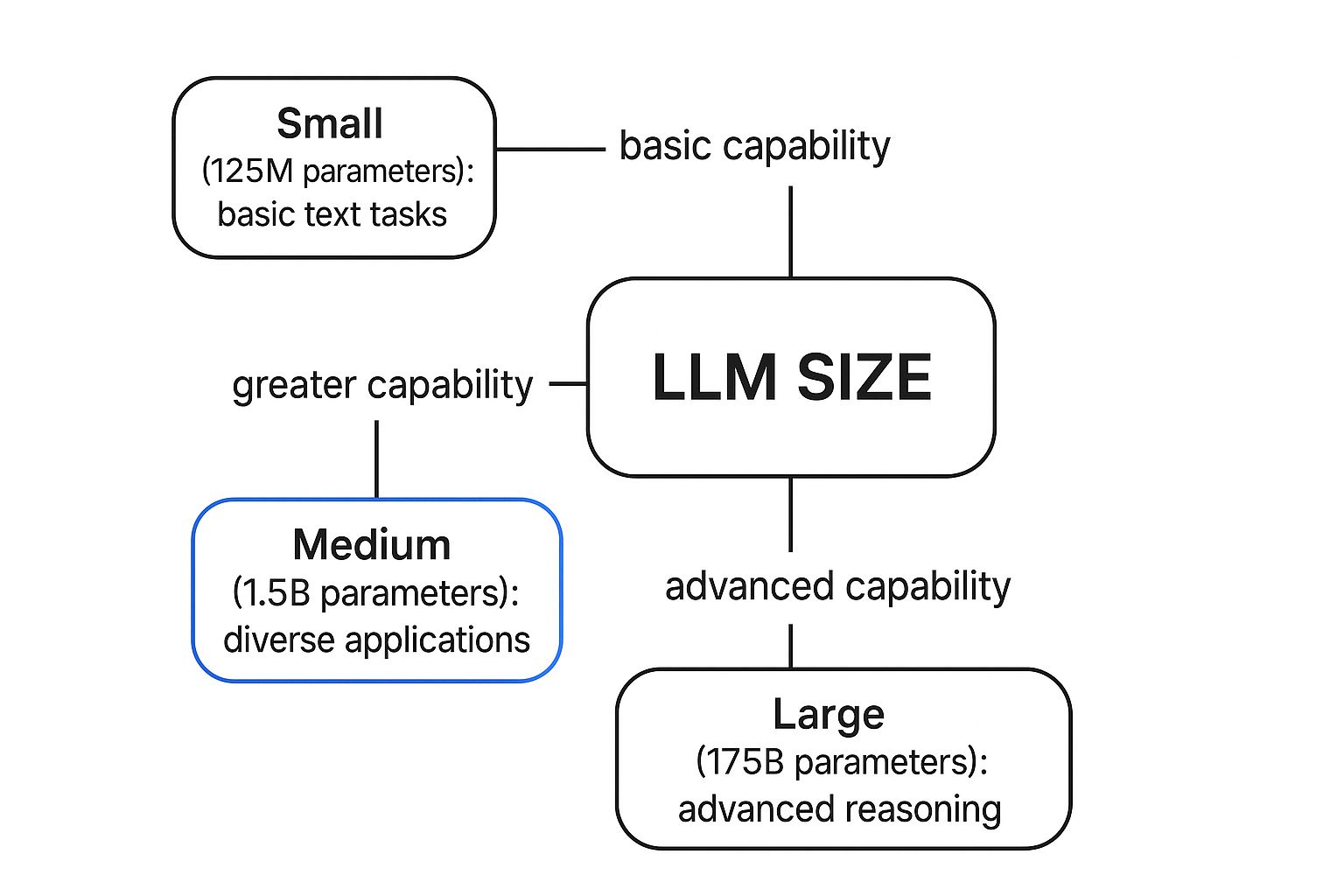

The image above shows how model size, measured in the number of parameters, often correlates with a model's reasoning power, a crucial factor for both types of architectures.

As you can see, models with more parameters tend to have more sophisticated reasoning skills, which boosts the performance of both autoregressive and bidirectional systems.

Because of their deep contextual awareness, bidirectional models are the workhorses for any task that requires true comprehension.

- Search Engines: They power the technology that figures out the actual intent behind your search query, not just the keywords.

- Sentiment Analysis: These models can read a product review and instantly tell you if the customer was happy or frustrated.

- Text Classification: They're used to automatically categorize news articles, sort customer support tickets, or flag spam emails.

Autoregressive vs. Bidirectional Models at a Glance

To make this even clearer, let's break down the key differences side-by-side. The right choice always depends on what you're trying to accomplish.

| Attribute | Autoregressive Models (e.g., GPT-4) | Bidirectional Models (e.g., BERT) |
|---|---|---|
| Primary Goal | Predict the next word in a sequence | Understand the context of all words in a sequence |
| How It Works | Reads text from left-to-right (forward only) | Reads text forwards and backward at the same time |
| Best For | Generative tasks: writing, content creation, conversation | Analytical tasks: search, classification, sentiment analysis |
| Strengths | Fluency, coherence, creativity, human-like text generation | Deep contextual understanding, nuance, accuracy in analysis |
| Famous Examples | GPT series, PaLM, Llama 2 | BERT, RoBERTa, ALBERT |

Ultimately, autoregressive models are your creators, spinning new text out of existing context. Bidirectional models are your analysts, digging deep to uncover the meaning buried within the text you already have.

Choosing Between Open-Source and Proprietary Models

One of the first major forks in the road you'll encounter when working with LLMs is the choice between a proprietary or an open-source system. This decision isn't just about technical specs; it has huge implications for everything from your budget and data privacy to the long-term flexibility of your project. Each path comes with its own distinct set of benefits and trade-offs.

A good way to think about it is like buying a car. Proprietary models are like high-end, fully serviced sports cars. You don't need to be a mechanic—you just pay for access and get world-class performance straight off the lot. On the other hand, open-source models are like powerful engine kits. You get all the core components, but it’s on you to assemble, tune, and maintain them to build the exact machine you need.

Proprietary Models: The Plug-and-Play Powerhouses

Proprietary models, often called closed-source models, are the creations of private companies. You typically access them through a paid API (Application Programming Interface), which makes them incredibly easy to plug into your applications without needing a supercomputer in your server room or a team of AI experts.

These models often represent the very peak of AI performance, fueled by colossal research budgets and trained on mind-boggling amounts of data. For any team that needs to get a powerful AI feature up and running fast, they’re usually the most direct route.

So, what are the main draws?

- Top-Tier Performance: They are often the biggest and most capable models out there, delivering state-of-the-art results across a huge range of tasks.

- Ease of Use: All it takes is a simple API call to get going. This completely removes the enormous technical headache of hosting and maintaining a massive model yourself.

- Reliability and Support: The company that owns the model handles all the maintenance, updates, and troubleshooting. This means consistent uptime and dedicated support when you need it.
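
To give a feel for what "a simple API call" involves, here is a sketch that builds an HTTP request using only Python's standard library, without sending it. The endpoint URL, model name, and JSON fields are placeholders we made up; every provider defines its own, so check their documentation before copying anything.

```python
import json
import urllib.request


def build_completion_request(prompt, api_key):
    """Build (but do not send) a request to a hosted LLM.

    The URL, model name, and payload fields are hypothetical,
    not any real provider's API.
    """
    payload = {"model": "example-model", "prompt": prompt, "max_tokens": 100}
    return urllib.request.Request(
        url="https://api.example.com/v1/generate",  # hypothetical endpoint
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_completion_request("Summarize this article in one line.", "YOUR_API_KEY")
print(request.get_method(), request.full_url)
```

With a real endpoint and key, passing the request to `urllib.request.urlopen` would send it. The point is that the integration work is a few lines of glue code, with no model hosting on your side.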

A key advantage of proprietary systems is speed to market. A developer can integrate a model like GPT-4 or Claude into an app in a matter of hours, a process that could take an entire team weeks or months with an open-source alternative.

The trade-off for all this convenience is a loss of control. You're completely dependent on the provider's pricing, their terms of service, and how they handle your data. To get a better sense of how the leading proprietary models compare, our in-depth comparison of ChatGPT and Claude models breaks down their unique strengths.

Open-Source Models: The Customizable Engines

Open-source models offer a completely different proposition, one built around transparency, control, and deep customization. Here, the model's architecture and its trained weights are made public, allowing anyone with the right skills and hardware to download, modify, and run them on their own servers.

This approach is perfect for organizations with very specific or sensitive needs. For instance, a healthcare company could fine-tune an open-source model on its private patient data without ever sending that information to a third-party server, ensuring maximum data privacy.

This level of control unlocks some powerful opportunities:

- Unmatched Flexibility: You can fine-tune the model for a highly specialized task, potentially achieving even better performance than a general-purpose proprietary model in that one specific niche.

- Cost Control: The initial setup can be a heavy lift, but running the model on your own hardware can be much more cost-effective at scale compared to paying per API call.

- Transparency: With full access to the model's inner workings, you have a much better shot at understanding its behavior, its biases, and its limitations.
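
The cost-control point comes down to simple arithmetic, sketched below. Every figure is an illustrative assumption, not a real price, and the model is deliberately crude: it treats self-hosting as a flat monthly cost and ignores the extra hardware you would need as volume grows.

```python
def monthly_api_cost(tokens_per_month, price_per_million_tokens):
    """Pay-per-use bill for a given monthly token volume."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(fixed_monthly_hosting_cost, price_per_million_tokens):
    """Monthly token volume at which self-hosting matches the API bill."""
    return fixed_monthly_hosting_cost / price_per_million_tokens * 1_000_000


# Illustrative assumptions only
HOSTING_COST = 6000.0  # servers plus maintenance, per month
API_PRICE = 10.0       # per million tokens

threshold = break_even_tokens(HOSTING_COST, API_PRICE)
print(f"Break-even at {threshold:,.0f} tokens per month")
```

Below that volume the API is cheaper under these assumptions; above it, running your own hardware starts to pay off.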

The biggest hurdle here is the technical expertise required. Deploying and fine-tuning these models is not for the faint of heart; it demands serious computational resources and a skilled team of AI engineers. But for those who have the resources, the ability to build a truly custom AI solution is a game-changing advantage.

General-Purpose vs. Domain-Specific LLMs

When you're looking at different types of LLMs, one of the biggest strategic questions you'll face is whether you need a jack-of-all-trades or a master of one. This is the core difference between general-purpose models, which are designed to do a little bit of everything, and domain-specific models, which are laser-focused experts in a single field.

Figuring this out is key. The right choice depends entirely on whether you need broad, flexible capabilities or deep, highly specialized knowledge for a specific job.

General-Purpose LLMs: The Versatile Problem Solvers

Think of a general-purpose LLM as a brilliant liberal arts graduate. They’ve studied an incredible range of subjects, training on vast, diverse datasets that cover everything from ancient history and poetry to quantum physics and Python. This wide-ranging education makes them remarkably adaptable.

Models like Anthropic’s Claude 3 or Google’s Gemini are perfect examples. You can ask one to whip up some marketing copy, summarize a dense scientific paper, debug a block of code, and then suggest a recipe for dinner, all in the same conversation. Their power lies in this flexibility, which makes them fantastic tools for all sorts of business, creative, and everyday tasks.

These models are built to be helpful in almost any scenario. Their main selling point is their versatility, acting as a kind of universal multitool for anything you can throw at them.

The sheer scale of these models can be mind-boggling. Take the Falcon 180B model from the Technology Innovation Institute—it’s built with an incredible 180 billion parameters. To put that in perspective, it's 4.5 times larger than its predecessor. Its top-tier performance on general benchmarks really shows what's possible when you train a model on a massive, broad scale. You can dig deeper into how these powerful models have evolved and see how they stack up against each other.
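
A quick back-of-the-envelope calculation shows what that parameter count means in practice. The sketch below estimates the memory needed just to hold the weights at common numeric precisions; it ignores activations and other runtime overhead, and the 40 billion figure for the predecessor is our assumption, chosen because it matches the 4.5x ratio quoted above.

```python
BYTES_PER_PARAMETER = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_parameters, dtype="float16"):
    """Memory needed to store the weights alone, in gigabytes (10^9 bytes)."""
    return num_parameters * BYTES_PER_PARAMETER[dtype] / 1e9


falcon_180b = 180e9
predecessor = 40e9  # assumed size of the predecessor model

print(weight_memory_gb(falcon_180b, "float16"))  # 360.0
print(falcon_180b / predecessor)                 # 4.5
```

At 16-bit precision the weights alone come to about 360 GB, which is why a model of this size has to be spread across several accelerators.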

Domain-Specific LLMs: The Niche Experts

On the other side of the spectrum, you have domain-specific LLMs. These are more like seasoned specialists who’ve dedicated their entire careers to a single, complex field. They are trained on much smaller, highly curated datasets, but that information is incredibly deep and relevant to one particular industry.

This focused training gives them an undeniable edge. Their understanding of industry jargon, subtle context, and specific concepts is tuned to a level that a generalist model just can't replicate.

Here are a few great real-world examples:

- BioBERT: A BERT model given additional pre-training on biomedical literature, such as PubMed abstracts, this model is a powerhouse for analyzing scientific texts and accelerating medical research.

- BloombergGPT: This model was fed decades of financial data, making it exceptionally good at market analysis, tracking investor sentiment, and generating financial reports.

- FinBERT: Similar to BloombergGPT, this model is a specialist in financial language, helping firms analyze reports and news for critical investment insights.

If you’re a financial firm, using BloombergGPT for market analysis gives you a serious leg up. It can pick up on the subtle cues in financial news that a general-purpose model would likely overlook. A general model might give you a good answer, but a specialist model is far more likely to give you the right answer—one that’s packed with the nuance and accuracy that only a true expert can provide.

How to Select the Right LLM for Your Needs

Knowing the difference between LLM types is one thing, but actually picking the right one can feel daunting. The secret isn't finding the single "best" model on the market; it's about finding the best fit for what you're trying to accomplish. This means taking a good, hard look at your goals, your resources, and your limitations.

It's a lot like choosing a vehicle. You wouldn't pick a two-seater sports car for a family camping trip, and a massive truck isn't practical for zipping around a crowded city. Every LLM is built with a purpose in mind. Line up its strengths with your needs, and you're set for a successful project.

Define Your Primary Goal

First things first: what do you actually need this LLM to do? Your answer will instantly cut your options in half. Are you trying to generate brand new content, or are you trying to make sense of information you already have?

- For Content Generation: If you’re writing blog posts, drafting emails, or building a friendly chatbot, an autoregressive model is what you want. These models excel at predicting the next word in a sequence, making them fantastic for creating natural, human-like text from scratch.

- For Data Analysis: On the other hand, if your task is sentiment analysis, document classification, or powering an internal search engine, a bidirectional model is the clear winner. Its ability to look at a whole sentence—both backward and forward—gives it a much deeper contextual understanding, which is crucial for analytical tasks.
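
The two bullets above boil down to a lookup, sketched here in Python. The task names and the `suggest_architecture` helper are hypothetical; treat this as a way to organize your thinking, not a definitive rule.

```python
# Task lists taken from the two bullets above; extend them for your own project.
GENERATIVE_TASKS = {"blog post", "email draft", "chatbot"}
ANALYTICAL_TASKS = {"sentiment analysis", "document classification", "internal search"}


def suggest_architecture(task):
    """Map a task to the model family that usually suits it."""
    task = task.lower()
    if task in GENERATIVE_TASKS:
        return "autoregressive"
    if task in ANALYTICAL_TASKS:
        return "bidirectional"
    return "unclear: decide whether you are generating text or analyzing it"


print(suggest_architecture("Chatbot"))             # autoregressive
print(suggest_architecture("sentiment analysis"))  # bidirectional
```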

Once you’ve got that core objective pinned down, you can start digging into the practical side of things.

Evaluate Your Resources and Constraints

Beyond what you want to do, you have to consider what you can actually do. Getting real about your team's capabilities and limitations will help you avoid choosing a model that sounds great on paper but is a nightmare in practice.

Selecting an LLM is a constant balancing act between power, price, and control. If you ignore one, you might end up with a tool that's too expensive to run, too inflexible for your needs, or just too complicated for your team to handle.

Here are the key questions to ask yourself:

- Budget: How much are you prepared to spend? Proprietary models usually come with pay-as-you-go API fees, which can add up quickly. Open-source models, while free to download, demand a serious upfront investment in powerful hardware and the talent to run it.

- Technical Expertise: Do you have AI specialists on hand to deploy, fine-tune, and maintain a complex model? If not, a proprietary model with a simple API is a much more straightforward path. It’s essentially a plug-and-play solution.

- Data Privacy and Security: This is a big one. If you're handling sensitive customer data or internal secrets, sending that information to a third party is likely a non-starter. In that case, hosting an open-source model on your own servers is the most direct way to keep that data under your control, though securing those servers then becomes your responsibility.

- Accuracy and Reliability: All LLMs have a tendency to make things up. If factual accuracy is a deal-breaker for your project, you'll need a solid plan to manage this. For some great strategies, check out our guide on how to reduce hallucinations in LLM outputs.
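
If it helps, the first three questions can be turned into a small decision helper. This is one possible encoding, assuming simple yes/no answers; the `ProjectConstraints` fields and the wording of the suggestions are our own, and a real decision will involve more nuance than three booleans.

```python
from dataclasses import dataclass


@dataclass
class ProjectConstraints:
    """Hypothetical yes/no answers to the checklist questions."""
    has_ai_specialists: bool
    can_fund_hardware: bool
    data_must_stay_in_house: bool


def suggest_deployment(constraints):
    """Suggest a deployment route from the checklist answers."""
    can_self_host = constraints.has_ai_specialists and constraints.can_fund_hardware
    if constraints.data_must_stay_in_house:
        if can_self_host:
            return "self-hosted open-source model"
        return "self-hosted open-source model (close the staffing or hardware gap first)"
    if can_self_host:
        return "either option: compare costs at your expected volume"
    return "proprietary API"


startup = ProjectConstraints(
    has_ai_specialists=False, can_fund_hardware=False, data_must_stay_in_house=False
)
clinic = ProjectConstraints(
    has_ai_specialists=True, can_fund_hardware=True, data_must_stay_in_house=True
)

print(suggest_deployment(startup))  # proprietary API
print(suggest_deployment(clinic))   # self-hosted open-source model
```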

By working through this checklist, you can turn a potentially overwhelming choice into a clear, step-by-step process. You'll be able to confidently select a model that’s not just powerful, but perfectly suited to your project.

What's Next for Large Language Models

If you think the world of large language models is moving fast now, just wait. Knowing the core types of LLMs is the best way to stay grounded as the next wave of innovation reshapes what these tools can do.

We're moving beyond simple text generation and into a much more integrated, complex future.

Multimodality is Coming

One of the biggest leaps forward is multimodality. Tomorrow's models won't just be masters of words; they'll natively understand images, audio, and video, too.

Picture an AI that can watch a product demo video and instantly write a detailed user manual, complete with screenshots. Or imagine one that listens in on a brainstorming session and generates a visual mind map of the key ideas. This ability to process and connect different types of information will open up a whole new world of applications.

Smaller, Faster, and Everywhere

At the same time, not everything is about getting bigger. There's a huge push to create smaller, more efficient models that don't need a massive data center to run. The goal is to get powerful AI running locally on your laptop or even your smartphone.

This shift toward compact models solves a lot of problems. It dramatically improves privacy by keeping your data on your device, cuts down on operational costs, and makes AI available even when you're offline.

This trend is a key driver behind the explosive growth in the market. Projections show the LLM market is set to expand at a staggering compound annual growth rate of 79.80% between 2023 and 2030. You can find more details on the projected growth of LLM technology and see just how fast this space is moving.
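
To see what a growth rate like that implies, you can compound it yourself. The sketch below assumes seven yearly compounding periods between 2023 and 2030 and says nothing about the market's actual starting size.

```python
def growth_multiple(annual_rate, years):
    """How many times larger something becomes after compounding annually."""
    return (1 + annual_rate) ** years


multiple = growth_multiple(0.798, 2030 - 2023)
print(f"{multiple:.1f}x")
```

Under that assumption, a 79.80% annual rate works out to a market roughly 60 times its 2023 size by 2030.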

As these technologies mature, the conversation around ethical development is getting louder—and for good reason. Ensuring models are fair, unbiased, and used responsibly isn't just a technical challenge; it's a social necessity that will define the industry's future.

Ultimately, by understanding the fundamentals—from autoregressive versus bidirectional models to the trade-offs between open-source and proprietary systems—you're building the foundation you need to adapt and succeed in this incredibly exciting field.

Digging Deeper: Your LLM Questions Answered

As you get more familiar with the world of large language models, a few common questions tend to pop up. Let's tackle some of the most frequent ones to clear up any confusion and help you make smarter decisions.

Core Model Differences and Usage

What's the real difference between autoregressive and bidirectional LLMs?

Think of it this way: an autoregressive LLM like GPT-4 is a forward-thinking storyteller. It writes by predicting the very next word based only on the words that came before it. This makes it fantastic for creative writing, drafting content, and conversational AI.

On the other hand, a bidirectional LLM like BERT is more like a meticulous editor. It reads the entire sentence—both forward and backward—to grasp the full context of every word. This deep understanding is perfect for tasks like search engines, sentiment analysis, and figuring out the subtle meaning in a piece of text.

Are open-source LLMs actually free for commercial use?

This is a classic "yes, but" situation. While you can often download the model itself for free, the real costs show up when you try to use it for a business. You're on the hook for the powerful servers, ongoing maintenance, and the specialized talent needed to keep it all running smoothly.

On top of that, not all open-source licenses are created equal. Some have strict rules about commercial use, so you absolutely must read the fine print before you build a product on top of one.

The "free" in open source is more about "freedom" to modify and inspect the code, not necessarily "free of cost." Sometimes, the total cost of running your own open-source model can end up being higher than just paying for API access to a proprietary one.

Making the Right Selection

When should I pick a domain-specific LLM instead of a general one?

You'll want a domain-specific LLM when you need a true expert. If your task demands high accuracy in a specialized field, such as analyzing complex financial documents with something like BloombergGPT or combing through medical studies with BioBERT, a specialist model will often outperform a generalist.

A general-purpose LLM is your go-to for everything else. It’s the jack-of-all-trades perfect for broad applications like writing emails, summarizing articles on any topic, or building a chatbot that can talk about almost anything.

What's the magic number of parameters for an LLM to be considered 'large'?

Honestly, the goalposts are always moving on this one. A few years ago, anything over one billion parameters was considered massive. It was a pretty good rule of thumb.

But then GPT-3 came along with its 175 billion parameters, completely rewriting the definition of "large." Now, with the latest models reportedly pushing past a trillion, size is becoming less about a specific number and more about a model's overall capability.
