4o-mini vs 4.1-mini: A Practical Comparison Guide

When you're trying to decide between 4o-mini and 4.1-mini, the newer 4.1-mini model is more than just an update; it's a major step up. It's noticeably smarter and handles far more context. According to OpenAI, it matches or beats the much larger GPT-4o on many intelligence evals at roughly half the latency and 83% lower cost. For nearly anything you were using the old mini for, the new one is the clear winner.

A High-Level Comparison

Choosing the right small language model is a big deal. It's a decision that hits everything from your application's performance and user experience to your monthly bill. While both GPT-4o Mini and GPT-4.1 Mini are built for efficiency, they come from different points in AI's evolution. Figuring out what sets them apart is the key to picking the right tool for the job.

Quick Look: 4o-mini vs 4.1-mini Key Differentiators

To get our bearings, let's start with a simple side-by-side. This table cuts straight to the chase, highlighting the biggest differences you'll notice right away.

| Feature | GPT-4o Mini | GPT-4.1 Mini | Key Takeaway |
|---|---|---|---|
| Overall Intelligence | Strong for its size | Near GPT-4 level | 4.1-mini is significantly smarter and better at complex reasoning. |
| Performance | Fast and efficient | Nearly 50% lower latency than GPT-4o | 4.1-mini delivers the near-instant feel that real-time apps need. |
| Cost Efficiency | Cost-effective | 83% cheaper than GPT-4o | 4.1-mini makes running high-quality AI much more affordable. |
| Context Window | 128K tokens | 1 million tokens | The massive context window in 4.1-mini unlocks serious document analysis. |
| Ideal Use Cases | Basic chatbots, simple tasks | Advanced customer support, data analysis, content generation | 4.1-mini can easily handle much more demanding and nuanced work. |

As you can see, 4.1-mini isn't just a minor refresh. It brings major upgrades across the board, making it a much more powerful and versatile tool while also being kinder to your budget.

Understanding the Generational Leap

The jump from GPT-4o Mini to GPT-4.1 Mini is one of those moments that really shows how fast this field is moving. GPT-4.1 Mini, which came out on April 14, 2025, just runs circles around its predecessor from July 18, 2024.

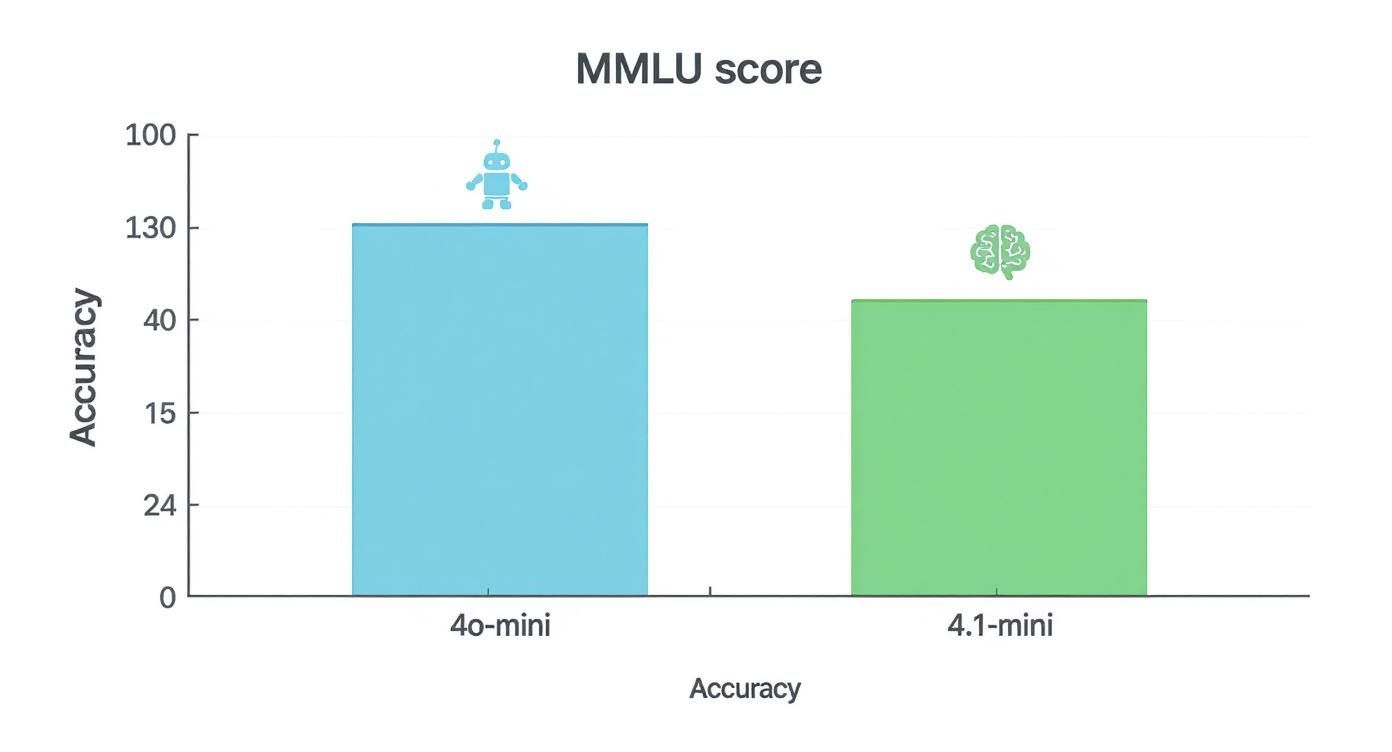

A great way to see this is the MMLU benchmark, a tough test that measures knowledge across 57 subjects such as law, math, and science. Here, GPT-4.1 Mini scored 87.5%, right up there with the original, much larger GPT-4 model. You can learn more about how these models are built in our guide to the different types of LLM.

This chart really puts that improvement into perspective.

What this tells us is that 4.1-mini isn't just a small tweak. It's a fundamental improvement that brings the intelligence of a flagship model down into a smaller, more efficient package.

A Look Under the Hood: Technical Architecture and Capabilities

When you peel back the layers and compare 4o-mini and 4.1-mini, you quickly realize the differences are more than skin deep. OpenAI hasn't published architectural details for either model, but 4.1-mini's published specifications put it well beyond what we've seen in a model of this class.

These foundational changes aren't just for academic interest—they have a direct and immediate impact on the kinds of tasks you can throw at the model. Whether you're analyzing dense documents or generating long-form content, the technical upgrades in 4.1-mini open up a whole new world of possibilities.

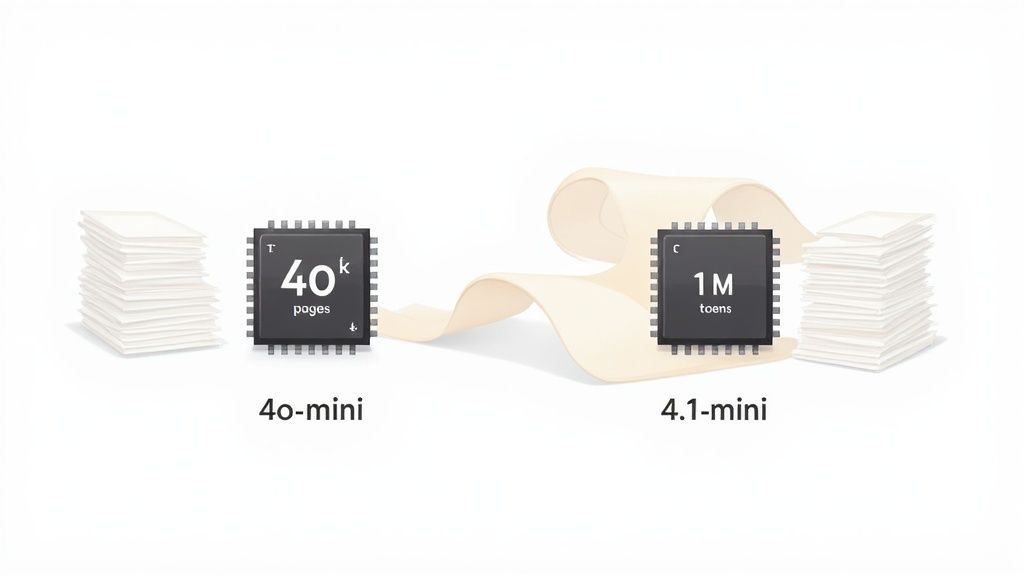

Context Window and Output Generation Limits

The single most significant upgrade is the model's memory. The context window (how much information the model can hold and process at once) has expanded massively: GPT-4.1 Mini supports about 1 million tokens, versus GPT-4o Mini's 128,000, roughly an 8x increase. On top of that, GPT-4.1 Mini can generate up to 32,768 tokens in a single response, double the 16,384-token limit of its predecessor. You can see a full breakdown of these specs by checking out the GPT-4o Mini vs GPT-4.1 Mini comparison on docsbot.ai.
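To make the token limits concrete, here is a minimal Python sketch that checks whether a document plus a reserved output budget fits each model's window. The limits are the figures quoted in this article. The 4-characters-per-token estimate is a rough assumption for English text; for exact counts, use a real tokenizer.

```python
# Context windows as quoted in this article (tokens). GPT-4.1 mini's exact limit is
# slightly above 1M; check OpenAI's model docs for current values.
CONTEXT_LIMITS = {
    "gpt-4o-mini": 128_000,
    "gpt-4.1-mini": 1_000_000,
}

# Rough heuristic (assumption): about 4 characters of English text per token.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Crude token estimate from character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, model: str, reserved_output: int = 4_000) -> bool:
    """True if the estimated prompt plus an output budget fits the model's window."""
    return estimate_tokens(text) + reserved_output <= CONTEXT_LIMITS[model]


if __name__ == "__main__":
    contract = "x" * 2_000_000  # stand-in for a document of roughly 500K tokens
    for model in CONTEXT_LIMITS:
        print(model, fits_in_context(contract, model))
```

The context window generally has to hold both the prompt and the generated output, which is why the sketch reserves an output budget instead of comparing the prompt alone.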

This isn't just about bigger numbers; it completely changes the game.

- Complex Document Analysis: You can now hand 4.1-mini an entire research paper, a lengthy legal contract, or a big chunk of a codebase and get back a nuanced summary or detailed analysis in a single prompt.

- Sustained Creativity: For long-form writing, like a chapter of a novel or a detailed technical guide, the model can maintain context, character voices, and technical consistency over a much longer stretch.

- Rich Conversational History: In chatbots, this means the model can remember far more of a conversation, leading to more coherent and personalized interactions without needing clunky external memory systems.
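One way to see what a bigger window buys you in a chatbot is the sketch below. It keeps the system message and as many recent turns as fit in a token budget. The function name, the message shape (role/content dicts), and the ~4-characters-per-token estimate are assumptions for illustration. With 4.1-mini, you would simply pass a much larger budget before anything gets trimmed.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    # Rough assumption: ~4 characters per token; swap in a real tokenizer for accuracy.
    return -(-len(text) // 4)


def trim_history(messages: List[Message], budget: int) -> List[Message]:
    """Keep the system message(s) plus the most recent turns that fit in `budget` tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    # Walk backwards so the newest turns are kept first; stop at the first turn that doesn't fit.
    for msg in reversed(rest):
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    # Restore chronological order for the kept turns.
    return system + list(reversed(kept))
```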

The leap to a 1 million token context window is a game-changer. It transforms 4.1-mini from a tool for short, transactional tasks into a powerful engine for deep, context-rich analysis and creation, effectively blurring the lines between "mini" and flagship models.

Knowledge Cutoff and Information Recency

Another critical improvement is how current the model is. The "knowledge cutoff" date dictates how much the model knows about recent events, technologies, and trends, and there’s a big difference here.

GPT-4.1 Mini was trained on data up to June 2024, whereas GPT-4o Mini’s knowledge stops in October 2023. That eight-month gap might not sound like a lot, but in the tech world, it’s an eternity.
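The eight-month figure is easy to double-check, and the same arithmetic gives a quick sanity test for whether a topic might predate a model's cutoff. This is a hedged sketch that uses the cutoff months stated above. A cutoff only bounds the training data, so events close to it may still be thinly covered.

```python
from datetime import date

# Knowledge cutoffs as stated in this article, at month precision.
CUTOFFS = {
    "gpt-4o-mini": date(2023, 10, 1),
    "gpt-4.1-mini": date(2024, 6, 1),
}


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start's month to end's month."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def may_know_about(model: str, event: date) -> bool:
    """Rough check: does the event's month fall on or before the model's cutoff month?"""
    cutoff = CUTOFFS[model]
    return (event.year, event.month) <= (cutoff.year, cutoff.month)


if __name__ == "__main__":
    print(months_between(CUTOFFS["gpt-4o-mini"], CUTOFFS["gpt-4.1-mini"]))  # 8
    event = date(2024, 3, 15)  # hypothetical event date
    for model in CUTOFFS:
        print(model, may_know_about(model, event))
```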

This has real-world consequences for many use cases.

- Topical Content: If you're writing a blog post about the latest software release or market trend, 4.1-mini will deliver more accurate and relevant content right out of the box.

- Technical Support: For questions about new programming frameworks, recent API updates, or newly discovered vulnerabilities, 4.1-mini's answers will be far more reliable.

- Market Research: When you need to analyze recent consumer behavior or news cycles, having a model with a more current understanding of the world makes a huge difference.

If you're juggling different models for various projects, knowing these architectural details is key. For those using tools like LM Studio, our guide on how to select a model in LM Studio can help you match the right model to the right job. Ultimately, the best choice always comes down to aligning the model's technical strengths with the specific demands of your task.

Analyzing Speed: Latency and Throughput

When you're building an application that people will actually use, speed isn't a luxury—it's everything. That tiny delay between a user's question and the AI's answer, what we call latency, can make or break the entire experience. This is where the difference between 4o-mini and 4.1-mini really hits home.

The upgrade to 4.1-mini isn't just a small step up; it's a massive leap in performance. For anything that needs to feel like a real-time conversation—think live customer service bots, interactive coding assistants, or on-the-fly data analysis—this improvement is critical. A slow model creates awkward pauses that kill the flow and just flat-out frustrate users.

The Near-Instant Feel of 4.1-mini

The performance numbers tell a clear story. According to OpenAI's launch announcement, 4.1-mini cuts latency by nearly 50% compared to GPT-4o while matching or exceeding it on many intelligence evals. In practice, especially for shorter queries, responses feel almost immediate. This is a big deal for ChatGPT, where 4.1-mini replaced 4o-mini as the fast option and serves as the fallback for free users after they hit their GPT-4o usage limits.

This isn't just about shaving milliseconds off a benchmark. It fundamentally changes how people interact with the AI. When a response comes back as quickly as a human would reply, the AI starts to feel less like a clunky tool and more like a genuine partner.

The difference between a 400ms response and an 800ms response is huge in practice. One feels like a conversation, the other feels like a query. For any product that faces a user, this speed is a competitive edge that directly boosts retention and how people feel about its quality.

This speed boost gives developers the freedom to build more responsive and engaging products without the AI becoming a frustrating bottleneck.
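Latency varies with prompt length, output length, region, and load, so it's worth measuring on your own traffic. Below is a minimal, SDK-agnostic timing harness. `call` is any zero-argument function you supply (for example, a wrapper around your API request). The median/p95 summary is one reasonable choice, not a standard.

```python
import math
import statistics
import time
from typing import Callable, Dict


def measure_latency(call: Callable[[], object], runs: int = 20) -> Dict[str, float]:
    """Time `call` repeatedly and report median and p95 latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    samples.sort()
    # Nearest-rank p95: the smallest sample at or above the 95th percentile.
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {"median_ms": statistics.median(samples), "p95_ms": samples[p95_index]}
```

Wrap each model in its own callable and compare the two summaries. If you stream responses, time-to-first-token matters more to users than total time, and this sketch does not capture it.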

Throughput and Scaling Up

Beyond the speed of a single response, we have to talk about throughput—how many requests the model can juggle at once. This is what matters when your application starts to take off. A model with high throughput can handle sudden traffic spikes without slowing down for everyone, which is the key to scaling successfully.

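While 4o-mini was no slouch, lower per-request latency helps here too: when each request finishes sooner, the same number of concurrent connections can serve more requests per second. For API users, your account's rate limits on requests and tokens per minute also cap how much you can push through. This translates directly to bottom-line benefits:

- Lower Infrastructure Costs: You can serve more users with the same application servers and connection pools, which keeps your operational costs down.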

- A Consistent Experience: The AI stays snappy and reliable for every user, even during your busiest hours.

- More Use Cases: High-traffic platforms that might have buckled under the load with 4o-mini are now fair game.

For any developer trying to get the most out of their system, these numbers are crucial. It's not just about raw latency; mastering AI input-output throughput is what really lets you squeeze every bit of efficiency out of the model you deploy.
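The link between latency and throughput follows Little's law. In steady state, requests in flight = throughput × latency, so with a fixed number of concurrent workers, halving latency doubles the requests you can complete per second. The sketch below applies that relationship and caps the result at a requests-per-minute limit. The 30,000 RPM figure is hypothetical, and real accounts also have token-per-minute limits that this sketch ignores.

```python
def max_requests_per_second(concurrency: int, latency_s: float) -> float:
    """Little's law rearranged: throughput = requests in flight / latency."""
    return concurrency / latency_s


def effective_rps(concurrency: int, latency_s: float, rpm_limit: int) -> float:
    """Throughput is capped by whichever is lower: Little's law or the rate limit."""
    return min(max_requests_per_second(concurrency, latency_s), rpm_limit / 60)


if __name__ == "__main__":
    # Illustrative numbers only: 50 concurrent workers, 500 ms vs 250 ms per request.
    print(effective_rps(50, 0.5, rpm_limit=30_000))   # 100.0
    print(effective_rps(50, 0.25, rpm_limit=30_000))  # 200.0
```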

Ultimately, the speed gains in 4.1-mini are more than just an incremental update. They open the door to a whole new class of real-time, scalable AI applications that just weren't practical with the older, slower "mini" models.

Comparing Cost Efficiency and Pricing Models

When you're building a project, performance is only half the story. The budget is often the deciding factor, and this is where the choice between 4o-mini and 4.1-mini becomes crystal clear. Both models are built for efficiency, but 4.1-mini represents a huge leap forward in making top-tier AI accessible. We're not talking about a small discount here; it's a game-changing price reduction that redefines what’s possible for high-volume applications.

With its new pricing, 4.1-mini opens the door for startups and large companies to scale their AI tools without seeing their costs spiral out of control. This frees up developers to think bigger, creating more complex, token-heavy applications that would have been too expensive to run on previous models. Getting a handle on these cost differences is essential for budgeting accurately and getting the most bang for your buck.

Analyzing the Total Cost of Ownership

The price-per-token is just the tip of the iceberg. To really understand the costs, you have to look at the total cost of ownership (TCO). This includes not just your API bills but also the developer hours spent tweaking prompts and the infrastructure needed to get the job done right. Here’s a key insight: because 4.1-mini is smarter and follows instructions more precisely, you'll likely spend less time on prompt engineering and need fewer retries to get the output you want.

This becomes especially compelling when you consider OpenAI's launch figures: 4.1-mini costs 83% less than GPT-4o, the larger model it matches on many benchmarks. That combination of lower latency and drastically reduced cost makes it a no-brainer for any application handling a high volume of requests where speed and budget are top priorities.

For a deeper dive into the pricing of different OpenAI models, check out our guide on the ChatGPT 4o pricing structure. It’s also wise to get familiar with broader AI cost reduction strategies to maximize your project’s financial efficiency.

The real economic advantage of 4.1-mini isn't just its lower token price. It's the combined effect of reduced latency, higher intelligence, and lower cost, which creates a powerful multiplier for ROI in any scaled application.
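The retry effect on TCO can be put into numbers. If each attempt independently yields a usable answer with probability p, the expected number of attempts is 1/p. The expected API cost per usable answer is therefore the per-call cost divided by p. The prices and success rates below are placeholders, not published figures; measure your own success rates and substitute current rates from OpenAI's pricing page.

```python
def cost_per_call(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> float:
    """API cost of one call, with prices in dollars per million tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def expected_cost_per_success(call_cost: float, success_rate: float) -> float:
    """Attempts until the first success follow a geometric distribution with mean 1/p."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return call_cost / success_rate


if __name__ == "__main__":
    # Placeholder prices and success rates, for illustration only.
    call = cost_per_call(2_000, 500, input_price_per_m=1.0, output_price_per_m=4.0)
    for p in (0.80, 0.95):
        print(p, expected_cost_per_success(call, p))
```

This covers API spend only. Developer time spent on prompt tweaking is a separate line item you would add on top.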

Practical Cost Modeling Scenarios

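Let's move from theory to practice. To see how these pricing differences might shake out in the real world, I've put together a few cost projections for common applications. These projections assume a single blended rate of $1.50 per million tokens for 4o-mini and $0.30 per million for 4.1-mini. OpenAI prices input and output tokens separately, so rerun the numbers with current published rates and your own input/output mix before budgeting. This table shows what you might expect to spend monthly on each model under those assumptions.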

Cost Modeling Scenarios: 4o-mini vs 4.1-mini

| Use Case Scenario | Estimated Monthly Tokens (Input + Output) | Projected Monthly Cost (4o-mini) | Projected Monthly Cost (4.1-mini) | Monthly Savings with 4.1-mini |
|---|---|---|---|---|
| High-Volume Customer Service Bot | 100 Million | $150 | $30 | $120 (80%) |
| Content Generation Engine | 250 Million | $375 | $75 | $300 (80%) |
| Internal Data Analysis Tool | 50 Million | $75 | $15 | $60 (80%) |

As the table makes plain, the savings are substantial and consistent, no matter the scale. For a startup running a customer service bot, an extra $120 a month can be funneled directly back into growth. For a larger company generating tons of content, saving $300 or more every month quickly adds up to thousands in annual savings.

When it comes to your bottom line, 4.1-mini is the clear financial winner.
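To rerun the cost projections with your own volumes, here is a minimal sketch of the arithmetic behind the table. The blended rates are simply what the table implies ($150 and $30 per 100 million tokens). They are projection inputs, not official prices, so swap in current published input and output rates and your real input/output mix.

```python
# Blended $/1M-token rates implied by the table ($150 and $30 per 100M tokens).
# These are the article's projection inputs, not official prices.
TABLE_RATES = {"gpt-4o-mini": 1.50, "gpt-4.1-mini": 0.30}

SCENARIOS = {
    "High-Volume Customer Service Bot": 100_000_000,
    "Content Generation Engine": 250_000_000,
    "Internal Data Analysis Tool": 50_000_000,
}


def blended_rate(input_share: float, input_rate: float, output_rate: float) -> float:
    """Combine separate input/output $/1M rates using the share of tokens that are input."""
    return input_share * input_rate + (1 - input_share) * output_rate


def monthly_cost(tokens: int, rate_per_million: float) -> float:
    """Monthly cost in dollars, rounded to cents."""
    return round(tokens / 1_000_000 * rate_per_million, 2)


if __name__ == "__main__":
    for name, tokens in SCENARIOS.items():
        old = monthly_cost(tokens, TABLE_RATES["gpt-4o-mini"])
        new = monthly_cost(tokens, TABLE_RATES["gpt-4.1-mini"])
        print(f"{name}: ${old:,.2f} vs ${new:,.2f} (saves ${old - new:,.2f})")
```

Because output tokens are usually priced higher than input tokens, an output-heavy workload can cost noticeably more than an input-heavy one at the same total token count. That is why `blended_rate` takes the input share as a parameter.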

Choosing the Right Model for Your Application

Alright, let's get down to brass tacks. We've looked at the specs, but now it's time to connect the dots and figure out which of these models actually makes sense for your project. While the numbers show 4.1-mini is the clear front-runner on paper, knowing why and where it shines is what turns a technical spec into a smart business decision.

Honestly, for almost any new application you're building today, 4.1-mini isn't just a better option; it's the strategic one. You get intelligence that's nearly on par with flagship models. Per OpenAI, you also get roughly half the latency and 83% lower cost than GPT-4o. That's a business case that's tough to argue with.

Let's break down where each model fits best.

When to Use GPT-4.1-mini

If your application is user-facing and demands both speed and smarts, 4.1-mini is your go-to. It truly excels in environments where performance directly shapes the user experience and makes your operations more efficient. If your project fits one of these descriptions, this is the model for you.

1. Real-Time Customer Support Chatbots

When a user is waiting for an answer, every millisecond counts. 4.1-mini’s speed is a game-changer here, cutting response times from a noticeable delay to something that feels instantaneous. This shift completely transforms the user experience for support bots, real-time code assistants, or interactive data tools. In practical terms, this means you can support more concurrent users without the lag, keeping everyone happy. You can get more details on this performance leap on f22labs.com.

2. Complex Document Analysis and Summarization

The 1 million token context window is genuinely transformative. Forget chopping up documents; this model can digest and reason over massive amounts of text in a single go. This is a huge deal for specific fields:

- Legal Tech: Imagine feeding it an entire case file to pull out key arguments or identify risks in a long contract.

- Financial Analysis: It can summarize dense quarterly earnings reports or market analyses in seconds.

- Academic Research: You can have it process a stack of research papers at once to synthesize findings and spot emerging trends.

3. Scalable Content Creation Engines

If your business is pumping out content, cost is always a factor. 4.1-mini’s lower price point finally makes high-volume generation affordable without compromising too much on quality. This makes it perfect for:

- Drafting blog posts and brainstorming ideas automatically.

- Writing thousands of unique product descriptions for e-commerce sites.

- Creating personalized email campaigns at scale.

Choosing 4.1-mini is really an investment in a better user experience and future scalability. It can handle complex, real-time work at a fraction of the cost, making it the default choice for any new project with an eye toward growth.

When GPT-4o-mini Might Still Suffice

While 4.1-mini is the clear winner for new development, there are a few situations where sticking with 4o-mini might be a pragmatic, if temporary, choice.

1. Legacy Systems with Minimal Maintenance

Do you have an old app running on 4o-mini that just works? If it’s stable, users aren't complaining, and you have no plans to update it, then the effort to migrate might not be worth it. This often applies to low-traffic internal tools where a bit of latency isn't a deal-breaker.

2. Simple, Non-Critical Internal Scripts

For basic backend tasks—like sorting support tickets or doing simple text classification—4o-mini is often good enough. The extra horsepower and speed of 4.1-mini would simply be overkill for these kinds of simple, transactional jobs.

3. Prototyping and Initial Concept Testing

When you're in the early days of just trying to see if an idea has legs, 4o-mini can be a quick way to get a proof-of-concept running, especially if your dev environment is already set up for it. Just be sure to plan an upgrade to 4.1-mini before you go live. You don't want to build your foundation on a model that's already a step behind.

At the end of the day, the 4o-mini vs 4.1-mini decision is about looking ahead. For any application that will grow, interact with users, or chew on complex data, the path forward is clearly with 4.1-mini.
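The rules of thumb in this section can be written down as a tiny decision helper. This is a hypothetical sketch that encodes only the criteria discussed above: the 128K window, legacy stability, and simple internal tasks. The `Workload` fields are illustrative names, not part of any API.

```python
from dataclasses import dataclass

GPT_4O_MINI_CONTEXT = 128_000  # tokens, as quoted in this article


@dataclass
class Workload:
    prompt_tokens: int                  # estimated input size
    max_output_tokens: int              # output budget you plan to request
    legacy_and_stable: bool = False     # existing app, no planned changes
    simple_internal_task: bool = False  # e.g. ticket sorting, basic classification


def recommend_model(w: Workload) -> str:
    """Encode this article's rules of thumb; not an official selection policy."""
    if w.prompt_tokens + w.max_output_tokens > GPT_4O_MINI_CONTEXT:
        return "gpt-4.1-mini"  # only the larger window fits
    if w.legacy_and_stable or w.simple_internal_task:
        return "gpt-4o-mini"   # migration may not be worth the effort yet
    return "gpt-4.1-mini"      # default for new, user-facing, or growing work
```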

Frequently Asked Questions About 4o-mini and 4.1-mini

When you're weighing two similar AI models, a lot of practical questions pop up. It's not just about benchmarks; it's about how these models fit into your actual workflow. Let's tackle some of the most common questions about 4o-mini and 4.1-mini to help you make a clear-headed decision.

Think of this as the final check-in before you commit to a model, whether you're migrating an existing app or starting fresh.

Is Migrating from 4o-mini to 4.1-mini Difficult?

Good news: the switch is about as painless as it gets. For most projects, the only thing you'll need to do is change the model name in your API call. Both models use the same basic API structure, so you can breathe a sigh of relief—no major code refactoring is needed.
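Because only the model name changes, a common pattern is to read it from configuration so the switch (or a rollback) needs no code change. This sketch assumes the official `openai` Python package and its Chat Completions interface. The `OPENAI_MODEL` environment variable and the `temperature` value are illustrative choices, not requirements.

```python
import os
from typing import Optional

# Illustrative default; the environment variable name is a hypothetical choice.
DEFAULT_MODEL = "gpt-4.1-mini"


def model_name() -> str:
    """Model ID from configuration, falling back to the default."""
    return os.environ.get("OPENAI_MODEL", DEFAULT_MODEL)


def build_chat_request(prompt: str, model: Optional[str] = None) -> dict:
    """Keyword arguments for a Chat Completions call; only `model` differs between the two minis."""
    return {
        "model": model or model_name(),
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,  # illustrative value
    }


def send(prompt: str) -> str:
    """Send the request; requires the `openai` package and OPENAI_API_KEY to be set."""
    from openai import OpenAI  # imported lazily so the helpers above work without it

    client = OpenAI()
    response = client.chat.completions.create(**build_chat_request(prompt))
    return response.choices[0].message.content
```

With this pattern, setting OPENAI_MODEL=gpt-4o-mini rolls back to the old model without a deploy.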

That said, you should absolutely run some regression tests. 4.1-mini is a smarter model and follows instructions more precisely, which means its outputs will often be better but potentially different. A quick round of testing will confirm that these higher-quality responses don't accidentally break anything downstream in your application.

The migration path is intentionally straightforward. The main lift isn't in rewriting code but in verifying that the superior outputs from 4.1-mini integrate smoothly with your application's logic and user expectations.
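A regression pass can be as small as replaying representative prompts through the new model and checking that downstream parsing still works. The sketch below assumes, hypothetically, that your application expects a JSON object with certain keys. `generate` is whatever function you use to call the model.

```python
import json
from typing import Callable, Dict, Iterable, List


def check_json_output(text: str, required_keys: Iterable[str]) -> List[str]:
    """Return a list of problems; an empty list means downstream parsing should still work."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not an object"]
    return [f"missing key: {k}" for k in required_keys if k not in data]


def regression_report(prompts: List[str], generate: Callable[[str], str],
                      required_keys: Iterable[str]) -> Dict[str, List[str]]:
    """Run each prompt through `generate` (e.g. a wrapper around the new model) and collect failures."""
    keys = list(required_keys)
    report: Dict[str, List[str]] = {}
    for prompt in prompts:
        problems = check_json_output(generate(prompt), keys)
        if problems:
            report[prompt] = problems
    return report
```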

Are There Any Scenarios Where 4o-mini Still Performs Better?

This question comes up a lot. On intelligence and context handling, the answer is no: 4.1-mini is the clear winner there and is simply the more capable model. The one metric to check against your own workload is raw per-token price, so compare both models on OpenAI's current pricing page using your real input/output mix.

The only real reason to stick with 4o-mini is if you're maintaining a stable legacy system that you have no plans to touch. For any new project or an application you're actively improving, the performance gains and cost savings from 4.1-mini make the upgrade a no-brainer.

How Does API Compatibility Compare Between Models?

The API compatibility is rock-solid. You're looking at the same endpoint structure, request formats, and authentication you're already used to. This consistency is a huge win because it means you won't have to relearn anything or make complicated changes to your integration code.

Here’s a quick breakdown of what stays the same:

- Model Name: The only real change is swapping the old model ID for the new one (`gpt-4o-mini` becomes `gpt-4.1-mini`).

- Parameter Support: All the usual parameters like `temperature`, `max_tokens`, and `top_p` work the same way in both models.

- Response Structure: The JSON response format is identical, so your existing parsing logic should work without any adjustments.

This high level of compatibility makes it incredibly easy to A/B test the models or just flip the switch for a full migration.
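For an A/B test, you usually want each user pinned to one model so their experience stays consistent. Here is a minimal sketch that assumes you have a stable user ID. It hashes the ID into one of 10,000 buckets and sends a configurable share of users to 4.1-mini. The function name and the 10% default are illustrative.

```python
import hashlib

BUCKETS = 10_000


def assign_model(user_id: str, treatment_share: float = 0.1,
                 control: str = "gpt-4o-mini", treatment: str = "gpt-4.1-mini") -> str:
    """Deterministically bucket a user so they always see the same model."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % BUCKETS  # stable bucket in [0, BUCKETS)
    return treatment if bucket < treatment_share * BUCKETS else control
```

Hashing instead of random assignment means you can recompute any user's group later without storing it, and raising `treatment_share` gradually turns the test into a staged rollout.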

Ready to build with smarter, more efficient prompts? At Promptaa, we provide a comprehensive library to create, manage, and discover the best prompts for any AI model, including the latest like 4.1-mini. Organize your prompts and unlock better results today by exploring our platform.